- Why do you need to scrape Twitter?

- What data can you extract from Twitter?

- How to Scrape Twitter with Infatica API

- Features of Infatica Twitter Scraper API

- Twitter Scraping with Python

- What about the Twitter API?

- Is it Legal to Scrape Twitter?

- What is the Best Way to Scrape Twitter?

- Frequently Asked Questions

With data collection tools becoming ever so widespread, more and more companies are trying their hands at Twitter scraping. In this article, we’re providing a step-by-step guide you can use to scrape Twitter profiles and other pages – and an overview of Twitter scraping legality.

Why do you need to scrape Twitter?

As one of the world’s most popular websites, Twitter holds vast amounts of public conversation data: If you analyze it right, you’ll gain valuable insight into opinions, trends, and sentiments. Here are the most common Twitter scraping use cases:

Consumer research. People use social media to talk to each other, but they also use platforms like Twitter to interact with companies. This makes Twitter a rich source of consumer behavior data, with millions of tweets and trending topics that can be analyzed for social listening and personalized engagement.

Brand monitoring. By extension, Twitter may also be the go-to place for your existing and prospective customers to interact with your brand: If an active user suffers from fraud or misinformation or notices a copyright violation, they’re likely to take it to Twitter – in this case, you would do well to monitor mentions of your brand and related hashtags.

Financial monitoring. For financial institutions, Twitter is the source of up-and-coming startups, public opinion trends, market fluctuations, political updates, and more – these are all contributing factors that can quickly affect financial indicators like a currency exchange rate.

What data can you extract from Twitter?

Before beginning to scrape Twitter profiles and other data fields, let’s take a closer look at which types of data you can actually collect. The first category is profiles: You can extract the public information they contain, including user bio, follower and tweet count, and more.

Naturally, tweets are another scrapable data type: They typically contain text and visual media (photos and videos), with additional scraping filters like retweeted tweets or tweets with URLs.

Finally, keywords and hashtags are essentially curated groups of tweets, so they can be scraped as well. Additional filters include like count and date to single out specific tweets.

How to Scrape Twitter with Infatica API

Infatica Scraper API is a powerful scraping tool that enables data collection from many websites – Twitter, Amazon, Google, Facebook, and many more. Let’s see how we can use Scraper API to download Twitter pages at scale:

Step 1. Sign in to your Infatica account

Your Infatica account has several useful features (traffic dashboard, support area, how-to videos, etc.). It also has your unique user_key value, which we’ll need to use the API – you can find it in your personal account’s billing area. The user_key value is a 20-symbol combination of numbers and lower- and uppercase letters, e.g. KPCLjaGFu3pax7PYwWd3.
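Before sending anything, it can help to check that the key you copied has the expected shape. The snippet below is a minimal sketch that assumes the format described above (exactly 20 letters and digits); `looks_like_user_key` is a hypothetical helper name, not part of the Infatica API.

```python
import re

# Assumed format, taken from the description above: exactly 20 ASCII letters or digits.
USER_KEY_PATTERN = re.compile(r"[A-Za-z0-9]{20}")


def looks_like_user_key(value: str) -> bool:
    """Return True if value has the 20-character alphanumeric shape."""
    return USER_KEY_PATTERN.fullmatch(value) is not None


print(looks_like_user_key("KPCLjaGFu3pax7PYwWd3"))
print(looks_like_user_key("too-short"))
```

A check like this only catches copy-paste mistakes; whether the key is actually valid is decided by the API.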

Step 2. Send a JSON request

This request will contain all necessary data attributes. Here’s a sample request:

{
  "user_key":"KPCLjaGFu3pax7PYwWd3",
  "URLS":[
    {
      "URL":"https://twitter.com/DeepLoNLP/status/1547682092422557697",
      "Headers":{
        "Connection":"keep-alive",
        "User-Agent":"Mozilla\/5.0 (Macintosh; Intel Mac OS X 10.15; rv:71.0) Gecko/20100101 Firefox/71.0",
        "Upgrade-Insecure-Requests":"1"
      },
      "userId":"ID-0"
    }
  ]
}

Here are the attributes you need to specify in your request:

- user_key: Hash key for API interactions; available in the personal account’s billing area.

- URLS: Array containing all planned downloads.

- URL: Download link.

- Headers: List of headers that are sent within the request; additional headers (e.g. cookie, accept, and more) are also accepted. Required headers are: Connection, User-Agent, Upgrade-Insecure-Requests.

- userId: Unique identifier within a single request; returning responses contain the userId attribute.
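The attributes above can be assembled programmatically. The following is a minimal sketch, not an official client: `build_payload` and `REQUIRED_HEADERS` are hypothetical names, the header values are copied from the sample request, and the `ID-<n>` numbering simply mirrors the examples in this article. The API endpoint is not given here, so the sketch stops at building the request body.

```python
import json

# Headers the article lists as required; values copied from the sample request.
REQUIRED_HEADERS = {
    "Connection": "keep-alive",
    "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:71.0) "
                  "Gecko/20100101 Firefox/71.0",
    "Upgrade-Insecure-Requests": "1",
}


def build_payload(user_key, urls, extra_headers=None):
    """Build the request body: one URLS entry per link, with userId ID-0, ID-1, ..."""
    headers = {**REQUIRED_HEADERS, **(extra_headers or {})}
    return {
        "user_key": user_key,
        "URLS": [
            {"URL": url, "Headers": dict(headers), "userId": f"ID-{i}"}
            for i, url in enumerate(urls)
        ],
    }


payload = build_payload(
    "KPCLjaGFu3pax7PYwWd3",
    ["https://twitter.com/DeepLoNLP/status/1547682092422557697"],
)
print(json.dumps(payload, indent=2))
```

Because every entry carries its own userId, the same value can later be used to match each response entry back to the URL it came from.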

Here’s a sample request containing 4 Twitter URLs:

{
  "user_key":"KPCLjaGFu3pax7PYwWd3",
  "URLS":[
    {
      "URL":"https://twitter.com/DeepLoNLP/status/1547682092422557697",
      "Headers":{"Connection":"keep-alive","User-Agent":"Mozilla\/5.0 (Macintosh; Intel Mac OS X 10.15; rv:71.0) Gecko/20100101 Firefox/71.0","Upgrade-Insecure-Requests":"1"},
      "userId":"ID-0"
    },
    {
      "URL":"https://twitter.com/gneubig/status/1547618301622112256",
      "Headers":{"Connection":"keep-alive","User-Agent":"Mozilla\/5.0 (Macintosh; Intel Mac OS X 10.15; rv:71.0) Gecko/20100101 Firefox/71.0","Upgrade-Insecure-Requests":"1"},
      "userId":"ID-1"
    },
    {
      "URL":"https://twitter.com/boknilev/status/1547599525434363907",
      "Headers":{"Connection":"keep-alive","User-Agent":"Mozilla\/5.0 (Macintosh; Intel Mac OS X 10.15; rv:71.0) Gecko/20100101 Firefox/71.0","Upgrade-Insecure-Requests":"1"},
      "userId":"ID-2"
    },
    {
      "URL":"https://twitter.com/naaclmeeting/status/1511927414296772611",
      "Headers":{"Connection":"keep-alive","User-Agent":"Mozilla\/5.0 (Macintosh; Intel Mac OS X 10.15; rv:71.0) Gecko/20100101 Firefox/71.0","Upgrade-Insecure-Requests":"1"},
      "userId":"ID-3"
    }
  ]
}

Step 3. Get the response and download the files

When finished, the API sends a JSON response with one entry per userId (four in our case). Each entry has two attributes: status (HTTP status) and link (file download link). Follow the links to download the corresponding contents.

{
  "ID-0":{"status":996,"link":""},
  "ID-1":{"status":200,"link":"https://www.domain.com/files/product2.txt"},
  "ID-2":{"status":200,"link":"https://www.domain.com/files/product3.txt"},
  "ID-3":{"status":null,"link":""}
}

Please note that the server stores each file for 20 minutes. The optimal batch size is under 1,000 URLs per request; processing 1,000 URLs may take 1-5 minutes.
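Two practical consequences follow from the response format and the batch-size advice: long URL lists should be split into batches, and each response should be sorted into usable and unusable entries. The sketch below is one way to do both. `chunk` and `split_response` are hypothetical helper names, and treating only entries with status 200 and a non-empty link as downloadable is an assumption, since the meaning of other status values (such as 996 or null) is not documented here.

```python
import json


def chunk(urls, size=999):
    """Yield consecutive slices of urls, each with at most `size` items."""
    for start in range(0, len(urls), size):
        yield urls[start:start + size]


def split_response(response):
    """Separate entries with status 200 and a non-empty link from all others."""
    ready, failed = {}, {}
    for user_id, entry in response.items():
        if entry.get("status") == 200 and entry.get("link"):
            ready[user_id] = entry["link"]
        else:
            # Assumption: anything else is treated as "not downloadable".
            failed[user_id] = entry.get("status")
    return ready, failed


# The sample response from this article.
sample = json.loads("""
{
  "ID-0": {"status": 996, "link": ""},
  "ID-1": {"status": 200, "link": "https://www.domain.com/files/product2.txt"},
  "ID-2": {"status": 200, "link": "https://www.domain.com/files/product3.txt"},
  "ID-3": {"status": null, "link": ""}
}
""")

ready, failed = split_response(sample)
print(sorted(ready))
print(failed)
```

Since files are kept for only 20 minutes, it makes sense to download the links in `ready` right after each batch returns rather than after all batches have finished.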

Features of Infatica Twitter Scraper API

Ever since Twitter rose to popularity, the developer community has been creating all sorts of tools used to scrape Twitter profiles. We believe that Infatica Scraper API has the most to offer: While other companies offer scrapers that require some tinkering, we provide a complete data collection suite – and quickly handle all technical problems.

Millions of proxies: Scraper utilizes a pool of 35+ million datacenter and residential IP addresses across dozens of global ISPs, supporting real devices, smart retries and IP rotation.

100+ global locations: Choose from 100+ global locations to send your web scraping API requests – or simply use random geo-targets from a set of major cities all across the globe.

Robust infrastructure: Make your projects scalable and enjoy advanced features like concurrent API requests, CAPTCHA solving, browser support and JavaScript rendering.

Flexible pricing: Infatica Scraper offers a wide set of flexible pricing plans for small-, medium-, and large-scale projects, starting at just $25 per month.

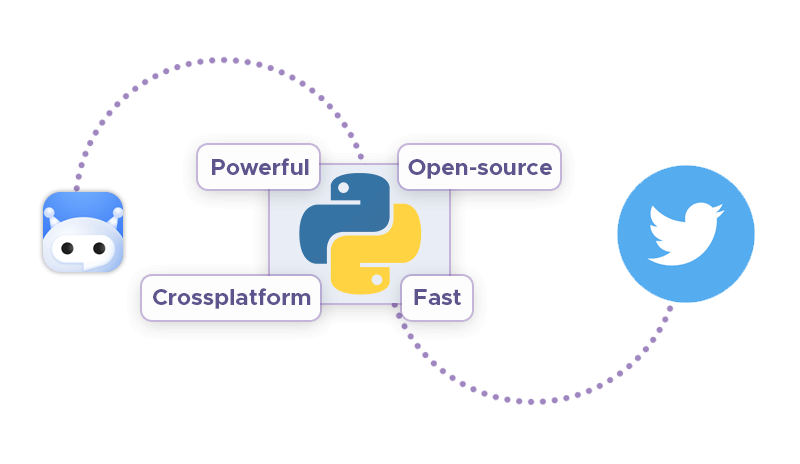

Twitter Scraping with Python

Another method of Twitter scraping involves Python, the most popular programming language for data collection tasks. We can use a scraper written in Python to create a custom data collection pipeline – let’s see how.

❔ Further reading: We have an up-to-date overview of Python web crawlers on our blog – or you can watch its video version on YouTube.

🍲 Further reading: Using Python's BeautifulSoup to scrape images

🎭 Further reading: Using Python's Puppeteer to automate data collection

Scraping user’s tweets

We’ll use snscrape, a Python library for scraping Twitter. Unlike solutions built on Twitter’s own API, snscrape gives us more freedom by forgoing Twitter authentication and login cookies. Keep in mind, however, that this library is still in development – refer to its changelog to see its new features. Let’s install it via this command:

pip3 install git+https://github.com/JustAnotherArchivist/snscrape.git

Let’s import snscrape's Twitter module and pandas, a Python data manipulation library:

import snscrape.modules.twitter as sntwitter
import pandas as pd

Then, we use snscrape's TwitterSearchScraper class to specify the user (in our case, stanfordnlp) and its get_items() method to iterate over that user's tweets. Additionally, we wrap the iterator in enumerate() and stop after the user’s 100 latest tweets – otherwise, snscrape will fetch all of their tweets:

# Create a list to collect the attributes of each tweet
attributes_container = []

# Use TwitterSearchScraper to scrape data and append tweets to the list
for i, tweet in enumerate(sntwitter.TwitterSearchScraper('from:stanfordnlp').get_items()):
    # Stop once 100 tweets have been collected
    if i >= 100:
        break
    attributes_container.append([tweet.date, tweet.likeCount, tweet.sourceLabel, tweet.content])

Finally, we use pandas to create a dataframe with scraped tweets:

# Create a dataframe from the tweets list above
tweets_df = pd.DataFrame(attributes_container, columns=["Date Created", "Number of Likes", "Source of Tweet", "Tweets"])

This is the final code snippet that will extract the given user’s tweets. After running it, tweets_df holds a pandas dataframe listing all the items that we scraped:

import snscrape.modules.twitter as sntwitter
import pandas as pd

# Create a list to collect the attributes of each tweet
attributes_container = []

# Use TwitterSearchScraper to scrape data and append tweets to the list
for i, tweet in enumerate(sntwitter.TwitterSearchScraper('from:stanfordnlp').get_items()):
    # Stop once 100 tweets have been collected
    if i >= 100:
        break
    attributes_container.append([tweet.date, tweet.likeCount, tweet.sourceLabel, tweet.content])

# Create a dataframe from the tweets list above
tweets_df = pd.DataFrame(attributes_container, columns=["Date Created", "Number of Likes", "Source of Tweet", "Tweets"])
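The enumerate-and-break pattern above can also be written with `itertools.islice`, which makes the cap explicit and avoids off-by-one mistakes. The sketch below swaps in that technique and runs without network access: `fake_tweets` is a hypothetical stand-in that yields objects with the same attribute names used above, and `first_n_rows` is an illustrative helper. With the real scraper, the iterator passed in would be the result of `get_items()`.

```python
from itertools import islice
from types import SimpleNamespace


def first_n_rows(tweets, n):
    """Take at most n tweets from an iterator and flatten each into a row."""
    return [
        [t.date, t.likeCount, t.sourceLabel, t.content]
        for t in islice(tweets, n)
    ]


def fake_tweets():
    """Hypothetical stand-in for a scraper iterator; it never runs out."""
    i = 0
    while True:
        yield SimpleNamespace(
            date=f"2022-07-{i % 28 + 1:02d}",
            likeCount=i,
            sourceLabel="Twitter Web App",
            content=f"tweet {i}",
        )
        i += 1


rows = first_n_rows(fake_tweets(), 100)
print(len(rows))
```

The resulting list of rows has the same shape as attributes_container, so it can be passed to pd.DataFrame with the same column names.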

Scraping tweets from text search

To collect historical data, we can modify the code snippet above and specify the date range via the since: and until: parameters:

import snscrape.modules.twitter as sntwitter
import pandas as pd

# Create a list to collect tweet data
attributes_container = []

# Use TwitterSearchScraper to scrape data and append tweets to the list
for i, tweet in enumerate(sntwitter.TwitterSearchScraper('natural language processing since:2021-07-05 until:2022-07-06').get_items()):
    # Stop once 150 tweets have been collected
    if i >= 150:
        break
    attributes_container.append([tweet.user.username, tweet.date, tweet.likeCount, tweet.sourceLabel, tweet.content])

# Create a dataframe from the list
tweets_df = pd.DataFrame(attributes_container, columns=["User", "Date Created", "Number of Likes", "Source of Tweet", "Tweet"])
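The search string in the snippet above is plain text with since: and until: filters appended, so it can be built from date objects instead of typed by hand. This is a small sketch under that assumption; `build_search_query` is a hypothetical helper, not part of snscrape.

```python
from datetime import date


def build_search_query(text, since=None, until=None):
    """Join search text with optional since:/until: filters (YYYY-MM-DD)."""
    parts = [text]
    if since is not None:
        parts.append(f"since:{since.isoformat()}")
    if until is not None:
        parts.append(f"until:{until.isoformat()}")
    return " ".join(parts)


query = build_search_query(
    "natural language processing", date(2021, 7, 5), date(2022, 7, 6)
)
print(query)  # natural language processing since:2021-07-05 until:2022-07-06
```

The returned string can be passed to TwitterSearchScraper in place of the hard-coded query.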

Additionally, let’s add this line to convert the pandas dataframe to a CSV spreadsheet:

tweets_df.to_csv("Top Posts.csv", index=True)

What about the Twitter API?

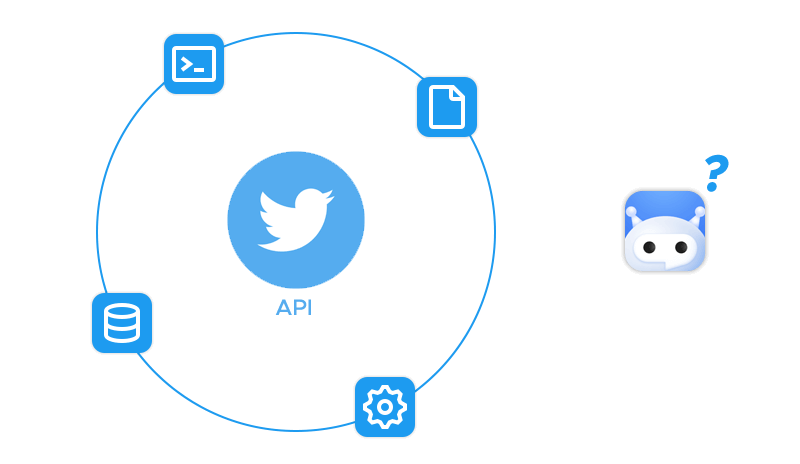

Tech companies understand that user data is their most important asset. Scraping Amazon or Facebook is tricky because of their limited APIs: These companies want to obstruct data collection as much as possible. Other companies are more liberal: Scraping Reddit or Google is easier.

Twitter falls in the latter category: Its API is well-documented and does offer some key features for free (e.g. retrieving up to 500k tweets per month), but it also imposes severe limitations on all of its API tiers: only one project and only one app per account, no access to advanced filter operators and archive, and more. Because of these limitations, we often have to turn to unofficial Twitter scraping APIs when we want to build a more powerful bot.

Is it Legal to Scrape Twitter?

Please note that this section is not legal advice – it’s an overview of the latest legal practice related to this topic. We encourage you to consult law professionals to review each web scraping project on a case-by-case basis.

🌍 Further reading: See our recent overview of web scraping legality for a detailed legal practice analysis regarding web scraping.

Here’s the quick answer: Yes, Twitter scraping is legal – but this answer comes with some important footnotes: Twitter data may be managed by several region-, country-, and state-level regulations. These include:

- General Data Protection Regulation (GDPR) and

- Digital Single Market Directive (DSM) for Europe,

- California Consumer Privacy Act (CCPA) for California in particular,

- Computer Fraud and Abuse Act (CFAA) and

- The fair use doctrine for the US as a whole,

- And more.

This list shows that European users’ data can be treated differently from its American counterpart. Despite their differences, these regulations generally agree that Twitter scraping is legal if collected data is transformed in a meaningful way, as per the fair use doctrine. A good example is creating a product that generates new value for users, like using Twitter data for sentiment analysis. Conversely, scraping Twitter and republishing copyright-protected data on another website violates all sorts of intellectual property laws – in this case, Twitter may be in the right to sue.

What is the Best Way to Scrape Twitter?

In addition to being a powerful data collection suite, Infatica Scraper API also manages your proxy configuration. This is a crucial component of any web scraping pipeline: Without proxies, your crawler is running the risk of getting detected by Twitter’s anti-bot systems – and getting your IP addresses blocked.

With some programming skills under your belt, you can try building a custom Twitter scraping bot using Python: Although it may require some tinkering on your part, it offers good fine-tuning capabilities. Last but not least, no-code visual scrapers (in the form of browser extensions and dedicated apps) can be a good alternative, making up for somewhat limited functionality with ease of use.

Frequently Asked Questions

since:2019-05-01 until:2019-06-01.

mediascrape) that will perform the data extraction loop. The mediascrape library, for instance, can download all images from the given user.

snscrape or Tweepy) that will communicate with Twitter and act as your own API. Keep in mind that this method has both upsides and downsides: You’ll have more freedom, but the scraper may break when Twitter updates its page structure.

twitter-scrape-followers. Per its documentation, you’ll need to use login cookies and the bot_studio library, which allows browser automation, to scrape the list of Twitter followers for the particular user.