- Why Scrape Data from LinkedIn?

- What Data Can You Scrape from LinkedIn?

- How to Scrape LinkedIn with Infatica API

- Features of Infatica LinkedIn Scraper API

- Scrape LinkedIn Using Selenium and BeautifulSoup in Python

- Is scraping LinkedIn legal?

- Conclusion: What is the Best Way to Scrape LinkedIn?

- Frequently Asked Questions

Social media platforms like LinkedIn are growing every year – and so is the importance of LinkedIn scraping. In this article, we’re covering the ins and outs of scraping data from LinkedIn – and how to use Infatica’s easy-to-understand LinkedIn scraping API.

Why Scrape Data from LinkedIn?

As a popular social network, LinkedIn is home to public discourse on thousands of topics among millions of users. Bundled together, these individuals’ posts, comments, and reactions are a goldmine if you’re looking for actionable data – here’s why:

Lead generation. LinkedIn profile scrapers are particularly useful for collecting public profile data, which you can turn into lists of potential customers and leads for effective outreach campaigns.

⭐ Further reading: See our detailed guide to lead generation via web scraping.

Brand monitoring. By extension, your LinkedIn page and relevant groups can be a great way for your clients to interact with your brand – this includes both positive and negative feedback, which you can use to improve your product and create effective outreach campaigns.

Consumer research. LinkedIn scrapers can also provide customer behavior data: Users’ public comments and posts can be analyzed for social listening and personalized engagement.

What Data Can You Scrape from LinkedIn?

As a platform, LinkedIn includes a wide set of page types that aggregate different kinds of data: these include profile info, posts, groups, recommendations, courses, jobs, and more. Here’s a quick overview of what kind of data a typical LinkedIn scraping API can collect:

| Page type | Scraper task |
| --- | --- |
| LinkedIn profiles | Scrape public profile information |
| LinkedIn groups | Scrape public group information |
| LinkedIn job listings | Scrape listed jobs |
| LinkedIn jobs | Scrape job descriptions |
| LinkedIn company profile | Scrape company information |
| LinkedIn search results | Scrape information from keywords and filters |

How to Scrape LinkedIn with Infatica API

Infatica Scraper API is a scraping tool for industry-grade data collection. Supporting platforms like LinkedIn, Amazon, Google, Facebook, and more, it’s sure to become your ultimate LinkedIn scraping API if you give it a try.

Step 1. Sign in to your Infatica account

Your Infatica account has different useful features (traffic dashboard, support area, how-to videos, etc.). It also has your unique api_key value, which we’ll need to use the API – you can find it in your personal account’s billing area.

Step 2. Scrape LinkedIn with a single command

To parse pages from LinkedIn, you only need to specify your API key and the LinkedIn URL:

curl -X POST "https://scrape.infatica.io/" -d '{"api_key": "API_KEY", "url": "https://www.linkedin.com/"}'

This costs 130 credits per request. You don't need to use the render_js parameter.
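For readers who prefer Python, here is a minimal sketch of the same request built with the standard library. The endpoint and the api_key and url fields come from the curl command above; the function names and the 60-second timeout are illustrative assumptions. The response format isn't described in this guide, so the sketch simply returns the raw body as text.

```python
import json
import urllib.request

API_ENDPOINT = "https://scrape.infatica.io/"  # taken from the curl command above


def build_scrape_request(api_key: str, target_url: str) -> urllib.request.Request:
    """Build the POST request that the curl command sends (hypothetical helper)."""
    payload = json.dumps({"api_key": api_key, "url": target_url}).encode("utf-8")
    # Like `curl -d`, urllib falls back to the default form Content-Type
    # header when the request is sent with a body and no explicit header.
    return urllib.request.Request(API_ENDPOINT, data=payload, method="POST")


def scrape(api_key: str, target_url: str, timeout: float = 60.0) -> str:
    """Send the request and return the raw response body as text."""
    request = build_scrape_request(api_key, target_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

With a real key in place of API_KEY, calling scrape("API_KEY", "https://www.linkedin.com/") would send the request; building the request separately makes it easy to inspect the payload before spending credits.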

Features of Infatica LinkedIn Scraper API

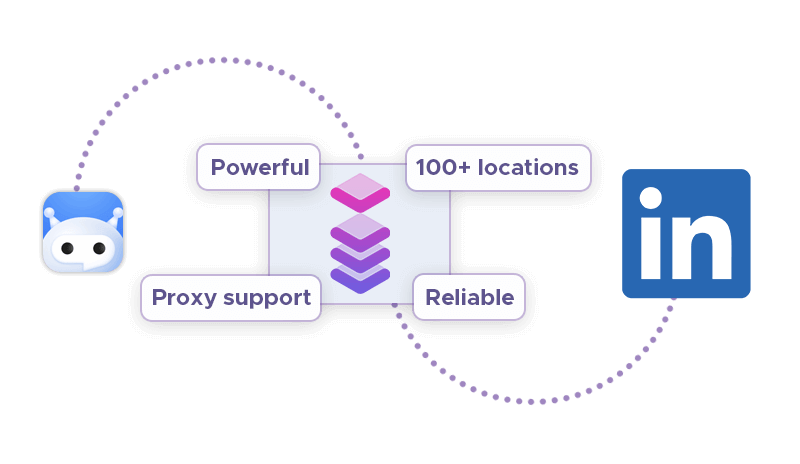

Noticing the struggle of our clients to build a reliable and fast LinkedIn scraping API, we decided to solve this problem by creating an easy-to-use web scraper with support for platforms like LinkedIn, Google, Amazon, Facebook, and many more. The result is Infatica Scraper API and its awesome features:

Millions of proxies: Scraper utilizes a pool of 35+ million datacenter and residential IP addresses across dozens of global ISPs, supporting real devices, smart retries and IP rotation.

100+ global locations: Choose from 100+ global locations to send your web scraping API requests – or simply use random geo-targets from a set of major cities all across the globe.

Robust infrastructure: Make your projects scalable and enjoy advanced features like concurrent API requests, CAPTCHA solving, browser support and JavaScript rendering.

Flexible pricing: Infatica Scraper offers a wide set of flexible pricing plans for small-, medium-, and large-scale projects, starting at just $25 per month.

Scrape LinkedIn Using Selenium and BeautifulSoup in Python

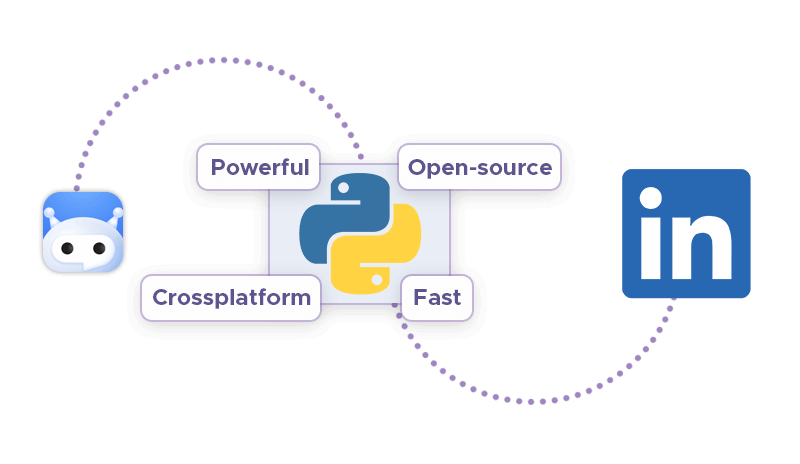

An alternative method of LinkedIn web scraping involves using Python, one of the most popular programming languages for data collection, to build a scraping service of our own. Additionally, we’ll use Selenium, a browser automation suite, and BeautifulSoup, a Python library for parsing HTML documents.

❔ Further reading: We have an up-to-date overview of Python web crawlers on our blog – or you can watch its video version on YouTube.

🍲 Further reading: Using Python's BeautifulSoup to scrape images

🎭 Further reading: Using Python's Puppeteer to automate data collection

Set up the components

First, let’s install the libraries we mentioned above, plus lxml, the parser that the BeautifulSoup calls in this guide rely on:

pip install selenium

pip install beautifulsoup4

pip install lxml

Additionally, our setup requires a web driver – an interface for software to remotely control the behavior of web browsers. Popular web drivers include Chromium (Chrome), Firefox, Edge, Internet Explorer, and Safari; in this guide, we’ll be using the Chrome web driver – you can download it here.

Log into your LinkedIn account

We’ll start with initializing the Selenium web driver and submitting a GET request. Then, we’ll locate page elements that manage the process of logging in. Here’s the code snippet to do that:

from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from bs4 import BeautifulSoup
import time

# Creating a webdriver instance that will be used to log into LinkedIn
# (Selenium 4 syntax: the driver path goes into a Service object)
driver = webdriver.Chrome(service=Service(executable_path="Enter-Location-Of-Your-Web-Driver"))

# Opening LinkedIn's login page
driver.get("https://linkedin.com/uas/login")

# Waiting for the page to load
time.sleep(5)

# Entering the username; in case of an error, try changing
# the element ID used here
username = driver.find_element(By.ID, "username")
username.send_keys("User_email")  # Enter your email address

# Entering the password; in case of an error, try changing
# the element ID used here
pword = driver.find_element(By.ID, "password")
pword.send_keys("User_pass")  # Enter your password

# Clicking on the log in button
# Format (syntax) of writing XPath --> //tagname[@attribute='value']
# In case of an error, try changing the XPath used here
driver.find_element(By.XPATH, "//button[@type='submit']").click()

Scrape LinkedIn profile introduction

LinkedIn data doesn’t load fully unless the page is scrolled to the bottom. To create a LinkedIn profile scraper, we’ll need to input the profile’s URL and scroll the page completely:

from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from bs4 import BeautifulSoup
import time

# Creating an instance (Selenium 4 syntax)
driver = webdriver.Chrome(service=Service(executable_path="Enter-Location-Of-Your-Web-Driver"))

# Logging into LinkedIn
driver.get("https://linkedin.com/uas/login")
time.sleep(5)

username = driver.find_element(By.ID, "username")
username.send_keys("")  # Enter Your Email Address

pword = driver.find_element(By.ID, "password")
pword.send_keys("")  # Enter Your Password

driver.find_element(By.XPATH, "//button[@type='submit']").click()
time.sleep(5)  # giving the login time to complete

# Opening Kunal's Profile: paste the URL of the profile here
profile_url = "https://www.linkedin.com/in/kunalshah1/"
driver.get(profile_url)  # this will open the link

start = time.time()

# Vertical position (in pixels) to scroll to; used in the while loop
finalScroll = 1000

while True:
    # window.scrollTo(x, y) moves the window to the given position,
    # so this scrolls the page down to the pixel value stored in finalScroll
    driver.execute_script(f"window.scrollTo(0, {finalScroll})")
    finalScroll += 1000

    # we will stop the script for 3 seconds so that the data can load;
    # you can change it as per your needs and internet speed
    time.sleep(3)

    end = time.time()

    # We will scroll for 20 seconds.
    # You can change it as per your needs and internet speed
    if round(end - start) > 20:
        break
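A fixed 20-second loop can stop too early on long profiles and waste time on short ones. As an alternative sketch, the helper below keeps scrolling until the page height stops growing. The name scroll_to_bottom, its parameters, and the max_rounds cap are assumptions made for illustration; driver is any object that offers Selenium's execute_script method.

```python
import time


def scroll_to_bottom(driver, pause=3.0, max_rounds=30, sleep=time.sleep):
    """Scroll until document.body.scrollHeight stops growing.

    Returns the number of scroll rounds performed (hypothetical helper).
    """
    last_height = driver.execute_script("return document.body.scrollHeight")
    rounds = 0
    while rounds < max_rounds:
        # Jump to the current bottom of the page to trigger lazy loading.
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight)")
        sleep(pause)  # give the newly requested content time to load
        rounds += 1
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            break  # nothing new was loaded, so the end of the page was reached
        last_height = new_height
    return rounds
```

Calling scroll_to_bottom(driver) right after driver.get(profile_url) would replace the timed loop; the max_rounds cap keeps the helper from running forever on pages that never stop loading.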

To complete our LinkedIn scraper, we’ll add the BeautifulSoup library to process the page structure. Let’s save the LinkedIn profile page’s source code to a variable and feed it into BeautifulSoup:

src = driver.page_source

# Now using beautiful soup

soup = BeautifulSoup(src, 'lxml')

A LinkedIn profile introduction contains elements like first and last name, company name, city, and more – each element has a corresponding HTML tag we’ll need to locate. Let’s use Chrome’s Developer Tools to find them.

We now see that the <div> tag we need has 'class': 'pv-text-details__left-panel'. Let’s input this tag into BeautifulSoup:

# Extracting the HTML of the complete introduction box

# that contains the name, company name, and the location

intro = soup.find('div', {'class': 'pv-text-details__left-panel'})

print(intro)

In the HTML output, we’ll notice the HTML tags we were looking for – let’s input these tags in this code snippet to scrape the profile information:

# In case of an error, try changing the tags used here.

name_loc = intro.find("h1")

# Extracting the Name

name = name_loc.get_text().strip()

# strip() is used to remove any extra blank spaces

works_at_loc = intro.find("div", {'class': 'text-body-medium'})

# this gives us the HTML of the tag in which the Company Name is present

# Extracting the Company Name

works_at = works_at_loc.get_text().strip()

location_loc = intro.find_all("span", {'class': 'text-body-small'})

# Extracting the Location

# The 2nd element in the location_loc variable has the location

location = location_loc[1].get_text().strip()

print("Name -->", name,

"\nWorks At -->", works_at,

"\nLocation -->", location)

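BeautifulSoup's find() returns None when nothing matches, so the snippet above raises an AttributeError as soon as LinkedIn renames a class. A minimal sketch of a more forgiving approach follows. The helper names text_or_default and item_or_none are hypothetical, and FakeTag, with its made-up sample text, is only a stand-in for a BeautifulSoup tag so that the example runs without a live page.

```python
def text_or_default(node, default=""):
    """Return the stripped text of a tag, or `default` if the tag is None."""
    if node is None:
        return default
    return node.get_text().strip()


def item_or_none(items, index):
    """Return items[index], or None if the list is too short."""
    return items[index] if -len(items) <= index < len(items) else None


class FakeTag:
    """Stand-in for a BeautifulSoup tag; only get_text() is needed here."""

    def __init__(self, text):
        self._text = text

    def get_text(self):
        return self._text


# Made-up sample data: the name is present, but only one <span> was found.
name = text_or_default(FakeTag("  Jane Doe \n"), default="N/A")
spans = [FakeTag("Contact info")]
location = text_or_default(item_or_none(spans, 1), default="N/A")
print("Name -->", name, "\nLocation -->", location)
```

With a real page, the same helpers would wrap the original calls, for example text_or_default(intro.find("h1"), "N/A") and text_or_default(item_or_none(location_loc, 1), "N/A"), after first checking that intro itself is not None.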
Scrape LinkedIn profile experience section

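The same method applies to the experience section of a LinkedIn profile – let’s open the Developer Tools and see the corresponding <div> tags. Here’s the code snippet to scrape profile experience; it assumes that the experience variable already holds the HTML of the experience section, located with soup.find() in the same way as intro above: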

# In case of an error, try changing the tags used here.

li_tags = experience.find('div')

a_tags = li_tags.find("a")

job_title = a_tags.find("h3").get_text().strip()

print(job_title)

company_name = a_tags.find_all("p")[1].get_text().strip()

print(company_name)

joining_date = a_tags.find_all("h4")[0].find_all("span")[1].get_text().strip()

employment_duration = a_tags.find_all("h4")[1].find_all(

"span")[1].get_text().strip()

print(joining_date + ", " + employment_duration)

Scrape LinkedIn Job Search Data

Selenium allows us to automate opening the Jobs section. The code snippet below initializes Selenium, opens the Jobs page, and tells BeautifulSoup to collect this data. Here are the data types and their corresponding HTML tags that we'll input:

| Data type | HTML attribute |
| --- | --- |
| LinkedIn jobs | 'class': 'job-card-list__title' |
| LinkedIn companies | 'class': 'job-card-container__company-name' |
| LinkedIn job locations | 'class': 'job-card-container__metadata-wrapper' |

from selenium.webdriver.common.by import By
import re  # for removing the extra blank spaces

# Opening the Jobs page; in case of an error, try changing the XPath.
jobs = driver.find_element(By.XPATH, "//a[@data-link-to='jobs']/span")
jobs.click()

# Waiting for the Jobs page to load
time.sleep(5)

job_src = driver.page_source
soup = BeautifulSoup(job_src, 'lxml')

# In case of an error, try changing the class names used below.
jobs_html = soup.find_all('a', {'class': 'job-card-list__title'})
job_titles = []
for title in jobs_html:
    job_titles.append(title.text.strip())
print(job_titles)

company_name_html = soup.find_all(
    'div', {'class': 'job-card-container__company-name'})
company_names = []
for name in company_name_html:
    company_names.append(name.text.strip())
print(company_names)

location_html = soup.find_all(
    'ul', {'class': 'job-card-container__metadata-wrapper'})
location_list = []
for loc in location_html:
    res = re.sub('\n\n +', ' ', loc.text.strip())
    location_list.append(res)
print(location_list)

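The job_titles, company_names, and location_list lists line up by position, so they can be merged into one record per job and saved for later analysis. The sketch below does this with Python's csv module. The function names, the column names, and the sample values are illustrative assumptions, and the sketch presumes that every job card yielded a title, a company, and a location (zip() silently stops at the shortest list).

```python
import csv
import io


def normalize_whitespace(text):
    """Collapse runs of spaces and newlines into single spaces."""
    return " ".join(text.split())


def build_job_records(job_titles, company_names, location_list):
    """Merge the parallel lists into one dict per job card."""
    return [
        {
            "title": normalize_whitespace(title),
            "company": normalize_whitespace(company),
            "location": normalize_whitespace(location),
        }
        for title, company, location in zip(job_titles, company_names, location_list)
    ]


def write_jobs_csv(records, file_obj):
    """Write the records as CSV to an open text file or buffer."""
    writer = csv.DictWriter(file_obj, fieldnames=["title", "company", "location"])
    writer.writeheader()
    writer.writerows(records)


# Made-up sample values standing in for the scraped lists.
records = build_job_records(
    ["Data Engineer", "QA  Analyst"],
    ["Example Corp", "Sample\n  Ltd"],
    ["Berlin, Germany", "Remote"],
)
buffer = io.StringIO()
write_jobs_csv(records, buffer)
print(buffer.getvalue())
```

To save to disk instead of a buffer, pass a file opened with open("jobs.csv", "w", newline="", encoding="utf-8") to write_jobs_csv.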

Troubleshooting

Although this code has been tested to work in July 2022, it might break at some point in time: LinkedIn is constantly changing its page structure, in part to prevent LinkedIn data scraping efforts. In this case, you’ll have to manually open the page source and edit this guide’s code, replacing obsolete page elements.

If your internet speed is somewhat slow, the connection may fail and LinkedIn may terminate your session. To address this problem, use the time.sleep() function, which will allow the bot more time to connect. For instance, to provide 5 additional seconds, use time.sleep(5).
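A fixed time.sleep() either waits too long or not long enough. One possible refinement, sketched below, is to poll for a condition until a deadline passes. The helper wait_until and its parameters are assumptions made for illustration; Selenium also ships its own WebDriverWait class for the same purpose.

```python
import time


def wait_until(condition, timeout=20.0, poll_interval=0.5,
               clock=time.monotonic, sleep=time.sleep):
    """Call `condition` repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds pass.
    """
    deadline = clock() + timeout
    while True:
        result = condition()
        if result:
            return result
        if clock() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        sleep(poll_interval)
```

For example, wait_until(lambda: "pv-text-details__left-panel" in driver.page_source) would pause the script only until the profile introduction appears in the page source, then let parsing continue.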

Is scraping LinkedIn legal?

Please note that this section is not legal advice – it’s an overview of the latest legal practice related to this topic. We encourage you to consult law professionals to review each web scraping project on a case-by-case basis.

🌍 Further reading: Our blog also features a detailed overview of web scraping legality with analysis of latest legal practice related to data collection, including LinkedIn scraping.

LinkedIn is a global platform, attracting users from all over the world – but regulations that oversee LinkedIn data scraping are created at the regional, national, and even state level. Here’s a list of intellectual property, privacy, and cybersecurity laws that pertain to the legality of LinkedIn web scraping:

- General Data Protection Regulation (GDPR) and

- Digital Single Market Directive (DSM) for Europe,

- California Consumer Privacy Act (CCPA) for California in particular,

- Computer Fraud and Abuse Act (CFAA) and

- The fair use doctrine for the US as a whole,

- And more.

Here’s the good news: Generally, courts have interpreted these regulations to consider web scraping legal. The fair use doctrine in particular lays out a useful guideline: Collecting data is legal, but you need to transform it in a meaningful way to maintain its legality – a good example is using a LinkedIn group scraper to create outreach software. Conversely, simply copying the platform’s data and republishing it is illegal.

HiQ Labs v. LinkedIn – and its consequences

HiQ Labs is a data analytics company that used LinkedIn profile scrapers to analyze employee attrition. In 2017, the two companies ended up in court after LinkedIn demanded that HiQ Labs stop, arguing that the scale of HiQ Labs’ scraping operation was so big that it was more akin to hacking.

Both in 2017 and 2022, the courts sided with HiQ Labs on this point, commenting that scraping LinkedIn wasn’t a violation of the CFAA norms: LinkedIn’s data is publicly available and doesn’t require authorization; therefore, accessing it doesn’t constitute hacking.

Most importantly, the 2022 decision came from the US Court of Appeals for the Ninth Circuit, which reaffirmed its earlier ruling after the Supreme Court sent the case back for reconsideration – this makes other courts more likely to be pro-scraping in their future rulings. Still, like any major tech company, LinkedIn goes above and beyond to protect its data from web scrapers with a set of anti-scraping measures: reCAPTCHA, Cloudflare, etc. This makes residential proxies essential for a successful LinkedIn web scraping pipeline – without proxies, you’re running a much higher risk of getting blocked.

Conclusion: What is the Best Way to Scrape LinkedIn?

With the long list of LinkedIn web scraping options in mind, we made sure to engineer Infatica Scraper API to be the optimal choice: Its reliability and high performance are supplemented by Infatica’s proxy network, which helps you keep your request success rate high.

Power users with more time on their hands can try building their own LinkedIn group scraper using Python and its libraries. Although this solution provides more flexibility and control over the web scraping process, maintaining it and fixing unexpected errors falls on the developer – and this can eat up a lot of their time.

Another alternative involves software with point-and-click interfaces: for instance, Chrome extensions and standalone apps. They can be a great choice when you’re just starting out with LinkedIn scraping: Their graphical interface allows you to collect data without writing code yourself – but this can also be a disadvantage: Should LinkedIn change its page structure, you’ll have to wait for the scraper’s developer to fix this.

Frequently Asked Questions

- Use Infatica Scraper API, which does the majority of heavy-lifting during LinkedIn scraping for you (managing proxies, establishing the connection, saving data, etc.)

- Build a LinkedIn scraper using Python, Selenium, and BeautifulSoup.

- Use a browser extension or an app with a graphical interface.

- 'class': 'job-card-list__title' for LinkedIn jobs

- 'class': 'job-card-container__company-name' for LinkedIn companies

- 'class': 'job-card-container__metadata-wrapper' for LinkedIn job locations