Web scraping is a technique that involves extracting data from websites for various purposes, such as market research, sentiment analysis, or content aggregation. However, web scraping can be challenging and time-consuming due to the complexity and diversity of web pages.

In this article, we will explore how large language models (LLMs), such as GPT models developed by OpenAI, can help us automate and simplify web scraping tasks. We will explain what LLMs are, what they can do, what their limitations are, and how they can be used in chatbot applications like ChatGPT and Bing Chat. We will also show how to generate code snippets for web scraping using LLMs.

What are GPT models?

GPT is a series of large language models (LLMs) that can generate natural language text based on a given input. LLMs use deep learning algorithms to process and understand natural language. They are trained on massive amounts of text data to learn patterns and connections between words and phrases, allowing them to perform many types of language tasks, such as:

- Generating new text,

- Translating languages,

- Analyzing sentiments,

- Leading conversations, and more.

GPT is considered to be one of the most important advances in technology because it has many potential applications and implications for various fields and industries. For example, Bill Gates has this to say in his recent blog post:

In my lifetime, I’ve seen two demonstrations of technology that struck me as revolutionary.

The first time was in 1980, when I was introduced to a graphical user interface—the forerunner of every modern operating system, including Windows…

The second big surprise came just last year. I’d been meeting with the team from OpenAI since 2016 and was impressed by their steady progress. In mid-2022, I was so excited about their work that I gave them a challenge: train an artificial intelligence to pass an Advanced Placement biology exam. Make it capable of answering questions that it hasn’t been specifically trained for. (I picked AP Bio because the test is more than a simple regurgitation of scientific facts—it asks you to think critically about biology.) If you can do that, I said, then you’ll have made a true breakthrough.

I thought the challenge would keep them busy for two or three years. They finished it in just a few months.

How can I access GPT models?

There is a plethora of services that use GPT integrations (e.g. Quora’s Poe app), but we’ll focus on three official implementations of GPT models instead: OpenAI API, ChatGPT, and Bing Chat. These platforms offer the widest set of features, so let’s take a closer look at their strengths and weaknesses.

📅 Relevance alert: Summaries based on the information available as of March 2023. These are experimental services that are still in development – and their functionality may change or be limited without prior notice. In most cases, these products scale with time: Bing Chat, for instance, has been increasing its daily limits from 6 to 8, 10, 15, and 20 replies per conversation.

OpenAI API

| GPT version(s) | GPT-3.5, GPT-4 (limited beta) |

| Availability | Limited availability for GPT-4 |

| Price | $0.06-$0.12 per 1,000 tokens (GPT-4) |

OpenAI API is a platform that allows users to access new AI models developed by OpenAI, such as GPT-3 and GPT-4. Users can try various natural language tasks, such as text completion, code generation, and more. The OpenAI API also provides features such as embeddings, analysis, and fine-tuning to help users customize the models for their specific needs. Users can sign up for the OpenAI API and get access to a free tier or a paid tier depending on their usage.

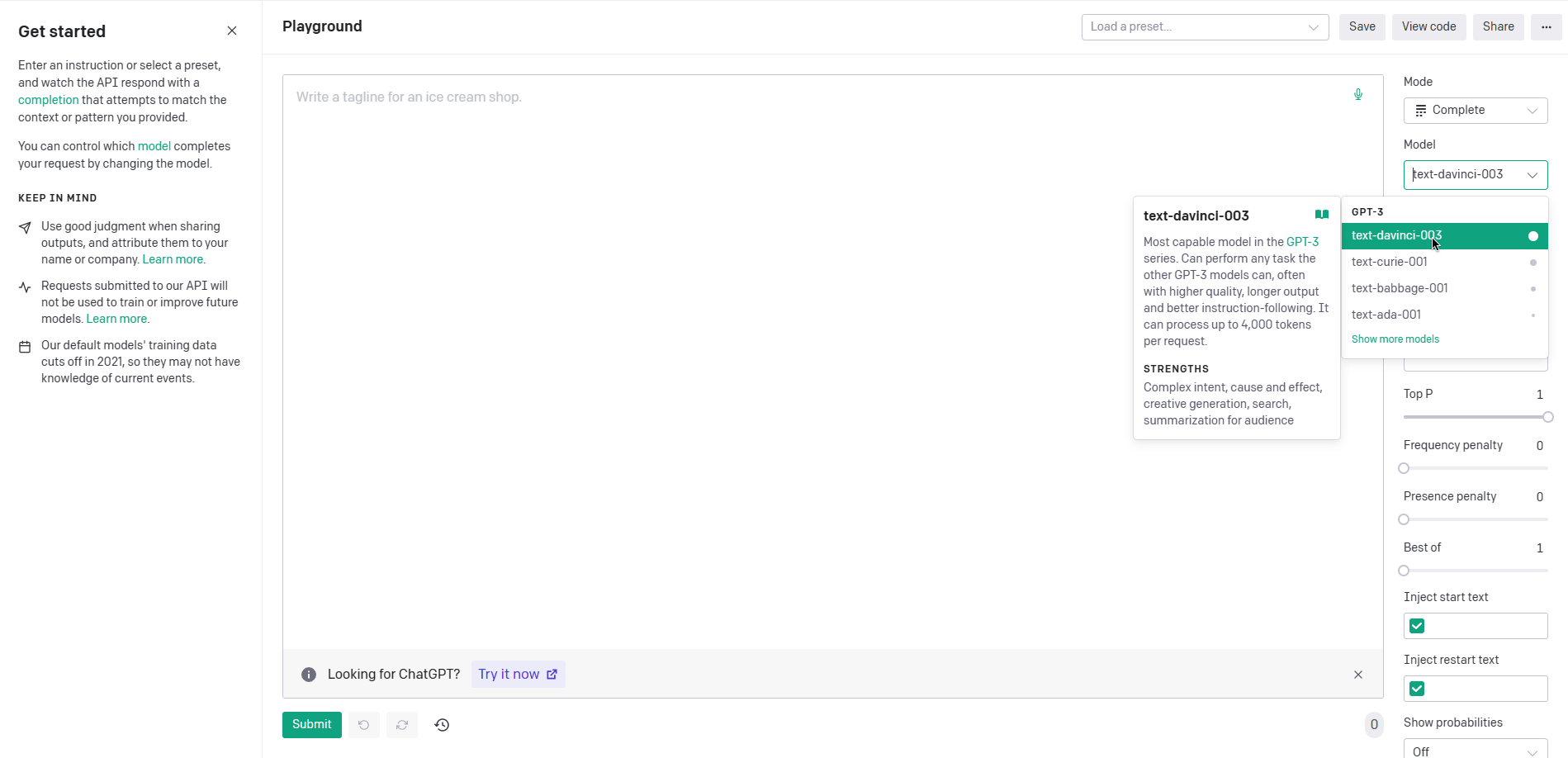

The quickest way to try the API is OpenAI Playground that lets you fine-tune a number of GPT performance and behavior factors such as model type, randomness, output length, and many more.

| OpenAI API pros | OpenAI API cons |

| Offers a spectrum of models with different levels of power suitable for different tasks, as well as the ability to modify your own custom models. | Developing apps that use OpenAI API can be expensive. The cost of training and maintaining AI models can be high, particularly for smaller companies or developers who may need more resources to invest in AI. |

| Simplifies the automation of certain tasks by providing access to a repository of pre-trained models and ML strategies. | Can be complex and require a lot of technical skills and knowledge to use effectively. |

| Plugin support that allows GPT to perform complex mathematical calculations and search the web. |

ChatGPT

| GPT version(s) | GPT-3.5, GPT-4 |

| Availability | Paid subscription required for GPT-4 |

| Price | Free tier; paid tier ($20/mo.) |

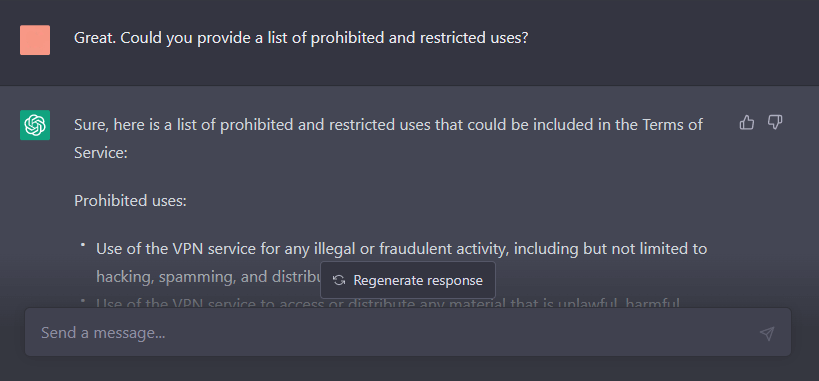

ChatGPT, as the name suggests, implements the GPT functionality via a chatbot interface. Using a conversational GPT model, ChatGPT can interact in a natural and engaging way, answering questions, following instructions, and generating creative content. Here’s a screenshot of its chat interface:

| ChatGPT pros | ChatGPT cons |

| No conversation limits. | Limited input and output capabilities. |

| Better interface for working with code snippets. | Occasional uptime issues even in the paid tier. |

| A maximum of 25 messages every 3 hours when using GPT-4. | |

| No access to web search results. |

Bing Chat

| GPT version(s) | GPT-4 |

| Availability | Requires Microsoft Edge |

| Price | Free |

Bing Chat is an AI assistant integrated into Microsoft’s Edge browser. Similarly to ChatGPT, it uses a conversational GPT model and provides an easy-to-use interface for interacting with it:

| Bing Chat pros | Bing Chat pros |

| Out-of-the-box support for web search, allowing Bing Chat to find relevant information and cite sources. | Limited input and output capabilities. |

| Deep integration with the Edge browser, enabling PDF summarization and other productivity features. | An additional censorship layer by Microsoft which may block certain prompts. |

| A maximum of 20 replies per conversation and a daily limit of 200 replies. (As of March, 26 2023.) |

Using GPT to scrape a static web page

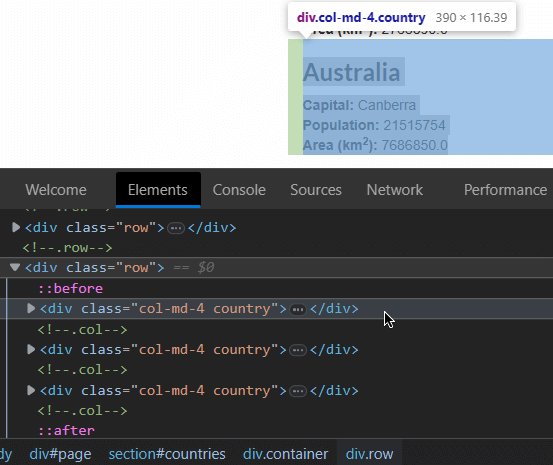

We can start with a website designed for simple web scraping queries. Here is a web page with a list of countries and their capitals, population numbers, and areas – and we can ask our GPT model to help us. First, we’ll need to locate the HTML elements containing the country data – we can do this via the web browser’s dev tools:

Then, we can add these elements to our prompt:

Please generate a code snippet for scraping tweets from this page: https://www.scrapethissite.com/pages/simple/. Look for these class elements: col-md-4 country and country-info. We want to collect the country name, capital, population, and area (e.g. Australia, Capital: Canberra, Population: 21515754, Area (km2): 7686850.0).

Here’s the code GPT generated:

# Import the libraries

import requests

from bs4 import BeautifulSoup

# Define the URL of the web page

url = "https://www.scrapethissite.com/pages/simple/"

# Make a GET request to the URL and store the response

response = requests.get(url)

# Parse the HTML content of the response using BeautifulSoup

soup = BeautifulSoup(response.content, "html.parser")

# Find the <iframe> element that contains the country data

iframe = soup.find("iframe")

# Get the src attribute of the iframe element

iframe_url = iframe["src"]

# Make another GET request to the iframe URL and store the response

iframe_response = requests.get(iframe_url)

# Parse the HTML content of the iframe response using BeautifulSoup

iframe_soup = BeautifulSoup(iframe_response.content, "html.parser")

# Find all the <div> elements with class="col-md-4 country"

countries = iframe_soup.find_all("div", class_="col-md-4 country")

# Create an empty list to store the scraped data

data = []

# Loop through each country element

for country in countries:

# Find the <h3> element that contains the country name and get its text

name = country.find("h3").text.strip()

# Find the <span> element that contains the capital name and get its text

capital = country.find("span", class_="country-capital").text.strip()

# Find the <span> element that contains the population and get its text

population = country.find("span", class_="country-population").text.strip()

# Find the <span> element that contains the area and get its text

area = country.find("span", class_="country-area").text.strip()

# Create a dictionary with the scraped data for this country

country_data = {

"name": name,

"capital": capital,

"population": population,

"area": area

}

# Append the dictionary to the data list

data.append(country_data)

# Print the data list

print(data)Using GPT to scrape Twitter

🏸 Further reading: How to Scrape Twitter: Step-by-Step Guide

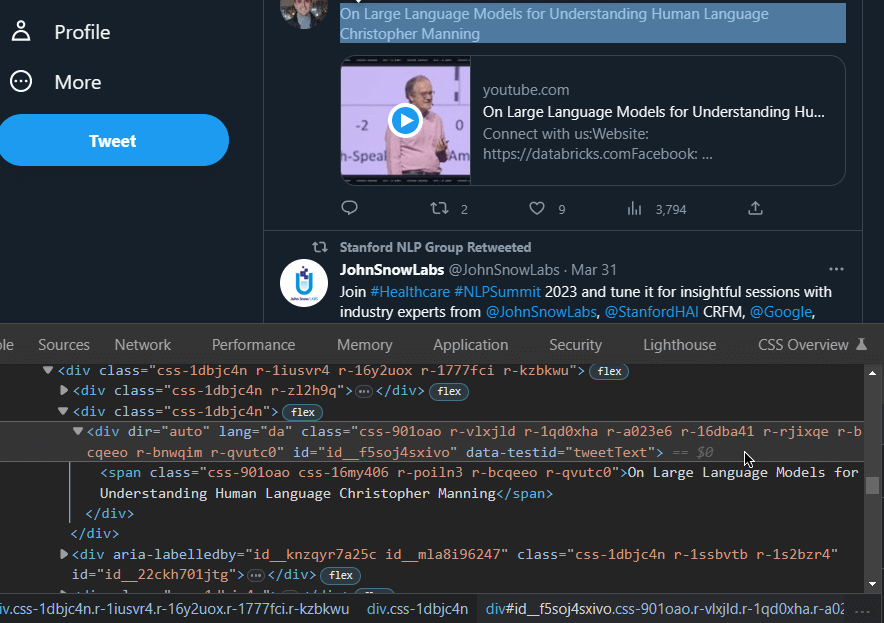

Unlike the previous website, Twitter features dynamic content – this means we’ll need more advanced code. First, let’s create a URL that will define which tweets to scrape: We can do this by using the search button. If we want to scrape tweets that contain the phrase “large language models”, we’ll need this URL: https://twitter.com/search?q=large%20language%20models&src=typed_query.

Then, let’s pick a tweet and analyze which HTML elements correspond to the tweet data. Using dev tools, we can see that we’ll need to focus on the div tag and lang attribute name:

Let’s create a prompt for the GPT model, specifying the HTML elements, tools (Python, Selenium, chromedriver), and their settings:

Please generate a code snippet for scraping tweets from this page: https://twitter.com/search?q=large%20language%20models&src=typed_query. Use Python, Selenium, and chromedriver. Look for elements with the div tag and lang attribute name. Add a 10-second wait period.

Here's the code GPT generated:

# Import the selenium library for web automation

from selenium import webdriver

# Import the time library for adding delays

import time

# Create a Chrome driver object with the path to the chromedriver executable

driver = webdriver.Chrome("/Users/UserOne/GPT-scraping-project/chromedriver")

# Maximize the browser window

driver.maximize_window()

# Navigate to the Twitter search page with the query "large language model"

driver.get("https://twitter.com/search?q=large%20language%20models&src=typed_query")

# Wait for 10 seconds to load the page

time.sleep(10)

# Find all the elements that have the attribute "lang" (which indicates a tweet)

elements = driver.find_elements_by_xpath("//div[@lang]")

# Loop through each element and print its text content

for element in elements:

print(element.text)

# Close the browser and quit the driver

driver.quit()Frequently Asked Questions

As of now, no. As per OpenAI’s Terms of Use:

… OpenAI hereby assigns to you all its rights, title and interest in and to Output. This means you can use Content for any purpose, including commercial purposes such as sale or publication, if you comply with these Terms.