- What is Google Search scraping?

- What is the Purpose of Scraping Google SERPs?

- Which sections of a Google SERP are Scrapable

- Why not use Google’s official search API?

- How to Scrape Google SERP with API

- How do I scrape Google results in Python?

- Other Google Scraping Methods

- Is it legal to scrape Google search results?

- What is the best way for Google Scraping?

- Frequently Asked Questions

We often think of Google as just a search engine – but this platform has developed into a digital powerhouse, whose products define the way we browse the web. Google’s power lies in its data – and you might be wondering how to extract it.

Scraping Google search results is sometimes tricky, but it’s worth the effort: You can use this data to perform search engine optimization, create marketing strategies, set up e-commerce business, and build better products. There are several ways of approaching this task – and in this article, we’re providing an extensive overview of how to scrape Google easily and reliably.

What is Google Search scraping?

We can start with defining this term in general: Web scraping means automated collection of data. Note the word “automated”: Googling “proxies” and writing down the top results manually isn’t web scraping in its real sense. Conversely, using specialized software (scrapers or crawlers) to do this at scale (for dozens of keywords at a time) does constitute web scraping.

By extension, Google search scraping means automation-driven collection of URLs, descriptions, and other search-relevant data.

What is the Purpose of Scraping Google SERPs?

Google may just be the world’s most popular digital product. With more than one billion users worldwide, Google offers a unique insight into customer behavior across each country and region – essentially, Google’s data is the largest encyclopedia of all things commerce. Thousands of companies invest resources into scraping Google SERPs. Armed with this kind of data, they can improve their products on multiple fronts:

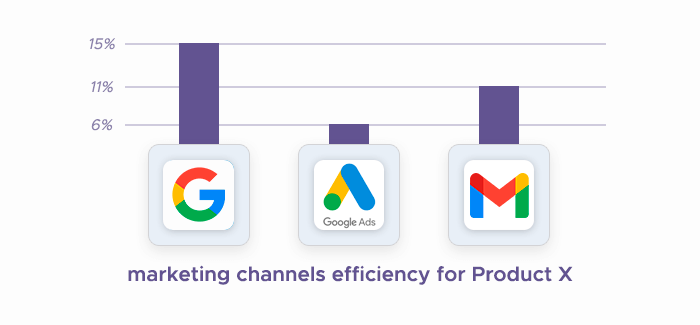

Search engine optimization. Google ranking can break or break the business, so placing high in search results can provide the boost your business needs. To do this, you need to perform SEO upgrades to rank well for the given keywords – and Google SERPs can help you.

Market trends analysis. Google’s data is a reflection of customers’ demand, providing enriched insight into different markets and the products and services dominating these markets. Use Google SERPs to analyze these trends – and even predict them.

Competitor monitoring. Google rankings also reflect the strong and weak sides of your competition: Scrape SERPs to learn more about their performance in various search terms. Other use cases of Google SERPs involve PPC ad verification, link building via URL lists, and more.

Which sections of a Google SERP are Scrapable

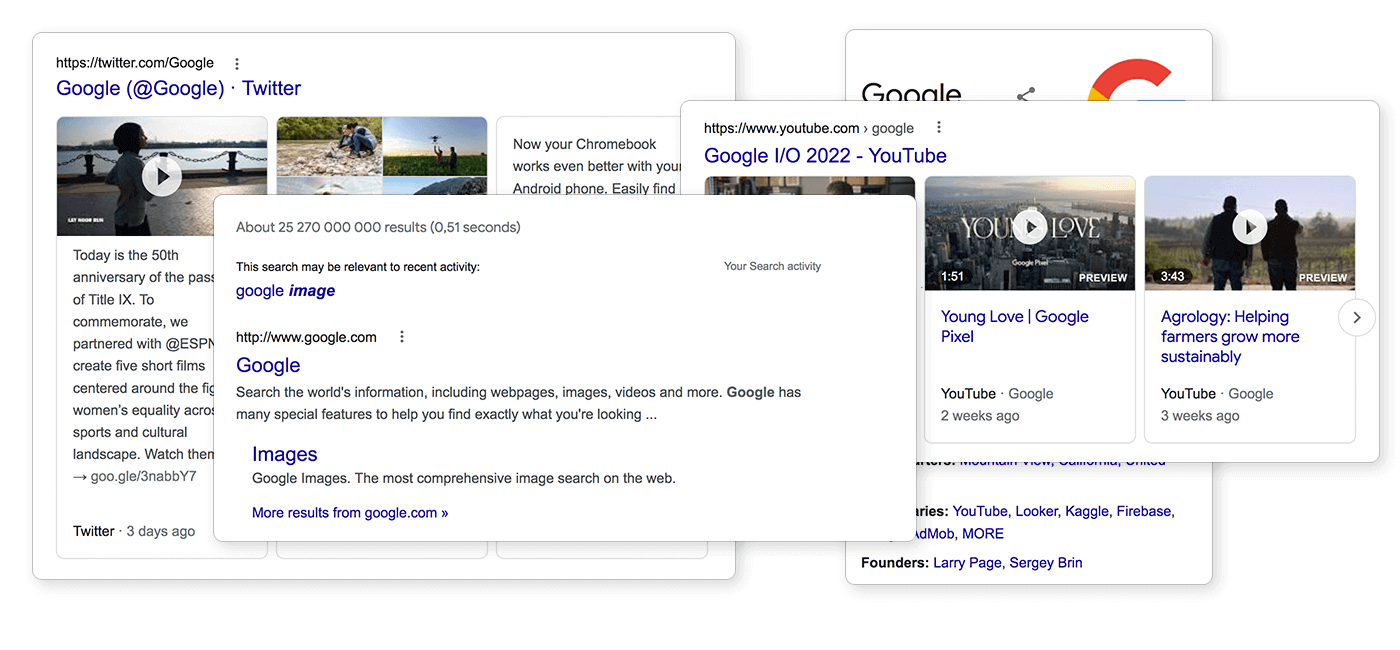

Let’s perform a sample google search to see different SERP sections in action. Searching for “Speed” will mostly give you organic search results – website URLs and short descriptions. You will also see the People also ask section that features popular questions and answers related to your search topic.

On the right, you’ll notice the Featured snippet section: In our case, it showcases the eponymous movie. Searching “France” will net us the Top stories and Related searches sections; and so on. Here’s the list of which SERP components can be scraped:

- Adwords

- Featured Snippet

- In-Depth Article

- Knowledge Card

- Knowledge Panel

- Local Pack

- News Box

- Related Questions

- People also ask

- Reviews

- Shopping Results

- Site Links

- Tweet,

and more.

Why not use Google’s official search API?

Some users prefer official APIs to their third-party counterparts: They’re supported by the platform owners themselves and entail much lower risk of facing account restrictions. Google does offer an official API – but it may be a contestable option:

Pricing. The free tier gets you 100 search queries per day, with paid plans using the pay-as-you-go model: Sending up to 10k requests per day (40 requests per hour) has a monthly fee of $1,500. Additional requests are limited: you cannot exceed 10,000 requests per day – going above this threshold costs even more.

Functionality. The upside of an official API is that it just works. Third-party APIs and scraping services constantly have to deal with changing structures of Google pages, CAPTCHAs, IP address bans, and whatnot; conversely, Google’s official search API may save you a lot of time if you don’t want to deal with the unavoidable technical difficulties of web scraping.

How to Scrape Google SERP with API

One way of collecting Google SERP data involves Infatica Scraper API – an easy-to-use yet powerful tool, which can be a great fit for both small- and large-scale projects. In this example, let’s see how to input various URLs to download their contents:

Step 1. Sign in to your Infatica account

Your Infatica account has different useful features (traffic dashboard, support area, how-to videos, etc.) It also has your unique user_key value which we’ll need to use the API – you can find it in your personal account’s billing area. The user_key value is a 20-symbol combination of numbers and lower- and uppercase letters, e.g. KPCLjaGFu3pax7PYwWd3.

Step 2. Send a JSON request

This request will contain all necessary data attributes. Here’s a sample request:

{

"user_key":"KPCLjaGFu3pax7PYwWd3",

"URLS":[

{

"URL":"https://www.google.com/search?q=download+youtube+videos&ie=utf-8&num=20&oe=utf-8&hl=us&gl=us",

"Headers":{

"Connection":"keep-alive",

"User-Agent":"Mozilla\/5.0 (Macintosh; Intel Mac OS X 10.15; rv:71.0) Gecko/20100101 Firefox/71.0",

"Upgrade-Insecure-Requests":"1"

},

"userId":"ID-0"

}

]

}Here are attributes you need to specify in your request:

- user_key: Hash key for API interactions; available in the personal account’s billing area.

- URLS: Array containing all planned downloads.

- URL: Download link.

- Headers: List of headers that are sent within the request; additional headers (e.g. cookie, accept, and more) are also accepted. Required headers are: Connection, User-Agent, Upgrade-Insecure-Requests.

- userId: Unique identifier within a single request; returning responses contain the userId attribute.

Here’s a sample request containing 4 URLs:

{

"user_key":"7OfLv3QcY5ccBMAG689l",

"URLS":[

{

"URL":"https://www.google.com/search?q=download+youtube+videos&ie=utf-8&num=20&oe=utf-8&hl=us&gl=us",

"Headers":{"Connection":"keep-alive","User-Agent":"Mozilla\/5.0 (Macintosh; Intel Mac OS X 10.15; rv:71.0) Gecko/20100101 Firefox/71.0","Upgrade-Insecure-Requests":"1"},

"userId":"ID-0"

},

{

"URL":"https://www.google.com/search?q=download+scratch&ie=utf-8&num=20&oe=utf-8&hl=us&gl=us",

"Headers":{"Connection":"keep-alive","User-Agent":"Mozilla\/5.0 (Macintosh; Intel Mac OS X 10.15; rv:71.0) Gecko/20100101 Firefox/71.0","Upgrade-Insecure-Requests":"1"},

"userId":"ID-1"

},

{

"URL":"https://www.google.com/search?q=how+to+scrape+google&ie=utf-8&num=20&oe=utf-8&hl=us&gl=us",

"Headers":{"Connection":"keep-alive","User-Agent":"Mozilla\/5.0 (Macintosh; Intel Mac OS X 10.15; rv:71.0) Gecko/20100101 Firefox/71.0","Upgrade-Insecure-Requests":"1"},

"userId":"ID-2"

},

{

"URL":"https://www.google.com/search?q=how+to+scrape+google+using+python&ie=utf-8&num=20&oe=utf-8&hl=us&gl=us",

"Headers":{"Connection":"keep-alive","User-Agent":"Mozilla\/5.0 (Macintosh; Intel Mac OS X 10.15; rv:71.0) Gecko/20100101 Firefox/71.0","Upgrade-Insecure-Requests":"1"},

"userId":"ID-3"

}

]

}Step 3. Get the response and download the files

When finished, the API will send a JSON response containing – in our case – four download URLs. Upon receiving the response, notice its attributes: Status (HTTP status) and Link (file download link.) Follow the links to download the corresponding contents.

{

"ID-0":{"status":996,"link":""},

"ID-1":{"status":200,"link":"https://www.domain.com/files/product2.txt"},

"ID-2":{"status":200,"link":"https://www.domain.com/files/product3.txt"},

"ID-3":{"status":null,"link":""}

}Please note that the server stores each file for 20 minutes. The optimal URL count is below 1,000 URLs per one request. Processing 1,000 URLs may take 1-5 minutes.

How do I scrape Google results in Python?

Python is the most popular programming language when it comes to web scraping. Even though its alternatives (e.g. JavaScript) are catching up and getting their own web scraping frameworks, Python is still invaluable thanks to a wide set of built-in libraries for various operations (files, networking, etc.) Let’s see how we can build a basic web scraper using Python.

🕸 Further reading: We have an up-to-date overview of Python web crawlers on our blog – or you can watch its video version on YouTube.

🍜 Further reading: Using Python's BeautifulSoup to scrape images

🤖 Further reading: Using Python's Puppeteer to automate data collection

Step 1. Add necessary packages

Python packages provide us easy access to specific operations and features, saving us a lot of time. Requests is a HTTP library for easier networking; urllib handles URLs; pandas is a tool for analyzing and manipulating data.

import requests

import urllib

import pandas as pd

from requests_html import HTML

from requests_html import HTMLSession

Step 2. Find the page source

To identify relevant data, we need the given URL’s page source, which shows the underlying page structure and how data is organized. Let’s create a function that does that:

def find_source(url):

"""Outputs the URL's source code.

Args:

url (string): URL of the page to scrape.

Returns:

response (object): HTTP response object from requests_html.

"""

try:

session = HTMLSession()

response = session.get(url)

return response

except requests.exceptions.RequestException as e:

print(e)Step 3. Scrape the search results

To process the search query correctly, we encode it via urllib.parse.quote_plus() – it replaces spaces with the + symbols (e.g. list+of+Airbus+models.) Then, find_source will get the page source. Notice the google_domians list: It contains a list of Google domains which we exclude from scraping. Remove it if you don’t want to miss Google’s own web pages.

def scrape(query):

query = urllib.parse.quote_plus(query)

response = find_source("https://www.google.com/search?q=" + query)

links = list(response.html.absolute_links)

google_domains = ('https://www.google.',

'https://google.',

'https://webcache.googleusercontent.',

'http://webcache.googleusercontent.',

'https://policies.google.',

'https://support.google.',

'https://maps.google.')

for url in links[:]:

if url.startswith(google_domains):

links.remove(url)

return linksTry giving the scrape() function a sample search query parameter and running it. If you input “python”, for instance, you’ll get a list of relevant search results:

scrape(“python”)

['https://www.python.org/',

'https://www.w3schools.com/python/',

'https://en.wikipedia.org/wiki/Python_(programming_language)',

'https://www.programiz.com/python-programming',

'https://www.codecademy.com/catalog/language/python',

'https://www.tutorialspoint.com/python/index.htm',

'https://realpython.com/',

'https://pypi.org/',

'https://pythontutor.com/visualize.html#mode=edit',

'https://github.com/python',]Troubleshooting

Google is particularly wary of allowing third-party access to its data – this is why the company constantly changes its HTML and CSS components to break existing scraping patterns. Here’s what the Search page’s CSS values looked like recently – nowadays, they may be different, thus breaking scrapers that were written back in that year:

css_identifier_result = ".tF2Cxc"

css_identifier_title = "h3"

css_identifier_link = ".yuRUbf a"

css_identifier_text = ".VwiC3b"If your code isn’t working, you can start with the page source to check the CSS identifiers above – css_identifier_result, css_identifier_title, css_identifier_link, and css_identifier_text.

Other Google Scraping Methods

Using an API might seem too complicated for some people. Thankfully, developers address this problem via no-code products with a more user-friendly interface. Some of these products are:

Browser Extensions

These can be a great introduction to scraping Google thanks to their simplicity: A browser extension doesn’t require extensive coding knowledge – or even a separate app to install. Despite their simplicity, browser extensions offer robust JavaScript rendering capabilities, allowing you to scrape dynamic content. To fetch data this way, use the extension’s point-and-click interface: Select the page element and the extension will download it.

Optimal for: no-code users with small-scale projects.

Visual Web Scrapers

These are similar to browser extensions – in both their upsides and downsides. Visual web scrapers are typically installed as standalone programs and offer an easy-to-use scraping workflow – but both visual scrapers and browser extensions may have a hard time processing pages with non-standard structure.

Optimal for: no-code users with small-scale projects.

Data Collection Services

This is arguably the most powerful alternative: You specify target websites and the data you need, deadline, and so on – in return, you get clean data that can be used as soon as possible. You outsource all technical and managerial problems to data collection service developers – and you have to keep in mind that the fees may be substantial.

Optimal for: medium- and large-scale projects with the budget for it.

Is it legal to scrape Google search results?

Please note that this section is not legal advice – it’s an overview of latest legal practice related to this topic.We encourage you to consult law professionals to view and review each web scraping project on a case-by-case basis.

We have a thorough analysis of web scraping legality that includes collecting data from Google, LinkedIn, Amazon, and similar services. In this section, we’ll provide its TL;DR version.

What regulations say

Although the web community is global – Google search results, for instance, come from all across the globe – governing web scraping is actually done by different state-, country-, and region-level regulations. They will define certain aspects of your data collection pipeline: For example, scraping personal data of European users is more limited due to GDPR.

- General Data Protection Regulation (GDPR) and

- Digital Single Market Directive (DSM) for Europe,

- California Consumer Privacy Act (CCPA) for California in particular,

- Computer Fraud and Abuse Act (CFAA) and

- The fair use doctrine for the US as a whole,

- And more.

Whether tech platforms in general or Google specifically, the intellectual property and privacy laws seem to confirm that web scraping is legal – but what you do with collected data is also important: Scraping Google search results to simply monetize them on a different website may be seen as blatant stealing.

Per the DSM directive and the fair use doctrine, what should be done instead is meaningful integration of this data into another service that generates value to its users. A typical example would be an SEO service that helps users improve their websites’ Google rankings.

What Google says

In addition to regulations above, each tech platform offers its own Terms of Use page. Although it doesn’t have the same legal power as, say, GDPR, it often does lay out the dos and don’ts of scraping the given website. Naturally, ToS is a much stricter regulation when it comes to data collection: As the data owner, Google forbids third parties automated access to their services. It also imposes limitations on its own API, only allowing a maximum of 10,000 requests per day.

From Google’s perspective, web scraping is a ToS violation and a bad move overall. Still, Google isn’t known to sue for scraping its content.

What is the best way for Google Scraping?

The web scraping community has produced data collection tools for projects of all scales and budgets: Tech-savvy users can try building a home-made scraping solution tailored to their needs; for more casual data extraction enthusiasts, there are easy-to-use visual interfaces in the web browser. The best option may be hard to define – but we believe that Infatica’s Scraper API has the most to offer:

Millions of proxies & IPs: Scraper utilizes a pool of 35+ million datacenter and residential IP addresses across dozens of global ISPs, supporting real devices, smart retries and IP rotation.

100+ global locations: Choose from 100+ global locations to send your web scraping API requests – or simply use random geo-targets from a set of major cities all across the globe.

Robust infrastructure: Scrape the web at scale at an unparalleled speed and enjoy advanced features like concurrent API requests, CAPTCHA solving, browser support and JavaScript rendering.

Flexible pricing: Infatica Scraper offers a wide set of flexible pricing plans for small-, medium-, and large-scale projects, starting at just $25 per month.

Frequently Asked Questions

Learn more about them in our detailed overview of headless browsers.