In today’s data-driven world, web scraping has become an essential tool for gathering valuable insights from the vast resources available online. In this comprehensive guide, we’ll walk you through the entire process, from initial planning and tool selection to developing, testing, and maintaining your scraper. You'll also explore key ethical and legal considerations to ensure your scraping activities are responsible and compliant. By the end of this article, you'll be equipped with the knowledge and strategies needed to execute a successful web scraping project.

Step 1: Define the Project Goals

Before diving into the technical aspects of web scraping, it’s essential to have a clear understanding of what you aim to achieve. Defining your project goals is the foundation upon which all subsequent steps will be built. This chapter will guide you through the process of setting clear, actionable objectives for your web scraping project.

Identify Your Objectives

What data do I need? Be specific about the type of data you want to collect. Is it product prices, user reviews, social media posts, or something else? Knowing exactly what you’re looking for will help you focus your efforts and choose the right tools and techniques.

Why do I need this data? Understanding the purpose of the data collection is crucial. Are you conducting market research, building a machine learning model, or monitoring competitors? The end goal will influence how you collect, process, and analyze the data.

How will I use this data? Consider how the data will be used once collected. Will it be fed into a database, visualized in dashboards, or used for predictive analytics? This will help determine the format and structure in which the data should be stored.

Scope and Limitations

There may be several scope considerations:

- Data sources: Identify the websites or online platforms from which you’ll be collecting data.

- Data volume: Estimate the amount of data you’ll be collecting.

- Frequency: Decide how often you need to scrape the data.

Additionally, keep these limitations in mind:

- Technical constraints, such as limited access to high-performance servers or bandwidth restrictions.

- Time and resources: Assess the time and resources available for the project.

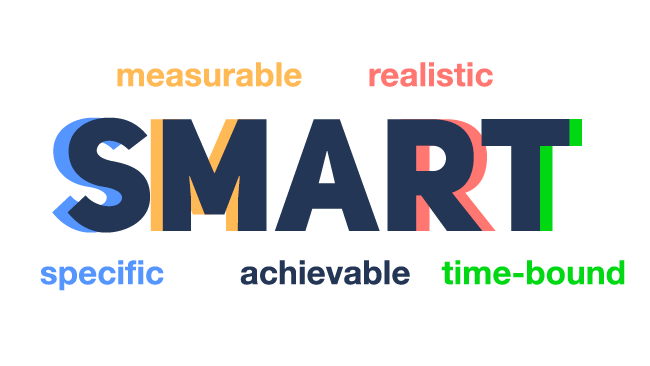

Setting Measurable Goals

With your objectives, scope, and limitations in mind, it’s time to set measurable goals for your project. Measurable goals help you track progress and determine when the project has achieved its objectives. Use the SMART criteria to set your goals:

- Specific: Be clear and specific about what you want to achieve.

- Measurable: Ensure that your goal can be quantified, so you can measure progress.

- Achievable: Make sure the goal is realistic given the resources and constraints.

- Relevant: Ensure the goal is aligned with your overall objectives.

- Time-bound: Set a deadline or timeframe for achieving the goal.

For example, a SMART goal for a web scraping project might be: "Collect and store daily pricing data from the top three competitors’ websites over the next three months, with a 95% success rate in data accuracy."

Step 2: Choose the Right Tools and Technologies

The next step in planning your web scraping project is to choose the right tools and technologies. The tools you select will largely depend on your project’s complexity, the type of data you’re scraping, and your technical expertise. This chapter will guide you through the key considerations when selecting programming languages, libraries, frameworks, and storage solutions.

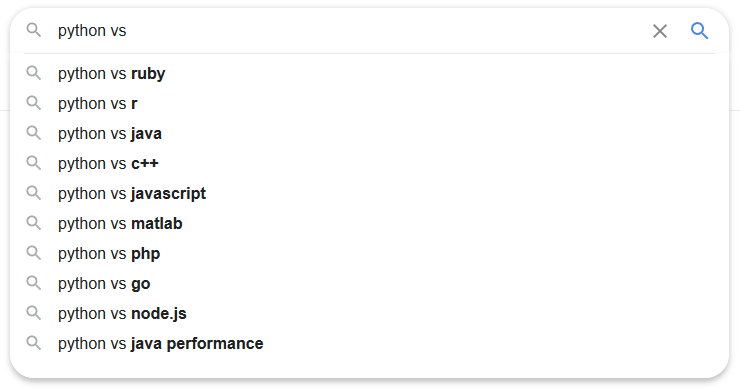

Selecting the Programming Language

The first decision you’ll need to make is which programming language to use for your web scraping project. While several languages can be used for web scraping, the following are among the most popular:

Python is the most widely used language for web scraping due to its simplicity, readability, and extensive ecosystem of libraries. It’s particularly suited for beginners and those who want to quickly prototype their scrapers. Python’s libraries, like BeautifulSoup and Scrapy, offer powerful tools for parsing HTML, handling requests, and managing complex scraping tasks.

JavaScript (Node.js), particularly when used with Node.js, is another popular choice, especially for scraping dynamic websites. With JavaScript, you can manipulate the DOM directly and handle asynchronous requests more naturally. Libraries like Puppeteer and Cheerio make it easier to work with JavaScript-based scraping.

Ruby, with the Nokogiri library, is another option for web scraping. It’s known for its elegant syntax and can be a good choice if you’re already familiar with Ruby or working within a Ruby on Rails environment.

Other languages: Depending on your needs, languages like Java, C, and PHP can also be used for web scraping. These are typically chosen for specific use cases, such as integrating scraping functionality into an existing application built with one of these languages.

Choosing the Right Libraries and Frameworks

Once you’ve chosen your programming language, the next step is to select the libraries and frameworks that will help you accomplish your scraping tasks. Below are some of the most commonly used tools:

BeautifulSoup: A Python library for parsing HTML and XML documents. It creates a parse tree that can be used to navigate the HTML structure and extract the data you need. It’s especially useful for smaller, simpler projects where you need to extract data from a single page or a few similar pages.

Scrapy is a powerful and flexible web scraping framework in Python that allows you to build large-scale, complex scrapers. It’s designed for speed and efficiency, and it includes features like handling requests, following links, managing cookies, and handling asynchronous scraping. Scrapy is ideal for projects that require scraping multiple pages, handling pagination, and storing data in a structured format.

Selenium is a tool for automating web browsers, often used for scraping websites that rely heavily on JavaScript. It allows you to interact with the website as if you were a user, including clicking buttons, filling out forms, and scrolling. Selenium is particularly useful for scraping dynamic content that only loads after user interaction.

Puppeteer is a Node.js library that provides a high-level API to control Chrome or Chromium over the DevTools Protocol. It’s particularly powerful for scraping JavaScript-heavy websites, as it allows you to generate screenshots, PDFs, and even automate user interactions. Puppeteer is often the go-to tool when working within a JavaScript/Node.js environment.

Cheerio is a fast, flexible, and lean implementation of jQuery designed specifically for server-side web scraping in Node.js. It allows you to load HTML, manipulate the DOM, and extract data using a familiar syntax. Cheerio is a good choice for projects where you want the simplicity of jQuery in a Node.js environment.

Playwright is a newer tool from Microsoft that, like Puppeteer, allows for browser automation. It supports multiple browsers and provides powerful features for handling complex scraping scenarios, including scraping dynamic content, handling iframes, and managing multiple browser contexts.

Step 3: Identify and Analyze the Target Website

Before you start writing code, it's crucial to thoroughly understand the website(s) from which you'll be scraping data. Identifying and analyzing the target website is a foundational step that will inform how you design your scraper, what tools you use, and how you handle the data you collect. This chapter will guide you through the process of analyzing a website's structure, dealing with dynamic content, and managing challenges like pagination and large data volumes.

Understanding the Website’s Structure

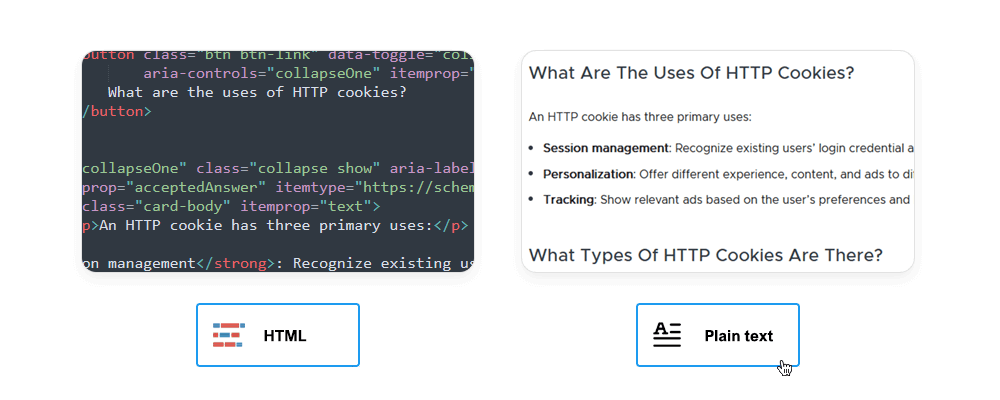

Websites are built using HTML, and understanding the structure of a website’s HTML is key to successfully extracting the data you need. Here’s how to approach this:

Use browser developer tools: The first step is to inspect the website’s HTML structure using your browser’s developer tools. In most browsers, you can do this by right-clicking on the page and selecting "Inspect" or "Inspect Element." This will open the developer console, where you can explore the HTML, CSS, and JavaScript that make up the page.

Identify the data elements: Look for the specific HTML elements (such as <div>, <span>, <table>, etc.) that contain the data you want to extract. Pay attention to the classes, IDs, and attributes associated with these elements, as these will be your anchors for scraping.

Understand the layout: Determine whether the data is organized in a table, list, or another structure. This will help you decide how to navigate and extract the data programmatically. For instance, data in a table may be easier to scrape in a structured format, while data embedded within nested <div> tags may require more complex parsing.

Static vs. dynamic URLs: Determine whether the website uses static URLs (where each page has a unique, unchanging URL) or dynamic URLs (where the URL changes based on user input or navigation). Understanding this will help in planning how to navigate between pages.

Query parameters: If the website uses query parameters (e.g., ?page=2), you can manipulate these parameters to access different parts of the website, such as pagination or search results.

Identifying Anti-Scraping Measures

Many websites deploy anti-scraping measures to protect their content. Recognizing these defenses early on can save you a lot of trouble down the road. Here are some common techniques:

Detection: If a website detects unusual activity, it may present a CAPTCHA to verify that you’re human. Scraping sites with CAPTCHAs can be challenging and may require CAPTCHA-solving services or manual intervention.

Avoidance: To avoid triggering CAPTCHAs, try to mimic human behavior in your scraper by introducing random delays between requests, varying your user-agent strings, and limiting the number of requests per session.

Rotating IP Addresses: Websites may block your IP if they detect excessive requests from a single source. Using a proxy service to rotate IP addresses can help bypass this restriction.

Avoiding Patterns: Ensure that your scraper doesn’t follow a predictable pattern. Randomizing the order of requests, varying the time intervals between them, and mixing in requests for other pages can help avoid detection.

Step 4: Develop and Test the Scraper

With a clear understanding of your project goals, the target website(s), and the tools you'll be using, it's time to start developing your web scraper. Building a scraper is where theory meets practice, and careful development and thorough testing are key to ensuring that your scraper performs reliably and efficiently. This chapter will guide you through the process of writing, testing, and optimizing your scraper, as well as considering alternative solutions like Infatica Scraper.

Writing the Scraper

The development of your scraper involves translating your analysis of the target website into code. This process can vary significantly depending on the complexity of the site and the data you need to extract. Here’s a step-by-step approach:

Install necessary libraries: Start by setting up your development environment. If you’re using Python, this might involve installing libraries like BeautifulSoup, Scrapy, Requests, or Selenium. For JavaScript, you might install Puppeteer or Cheerio.

Use selectors: Utilize CSS selectors, XPath, or other methods to locate the specific elements that contain the data you need. This might involve selecting elements by their tag names, classes, IDs, or attributes.

Extract data: Once you’ve identified the correct elements, write code to extract the text or attributes you’re interested in. For example, you might extract the price of a product, the title of an article, or the URL of an image.

Testing Your Scraper

Thorough testing is essential to ensure that your scraper works correctly and can handle real-world conditions. Here’s how to approach testing:

Monitor Performance: Assess how efficiently your scraper runs. Note the time it takes to complete a scrape and any potential bottlenecks, such as long delays caused by waiting for JavaScript to load.

Scale up gradually: Once you’re confident in your scraper’s performance on a small scale, gradually increase the scope of your testing. Move from scraping a single page to multiple pages, then to the entire dataset.

Test error handling: Introduce intentional errors, such as blocking access to a page or changing the HTML structure, to test how your scraper handles unexpected situations. Ensure that your error handling is robust and that your scraper can recover gracefully.

Monitor for IP blocking: As you scale up your testing, watch for signs that the target website is blocking your IP address. This could include receiving CAPTCHAs, slower response times, or outright denials of service. Implement strategies like rotating proxies or reducing request frequency to mitigate these issues.

Performance Optimization

Optimizing your scraper is key to ensuring it runs efficiently and avoids detection. Here are some strategies:

Mimic human behavior: Introduce random delays between requests to mimic human browsing behavior. This reduces the likelihood of triggering anti-scraping mechanisms on the target website.

Limit request frequency: If you’re scraping large amounts of data, limit the frequency of your requests to avoid overwhelming the server. This can also help prevent your IP from being blocked.

Rotate proxies: Use proxy services to rotate IP addresses, making it harder for the website to detect and block your scraper. This is especially important if you’re scraping from a single IP address.

Randomize user-agent strings: Websites often check the User-Agent string in your requests to identify the type of browser and device making the request. Randomizing this string can help disguise your scraper as a legitimate user.

Considering SaaS Solutions

Building and maintaining a scraper can be complex and time-consuming, especially for large or dynamic websites. Fortunately, there are SaaS (Software as a Service) solutions available that can simplify this process.

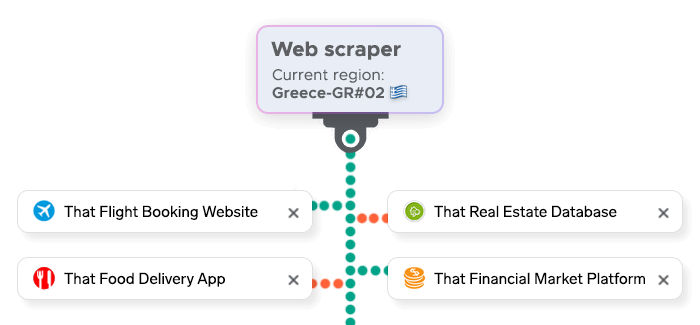

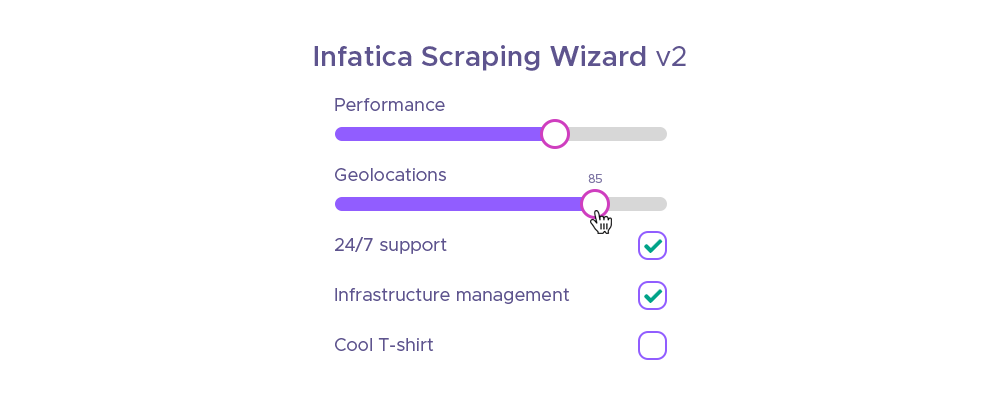

Ease of use: SaaS tools like Infatica Scraper offer user-friendly interfaces that allow you to set up scrapers with little to no coding experience. These tools often include visual workflow designers, making it easy to define what data to extract and how to navigate the website.

Built-in features: Many SaaS scrapers come with built-in features like IP rotation, CAPTCHA solving, and cloud-based data storage. This can save you time and reduce the need for custom development.

Scalability: SaaS solutions are typically designed to scale, making it easier to handle large-scale scraping projects. They often provide options for scheduling scrapes, managing large datasets, and integrating with other tools like databases or data visualization platforms.

When to use SaaS solutions?

- Limited resources: If you’re working on a project with limited resources or expertise, using a SaaS solution can be a cost-effective way to get up and running quickly.

- Complex websites: For websites with complex structures or heavy JavaScript usage, SaaS tools may offer more robust solutions out of the box, without the need for extensive custom coding.

- Ongoing maintenance: If your project requires ongoing scraping and monitoring, SaaS tools can simplify maintenance by handling updates to the website structure and managing backend infrastructure.

Step 5: Store and Manage the Data

After successfully scraping your data, the next critical step is storing and managing it effectively. Proper data management ensures that the information you’ve gathered is accessible, secure, and ready for analysis or further use.

Choosing a Storage Solution

The choice of storage depends on the volume of data, its structure, and how you plan to use it:

Flat Files: For smaller datasets or simple projects, storing data in flat files like CSV, JSON, or Excel can be sufficient. These formats are easy to handle and integrate with data analysis tools.

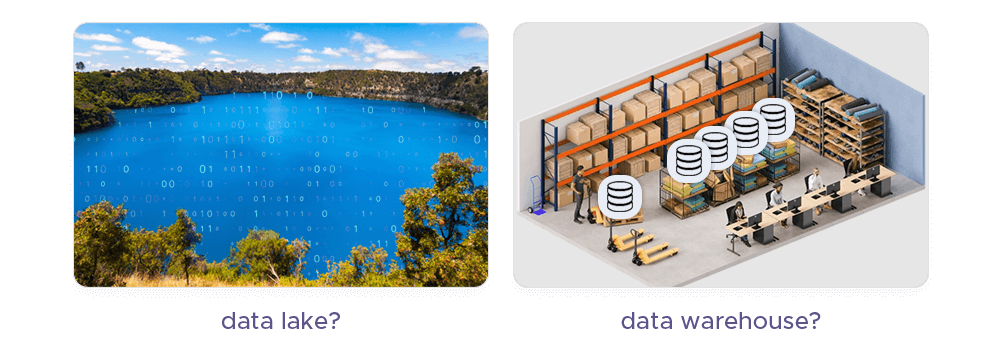

Databases: For larger or more complex datasets, consider using a database.

- SQL Databases: Tools like MySQL, PostgreSQL, or SQLite are ideal for structured data that requires complex queries.

- NoSQL Databases: For unstructured or semi-structured data, MongoDB or CouchDB offer more flexibility and scalability.

Cloud Storage: If you’re dealing with large-scale data or need remote access, cloud storage solutions like AWS S3, Google Cloud Storage, or Azure are excellent options. They offer scalability, security, and easy integration with other cloud-based services.

Organizing and Accessing Data

Data Cleaning: Ensure that the scraped data is cleaned and standardized. This might involve removing duplicates, handling missing values, and transforming the data into a consistent format.

Indexing and Querying: Set up indexing in your database to speed up queries. If you’re storing data in flat files, consider using tools like pandas (in Python) to filter and query the data efficiently.

Backup and Security: Regularly back up your data to prevent loss. Implement security measures, such as encryption, to protect sensitive information.

Step 6: Monitor and Maintain the Scraper

Once your scraper is up and running, it’s essential to monitor its performance and maintain it over time. Websites frequently change, which can break your scraper or lead to inaccurate data collection.

Monitoring the Scraper

Regular Audits: Schedule regular checks to ensure that your scraper is still functioning correctly. This includes verifying that the data being collected is accurate and complete.

Error Handling: Implement robust error handling in your scraper to manage unexpected issues, such as changes in the website’s structure or server downtime. Set up alerts to notify you if the scraper encounters repeated errors.

Performance Metrics: Monitor the performance of your scraper, including its speed, the number of requests made, and the rate of successful data extraction. Tools like logging libraries or custom dashboards can help track these metrics over time.

Maintaining the Scraper

Adapting to Changes: Websites often update their design or layout, which can disrupt your scraper. Be prepared to update your scraper’s code to adapt to these changes. This might involve modifying your HTML selectors, handling new dynamic elements, or adjusting to new pagination methods.

Scalability Adjustments: As your project grows, you may need to scale your scraping infrastructure. This could involve optimizing your code, upgrading your storage solutions, or distributing your scraping tasks across multiple servers or cloud instances.

Documentation: Maintain clear documentation for your scraper, including its functionality, dependencies, and any custom configurations. This is especially important if the scraper will be maintained or updated by others in the future.