- What Is Data Validation?

- Common Types of Data Validation

- Why Data Validation Is Critical in Web Scraping

- Automating Data Validation with APIs and Pipelines

- A Smarter Approach with Infatica Web Scraper API

- Best Practices for Reliable Data Validation

- Common Pitfalls and How to Avoid Them

- Frequently Asked Questions

In today’s data-driven world, businesses rely on accurate and consistent information to make informed decisions. Data validation is the process that ensures the data you collect – whether from internal systems or web sources – is correct, complete, and ready for analysis. Let's explore what data validation is, why it matters, and how structured tools like Infatica’s Web Scraper API can help streamline the process.

What Is Data Validation?

Data validation is the process of ensuring that information is accurate, consistent, and usable before it enters your systems or workflows. In other words, it’s about verifying that your data truly reflects what it’s supposed to represent.

Validation usually happens during or immediately after data collection – checking each data point against predefined rules or logical conditions. For example, a phone number field should contain only digits, a date should match the expected format, and a product price should fall within a realistic range.
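
The three checks mentioned above can be sketched in a few lines of Python. This is a minimal illustration, not a production rule set: the function names, the 7 to 15 digit phone length, the `YYYY-MM-DD` date format, and the price bounds are all assumptions chosen for the example.

```python
import re
from datetime import datetime


def is_valid_phone(value: str) -> bool:
    """Digits only; the 7-15 length bounds are an assumption for this sketch."""
    return re.fullmatch(r"\d{7,15}", value) is not None


def is_valid_date(value: str, fmt: str = "%Y-%m-%d") -> bool:
    """True if the string parses as a real calendar date in the expected format."""
    try:
        datetime.strptime(value, fmt)
        return True
    except ValueError:
        return False


def is_realistic_price(value: float, low: float = 0.01, high: float = 100_000.0) -> bool:
    """Range check; the default bounds are placeholders to tune per dataset."""
    return low <= value <= high
```

Each function answers one question about one field, which keeps the rules easy to test and to reuse across pipelines.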

It’s important to distinguish between data validation and data verification. While verification confirms that data comes from a trusted source, validation confirms that the data itself makes sense and adheres to expected standards. Both are essential for maintaining high-quality datasets, especially when working with data gathered from diverse or unstructured sources, such as websites and online platforms.

Common Types of Data Validation

| Validation Type | Description | Example |
|---|---|---|
| Format validation | Checks whether data follows a specific format or pattern. | An email address must include “@” and a valid domain (e.g., user@example.com). |
| Range validation | Ensures numeric or date values fall within logical limits. | A product price should be greater than 0; a date should not be in the future. |
| Consistency validation | Compares data across fields or datasets to maintain logical coherence. | A shipping date cannot precede the order date. |
| Uniqueness validation | Prevents duplication of records or identifiers. | Each user ID or transaction ID should appear only once in the database. |
| Presence validation | Confirms that all required fields are filled in and not left blank. | Customer name, email, and payment method fields must contain valid data. |
| Cross-field validation | Ensures that related fields align logically. | If “Country” = USA, then “ZIP code” must follow the U.S. format. |
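
To show how these validation types can work together on a single record, here is a rough sketch. The field names (`order_id`, `ship_date`, and so on), the error labels, and the deliberately loose email pattern are hypothetical; a real schema would define its own.

```python
import re
from datetime import date

US_ZIP = re.compile(r"\d{5}(-\d{4})?")
EMAIL = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")  # loose pattern, for illustration only
REQUIRED = ("order_id", "email", "price", "order_date", "ship_date")


def check_record(record, seen_ids):
    """Return a list of error labels; an empty list means the record passed."""
    # Presence: stop early, since the later checks need these fields.
    missing = [f"missing:{f}" for f in REQUIRED if record.get(f) in (None, "")]
    if missing:
        return missing
    errors = []
    if not EMAIL.fullmatch(record["email"]):                      # format
        errors.append("format:email")
    if record["price"] <= 0:                                      # range
        errors.append("range:price")
    if record["ship_date"] < record["order_date"]:                # consistency
        errors.append("consistency:ship_before_order")
    zip_code = str(record.get("zip") or "")
    if record.get("country") == "USA" and not US_ZIP.fullmatch(zip_code):  # cross-field
        errors.append("cross_field:zip")
    if record["order_id"] in seen_ids:                            # uniqueness
        errors.append("uniqueness:order_id")
    seen_ids.add(record["order_id"])
    return errors


seen = set()
order = {"order_id": "A1", "email": "user@example.com", "price": 10.0,
         "order_date": date(2024, 1, 1), "ship_date": date(2024, 1, 3),
         "country": "USA", "zip": "94105"}
first_pass = check_record(order, seen)   # no errors
second_pass = check_record(order, seen)  # same ID again, so uniqueness fails
```

Returning labels rather than raising on the first failure makes it possible to report every problem with a record in one pass.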

Why Data Validation Is Critical in Web Scraping

When collecting data from the web, maintaining accuracy and consistency becomes a major challenge. Unlike structured databases, websites vary widely in layout, formatting, and data presentation – and those layouts can change without warning. As a result, scraped data can easily become incomplete, duplicated, or inconsistent.

Data validation ensures that the information extracted from online sources is both usable and trustworthy. Without it, even small errors can cascade into misleading analytics or poor business decisions. Common validation challenges in web scraping include:

- Inconsistent formats: Prices, dates, and measurements often differ between websites or regions.

- Missing fields: On dynamic or JavaScript-rendered pages, some data elements may be absent from the HTML the scraper actually receives.

- Duplicate entries: The same product, listing, or user profile may appear multiple times.

- Localization differences: Data like currency, time zones, or decimal separators can vary by region.

- Outdated information: Cached or stale pages can return obsolete results.

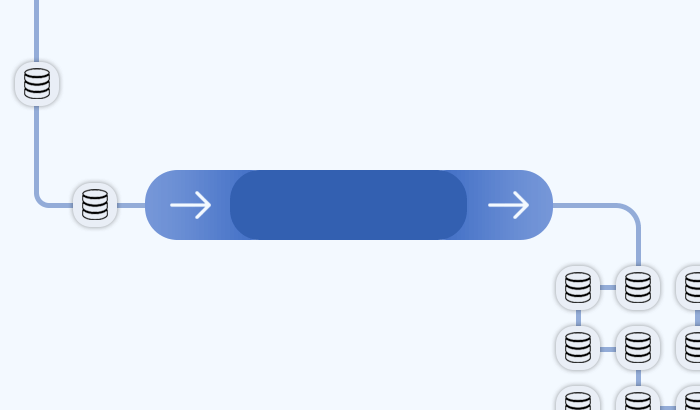
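
Localization differences are a good example of why scraped values need normalizing before they can be compared. The sketch below parses price strings that use different decimal separators. The `parse_price` helper and its heuristic are assumptions made for illustration: when both separators appear, the last one is treated as the decimal mark, and a single separator followed by exactly three digits is treated as a thousands separator. That second rule is a guess that can be wrong, so a per-source locale setting would be safer where the source is known.

```python
import re
from decimal import Decimal


def parse_price(text: str) -> Decimal:
    """Parse strings such as '1.299,00 €', '$1,299.00' or '19,99' into a Decimal."""
    digits = re.sub(r"[^\d.,]", "", text)  # drop currency symbols and spaces
    if not any(ch.isdigit() for ch in digits):
        raise ValueError(f"no numeric value in {text!r}")

    last_dot, last_comma = digits.rfind("."), digits.rfind(",")
    if last_dot != -1 and last_comma != -1:
        # Both present: whichever comes last is the decimal separator.
        decimal_sep = "." if last_dot > last_comma else ","
    elif last_dot != -1 or last_comma != -1:
        sep = "." if last_dot != -1 else ","
        tail = digits[digits.rfind(sep) + 1:]
        # Ambiguous case: assume thousands separator when exactly 3 digits follow.
        decimal_sep = None if len(tail) == 3 else sep
    else:
        decimal_sep = None

    if decimal_sep is None:
        return Decimal(re.sub(r"[.,]", "", digits))
    whole, _, frac = digits.rpartition(decimal_sep)
    whole = re.sub(r"[.,]", "", whole) or "0"
    return Decimal(f"{whole}.{frac}")
```

`Decimal` is used instead of `float` so that prices such as 19.99 are stored exactly rather than as binary approximations.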

Automating Data Validation with APIs and Pipelines

As data volumes grow, manual validation quickly becomes impractical. Modern organizations rely on automated validation workflows that operate within their data pipelines – continuously checking, cleaning, and enriching data as it moves from source to storage.

Automation ensures that errors are detected early, before they affect analytics or business decisions. It also allows teams to scale data operations without scaling the risk of inconsistencies. A typical automated validation workflow includes:

- Data collection: Raw data is gathered from various sources, such as websites, APIs, or databases.

- Schema enforcement: Each dataset is checked against predefined field types and formats.

- Deduplication: Duplicate entries are detected and removed automatically.

- Normalization: Data is standardized – for example, date formats, currencies, or measurement units are unified.

- Integrity checks: Cross-field and range validations ensure logical consistency.

- Storage and monitoring: Clean, validated data is stored in a database or data warehouse, with ongoing quality monitoring.

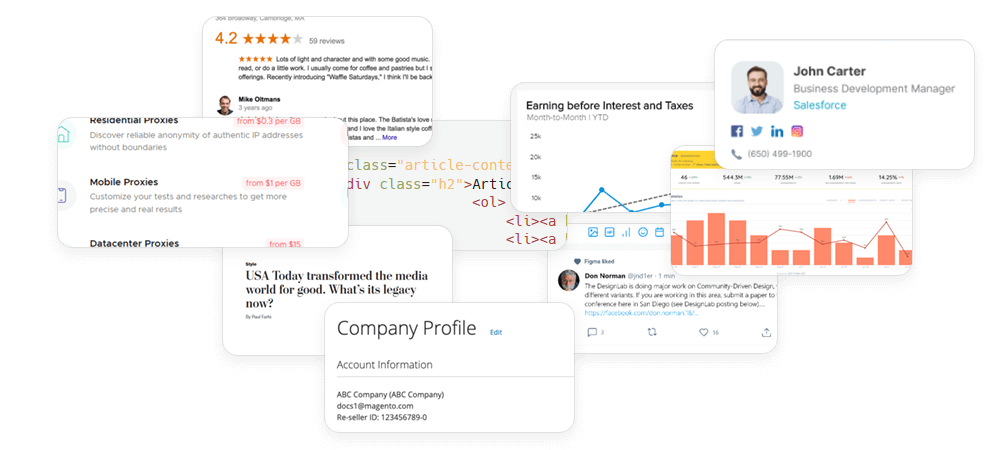
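
The workflow above can be approximated as a chain of small functions. The following is a simplified sketch under stated assumptions: the schema fields (`sku`, `name`, `price`, `scraped_at`) are hypothetical, data collection and storage are left out, and the first occurrence of a duplicate is the one that is kept.

```python
from datetime import datetime

# Hypothetical schema: field name -> accepted Python type(s).
SCHEMA = {"sku": str, "name": str, "price": (int, float), "scraped_at": str}


def enforce_schema(rows):
    """Split rows into those matching SCHEMA and those that do not."""
    ok, rejected = [], []
    for row in rows:
        valid = all(isinstance(row.get(k), t) for k, t in SCHEMA.items())
        (ok if valid else rejected).append(row)
    return ok, rejected


def deduplicate(rows, key="sku"):
    """Keep the first row seen for each key value."""
    seen, unique = set(), []
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            unique.append(row)
    return unique


def normalize(rows):
    """Trim whitespace in names and round prices to two decimals."""
    return [{**r, "name": r["name"].strip(), "price": round(r["price"], 2)} for r in rows]


def integrity_ok(row):
    """Range and format checks that need the normalized values."""
    try:
        datetime.fromisoformat(row["scraped_at"])
    except ValueError:
        return False
    return row["price"] > 0 and row["name"] != ""


def run_pipeline(rows):
    typed, rejected = enforce_schema(rows)
    cleaned = normalize(deduplicate(typed))
    valid = [r for r in cleaned if integrity_ok(r)]
    rejected += [r for r in cleaned if not integrity_ok(r)]
    return valid, rejected
```

Keeping rejected rows instead of discarding them gives the monitoring step something to count and inspect.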

A Smarter Approach with Infatica Web Scraper API

To make automation efficient, the data collection step itself should already produce well-structured and predictable output. This is where tools like Infatica’s Web Scraper API play a crucial role. Instead of dealing with raw HTML and inconsistent page layouts, users receive preformatted JSON or CSV data – minimizing the need for extensive post-scraping cleanup. By integrating Infatica’s Web Scraper API into your pipeline, you can:

- Collect structured data directly from web pages in real time.

- Reduce validation complexity by ensuring consistent data formats.

- Automate large-scale data extraction with minimal human intervention.

Best Practices for Reliable Data Validation

Whether you’re validating scraped data, internal metrics, or third-party datasets, follow these best practices to ensure long-term reliability and accuracy.

Define validation rules early

Establish clear validation criteria before data collection begins. Specify acceptable formats, ranges, and required fields, and document these standards so every system or team involved uses the same reference.
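
One way to document rules so that every team uses the same reference is to keep them as data rather than scattering them through code. The sketch below assumes a small, made-up rule vocabulary (`required`, `pattern`, `min`, `max`, `allowed`) and hypothetical fields; schema libraries offer richer versions of the same idea.

```python
import re

# Hypothetical rule set, kept in one place as the shared reference.
RULES = {
    "email": {"required": True, "pattern": r"[^@\s]+@[^@\s]+\.[^@\s]+"},
    "price": {"required": True, "min": 0.01, "max": 100_000},
    "currency": {"required": False, "allowed": {"USD", "EUR", "GBP"}},
}


def validate(record, rules=RULES):
    """Apply the declarative rules and return human-readable error strings."""
    errors = []
    for field, rule in rules.items():
        value = record.get(field)
        if value is None or value == "":
            if rule.get("required"):
                errors.append(f"{field}: required")
            continue
        if "pattern" in rule and not re.fullmatch(rule["pattern"], str(value)):
            errors.append(f"{field}: bad format")
        if "min" in rule or "max" in rule:
            if isinstance(value, bool) or not isinstance(value, (int, float)):
                errors.append(f"{field}: not a number")
            elif not rule.get("min", value) <= value <= rule.get("max", value):
                errors.append(f"{field}: out of range")
        if "allowed" in rule and value not in rule["allowed"]:
            errors.append(f"{field}: not allowed")
    return errors
```

Because the rules are plain data, they can be reviewed, versioned, and changed without touching the validation logic.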

Combine client-side and server-side validation

Use layered validation: quick, inexpensive checks at the collection point (client-side) and more comprehensive, rule-based checks on the backend (server-side). Rejecting obviously malformed records early keeps the heavier checks from running on data that would fail anyway, while the backend pass protects data integrity.

Standardize data formats

Adopt a consistent schema across all sources – including field names, data types, and units of measurement. Standardization reduces validation complexity and makes merging datasets more efficient.

Test and sample regularly

Perform small-scale validation tests before scaling to full data collection. Sampling new or updated datasets helps you detect format changes, broken fields, or unexpected anomalies early.
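
A sampling check can be as simple as validating a random subset and looking at the failure rate. In this sketch, `sample_failure_rate` and its parameters are hypothetical names, and `is_valid` stands for whatever per-record check your pipeline already has.

```python
import random


def sample_failure_rate(records, is_valid, sample_size=100, seed=None):
    """Validate a random sample and return the share of records that fail (0.0 to 1.0)."""
    rng = random.Random(seed)  # pass a seed for reproducible samples
    if len(records) <= sample_size:
        sample = records
    else:
        sample = rng.sample(records, sample_size)
    if not sample:
        return 0.0
    failures = sum(1 for record in sample if not is_valid(record))
    return failures / len(sample)
```

A sudden jump in this rate on a new or updated source is often the first visible sign that a page layout or field format has changed.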

Monitor continuously

Data validation isn’t a one-time process. Continuous monitoring – through dashboards, anomaly detection, or automated alerts – ensures data quality remains high as websites, sources, and APIs evolve.
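
As a rough illustration of an automated alert, the sketch below compares how often each field is filled in the latest batch against a stored baseline. The function names, the baseline values, and the 5-point tolerance are assumptions; in practice the alert messages would go to a dashboard or notification channel.

```python
def fill_rates(records, fields):
    """Share of records in which each field is present and non-empty."""
    total = len(records)
    if total == 0:
        return {f: 0.0 for f in fields}
    return {
        f: sum(1 for r in records if r.get(f) not in (None, "")) / total
        for f in fields
    }


def quality_alerts(records, baseline, tolerance=0.05):
    """Return a message for every field whose fill rate fell below baseline - tolerance."""
    rates = fill_rates(records, baseline.keys())
    return [
        f"{field}: fill rate {rates[field]:.0%} (baseline {expected:.0%})"
        for field, expected in baseline.items()
        if rates[field] < expected - tolerance
    ]
```

The same pattern works for other batch-level metrics, such as duplicate rate or the share of values outside an expected range.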

Use trusted data sources

Validation is easier when the data itself is consistent and reliable. Collecting information through stable, well-structured pipelines – such as Infatica’s Web Scraper API – helps minimize inconsistencies and errors at the source, reducing the need for extensive revalidation.

Common Pitfalls and How to Avoid Them

Even with well-defined validation rules, data quality can still suffer due to overlooked details or process gaps. Recognizing common pitfalls early helps prevent errors that can compromise entire datasets:

Ignoring inconsistent formats

Problem: Data collected from multiple sources may use different formats for dates, currencies, or numeric separators.

Solution: Apply standardized schemas and normalization rules to all datasets. When collecting data from the web, choose tools that output structured, uniform formats to reduce conversion effort.
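
For dates, a normalization rule might look like the sketch below, which converts several known input formats to ISO 8601. The format list is an assumption and should be set per source: a string like `03/05/2024` is ambiguous between day-first and month-first conventions, so the order of formats matters.

```python
from datetime import datetime

# Hypothetical list of formats seen across sources; the first match wins.
KNOWN_FORMATS = ("%Y-%m-%d", "%d.%m.%Y", "%m/%d/%Y")


def to_iso_date(text, formats=KNOWN_FORMATS):
    """Return the date as 'YYYY-MM-DD', or None if no known format matches."""
    for fmt in formats:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None
```

Returning `None` for unparseable values, rather than guessing, lets the missing-value handling deal with them explicitly.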

Overlooking missing or null values

Problem: Blank fields or incomplete records can distort analytics and lead to false conclusions.

Solution: Identify required fields and set automated alerts for missing data. Apply fallback logic or rescraping procedures for critical fields.
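
A possible shape for this logic: records missing a required field go to a rescrape queue, while optional fields receive an explicit placeholder. The field names, the `url` key, and the `"unknown"` placeholder are hypothetical choices for this sketch.

```python
REQUIRED_FIELDS = ("title", "price")            # assumed critical fields
OPTIONAL_DEFAULTS = {"availability": "unknown"}  # assumed fallback values


def triage(records):
    """Split records into complete ones and entries that need rescraping."""
    complete, needs_rescrape = [], []
    for record in records:
        missing = [f for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
        if missing:
            needs_rescrape.append({"url": record.get("url"), "missing": missing})
            continue
        filled = dict(record)
        for field, default in OPTIONAL_DEFAULTS.items():
            if filled.get(field) in (None, ""):
                filled[field] = default
        complete.append(filled)
    return complete, needs_rescrape
```

Using a visible placeholder instead of a plausible-looking default keeps filled-in values from being mistaken for real data later.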

Failing to revalidate after changes

Problem: Websites, APIs, and data models evolve – making old validation rules obsolete.

Solution: Schedule periodic schema reviews and refresh validation scripts regularly to match new data structures.
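
Between scheduled reviews, a lightweight drift check can flag when incoming data no longer matches the expected structure. The sketch below assumes the expected schema is stored as a mapping from field name to the set of allowed Python type names; that representation is an illustrative choice, not a standard.

```python
def observed_schema(records):
    """Map each field name to the set of type names seen for it."""
    schema = {}
    for record in records:
        for field, value in record.items():
            schema.setdefault(field, set()).add(type(value).__name__)
    return schema


def schema_drift(expected, records):
    """Compare incoming records with the expected schema and report differences."""
    observed = observed_schema(records)
    return {
        "missing_fields": sorted(set(expected) - set(observed)),
        "new_fields": sorted(set(observed) - set(expected)),
        "type_changes": {
            field: sorted(observed[field])
            for field in expected
            if field in observed and not observed[field] <= expected[field]
        },
    }
```

A non-empty report is a prompt to review the validation rules, since a renamed field or a number that now arrives as a string usually means the source has changed.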

Duplicating data unintentionally

Problem: The same entries appear multiple times due to aggregation or parallel scraping.

Solution: Use unique identifiers and automated deduplication routines to maintain dataset integrity.
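
When parallel scrapers collect the same item more than once, a common approach is to build a normalized composite key and keep only the most recent record. This sketch assumes hypothetical `source`, `sku`, and `scraped_at` fields, and that timestamps are ISO 8601 strings in one consistent format, so they can be compared as text.

```python
def dedupe_latest(records, key_fields=("source", "sku"), ts_field="scraped_at"):
    """Keep the newest record for each normalized key."""
    latest = {}
    for record in records:
        # Normalize so that 'Shop-A' / 'shop-a ' count as the same identifier.
        key = tuple(str(record[f]).strip().lower() for f in key_fields)
        if key not in latest or record[ts_field] > latest[key][ts_field]:
            latest[key] = record
    return list(latest.values())
```

Normalizing the key before comparison matters because duplicates from different scraping runs rarely match character for character.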

Assuming scraped data is “clean by default”

Problem: Even reliable scraping tools can return unexpected variations caused by layout changes, redirects, or dynamic content.

Solution: Implement post-scraping validation layers that check completeness, accuracy, and logical consistency.