Over the last few years, we’ve been exploring the ins and outs of data collection: how to tell web scraping from web crawling, how to collect data responsibly, how to use residential proxies, and so on. Although web scraping often looks like magic (we input a command and get structured data from any place on the web in return!), it’s really just a number of building blocks (internet protocols, web crawlers, utilities, and more) that work together to make data collection possible.

One of these building blocks is cURL, a command line tool for transferring data. In this article, we’re taking a closer look at web scraping with cURL and exploring how it works, its pros and cons, and how to use it to gather data effectively.

What is cURL?

Here’s a quick definition: cURL is an open-source command-line utility for transferring data using URL syntax. This definition holds a few key terms that can help us understand cURL even better, so let’s explore them in greater detail:

Open-source: cURL isn’t a proprietary program that you have to pay for — instead, it's a free project maintained by the programming community. As Everything curl, the most extensive cURL guide, describes it:

A funny detail about Open Source projects is that they are called "projects", as if they were somehow limited in time or ever can get done. The cURL "project" is a number of loosely coupled individual volunteers working on writing software together with a common mission: to do reliable data transfers with Internet protocols, and give the code to anyone to use for free.

This means that, upon reading the article, you can visit cURL’s page on GitHub and contribute to the project, adding new features or fixing some pesky bugs.

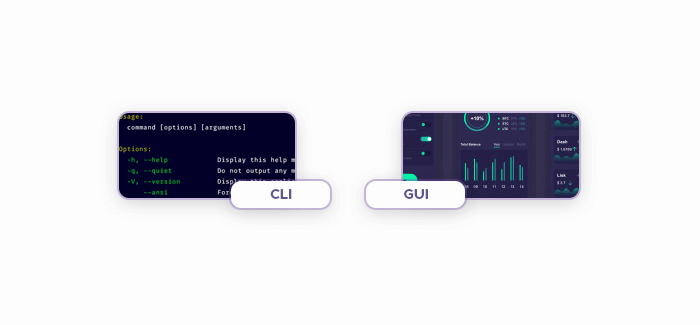

Command-line: cURL doesn’t have a GUI (graphical user interface); it only has a CLI (command-line interface). This means that you can’t use it by interacting with graphical elements like buttons or drop-down menus. Instead, you have to run cURL inside your terminal, a program for running command-line applications.

Making the transition from GUIs to CLIs can be disorienting, but it can also increase your productivity: CLIs let you chain multiple commands and options together and reuse them in scripts.

Utility: cURL isn’t a full-blown desktop application; it’s a lightweight utility. Thanks to its small size, OS vendors ship cURL with their products, including Windows 10 (version 1803 or later), macOS, and most Linux distros.

Transferring data: cURL is only designed for one thing — transferring data — but it does this one thing exceptionally well.

URL: cURL uses URLs to navigate the web.

cURL’s full name — client URL — gives us a hint of how it works: It has something to do with URLs. Let's take a closer look at these components.

cURL usage crash course

Since cURL is a command-line utility, you’ll need a terminal application to run it: Some good options are PowerShell in Windows and Terminal in macOS. Open your terminal app and type `curl`: If the utility is properly installed, you’ll get the default welcoming message:

curl: try 'curl --help' or 'curl --manual' for more information.

Sending requests

At its most basic level, cURL only needs a URL to fetch data. Here’s an example command that makes cURL request a website’s home page:

curl www.website.com

Running this command prints the response body, typically the page’s HTML, to your terminal.
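The basic request above can be wrapped in a small shell function so it is easier to reuse. This is only a minimal sketch: the function name `fetch_page` is a hypothetical helper, not part of cURL, while the options it passes (`--silent`, `--show-error`, `--location`, `--fail`) are standard cURL flags.

```shell
# fetch_page URL: print the response body of URL to standard output.
# (fetch_page is a hypothetical helper name, not part of cURL.)
fetch_page() {
  # --silent      hide the progress meter
  # --show-error  still print a message if the transfer fails
  # --location    follow HTTP redirects
  # --fail        exit with a non-zero status on HTTP errors (4xx/5xx)
  curl --silent --show-error --location --fail "$1"
}

# Usage (commented out so that nothing is requested on load):
# fetch_page "https://www.website.com" > page.html
```

Redirecting the output into a file, as in the usage line, keeps a local copy of the page for later parsing.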

In the previous section we mentioned that command-line tools let you chain commands and options together. This is why the general form of a cURL command looks like this:

curl [options] [URL]

Here’s a real-world example:

curl --ftp-ssl ftp://website.com/cat.txt

In the command above, we’re passing an option (--ftp-ssl, which asks cURL to try SSL/TLS for the FTP connection; newer cURL versions call it --ssl) to get a file titled cat.txt.

cURL supports a myriad of data transfer protocols (DICT, FILE, FTP, FTPS, GOPHER, HTTP, HTTPS, IMAP, IMAPS, LDAP, LDAPS, MQTT, POP3, POP3S, RTMP, RTMPS, RTSP, SCP, SFTP, SMB, SMBS, SMTP, SMTPS, TELNET, and TFTP), so sending a request over any of them is pretty easy. To change the protocol from the default HTTP, put the scheme at the start of the URL:

curl ftp://website.com
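Which of these protocols are actually available depends on how your copy of cURL was built. `curl --version` prints a `Protocols:` line listing them, and the sketch below checks that line for a given name. The helper name `supports_protocol` is hypothetical.

```shell
# supports_protocol NAME: succeed if the local cURL build lists NAME
# on the "Protocols:" line of `curl --version`.
# (supports_protocol is a hypothetical helper name.)
supports_protocol() {
  curl --version | grep -i '^Protocols:' | grep -qiw -- "$1"
}

# Usage (commented out):
# if supports_protocol sftp; then echo "SFTP is available"; fi
```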

Since data transfer involves both sending and receiving data, we’ll often need to add the corresponding options to the commands we run. To send data to a server, we can use the HTTP POST method, which cURL switches to when we pass the -d (data) option. Here’s how to submit a form as the user James with the password 12345:

curl -d "user=James&pass=12345&id=test_id&ding=submit" http://www.website.com/getthis/post.cgi
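When form values may contain characters such as `&`, `=`, or spaces, it can be safer to let cURL encode them. The sketch below uses `--data-urlencode`, which sends a POST request like `-d` but URL-encodes each value first. The function name `post_login` is a hypothetical helper, and the field names `user` and `pass` are taken from the command above.

```shell
# post_login URL USER PASS: send USER and PASS as form fields via HTTP POST.
# (post_login is a hypothetical helper name.)
post_login() {
  # --data-urlencode implies POST (like -d) and URL-encodes each value,
  # so special characters in a password are transmitted intact.
  curl --silent --show-error \
    --data-urlencode "user=$2" \
    --data-urlencode "pass=$3" \
    "$1"
}

# Usage (commented out; the endpoint is the placeholder used above):
# post_login "http://www.website.com/getthis/post.cgi" "James" "12345"
```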

Using cURL with proxies for better data gathering

Although you won’t have any problems with small-scale web scraping projects, increasing the number of requests may trigger the anti-bot systems that some websites employ to protect themselves. This may result in IP bans, cutting off access to the given website completely. This is where proxies come to the rescue.

A proxy server acts as the middleman between the user and the website. This offers a number of advantages, better privacy and anonymity among them, and it also makes cURL web scraping easier because it masks your IP address (or that of your web scraping bots) and helps to avoid IP bans.

The cURL manual (run `man curl` or `curl --manual`) provides a helpful guide to cURL’s options and their usage details. Here’s what it has to say about using proxies:

-x, --proxy <[protocol://][user:password@]proxyhost[:port]>

Use the specified HTTP proxy. If the port number is not specified, it is assumed at port 1080.

Thankfully, we can easily use cURL together with proxies: we only need to add a flag and its arguments, which define the proxy settings, while the rest of the command stays the same:

curl --proxy proxy:port -U "username:password" https://website.com
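As a minimal sketch, the same proxy command can be turned into a reusable function so that the proxy address and credentials are passed in rather than typed each time. The function name `fetch_via_proxy` and the example proxy address are assumptions for illustration; `--proxy` and `--proxy-user` are the long forms of `-x` and `-U`.

```shell
# fetch_via_proxy PROXY CREDENTIALS URL
#   PROXY        proxy address, e.g. "http://proxy.example:8080" (made up)
#   CREDENTIALS  "username:password" for the proxy
#   URL          page to fetch through the proxy
# (fetch_via_proxy is a hypothetical helper name.)
fetch_via_proxy() {
  curl --silent --show-error \
    --proxy "$1" \
    --proxy-user "$2" \
    "$3"
}

# Usage (commented out):
# fetch_via_proxy "http://proxy.example:8080" "username:password" "https://website.com"
```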

In addition, you can set a specific user agent when performing requests with cURL and proxies. As explained in another article on our blog, changing user agents can be beneficial because it makes the requests seem more natural (i.e., as if a real user is sending them). The -A (--user-agent) option sets the User-Agent header sent to the website; --proxy-header, by contrast, adds headers meant for the proxy itself:

curl -A "Mozilla/5.0" -x proxy https://example.com/
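A minimal sketch of combining a proxy with a custom user agent is shown below. It relies on `--user-agent`, which applies to the request that reaches the destination site. The function name `fetch_with_agent` is a hypothetical helper, and the user agent string is only a placeholder.

```shell
# fetch_with_agent PROXY USER_AGENT URL: fetch URL through PROXY while
# presenting USER_AGENT to the destination site.
# (fetch_with_agent is a hypothetical helper name.)
fetch_with_agent() {
  curl --silent --show-error \
    --proxy "$1" \
    --user-agent "$2" \
    "$3"
}

# Usage (commented out):
# fetch_with_agent "proxy:port" "Mozilla/5.0" "https://example.com/"
```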

Frequently Asked Questions

There are a few different ways to scrape data with cURL. One way is to use the -i flag to output the headers as well as the body of the response. This is helpful if you're looking for specific information in the headers, such as a tracking ID or cookie.
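To pick one header out of the `-i` output, the response can be piped through a small filter. The sketch below is one possible approach using awk: it reads the first block of headers, which ends at the first empty line, and prints the value of the first header whose name matches. The function name `header_value` is hypothetical.

```shell
# header_value NAME: read a raw HTTP response (as printed by `curl -i`)
# from standard input and print the value of the first header called NAME.
# (header_value is a hypothetical helper name.)
header_value() {
  awk -v name="$1" '
    # Headers end at the first empty line; stop before the body.
    /^\r?$/ { exit }
    {
      line = $0
      sub(/\r$/, "", line)              # drop the trailing carriage return
      colon = index(line, ":")
      if (colon == 0) next              # skip the status line
      key = substr(line, 1, colon - 1)
      if (tolower(key) == tolower(name)) {
        value = substr(line, colon + 1)
        sub(/^[ \t]+/, "", value)       # trim leading whitespace
        print value
        exit
      }
    }
  '
}

# Usage (commented out):
# curl --silent -i "https://example.com/" | header_value set-cookie
```

Header names are compared case-insensitively, since servers do not capitalize them consistently.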

Another way to scrape data with cURL is by using the -o flag followed by a filename. This will save the output of your request into the specified file. This is helpful if you're looking to save a lot of data or if you want to view the data offline.

You can also use cURL in conjunction with other programs, such as grep, sed, and awk, to further manipulate and extract the data that you're looking for.
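As a rough sketch of such a pipeline, the function below pulls the `href` values out of HTML read from standard input using grep and sed. The function name `extract_links` is hypothetical, and the pattern only handles double-quoted attributes; regular expressions are a fragile way to read HTML, so a real HTML parser is likely a better fit for anything beyond quick checks.

```shell
# extract_links: read HTML from standard input and print the value of
# every double-quoted href attribute, one per line.
# (extract_links is a hypothetical helper name.)
extract_links() {
  grep -o 'href="[^"]*"' | sed -e 's/^href="//' -e 's/"$//'
}

# Usage (commented out):
# curl --silent "https://example.com/" | extract_links
```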

urllib2 module, which comes pre-installed in most versions of Python.