- Strengths of Python for Web Scraping

- Python's Weaknesses and Limitations

- Python's Ideal Use Cases

- Strengths of JavaScript for Web Scraping

- JavaScript's Weaknesses and Limitations

- JavaScript's Ideal Use Cases

- Side-by-Side Comparison

- How Proxy Choice Impacts Scraper Performance

- Choosing the Right Stack: Key Factors

- Frequently Asked Questions

Choosing the right language for your web scraping project can make all the difference between a smooth, scalable data pipeline and one constantly struggling with errors and blocks. Among the most popular options, Python and JavaScript stand out for their versatility and ecosystem strength – but each brings unique advantages and challenges. Let's compare the two languages across key aspects like performance, scalability, and proxy integration!

Python for Web Scraping: Pros, Cons, and Use Cases

Python remains the go-to choice for most web scraping projects – and for good reason. Its simplicity, vast library ecosystem, and compatibility with a wide range of data processing tools make it ideal for both beginners and large-scale data operations.

Strengths of Python for Web Scraping

Rich library ecosystem: Python offers one of the most mature scraping ecosystems available. Requests handles HTTP, BeautifulSoup parses HTML, and the Scrapy framework manages crawling and concurrent requests, while browser automation tools like Playwright and Selenium render JavaScript-heavy websites. This makes Python especially powerful for automating repetitive scraping tasks at scale.

Easy to learn and integrate: Python’s syntax is beginner-friendly, letting teams build scrapers faster and maintain them with minimal complexity. It integrates seamlessly with data analysis and visualization tools such as pandas, NumPy, and Matplotlib, allowing you to transform and analyze collected data within the same environment.

Excellent for automation and scalability: Because of its mature frameworks and scheduling capabilities, Python excels at orchestrating large scraping operations – ideal for businesses that monitor prices, products, or content updates across thousands of pages daily.

Strong proxy compatibility: Python scraping libraries make it easy to integrate residential, datacenter, or mobile proxies, which are crucial for maintaining high success rates. Through simple configuration, developers can rotate IPs, manage sessions, and bypass rate limits – key requirements for reliable scraping at scale.
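As a rough illustration of the rotation idea, here is a minimal round-robin sketch that uses only the standard library. The proxy addresses are hypothetical placeholders, and `ProxyRotator`, `build_opener_for`, and `fetch` are names invented for this example. Requests accepts a similar `proxies` mapping, so the same pattern carries over.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints; substitute the gateway addresses from your provider.
PROXIES = [
    "http://user:pass@proxy-1.example.com:8080",
    "http://user:pass@proxy-2.example.com:8080",
    "http://user:pass@proxy-3.example.com:8080",
]


class ProxyRotator:
    """Hands out proxies in round-robin order."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(proxies)

    def next_proxy(self):
        return next(self._cycle)


def build_opener_for(proxy_url):
    """Return a urllib opener that routes HTTP and HTTPS traffic through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(url, rotator, timeout=10):
    """Fetch url through the next proxy in the rotation and return the body bytes."""
    opener = build_opener_for(rotator.next_proxy())
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

Each call to `fetch` leaves through a different exit IP, which spreads the request volume across the pool. Sticky sessions would instead reuse one proxy for a whole sequence of related requests.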

Python's Weaknesses and Limitations

Despite its versatility, Python isn’t without trade-offs.

Performance overhead: Python is generally slower than compiled languages and can struggle with CPU-bound tasks such as parsing very large documents. It also cannot execute a page's JavaScript on its own, so dynamic content requires a browser automation layer.

Handling modern web apps: Sites built on heavy JavaScript frameworks (like React or Angular) often require integration with headless browsers, increasing setup complexity and resource usage.

However, these challenges can be mitigated with efficient coding practices, asynchronous requests, and robust proxy infrastructure that ensures uninterrupted access to target sites.
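To make the asynchronous-requests point concrete, the sketch below caps the number of in-flight requests with a semaphore. It assumes you supply your own `fetch` coroutine (for example one built on aiohttp). `fake_fetch` is a hypothetical stand-in that sleeps instead of touching the network, and `fetch_all` is a name chosen for this example.

```python
import asyncio


async def fetch_all(urls, fetch, max_concurrency=5):
    """Run fetch(url) for every URL, with at most max_concurrency in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded(url):
        async with semaphore:
            return await fetch(url)

    # gather returns results in the same order as the input URLs.
    return await asyncio.gather(*(bounded(u) for u in urls))


async def fake_fetch(url):
    """Hypothetical stand-in for a real HTTP call; sleeps instead of hitting the network."""
    await asyncio.sleep(0.01)
    return f"<html>{url}</html>"
```

Calling `asyncio.run(fetch_all(urls, fake_fetch))` returns one result per URL. The concurrency cap keeps the scraper from overwhelming either the target site or a single proxy.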

Python's Ideal Use Cases

Python’s strengths make it the preferred language for:

- Market and price monitoring – Continuously tracking product or service prices across multiple platforms.

- Lead generation and data aggregation – Collecting and organizing business data for analytics or outreach.

- Research and academic projects – Gathering structured data from open sources for analysis.

- SEO and content monitoring – Analyzing search rankings, metadata, and competitor updates.

When paired with Infatica’s residential or datacenter proxies, Python scrapers gain enhanced stability and scalability, enabling accurate, large-scale data collection with fewer interruptions and bans.

JavaScript for Web Scraping: Pros, Cons, and Use Cases

While Python has long dominated the web scraping landscape, JavaScript has become increasingly popular – especially as modern websites rely more heavily on dynamic, client-side rendering. With tools like Puppeteer and Playwright, JavaScript allows developers to scrape data directly from within a browser environment, making it exceptionally effective for complex, interactive pages.

Strengths of JavaScript for Web Scraping

Native handling of dynamic content: Because JavaScript powers most modern web applications, using it for scraping provides a natural advantage. Browser automation libraries like Puppeteer and Playwright render pages, execute scripts, and extract data exactly as users see it – essential for scraping dynamic or single-page applications (SPAs). For pages that do not need rendering, Cheerio parses static HTML with a jQuery-like API.

Asynchronous execution for faster scraping: JavaScript’s asynchronous architecture enables efficient handling of multiple requests at once. When combined with Node.js, it can scrape numerous pages concurrently, so the scraper spends less time idle waiting on network responses and overall throughput increases – a major benefit for large-scale scraping operations.

Full browser control: Headless browsers allow developers to mimic real-user behavior – scrolling, clicking, logging in, or navigating through multiple pages. This makes JavaScript particularly effective for websites protected by complex front-end scripts or interaction-based authentication systems.

Proxy integration for authenticity and reliability: JavaScript-based scrapers often require a realistic browsing footprint to avoid detection. Integrating residential, static ISP, or mobile proxies allows requests to appear as genuine user traffic. Stable proxy connections reduce CAPTCHAs and access errors – and services like Infatica’s global proxy network offer the speed and reliability needed to maintain continuous browser sessions across regions.
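The browser-control flow described above can be sketched with Playwright. The example uses Playwright's Python API to keep this article's examples in one language, and the Node.js API offers equivalent calls. The function names and default timeout are assumptions made for this sketch, and Playwright plus its browser binaries must be installed before `scrape_rendered_text` will run.

```python
def proxy_settings(server, username=None, password=None):
    """Build the dict Playwright accepts as its `proxy` launch option."""
    settings = {"server": server}
    if username is not None:
        settings["username"] = username
    if password is not None:
        settings["password"] = password
    return settings


def scrape_rendered_text(url, selector, proxy=None, timeout_ms=15000):
    """Load url in headless Chromium, wait for selector, and return its text."""
    # Imported here so the helper above stays usable without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True, proxy=proxy)
        try:
            page = browser.new_page()
            page.goto(url)
            # Wait until client-side rendering has produced the element.
            page.wait_for_selector(selector, timeout=timeout_ms)
            page.mouse.wheel(0, 2000)  # scroll down, as a user would
            return page.inner_text(selector)
        finally:
            browser.close()
```

A call might look like `scrape_rendered_text("https://example.com", "h1", proxy=proxy_settings("http://proxy.example.com:8080"))`, where both addresses are placeholders.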

JavaScript's Weaknesses and Limitations

Despite its power, JavaScript scraping comes with challenges:

Higher resource consumption: Browser-based scrapers use far more CPU and memory than lightweight HTTP-based tools such as Python’s Requests or Node.js with Cheerio.

Steeper learning curve for scaling: While Node.js offers performance benefits, setting up distributed, fault-tolerant scraping clusters requires more technical expertise.

Maintenance complexity: Frequent front-end updates can break scraping logic, especially on websites that heavily rely on dynamic selectors or obfuscated code.

JavaScript's Ideal Use Cases

JavaScript excels in scenarios where interaction, rendering, or dynamic loading are critical:

- Scraping single-page applications (SPAs) that rely on real-time data.

- Monitoring social media or e-commerce platforms that use infinite scroll or AJAX updates.

- Capturing user-generated content that only appears after certain browser events.

- Testing and automation – using headless browsers for quality assurance and UX validation.

When combined with Infatica’s mobile or static ISP proxies, JavaScript scrapers can maintain long, stable browser sessions that closely replicate real-user conditions – ideal for projects where reliability and authenticity are key.

Side-by-Side Comparison

| Feature | Python | JavaScript |
|---|---|---|
| Ease of Use | Simple syntax and easy learning curve; ideal for beginners. | Moderate difficulty; requires familiarity with asynchronous code. |
| Speed and Performance | Slower in execution but highly efficient for structured data pipelines. | Faster for real-time, asynchronous scraping tasks. |
| Handling Dynamic Content | Requires browser automation (e.g., Playwright, Selenium). | Natively handles dynamic, JS-rendered pages. |
| Library Ecosystem | Mature ecosystem: BeautifulSoup, Scrapy, Requests, pandas. | Strong browser-based tools: Puppeteer, Playwright, Cheerio. |
| Scalability | Excellent with frameworks like Scrapy and multiprocessing tools. | Scalable with Node.js clustering but more complex to manage. |
| Integration with Data Tools | Native integration with analytics and ML tools. | Less suited for post-processing, more for data collection. |
| Proxy Integration | Seamless configuration for rotating residential or datacenter proxies. | Works best with stable residential, ISP, or mobile proxies for persistent sessions. |
| Best For | Large-scale, data-heavy scraping and analytics pipelines. | Browser-level scraping, interactive content, and modern web apps. |

How Proxy Choice Impacts Scraper Performance

Regardless of which language you use – Python or JavaScript – the effectiveness of your web scraping setup ultimately depends on one key factor: proxy quality. Even the most efficient scraper will fail if requests are repeatedly blocked, throttled, or misrouted. That’s why selecting the right proxy infrastructure is essential for maintaining performance, consistency, and scalability.

Overcoming Common Web Scraping Challenges

Modern websites are designed to detect and block automated traffic. These defenses include:

- Rate limiting – restricting the number of requests per IP or session.

- Geo-blocking – limiting content based on user location.

- CAPTCHAs and IP bans – triggered by repetitive or suspicious behavior.

Proxies act as intermediaries between your scraper and the target site, masking your IP and distributing requests across multiple nodes to prevent detection. Without a proxy network, even small-scale scraping projects risk bans and inconsistent data collection.
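One common way to handle rate limits and temporary blocks is to retry through a different proxy after a growing pause. The sketch below assumes a caller-supplied `fetch(url, proxy)` callable that raises the hypothetical `Blocked` exception when the site answers with something like HTTP 429 or 403. The names and default delays are illustrative, not recommendations.

```python
import time


class Blocked(Exception):
    """Raised by the fetch callable when the target responds with a block."""


def fetch_with_retries(url, fetch, proxies, max_attempts=4, base_delay=1.0,
                       sleep=time.sleep):
    """Try url through successive proxies, backing off exponentially after each block."""
    if max_attempts < 1 or not proxies:
        raise ValueError("need at least one attempt and one proxy")
    last_error = None
    for attempt in range(max_attempts):
        proxy = proxies[attempt % len(proxies)]  # move to the next proxy each try
        try:
            return fetch(url, proxy)
        except Blocked as error:
            last_error = error
            if attempt < max_attempts - 1:
                sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ...
    raise last_error
```

Passing `sleep` as a parameter keeps the function easy to test, because a test can record the delays instead of waiting for them.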

Matching Proxy Type to Your Scraping Stack

| Language / Tool | Recommended Proxy Type | Why It Works |
|---|---|---|
| Python (Scrapy, Requests) | Datacenter proxies / Residential proxies | High throughput and efficient IP rotation for large-scale scraping. |
| Python (Playwright, Selenium) | Residential proxies / Static ISP proxies | Handles dynamic pages with stable, real-device IPs for persistent sessions. |
| JavaScript (Puppeteer, Playwright) | Static ISP proxies / Mobile proxies | Maintains persistent sessions and authentic browser fingerprints for realistic browsing. |
| JavaScript (Cheerio) | Datacenter proxies | Fast, lightweight scraping for simpler HTML pages where speed and cost-efficiency matter. |

Choosing the Right Stack: Key Factors

The most effective scraping setups balance efficiency, scalability, and reliability, while minimizing detection risks and maintenance overhead.

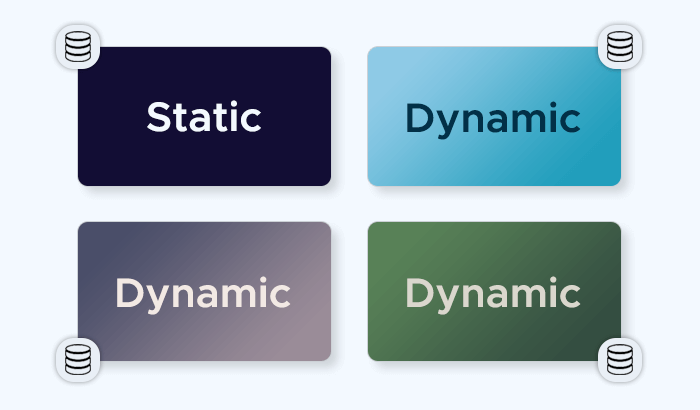

Type of Target Website

The nature of the website you’re scraping plays a major role in determining the right stack:

- Static or lightly dynamic sites: Python excels with lightweight libraries like Scrapy or Requests, which efficiently handle HTML parsing and large data volumes.

- Dynamic or JavaScript-heavy sites: JavaScript (with Puppeteer or Playwright) performs best, as it naturally handles rendering and complex front-end logic.

When websites use advanced anti-bot scripts, pairing your language of choice with residential or ISP proxies helps simulate genuine user behavior and prevent detection.
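A quick way to tell which category a site falls into is to check whether the data you need already appears in the raw HTML response. The sketch below uses the standard library's `html.parser` as a stand-in for a fuller parser such as BeautifulSoup. `VisibleText` and `is_in_static_html` are names made up for this example.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text nodes, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def is_in_static_html(html, expected_text):
    """True if expected_text appears in the markup the server sent, before any JS runs."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return expected_text in " ".join(parser.chunks)
```

If a value you can see in the browser is missing from the fetched HTML, the page is probably rendered client-side and a headless browser is likely the better fit.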

Data Volume and Frequency

- High-frequency scraping – such as monitoring prices or availability across thousands of pages – benefits from Python’s strong concurrency support and data pipeline integration.

- Low- to medium-frequency scraping that requires user interaction or dynamic rendering is often simpler in JavaScript using headless browsers.

Performance, Scalability, and Resource Constraints

If speed and low resource consumption are top priorities, Python’s asynchronous tooling (asyncio, typically paired with an HTTP client such as aiohttp) offers efficient request management. If your scraper must maintain stateful browser sessions, JavaScript’s event-driven model is more suitable, especially when paired with stable proxy connections to maintain session continuity.

For enterprise-scale setups, integrating proxy rotation, load balancing, and request throttling ensures stability – areas where Infatica’s network-level tools can greatly simplify implementation.
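Request throttling in particular is simple to prototype. The class below is a minimal sketch that spaces calls a fixed interval apart. `Throttle` and its parameters are hypothetical names, and the clock and sleep functions are injectable purely to make the behavior testable.

```python
import time


class Throttle:
    """Spaces successive calls to wait() at least 1/requests_per_second apart."""

    def __init__(self, requests_per_second, clock=time.monotonic, sleep=time.sleep):
        if requests_per_second <= 0:
            raise ValueError("requests_per_second must be positive")
        self._min_interval = 1.0 / requests_per_second
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = None  # no request has been made yet

    def wait(self):
        """Block until the next request is allowed, then reserve the following slot."""
        now = self._clock()
        if self._next_allowed is not None and now < self._next_allowed:
            self._sleep(self._next_allowed - now)
            now = self._next_allowed
        self._next_allowed = now + self._min_interval
```

Calling `throttle.wait()` before each request keeps a single worker under the chosen rate. A distributed setup would need a shared limiter instead.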

Anti-Bot Measures and Access Challenges

Even the best codebase fails if target sites aggressively block requests. Robust proxy rotation combined with user-agent diversification and cookie management can prevent detection.

- Python frameworks like Scrapy provide a downloader middleware layer where proxies and headers can be rotated, either with a custom middleware or a community plugin.

- JavaScript scrapers can use proxy APIs for on-demand IP switching during browser sessions.
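For the Scrapy case, a rotation middleware could look roughly like the sketch below. It relies on the fact that Scrapy routes a request through whatever proxy is set in `request.meta["proxy"]`. The class name, proxy addresses, and user-agent strings are all hypothetical. The class imports nothing from Scrapy, since a downloader middleware only needs to expose a `process_request` method.

```python
import random

# Hypothetical values for illustration only.
PROXIES = [
    "http://proxy-1.example.com:8080",
    "http://proxy-2.example.com:8080",
]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_0) ExampleBrowser/1.0",
]


class RotatingProxyMiddleware:
    """Downloader-middleware-style class: picks a proxy and User-Agent per request."""

    def __init__(self, proxies=PROXIES, user_agents=USER_AGENTS, rng=None):
        self.proxies = list(proxies)
        self.user_agents = list(user_agents)
        self.rng = rng or random.Random()

    def process_request(self, request, spider):
        # Scrapy sends the request through the proxy named in request.meta["proxy"].
        request.meta["proxy"] = self.rng.choice(self.proxies)
        request.headers["User-Agent"] = self.rng.choice(self.user_agents)
        return None  # let the request continue through the middleware chain
```

In a real project the class would be enabled through the `DOWNLOADER_MIDDLEWARES` setting. Random selection is only one policy, and round-robin or health-based selection would slot into the same method.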

Infatica’s global proxy pool provides multiple IP types and regions, making it easier to adapt to changing target-site defenses.

Reliable Scraping Starts with Reliable Infrastructure

Infatica’s global proxy network – spanning residential, datacenter, ISP, and mobile proxies – empowers developers to scrape at scale, access localized content, and maintain uninterrupted data flows.

Build your scraping stack on a reliable foundation – explore Infatica’s proxy solutions today.