When working with data on the web, developers often face a common challenge: information is available in HTML format, but applications, APIs, or data pipelines require it in a structured format like JSON. HTML is designed for displaying content in browsers, while JSON is a lightweight format optimized for data storage, transfer, and processing. This article will guide you through the different approaches to converting HTML into JSON, provide step-by-step code examples in Python and JavaScript, and highlight best practices to ensure clean and usable outputs.

What Are HTML and JSON? (Quick Refresher)

Before diving into conversion methods, it’s important to understand the two formats we’re working with:

HTML (HyperText Markup Language)

The standard language used to structure and display content on the web. It organizes information with tags like <div>, <p>, and <table>, plus attributes that give context to the data. While ideal for human viewing in browsers, HTML isn’t inherently structured for data processing.

JSON (JavaScript Object Notation)

A lightweight, text-based format for representing structured data. It stores data as objects (key–value pairs) and arrays, making it easy to parse and manipulate. It’s widely used in APIs, databases, and web applications because it’s both human-readable and machine-friendly.

Why Convert HTML to JSON?

- Data usability: JSON can be consumed by scripts, databases, and APIs with minimal overhead.

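- Consistency: Unlike HTML, which browsers render even when it is malformed, JSON has a strict syntax, so a parser either accepts a document or rejects it. That predictability makes automation easier.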

- Flexibility: JSON works seamlessly across programming languages and platforms, making it ideal for integrating extracted web data into applications.

Approaches to Converting HTML to JSON

There’s no single “correct” way to transform HTML into JSON. The best approach depends on the complexity of the data, your programming language of choice, and the scale of the project. Below are the most common methods:

Manual Parsing

How it works: String operations or regular expressions extract the text between HTML tags.

When to use: Only for very simple and predictable structures.

Limitations: HTML is hierarchical and often messy; regex-only parsing is error-prone and difficult to maintain.
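
To make the trade-off concrete, here is a minimal sketch of regex-only extraction, assuming markup that is perfectly regular. The pattern and the sample strings are illustrative, not a recommended approach:

```python
import json
import re

html = (
    '<ul id="products">'
    '<li data-id="101">Laptop</li>'
    '<li data-id="102">Smartphone</li>'
    '</ul>'
)

# Matches <li data-id="...">text</li> only when attribute order, quoting,
# and nesting are exactly as written in the pattern.
pattern = re.compile(r'<li data-id="(\d+)">([^<]*)</li>')

products = [{"id": m.group(1), "name": m.group(2)} for m in pattern.finditer(html)]
print(json.dumps(products))

# A small markup change (single quotes, an extra attribute) breaks the match
# without raising any error.
changed = "<li class='item' data-id='103'>Tablet</li>"
print(pattern.findall(changed))  # no matches
```

The second call returning nothing, rather than failing loudly, is what makes this approach hard to maintain.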

Using a Parser Library

How it works: Dedicated libraries convert raw HTML into a structured document tree (DOM). You can then navigate elements and extract the data you need. Examples: BeautifulSoup, lxml (Python), Cheerio, JSDOM (JavaScript/Node.js).

Advantages: More reliable than manual parsing, easier to handle nested structures, widely supported.

Limitations: Requires some coding knowledge, and building a full document tree in memory can be slow for very large documents.

Dedicated HTML-to-JSON Converters

How it works: Specialized tools or libraries take an entire HTML document and automatically transform it into JSON.

Advantages: Fast, convenient, minimal setup.

Limitations: Less control over the output, results may need additional cleaning, and online tools are impractical for large-scale or automated tasks.
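
As a rough illustration of what such a converter does internally, the sketch below turns every element into a nested dictionary using only Python’s standard-library html.parser. The class name, the output keys (tag, attrs, text, children), and the sample markup are assumptions made for this example; real converter tools choose their own output shape.

```python
import json
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not stay on the stack.
VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}


class HtmlToDict(HTMLParser):
    """Builds a nested dict {tag, attrs, text, children} for every element."""

    def __init__(self):
        super().__init__()
        self.root = {"tag": "document", "attrs": {}, "text": "", "children": []}
        self.stack = [self.root]

    def handle_starttag(self, tag, attrs):
        node = {"tag": tag, "attrs": dict(attrs), "text": "", "children": []}
        self.stack[-1]["children"].append(node)
        if tag not in VOID_TAGS:
            self.stack.append(node)

    def handle_endtag(self, tag):
        # Pop back to the matching open element; ignore stray closing tags.
        for i in range(len(self.stack) - 1, 0, -1):
            if self.stack[i]["tag"] == tag:
                del self.stack[i:]
                break

    def handle_data(self, data):
        # Text is attached to the innermost open element, trimmed of whitespace.
        self.stack[-1]["text"] += data.strip()


def html_to_json(html: str) -> str:
    parser = HtmlToDict()
    parser.feed(html)
    parser.close()
    return json.dumps(parser.root, indent=2)


doc = '<div class="card"><h2>Laptop</h2><p>In stock</p></div>'
print(html_to_json(doc))
```

The result mirrors the page layout rather than your data model, which is why converter output usually needs a second pass to pick out the fields you actually want.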

Headless Browsers and Scraping Frameworks

How it works: For pages that generate content dynamically with JavaScript, tools like Puppeteer (Node.js) or Playwright can render the page, capture the final HTML, and allow structured extraction before converting to JSON.

Advantages: Handles complex websites that don’t provide static HTML.

Limitations: Heavier setup, slower performance, higher resource consumption.

Real-World Examples

To make the process more concrete, let’s walk through how to extract data from HTML and convert it into JSON. We’ll use two popular programming languages: Python and JavaScript (Node.js).

Python Example with BeautifulSoup

Python is widely used for web scraping and data processing. Here’s how you can parse HTML and output JSON:

from bs4 import BeautifulSoup
import json

# Sample HTML
html = """
<html>
  <body>
    <ul id="products">
      <li data-id="101">Laptop</li>
      <li data-id="102">Smartphone</li>
      <li data-id="103">Tablet</li>
    </ul>
  </body>
</html>
"""

# Parse the HTML
soup = BeautifulSoup(html, "html.parser")

# Extract product list
products = []
for li in soup.select("#products li"):
    products.append({
        "id": li["data-id"],
        "name": li.get_text()
    })

# Convert to JSON
json_output = json.dumps(products, indent=2)
print(json_output)

Output:

[
  {
    "id": "101",
    "name": "Laptop"
  },
  {
    "id": "102",
    "name": "Smartphone"
  },
  {
    "id": "103",
    "name": "Tablet"
  }
]

This demonstrates how BeautifulSoup helps navigate HTML structures and extract meaningful data.

JavaScript Example with Cheerio

For Node.js developers, Cheerio offers jQuery-like syntax for parsing HTML:

const cheerio = require("cheerio");

// Sample HTML
const html = `
<html>
  <body>
    <ul id="products">
      <li data-id="101">Laptop</li>
      <li data-id="102">Smartphone</li>
      <li data-id="103">Tablet</li>
    </ul>
  </body>
</html>
`;

// Load HTML into Cheerio
const $ = cheerio.load(html);

// Extract product list
const products = [];
$("#products li").each((_, el) => {
  products.push({
    id: $(el).attr("data-id"),
    name: $(el).text()
  });
});

// Convert to JSON
console.log(JSON.stringify(products, null, 2));

Output:

[
  {
    "id": "101",
    "name": "Laptop"
  },
  {
    "id": "102",
    "name": "Smartphone"
  },
  {
    "id": "103",
    "name": "Tablet"
  }
]

Key Points

- Both Python and JavaScript use parser libraries to transform HTML into navigable structures.

- Extracted elements are mapped into objects or arrays, which can then be serialized into JSON.

- The same logic applies to more complex data (tables, nested divs, or attributes).

Handling Complex Cases

In real-world scenarios, converting HTML into JSON isn’t always as straightforward as parsing a simple list. Websites often contain nested structures, inconsistent markup, or dynamically generated content. Here are some common challenges and strategies to address them:

Nested HTML Structures

Problem: Data is embedded in multiple layers of tags, such as tables within tables or deeply nested <div> elements.

Solution: Use recursive parsing or targeted selectors. For example, in BeautifulSoup or Cheerio, you can chain selectors to drill down into specific levels of the DOM.
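
The recursive approach can be sketched as follows. To stay dependency-free, this example assumes a well-formed snippet and reads it with the standard-library xml.etree.ElementTree; real pages usually need a forgiving HTML parser instead. The function name and the name/children keys are chosen for illustration.

```python
import json
import xml.etree.ElementTree as ET

# Well-formed sample, so the standard-library XML parser can read it.
html = """
<ul>
  <li>Computers
    <ul>
      <li>Laptops</li>
      <li>Desktops</li>
    </ul>
  </li>
  <li>Phones</li>
</ul>
"""


def list_to_tree(ul):
    """Recursively convert a <ul> element into a list of {name, children} dicts."""
    items = []
    for li in ul.findall("li"):      # direct <li> children only
        nested = li.find("ul")       # a sub-list directly inside this item, if any
        items.append({
            "name": (li.text or "").strip(),
            "children": list_to_tree(nested) if nested is not None else [],
        })
    return items


tree = list_to_tree(ET.fromstring(html.strip()))
print(json.dumps(tree, indent=2))
```

Each level of nesting in the markup becomes one level of nesting in the JSON, so the depth of the source does not need to be known in advance.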

Inconsistent or Malformed HTML

Problem: Many web pages contain broken tags or inconsistent formatting, making parsing unreliable.

Solution: Parser libraries like BeautifulSoup or lxml are designed to handle imperfect HTML. When inconsistencies remain, apply data validation and cleanup rules before converting to JSON.
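
As a small illustration of tolerant parsing followed by a cleanup rule, the sketch below reads a list with missing closing tags. It uses Python’s standard-library html.parser rather than BeautifulSoup or lxml, and the class name and sample markup are assumptions for this example.

```python
import json
from html.parser import HTMLParser


class ListItemCollector(HTMLParser):
    """Collects the text of each <li>, even when closing tags are missing."""

    def __init__(self):
        super().__init__()
        self.items = []
        self.in_item = False

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            # A new <li> implicitly ends the previous one.
            self.items.append("")
            self.in_item = True

    def handle_endtag(self, tag):
        if tag in ("li", "ul"):
            self.in_item = False

    def handle_data(self, data):
        if self.in_item:
            self.items[-1] += data


# Two of the three <li> elements are never closed.
broken = "<ul><li>Laptop<li> Smartphone </li><li>Tablet</ul>"
collector = ListItemCollector()
collector.feed(broken)
collector.close()

# Cleanup rule applied before serializing: trim whitespace, drop empty items.
names = [text.strip() for text in collector.items if text.strip()]
print(json.dumps(names))
```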

Extracting Tabular Data

Problem: HTML tables often represent structured data, but converting them into arrays of objects can be tricky.

Solution: Use the <th> headers as JSON keys, then loop through the remaining rows and map each <td> cell to its header to produce a list of objects.
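
A minimal sketch of that header-to-cell mapping, assuming a simple table with one header row and no merged cells. It uses the standard-library html.parser, and the class name and sample data are illustrative:

```python
import json
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Turns a simple table into a list of dicts keyed by the <th> headers."""

    def __init__(self):
        super().__init__()
        self.headers = []
        self.rows = []
        self._row = None    # cell values of the row being read
        self._cell = None   # text of the cell being read, or None outside cells

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("th", "td"):
            self._cell = ""

    def handle_data(self, data):
        if self._cell is not None:
            self._cell += data

    def handle_endtag(self, tag):
        if tag in ("th", "td") and self._cell is not None:
            value = self._cell.strip()
            if tag == "th":
                self.headers.append(value)
            elif self._row is not None:
                self._row.append(value)
            self._cell = None
        elif tag == "tr":
            if self._row:  # the header row has no <td> cells, so it is skipped
                self.rows.append(dict(zip(self.headers, self._row)))
            self._row = None


html = """
<table>
  <tr><th>id</th><th>name</th><th>price</th></tr>
  <tr><td>101</td><td>Laptop</td><td>999.00</td></tr>
  <tr><td>102</td><td>Smartphone</td><td>599.00</td></tr>
</table>
"""

parser = TableParser()
parser.feed(html)
parser.close()
print(json.dumps(parser.rows, indent=2))
```

All values come out as strings; converting them to numbers is a separate step that belongs with schema normalization.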

Handling Dynamic Content

Problem: Some sites load data with JavaScript, meaning the initial HTML source doesn’t contain the content you need.

Solution: Use headless browsers (Puppeteer, Playwright, Selenium) to render the page before parsing. This ensures the extracted HTML matches what a user would see.
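
A rough sketch of the rendering step with Playwright’s synchronous Python API is shown below. It assumes Playwright and a Chromium build are installed; the function name and its parameters are hypothetical, chosen for this example.

```python
def fetch_rendered_html(url: str, wait_for: str) -> str:
    """Render a page in headless Chromium and return the final HTML.

    Assumes `pip install playwright` and `playwright install chromium`
    have been run beforehand.
    """
    # Imported lazily so the module loads even where Playwright is absent.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        page = browser.new_page()
        page.goto(url)
        # Wait until client-side JavaScript has inserted the target elements.
        page.wait_for_selector(wait_for)
        html = page.content()
        browser.close()
    return html
```

A call such as fetch_rendered_html("https://example.com/products", "#products li") (a placeholder URL and selector) would return the rendered markup as a string, which can then be passed to any of the parsers shown in this article.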

Large-Scale Extraction

Problem: Parsing thousands of pages or very large HTML documents can strain memory and slow performance.

Solution: Use streaming parsers (e.g., Python’s lxml.etree.iterparse) to process HTML in chunks, optimize your extraction logic to capture only the elements you need, and store intermediate results before building the final JSON dataset.
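
The chunked idea can be sketched without third-party packages. This example swaps in the standard-library html.parser, whose feed() method accepts input piece by piece, in place of lxml, and writes one JSON object per line as an intermediate result. The class and function names and the tiny chunk size are assumptions for illustration.

```python
import io
import json
from html.parser import HTMLParser


class StreamingItemParser(HTMLParser):
    """Writes one JSON line per <li> as soon as its closing tag is seen."""

    def __init__(self, out):
        super().__init__()
        self.out = out
        self.current = None   # dict for the <li> being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            self.current = {"id": dict(attrs).get("data-id"), "name": ""}

    def handle_data(self, data):
        # Text may arrive in several pieces when a chunk boundary splits it.
        if self.current is not None:
            self.current["name"] += data

    def handle_endtag(self, tag):
        if tag == "li" and self.current is not None:
            self.current["name"] = self.current["name"].strip()
            self.out.write(json.dumps(self.current) + "\n")
            self.current = None


def stream_to_jsonl(source, out, chunk_size=65536):
    """Read `source` in chunks so the whole document is never held in memory."""
    parser = StreamingItemParser(out)
    while True:
        chunk = source.read(chunk_size)
        if not chunk:
            break
        parser.feed(chunk)
    parser.close()


source = io.StringIO(
    '<ul><li data-id="101">Laptop</li><li data-id="102">Smartphone</li></ul>'
)
out = io.StringIO()
stream_to_jsonl(source, out, chunk_size=16)  # tiny chunks to exercise the boundaries
print(out.getvalue())
```

In practice `source` and `out` would be open files, and the line-delimited output can be merged into a single JSON dataset once every page has been processed.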

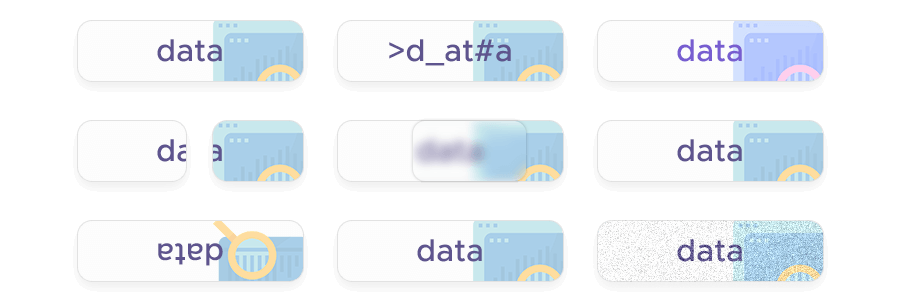

Best Practices

Converting HTML into JSON goes beyond simply extracting elements – the way you structure, clean, and validate your output determines how useful it will be. Following best practices ensures your JSON data is reliable, scalable, and easy to integrate into downstream systems.

Clean and Sanitize Extracted Data

- Strip unnecessary whitespace and hidden tags, and decode HTML entities (for example, &amp; to &).

- Normalize character encoding to avoid issues with special symbols.

- Remove duplicate or irrelevant elements that could clutter your dataset.
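
These cleanup steps might look like the following sketch, which uses only the standard library. The helper names and sample values are made up for illustration.

```python
import html
import re
import unicodedata


def clean_text(raw: str) -> str:
    """Decode entities, normalize Unicode, and collapse whitespace."""
    text = html.unescape(raw)                   # &amp; -> &, &nbsp; -> U+00A0
    text = unicodedata.normalize("NFKC", text)  # folds U+00A0 into a regular space
    return re.sub(r"\s+", " ", text).strip()


def dedupe(values):
    """Drop empty strings and repeated values, keeping first-seen order."""
    return list(dict.fromkeys(v for v in values if v))


raw_names = ["  Laptop&nbsp;Pro ", "Laptop Pro", "Caf&eacute;\n Table", ""]
cleaned = dedupe(clean_text(name) for name in raw_names)
print(cleaned)
```

The first two entries collapse into one because, after cleaning, they are the same string.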

Maintain a Consistent Schema

- Define clear keys for your JSON objects (e.g., id, name, price).

- Apply consistent naming conventions and data types across all records.

- If working with multiple pages or sources, ensure the structure remains uniform.
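
One way to enforce a uniform structure is to route every scraped record through a single typed model. The sketch below assumes a hypothetical Product schema and two sources that name their fields differently:

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Product:
    id: int
    name: str
    price: float


def to_product(raw: dict) -> Product:
    """Map differently named source fields onto one schema with fixed types."""
    return Product(
        id=int(raw.get("id") or raw.get("data-id")),
        name=str(raw.get("name") or raw.get("title")).strip(),
        price=float(str(raw.get("price", "0")).replace("$", "").replace(",", "")),
    )


# Records as they might arrive from two different page layouts.
scraped = [
    {"data-id": "101", "title": " Laptop ", "price": "$1,299.00"},
    {"id": 102, "name": "Smartphone", "price": "599"},
]

records = [asdict(to_product(r)) for r in scraped]
print(json.dumps(records, indent=2))
```

Every output object has the same keys and types regardless of which source it came from, so downstream code needs only one code path.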

Validate JSON Outputs

- Use validation tools or schema definitions (like JSON Schema) to check the integrity of your data.

- Catch errors early, such as missing keys or malformed values.
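
A lightweight check along these lines can be sketched in plain Python. The REQUIRED_FIELDS mapping is a hypothetical stand-in for a schema; a full JSON Schema validator covers far more cases than this hand-rolled version.

```python
import json

# Hypothetical schema: required key -> expected Python type.
REQUIRED_FIELDS = {"id": str, "name": str}


def validate_record(record: dict) -> list:
    """Return a list of human-readable problems; an empty list means valid."""
    errors = []
    for key, expected in REQUIRED_FIELDS.items():
        if key not in record:
            errors.append(f"missing key: {key}")
        elif not isinstance(record[key], expected):
            actual = type(record[key]).__name__
            errors.append(f"{key}: expected {expected.__name__}, got {actual}")
        elif expected is str and not record[key].strip():
            errors.append(f"{key}: empty value")
    return errors


payload = '[{"id": "101", "name": "Laptop"}, {"id": 102}, {"id": "103", "name": " "}]'
records = json.loads(payload)

for index, record in enumerate(records):
    problems = validate_record(record)
    print(index, "ok" if not problems else problems)
```

Running the check right after extraction means a changed page layout shows up as a validation failure instead of as bad data further down the pipeline.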

Avoid Regex-Only Parsing

- While regex can be useful for extracting simple patterns, it shouldn’t replace a DOM parser.

- Combining regex with a structured library approach can work, but regex alone is too brittle for complex HTML.

Respect Website Policies and Ethics

- Always check robots.txt before scraping.

- Avoid overwhelming servers by using rate-limiting or caching.

- Use proxies responsibly if you’re handling large-scale data collection.
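
The first two points can be sketched with the standard library’s urllib.robotparser. The crawler name, the robots.txt content, and the URLs below are placeholders, and the fetch callable is left to the caller:

```python
import time
from urllib.robotparser import RobotFileParser

USER_AGENT = "demo-bot"  # hypothetical crawler name

# Normally loaded with set_url(...) and read(); parsed from text here so the
# sketch runs offline.
robots_txt = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

robots = RobotFileParser()
robots.parse(robots_txt.splitlines())


def allowed_urls(urls):
    """Keep only the URLs that robots.txt permits for this user agent."""
    return [u for u in urls if robots.can_fetch(USER_AGENT, u)]


def polite_fetch(urls, fetch, delay=None):
    """Call fetch(url) for each allowed URL, pausing between requests."""
    if delay is None:
        # Fall back to one second when the site declares no Crawl-delay.
        delay = robots.crawl_delay(USER_AGENT) or 1.0
    results = {}
    for url in allowed_urls(urls):
        results[url] = fetch(url)
        time.sleep(delay)
    return results


urls = ["https://example.com/products", "https://example.com/private/orders"]
print(allowed_urls(urls))
```

robots.txt is only one signal; a site’s terms of service may impose further limits that no parser can check for you.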

Plan for Scalability

- If processing thousands of pages, build modular scripts that can be parallelized.

- Consider logging and error handling for resilience.

- Store JSON outputs in databases or data lakes for efficient querying and reuse.
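
As a sketch of the first two points, the example below runs a per-page function in a thread pool and logs failures instead of stopping the whole batch. The parse_page function is a deliberately simple placeholder for real extraction logic, and the page contents are invented.

```python
import json
import logging
from concurrent.futures import ThreadPoolExecutor, as_completed

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("html2json")


def parse_page(html: str) -> dict:
    """Hypothetical per-page step; replace with real extraction logic."""
    if "<title>" not in html:
        raise ValueError("no <title> element")
    title = html.split("<title>", 1)[1].split("</title>", 1)[0]
    return {"title": title.strip()}


def convert_all(pages: dict, workers: int = 4):
    """Parse pages concurrently; return (results, failures) keyed by page name."""
    results, failures = {}, {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {pool.submit(parse_page, html): name for name, html in pages.items()}
        for future in as_completed(futures):
            name = futures[future]
            try:
                results[name] = future.result()
            except Exception as exc:
                # One bad page is logged and recorded, not fatal.
                log.warning("skipping %s: %s", name, exc)
                failures[name] = str(exc)
    return results, failures


pages = {
    "a.html": "<title>Laptop</title>",
    "b.html": "<p>broken</p>",
    "c.html": "<title> Tablet </title>",
}
results, failures = convert_all(pages)
print(json.dumps(results, sort_keys=True))
```

Threads suit workloads dominated by network waits; for CPU-heavy parsing, a process pool is usually the better fit.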
