A business can hardly survive the competition if it lacks information. That’s why data science has become so valuable and so much in demand over the past few years. Companies around the world are trying to navigate the maze of Big Data and extract useful insights that show them what to do next. Data wrangling plays a key role here.

What is data wrangling?

Data wrangling, or data munging, is the process of cleaning and transforming raw information. Its goal is to make data more understandable and easier to work with.

When information is gathered from the internet or another source, it’s “raw”: it might contain false or outdated data, errors, irrelevant pieces, and so on. Data wrangling reworks raw information, making it more coherent and standardized so that it’s easier to process further.

It’s no exaggeration to say that data wrangling is the biggest part of a data scientist’s job. It’s a time- and resource-consuming process that requires a lot of experience, knowledge, and advanced tools to execute correctly. But the result is rewarding: munged data is clean and easy for people to understand and analyze.

In general terms, the data wrangling process consists of:

- Data gathering,

- Putting pieces of information together according to the requirements of a project, and

- Removing false and irrelevant data and filling in missing pieces of information.

How is data wrangling done?

Once the information is gathered from the web or any other source, the first step is data preprocessing. During this stage, data scientists get rid of the most obvious mistakes to prepare the information for more precise processing.

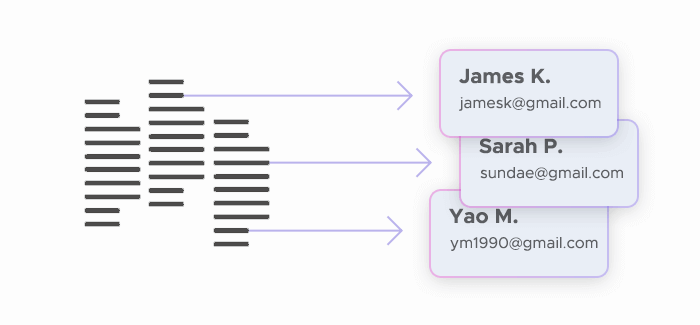
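To make the preprocessing step more concrete, here is a minimal Python sketch. It assumes the scraped data arrives as a list of dictionaries with string values; the sample rows and the `preprocess` helper are hypothetical, and the sketch handles only the most obvious problems: stray whitespace, empty rows, and exact duplicates.

```python
# Hypothetical scraped rows; every value is assumed to be a string.
raw_rows = [
    {"name": "  Alice Smith ", "email": "alice@example.com"},
    {"name": "Alice Smith", "email": "alice@example.com"},  # duplicate once trimmed
    {"name": "", "email": ""},                              # empty row
    {"name": "Bob Jones", "email": " BOB@EXAMPLE.COM"},
]

def preprocess(rows):
    """Trim whitespace, drop rows with no data, and remove exact duplicates."""
    seen = set()
    cleaned = []
    for row in rows:
        # Strip surrounding whitespace from every field.
        trimmed = {key: value.strip() for key, value in row.items()}
        # Skip rows where every field is empty.
        if not any(trimmed.values()):
            continue
        # Sorted items form a hashable key, so identical rows are detected.
        key = tuple(sorted(trimmed.items()))
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(trimmed)
    return cleaned

print(preprocess(raw_rows))
```

Note that the uppercase email is left alone here: changing the form of valid values is a job for the standardization step rather than for this first cleanup pass.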

The next step is data standardization. At this point, information is transformed into a more understandable, structured format. For example, say your goal is to gather leads, so you’ve scraped social media profiles. Now you need to split this information into fields: name, email, other contact data, and so on.
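As a rough sketch of standardization, the snippet below splits one scraped profile string into named fields. It assumes a hypothetical pipe-separated format with the name first, and the regular expressions are deliberately simple illustrations, not robust email or phone validators.

```python
import re

# Hypothetical raw text scraped from a profile page (pipe-separated, name first).
raw_profile = "Jane Doe | Marketing Lead | jane.doe@example.com | +1 555-0100"

# Simplified patterns for illustration only.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{6,}\d")

def standardize(text):
    """Split one raw profile string into a dictionary of named fields."""
    parts = [part.strip() for part in text.split("|")]
    email = EMAIL_RE.search(text)
    phone = PHONE_RE.search(text)
    return {
        "name": parts[0] or None,  # assumes the name is always the first field
        "email": email.group(0).lower() if email else None,
        "phone": phone.group(0) if phone else None,
    }

print(standardize(raw_profile))
```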

Once the data is structured, it’s easier for data scientists to spot mistakes, noise, or missing elements and fix them. When the information seems complete and free of errors, the next step is to combine all the cleaned data into a coherent whole so that it’s centralized and easy to consume.
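The sketch below illustrates spotting gaps and consolidating cleaned records into one dataset. The two sources, the `FIELD_MAP` renaming table, the required fields, and the `"unknown"` placeholder are all hypothetical choices; a real project would set them from its own requirements.

```python
# Hypothetical cleaned records from two sources that name their fields differently.
source_a = [
    {"name": "Jane Doe", "email": "jane@example.com", "city": None},
]
source_b = [
    {"full_name": "John Roe", "mail": "john@example.com", "city": "Berlin"},
    {"full_name": "No Contact", "mail": ""},
]

# Hypothetical mapping from each source's field names onto one shared schema.
FIELD_MAP = {"full_name": "name", "mail": "email"}
REQUIRED = ("name", "email")

def consolidate(*sources, default_city="unknown"):
    """Merge several sources into one list that uses the same field names."""
    combined, rejected = [], []
    for source in sources:
        for record in source:
            # Rename fields so every record uses the shared keys.
            row = {FIELD_MAP.get(key, key): value for key, value in record.items()}
            # Set aside records missing a required field instead of guessing it.
            if any(not row.get(field) for field in REQUIRED):
                rejected.append(row)
                continue
            # Fill an optional gap with an explicit placeholder.
            if not row.get("city"):
                row["city"] = default_city
            combined.append(row)
    return combined, rejected

combined, rejected = consolidate(source_a, source_b)
print(combined)
print(rejected)
```

Keeping rejected records in a separate list, rather than silently dropping them, makes it easy to review what was excluded and why.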

It might seem that all the work is done at this point, but in reality, data scientists have more to do. They need to match the information against existing datasets to build a more comprehensive database. Finally, the information has to be filtered to make sure it meets the requirements that were initially set.
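Matching and filtering can be sketched as a left join on a shared key followed by a filter. Here the key is the email address, and both the existing “database” and the country requirement are hypothetical stand-ins for whatever a real project uses.

```python
# Hypothetical existing database keyed by email, plus newly wrangled leads.
existing = {
    "jane@example.com": {"company": "Acme", "country": "DE"},
}
new_leads = [
    {"name": "Jane Doe", "email": "jane@example.com"},
    {"name": "John Roe", "email": "john@example.com"},
]

def enrich(leads, reference):
    """Left-join each lead to the reference data on its email address."""
    enriched = []
    for lead in leads:
        extra = reference.get(lead["email"], {})
        # Lead fields come last, so they win if both sides share a key.
        enriched.append({**extra, **lead})
    return enriched

def meets_requirements(record, country="DE"):
    """Hypothetical project requirement: keep only leads in one country."""
    return record.get("country") == country

final = [record for record in enrich(new_leads, existing) if meets_requirements(record)]
print(final)
```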

Is data wrangling done manually?

It’s virtually impossible for a human to process large volumes of raw data by hand, so computers are heavily involved in data wrangling. As machine learning and artificial intelligence advance, data scientists increasingly use these technologies as data processing tools.

The machine learning algorithms currently used for data wrangling can be roughly divided into two types: supervised and unsupervised. Supervised algorithms can standardize and consolidate disparate sources of information. They use classification to find known patterns and normalization to rescale independent variables to a common range so they can be compared.
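To show what classification and normalization can look like in practice, here is a small sketch that rescales two numeric features to the [0, 1] range (min-max normalization) and then labels new records with a 1-nearest-neighbour classifier trained on labeled examples. The property data and labels are hypothetical, and 1-nearest-neighbour is just one simple supervised method, not necessarily what any particular wrangling tool uses.

```python
import math

# Hypothetical labeled examples: (floor_area_m2, price_eur) -> property type.
training = [
    ((45.0, 150_000.0), "apartment"),
    ((60.0, 210_000.0), "apartment"),
    ((140.0, 420_000.0), "house"),
    ((180.0, 560_000.0), "house"),
]

def min_max_scaler(rows):
    """Return a function that rescales each feature to [0, 1] using the given rows' ranges."""
    columns = list(zip(*rows))
    lows = [min(column) for column in columns]
    highs = [max(column) for column in columns]

    def scale(row):
        # Constant features map to 0.0 to avoid dividing by zero.
        return tuple(
            (x - lo) / (hi - lo) if hi > lo else 0.0
            for x, lo, hi in zip(row, lows, highs)
        )

    return scale

# Without scaling, price (hundreds of thousands) would dwarf area in the distance.
scale = min_max_scaler([features for features, _ in training])
scaled_training = [(scale(features), label) for features, label in training]

def classify(features):
    """1-nearest-neighbour: return the label of the closest scaled training example."""
    point = scale(features)
    _, label = min(scaled_training, key=lambda item: math.dist(item[0], point))
    return label

print(classify((150.0, 450_000.0)))
print(classify((50.0, 160_000.0)))
```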

Unsupervised algorithms can explore unlabeled data. They use clustering to detect patterns and structure information.
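Clustering can be illustrated with k-means, one common unsupervised algorithm. The sketch below is a tiny one-dimensional version with a deterministic starting point; the order values are hypothetical. The algorithm receives no labels, yet it separates small orders from large ones on its own.

```python
def assign(values, centroids):
    """Put each value into the cluster of its nearest centroid."""
    clusters = [[] for _ in centroids]
    for value in values:
        nearest = min(range(len(centroids)), key=lambda i: abs(value - centroids[i]))
        clusters[nearest].append(value)
    return clusters

def kmeans_1d(values, k, iterations=20):
    """Tiny 1-D k-means: group unlabeled numbers into k clusters."""
    ordered = sorted(values)
    # Spread the initial centroids evenly across the sorted data.
    step = (len(ordered) - 1) / max(k - 1, 1)
    centroids = [ordered[round(i * step)] for i in range(k)]
    for _ in range(iterations):
        clusters = assign(values, centroids)
        # Move each centroid to its cluster mean (keep it if the cluster is empty).
        updated = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
        if updated == centroids:
            break  # converged: assignments no longer change the centroids
        centroids = updated
    return centroids, assign(values, centroids)

# Hypothetical unlabeled order values.
orders = [12, 15, 11, 14, 98, 102, 95, 13, 100]
centroids, clusters = kmeans_1d(orders, 2)
print(centroids)
print(clusters)
```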

What tools are used for data wrangling?

There are many tools that help data scientists work with raw information and munge it. For example, Google Cloud Dataprep is used to explore, clean, and prepare data, and csvkit can convert it into a more convenient format. DataWrangler is another tool with a self-explanatory name: it cleans and transforms information, and Trifacta, its commercial successor, serves as a viable alternative to it.
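csvkit itself is a command-line suite; its `in2csv` tool, for instance, converts formats such as JSON and Excel into CSV. For readers who prefer to stay in Python, here is a minimal standard-library sketch of the same kind of conversion. The sample JSON and the `json_to_csv` helper are hypothetical.

```python
import csv
import io
import json

# Hypothetical JSON export, e.g. from a scraper or an API.
raw_json = (
    '[{"name": "Jane Doe", "email": "jane@example.com"},'
    ' {"name": "John Roe", "phone": "555-0100"}]'
)

def json_to_csv(text):
    """Convert a JSON array of objects into CSV text with one column per key."""
    records = json.loads(text)
    # Collect every key in first-seen order so no field is dropped.
    fieldnames = []
    for record in records:
        for key in record:
            if key not in fieldnames:
                fieldnames.append(key)
    buffer = io.StringIO()
    # restval fills the cell when a record lacks one of the columns.
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, restval="")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()

print(json_to_csv(raw_json))
```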

But these tools require little to no programming skill. More often, data wrangling is done in Python, which offers numerous extensive libraries for working with data. The most popular one is Pandas: it processes data with labeled axes quickly and efficiently, preventing common errors caused by misaligned data.
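Label alignment is easy to see with two Series whose labels don’t match. In this sketch the monthly figures are hypothetical; the point is that Pandas pairs values by label rather than by position, so a missing month shows up as `NaN` instead of silently combining the wrong numbers.

```python
import pandas as pd

# Hypothetical monthly figures; the two Series list their labels in different orders.
revenue = pd.Series({"Jan": 100, "Feb": 120, "Mar": 90})
costs = pd.Series({"Mar": 40, "Jan": 60})

# Subtraction aligns on index labels, not on position.
profit = revenue - costs
# "Feb" has no cost entry, so its result is NaN rather than a wrong number.
print(profit)

# If a missing cost should count as zero, state that explicitly with fill_value.
profit_filled = revenue.sub(costs, fill_value=0)
print(profit_filled)
```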


If you need interactive, ready-to-use graphs, Plotly will be useful. It lets you create plots, charts, histograms, heatmaps, bar charts, and other data visualizations. And if you’re working with mathematical expressions, check out NumPy or Theano (the latter is no longer actively developed) to speed up your data wrangling.
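As an example of the kind of array work NumPy speeds up, the sketch below replaces a sentinel value with `NaN` across a whole array in one vectorized operation and then computes a mean that ignores the missing entries. The readings and the `-999` sentinel are hypothetical.

```python
import numpy as np

# Hypothetical readings where -999 marks a failed measurement.
readings = np.array([21.5, 22.0, -999.0, 21.8, 22.3, -999.0])

# Vectorized cleaning: swap every sentinel for NaN at once, with no Python loop.
cleaned = np.where(readings == -999.0, np.nan, readings)

# NaN-aware aggregates skip the missing entries.
mean = np.nanmean(cleaned)
print(round(float(mean), 2))
```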

How can businesses use data wrangling?

Companies in all industries can benefit from structured, easy-to-consume data. How they use it and what kind of information they need depend on their goals and requirements. For example, a firm can gather and wrangle data to gain general business intelligence, such as market trends, competitor activity, and other insights. Or a company can use structured information to improve its marketing strategies and reach its marketing goals faster.

Businesses in specific industries benefit greatly from acquiring the information they need every day and transforming it into an easy-to-use format. For instance, real estate companies can work with data about properties, prices, market demand, and so on, while travel agencies can obtain essential information about hotels, flights, tourist attractions, and other useful details.

How will data wrangling improve your workflow?

Because much of it is automated, data wrangling not only saves a lot of time for you and for the data specialists who fetch the information you need; it also brings other benefits.

Clear view

Structured, understandable data is easy to work with, so it gives you a chance to see the bigger picture and understand global trends. This improves your decision-making, since you can base your decisions on better data.

Deeper knowledge

Unprocessed information can be unclear, and as a result, you might miss useful insights. Wrangled data gives you a fuller picture of the subject you’re interested in, so you can be more confident that you know the details you need and aren’t missing important nuances.

Convenient format

You can transform the information into any format you need or even visualize it with colorful graphs that are extremely useful in board meetings and presentations. And the best part is that you won’t need to spend sleepless nights trying to put all the pieces of data together: wrangled information is ready to use.

Perhaps in the near future anyone, not just experienced data scientists, will be able to gather and wrangle data. As tailored tools become more advanced and require less skill to use efficiently, we can expect data wrangling to become even faster and more effortless in the coming years.