Every data-driven decision starts with one crucial step – separating useful information from noise. Data filtering ensures that only relevant, high-quality records make it into your analysis, reports, or machine learning models. Whether you’re collecting data from internal systems or scraping it from the web, filtering transforms raw, unstructured information into clear, actionable insights – helping businesses save time, reduce errors, and focus on what truly matters.

What Is Data Filtering?

Data filtering is the process of selecting relevant information from a larger dataset – removing any entries that are incomplete, duplicated, or unrelated to your goals. In simple terms, it’s how organizations separate useful insights from digital noise.

Filtering is a key step in any data preparation workflow. Whether you’re analyzing customer feedback, tracking eCommerce prices, or compiling competitive intelligence, raw data often contains inconsistencies and irrelevant details. By applying filters, you ensure that only accurate, high-quality records remain for further analysis.

It’s important to distinguish data filtering from related processes:

- Data cleaning focuses on correcting or standardizing existing data.

- Data transformation changes data format or structure for compatibility.

- Data validation checks if data meets specific rules or constraints.

Filtering, on the other hand, is primarily about exclusion and focus – deciding what to keep and what to discard.

For example, a business collecting product listings might filter data to include only items in stock, within a certain price range, and from verified sellers. This ensures that decision-makers and automated systems rely on precise, relevant datasets – not an overwhelming mix of irrelevant information.

When applied to web scraping, data filtering becomes even more critical: large-scale crawls can generate thousands of records, and filtering helps keep only the data points that truly matter.

Why Data Filtering Matters

Collecting massive datasets is easy – but turning them into something usable requires filtering out the irrelevant, inaccurate, and redundant parts:

Improved Data Accuracy and Consistency

Filtered data eliminates duplicates, errors, and incomplete entries, ensuring your analysis is based on reliable information. This helps teams avoid misleading results and base decisions on facts rather than noise.

Reduced Storage and Processing Costs

By narrowing datasets to only what’s necessary, businesses save on storage, bandwidth, and computational power. Filtering ensures systems don’t waste resources on irrelevant records – a crucial advantage when working with web-scale data.

Faster Analysis and Decision-Making

Smaller, cleaner datasets are easier to analyze. Filtering reduces time-to-insight by focusing analysts and machine learning models on the information that truly matters.

Better Business Outcomes

Accurate, well-filtered data supports more precise forecasting, improved personalization, and better operational decisions. It transforms raw web data into actionable intelligence that can directly impact revenue and efficiency.

Common Data Filtering Techniques

Data filtering can take many forms, depending on your goals and the type of data you’re working with:

Rule-Based Filtering

This technique applies predefined conditions or logical rules to select or exclude data. For example, an analyst might include only transactions above a certain value or remove entries with missing fields. Rule-based filtering is simple, transparent, and easy to automate.

Keyword Filtering

Ideal for textual data, keyword filtering extracts entries that contain or exclude specific terms. It’s widely used for monitoring mentions, collecting product data, or analyzing customer sentiment. For instance, a marketing team could scrape reviews that include the phrase “free shipping” or exclude those marked as “out of stock.”

Statistical Filtering

This method uses quantitative thresholds to identify and remove outliers or anomalies. It’s useful for large datasets where extreme values can distort averages or predictions – for example, filtering out unusually high prices or unrealistic time intervals.

Machine Learning–Based Filtering

When datasets grow too large or complex for manual rules, machine learning can automate the filtering process. Models can be trained to recognize patterns, detect irrelevant data, and make dynamic decisions based on context. This approach is often used in recommendation systems, fraud detection, or predictive analytics.

Data Filtering in Web Scraping

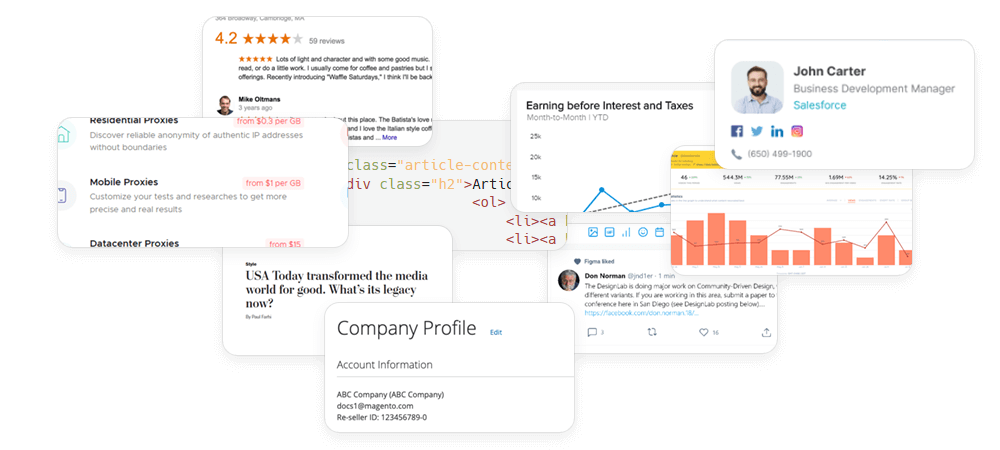

When it comes to web scraping, data filtering isn’t just a post-processing step – it’s a critical part of data collection itself. The web is full of unstructured, duplicate, and irrelevant information. Without proper filtering, scraped datasets can quickly become too large, inconsistent, or misleading to be useful.

Why Filtering Matters During Web Scraping

Raw web data often includes ads, pagination artifacts, or unrelated content. Filtering helps eliminate these distractions, ensuring that only relevant and accurate records reach your system. This makes it easier to focus on meaningful data, whether you’re monitoring product prices, collecting reviews, or tracking market trends. For example:

- Before filtering: A crawler may capture every element on a product page – images, navigation menus, hidden tags, unrelated items.

- After filtering: Only key data fields such as product name, price, availability, and rating remain, structured and ready for analysis.

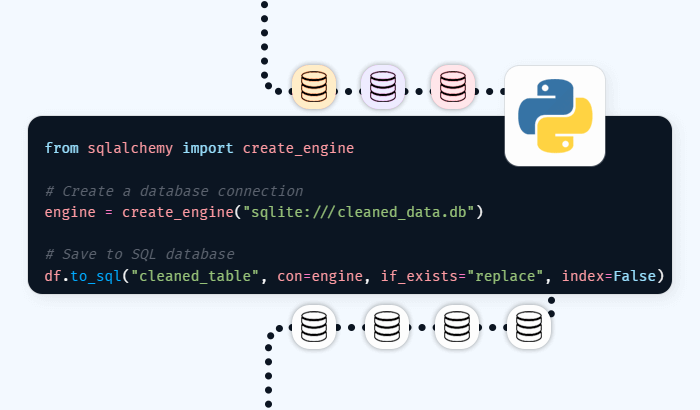

Filtering During vs. After Scraping

There are two main stages where filtering can occur:

- Pre-scraping filtering: Setting rules or parameters to collect only the desired content during extraction. This reduces bandwidth use and processing time.

- Post-scraping filtering: Cleaning and refining the data after it’s been collected. This can help remove residual noise or apply advanced logic once the dataset is complete.

Best Practices for Effective Data Filtering

Effective data filtering requires more than just applying a few basic rules – it’s about designing a structured, repeatable process that ensures your data remains clean, relevant, and aligned with your business goals. Here are several best practices to follow:

Define Clear Filtering Criteria

Start by understanding what matters most to your use case. Identify which fields, metrics, or attributes directly support your objectives. For example, a price-tracking project might only need current prices, product names, and store locations – everything else is noise.

Combine Multiple Filtering Layers

Use a mix of filters to improve precision. For instance, combine rule-based logic (e.g., price < $500) with keyword or tag-based filters (“in stock,” “verified seller”). Layering helps reduce false positives and ensures only high-quality records remain.

Regularly Review and Update Filters

Web structures and data sources change frequently. Periodic reviews help ensure your filters remain effective as websites evolve or business requirements shift. Outdated filters can miss critical information or introduce errors.

Preserve and Log Filtered-Out Data

While filtered records may seem irrelevant, keeping a backup or log can be valuable for audits, troubleshooting, or retraining machine learning models. It’s a safeguard against over-filtering or data loss.

Focus on Data Quality, Not Just Quantity

Filtering should improve the signal-to-noise ratio – not just reduce dataset size. The goal is to retain the most relevant and trustworthy information for analysis and decision-making.

How Infatica Helps You Filter Data Efficiently

Data filtering is most effective when built directly into your data collection process:

Structured, Ready-to-Use Results

Web Scraper API outputs clean, structured data in JSON or CSV formats, making it easy to integrate directly into analytics pipelines, dashboards, or data warehouses. Whether you’re collecting eCommerce data, market intelligence, or business listings, filtering ensures that every record has immediate operational value.

Scalable and Reliable Data Workflows

Infatica’s global infrastructure supports high-volume, concurrent requests without performance loss. This means you can scale your data collection while maintaining consistent filtering quality – from a few hundred to millions of web pages.

By embedding data filtering into your scraping workflow, Infatica helps you reduce costs, improve accuracy, and shorten your time to insight – all while maintaining flexibility for your specific data needs.

Ready to Get Started?

Make your data collection smarter with precise results from the start. Explore Infatica’s Web Scraper API and see how easy it is to extract only the data that matters.