When you open a website, the text, images, and interactive elements you see can be delivered in two very different ways: static content or dynamic content. At Infatica, we work with both content types on a daily basis – helping clients extract clean, structured data from simple HTML pages as well as JavaScript-heavy, interactive sites. In this article, we’ll break down the differences between static and dynamic content, explore the unique challenges each presents, and share best practices for scraping them efficiently and reliably.

What Is Static Content?

Static content is the simplest kind of web content to understand – and to scrape. It’s the kind of page where the server sends the HTML, images, and other assets exactly as they’re stored, and the browser doesn’t need to run any scripts to fill in the data. If you view the page source in your browser, it contains essentially the same text and markup that you see rendered on the page.

You’ll encounter static content in places like blog posts, product description pages without live stock updates, or a company’s “About Us” section. The information doesn’t change unless the site owner updates the page and republishes it.

For web scraping, static content is a dream scenario. Because everything is already baked into the HTML, you can use a simple HTTP request to grab the page and parse its structure without having to run JavaScript or simulate user interactions. It’s predictable, lightweight, and fast – perfect for large-scale data collection where efficiency matters.

The trade-off is that static content isn’t always fresh. If the page only gets updated once a week, your scraped dataset will only be as current as that schedule allows. That’s why many scraping projects combine static sources with more dynamic ones to balance stability and timeliness.

What Is Dynamic Content?

Dynamic content is a little more elusive. Instead of sending you a fully prepared page from the server, the site delivers a basic HTML shell and then uses JavaScript to fetch and display the actual data in your browser. This means that what you see when you “view source” often isn’t the full story – much of the content is generated after the initial page load.
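A quick way to tell which kind of page you are dealing with is to check whether a value you can see in the browser also appears in the raw HTML. The sketch below does this with Python's standard library only; the class name `VisibleTextParser` and the function `appears_in_raw_html` are illustrative names of our own, and the check is a rough heuristic rather than a reliable classifier.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collects text nodes, skipping the contents of <script> and <style>."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only text that sits outside script/style blocks.
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def appears_in_raw_html(raw_html: str, expected_text: str) -> bool:
    """Return True if expected_text is part of the markup the server sent."""
    parser = VisibleTextParser()
    parser.feed(raw_html)
    parser.close()
    visible = " ".join(parser.chunks)
    return expected_text.lower() in visible.lower()
```

If the function returns `False` for text you can clearly see in the browser, the value is most likely injected by JavaScript after the initial page load.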

You’ve probably encountered dynamic content without realizing it. News sites that refresh headlines without reloading the page, e-commerce stores that update product availability in real time, and social media feeds that keep loading more posts as you scroll – all of these rely on client-side scripts to pull in fresh data from the server on demand.

From a scraping perspective, dynamic content is more challenging. You can’t always just send a simple request and parse the HTML – you may need to run a headless browser to execute the JavaScript, intercept the site’s API calls, or simulate user actions like clicking or scrolling. These extra steps take more time, resources, and technical know-how, especially if the site is also trying to detect and block automated traffic.
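Of the options above, calling the site's own JSON API is often the lightest. Here is a minimal sketch, assuming a paginated endpoint that returns its records under an `items` key. The URL, the `page` and `per_page` parameters, and the `items` key are all hypothetical; the real ones have to be read from the requests shown in your browser's network tab.

```python
import json
import urllib.request
from typing import Callable, Iterator
from urllib.parse import urlencode

# Hypothetical endpoint, used for illustration only.
API_URL = "https://example.com/api/products"


def fetch_page(page: int, per_page: int = 50) -> dict:
    """Request one page of JSON from the (assumed) listing endpoint."""
    query = urlencode({"page": page, "per_page": per_page})
    request = urllib.request.Request(
        f"{API_URL}?{query}",
        headers={"Accept": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))


def iter_items(
    fetch: Callable[[int], dict] = fetch_page,
    max_pages: int = 100,
) -> Iterator[dict]:
    """Yield items page by page until the API returns an empty list."""
    for page in range(1, max_pages + 1):
        payload = fetch(page)
        items = payload.get("items", [])  # "items" key is an assumption
        if not items:
            break
        yield from items
```

Passing the fetch function in as a parameter keeps the pagination loop easy to test and makes it simple to add retries or proxies later without touching the loop itself.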

But with the right approach, dynamic content scraping can be incredibly powerful, giving you access to real-time or highly interactive datasets. At Infatica, we handle this by combining JavaScript rendering at scale with direct API extraction where possible, allowing our clients to collect up-to-the-minute information from even the most complex, script-heavy sites.

Static vs. Dynamic Content: Key Differences

While static and dynamic pages can look the same to a visitor, the way they’re generated – and the way you scrape them – differs significantly.

| Aspect | Static Content | Dynamic Content |
|---|---|---|
| How it’s generated | Fully assembled on the server and sent to the browser as complete HTML. | Browser loads a basic HTML shell, then uses JavaScript to fetch and render data. |
| Typical examples | Blog articles, documentation, “About Us” pages. | Social media feeds, live stock prices, infinite scrolling product listings. |
| Scraping complexity | Low – can be retrieved with a simple HTTP request and HTML parser. | Medium to high – may require headless browsers, API calls, or simulating actions. |
| Performance impact | Fast to scrape; minimal computing resources needed. | Slower to scrape due to rendering and extra requests. |
| Data freshness | Updated only when the page is manually changed. | Can update in real time or at frequent intervals. |
| Common challenges | Occasional structural changes in HTML. | Anti-bot measures, hidden API endpoints, frequent structural changes. |
| Best use cases | Stable datasets, archives, low-maintenance scraping. | Real-time analytics, live dashboards, time-sensitive data extraction. |
| Infatica advantage | Efficient large-scale scraping with minimal infrastructure overhead. | Scalable JavaScript rendering and API extraction for complex, interactive sites. |

Web Scraping Approaches for Each

Now that we understand the differences between static and dynamic content, it’s worth looking at how to approach scraping each type. The techniques vary in complexity and the resources they require, but knowing which method to use can save both time and effort.

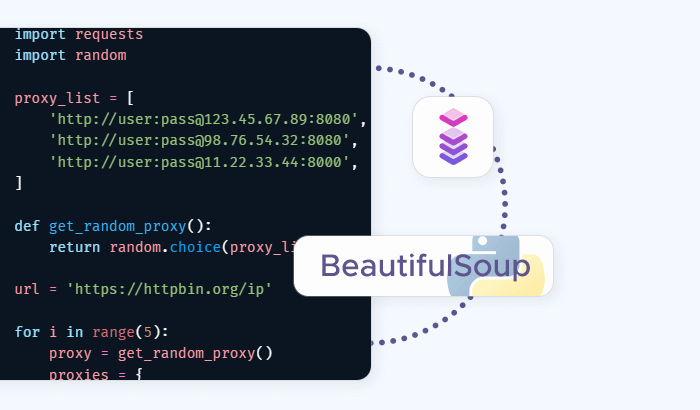

For static content, the process is relatively straightforward. Since the HTML already contains all the information you need, you can send a simple HTTP request to the page and parse the response using tools like BeautifulSoup or lxml in Python. This approach is fast, lightweight, and effective for collecting large amounts of data from blogs, documentation pages, or other predictable sources. Even when scraping at scale, static content usually doesn’t require much infrastructure or complex setups.
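As a rough illustration of the static workflow, the sketch below fetches a page and pulls out its title and links. To keep it free of extra installs it uses the standard library's `html.parser` in place of BeautifulSoup or lxml, which are usually more convenient for real projects. The `example-scraper/0.1` User-Agent string and the function names are placeholders of our own.

```python
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkAndTitleParser(HTMLParser):
    """Collects the <title> text and every <a href> as an absolute URL."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page URL.
                self.links.append(urljoin(self.base_url, href))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page with a single plain HTTP request."""
    request = urllib.request.Request(
        url, headers={"User-Agent": "example-scraper/0.1"}  # placeholder UA
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def parse_page(html: str, base_url: str) -> dict:
    """Extract the title and links from already-downloaded HTML."""
    parser = LinkAndTitleParser(base_url)
    parser.feed(html)
    parser.close()
    return {"title": parser.title.strip(), "links": parser.links}
```

A typical call would be `parse_page(fetch_html(url), url)`. Keeping the download and the parsing in separate functions lets you re-parse saved HTML without requesting the page again.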

Dynamic content, on the other hand, demands a more sophisticated approach. Because much of the data is loaded or modified in the browser with JavaScript, you often need to render the page before you can access the information. Headless browsers like Playwright or Puppeteer allow you to simulate a real user visiting the site, executing scripts, and waiting for content to appear. In some cases, it’s possible to bypass the rendering entirely by identifying and calling the underlying APIs directly, which can be faster and more efficient. Scraping dynamic sites can also involve handling infinite scrolls, click events, or rate limits imposed by the website.
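Rendering a page and handling infinite scroll can be sketched with Playwright's synchronous Python API as follows. This is a minimal example under stated assumptions: the URL and the CSS selector are supplied by the caller, the one-second pause is an arbitrary default, and `scroll_until_stable` is a helper of our own, not part of Playwright.

```python
from typing import Callable


def scroll_until_stable(
    count_items: Callable[[], int],
    scroll_once: Callable[[], None],
    max_rounds: int = 20,
) -> int:
    """Scroll until a round adds no new items or max_rounds is reached."""
    seen = count_items()
    for _ in range(max_rounds):
        scroll_once()
        current = count_items()
        if current <= seen:
            break  # nothing new loaded, assume we hit the end of the feed
        seen = current
    return seen


def render_listing(url: str, item_selector: str, pause_ms: int = 1000) -> str:
    """Return the page HTML after scripts have run and the list has loaded."""
    # Imported here so the helper above stays usable without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url)
            # Wait for the first JavaScript-rendered item to appear.
            page.wait_for_selector(item_selector)

            def scroll_once() -> None:
                page.evaluate("window.scrollTo(0, document.body.scrollHeight)")
                page.wait_for_timeout(pause_ms)

            scroll_until_stable(
                lambda: page.locator(item_selector).count(), scroll_once
            )
            return page.content()
        finally:
            browser.close()
```

The returned HTML can then be handed to the same parser you would use for a static page. The `max_rounds` cap keeps an endless feed from running forever.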

Many websites combine static and dynamic elements, which is where flexibility becomes essential. A product page, for example, may have static descriptions but dynamic pricing and availability. In these cases, a hybrid approach – starting with simple static extraction and then applying targeted dynamic methods for the changing data – often works best.
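To make the product-page example concrete, here is a small sketch that reads the static fields from the HTML and the changing fields from a JSON payload, then merges them into one record. The element ids (`name`, `description`) and the JSON keys (`price`, `in_stock`) are invented for illustration; a real site will use its own markup and response format.

```python
import json
from html.parser import HTMLParser


class IdTextParser(HTMLParser):
    """Collects text inside elements whose id is wanted (no nesting support)."""

    def __init__(self, wanted_ids):
        super().__init__()
        self.wanted_ids = set(wanted_ids)
        self.fields = {}
        self._current = None

    def handle_starttag(self, tag, attrs):
        element_id = dict(attrs).get("id")
        if element_id in self.wanted_ids:
            self._current = element_id
            self.fields[element_id] = ""

    def handle_endtag(self, tag):
        # Simplification: any closing tag ends the current capture.
        self._current = None

    def handle_data(self, data):
        if self._current is not None:
            self.fields[self._current] += data


def build_product(static_html: str, live_json: str) -> dict:
    """Merge static description fields with live price and stock data."""
    parser = IdTextParser({"name", "description"})
    parser.feed(static_html)
    parser.close()
    live = json.loads(live_json)
    return {
        "name": parser.fields.get("name", "").strip(),
        "description": parser.fields.get("description", "").strip(),
        "price": live.get("price"),        # assumed key
        "in_stock": live.get("in_stock"),  # assumed key
    }
```

In practice the HTML would come from a plain HTTP request and the JSON from the site's API or a rendered page, so only the part that actually changes pays the cost of the heavier method.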

At Infatica, our scraping infrastructure is designed to handle both scenarios seamlessly. We combine lightweight static extraction for predictable data with scalable dynamic rendering and API integration for more complex sites. This ensures clients can collect accurate, up-to-date data efficiently, regardless of how a website delivers its content.

When to Choose Which Approach

If your project involves data that doesn’t change frequently, like archived articles, product descriptions, or documentation pages, a static scraping approach is often the simplest and most efficient solution. You get the data you need quickly, with minimal infrastructure and processing power. Static scraping works well when you want reliability and predictability without the overhead of rendering JavaScript.

Dynamic scraping comes into play when timeliness and interactivity matter. Social media feeds, live dashboards, stock or pricing data – these are examples where the information updates frequently and may only be available after the browser executes scripts. Here, a dynamic approach, whether through headless browsers or API calls, ensures you capture the most current and complete data.

Many real-world projects involve a mix of both. A hybrid site might offer static product details but load prices, inventory, or reviews dynamically. In these cases, combining approaches is the most effective strategy, allowing you to balance speed, accuracy, and resource usage.
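One possible way to combine the two is to try the cheap static path first and fall back to rendering only when required fields are missing. The sketch below shows that control flow; `static_scrape` and `dynamic_scrape` stand in for whatever extraction functions your project already has, and the field names are up to you.

```python
from typing import Callable, Dict, Iterable, List, Tuple

Record = Dict[str, object]
Scraper = Callable[[str], Record]


def missing_fields(record: Record, required: Iterable[str]) -> List[str]:
    """List required fields that are absent, None, or empty strings."""
    return [name for name in required if record.get(name) in (None, "")]


def scrape_with_fallback(
    url: str,
    static_scrape: Scraper,
    dynamic_scrape: Scraper,
    required: Iterable[str],
) -> Tuple[Record, str]:
    """Return the record plus a label for the strategy that produced it."""
    required = list(required)
    record = static_scrape(url)
    if not missing_fields(record, required):
        return record, "static"

    # Static HTML was incomplete, so pay for the slower dynamic path.
    rendered = dynamic_scrape(url)
    filled = {k: v for k, v in rendered.items() if v not in (None, "")}
    return {**record, **filled}, "hybrid"
```

Logging the returned label over time shows how often the expensive path is really needed, which helps when budgeting rendering capacity.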

At Infatica, we’ve designed our services to handle this variety. Whether a client needs large volumes of stable, static data or real-time, dynamic updates, our infrastructure is flexible enough to switch seamlessly between techniques. This means you can focus on insights and decision-making, while we handle the complexities of data extraction behind the scenes.