High-quality data is the foundation of any successful natural language processing system. As NLP applications continue to expand across industries, collecting large volumes of relevant, up-to-date text data has become a critical challenge. Let's explore what NLP data collection involves, the common obstacles teams face at scale, how web data supports modern NLP training, and how automated data collection approaches can help streamline NLP pipelines.

What Is NLP Data Collection?

NLP data collection is the process of gathering, preparing, and organizing text data so it can be used to train, fine-tune, or evaluate natural language processing models. Because NLP systems learn language patterns directly from data, the quality, diversity, and structure of the collected text have a direct impact on model performance.

At its core, NLP data collection focuses on acquiring large volumes of language data that reflect how people actually write and communicate. This data can come from a wide range of sources and typically includes structured, semi-structured, and unstructured text.

Types of Data Used in NLP

Most NLP projects rely on a combination of the following data types:

- Unstructured text, such as articles, reviews, forum posts, comments, and documentation. This is the most common input for tasks like sentiment analysis, topic modeling, and text classification.

- Semi-structured data, including web pages or feeds that follow consistent layouts but still require parsing and normalization.

- Structured text data, such as labeled datasets, annotated corpora, or metadata-enriched content used for supervised learning and evaluation.

Sources of NLP Training Data

NLP data can be collected from multiple sources, including:

- Publicly available datasets and open corpora

- Internal documents, support tickets, or knowledge bases

- User-generated content such as reviews, discussions, and Q&A platforms

- News sites, blogs, and other continuously updated web resources

Each source offers different advantages in terms of scale, freshness, and relevance, which is why many NLP teams combine several data streams rather than relying on a single dataset.

The NLP Data Collection Pipeline

Collecting text data for NLP is not a one-step task. It usually follows a multi-stage pipeline:

- Source identification – determining which websites, platforms, or repositories contain relevant language data

- Data extraction – collecting raw text and associated metadata

- Cleaning and normalization – removing noise, duplicates, and formatting inconsistencies

- Annotation and labeling (when required) – preparing data for supervised learning

- Storage and versioning – maintaining datasets for reproducibility and retraining
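
The later stages of this pipeline can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation: it assumes extraction has already produced records with hypothetical `url` and `text` fields, it only catches exact duplicates (case-insensitive), and the content-hash version id is one possible versioning scheme among many.

```python
import hashlib
import json
import re
import unicodedata


def clean(text: str) -> str:
    """Cleaning and normalization: unify Unicode forms and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first record for each distinct text (compared case-insensitively)."""
    seen = set()
    unique = []
    for record in records:
        key = hashlib.sha256(record["text"].lower().encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


def dataset_version(records: list[dict]) -> str:
    """Storage and versioning: derive a short id from the dataset contents."""
    payload = json.dumps(records, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


def run_pipeline(raw_records: list[dict]) -> tuple[list[dict], str]:
    cleaned = [{**r, "text": clean(r["text"])} for r in raw_records]
    cleaned = [r for r in cleaned if r["text"]]  # drop records that are empty after cleaning
    unique = deduplicate(cleaned)
    return unique, dataset_version(unique)


# Hypothetical extracted records, for illustration only.
raw = [
    {"url": "https://example.com/a", "text": "Great   product,\nwould buy again."},
    {"url": "https://example.com/b", "text": "great product, would buy again."},
    {"url": "https://example.com/c", "text": "   "},
]
dataset, version = run_pipeline(raw)
```

Because the version id is derived from the cleaned records themselves, rerunning the pipeline on the same input yields the same id, which makes it easy to tell whether a retraining run used identical data.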

Common Challenges in NLP Data Collection

Although text data has never been more abundant, collecting NLP-ready data at scale remains a complex and resource-intensive task.

Data Quality and Noise

Raw text data is rarely ready for immediate use. Web content, in particular, often contains boilerplate elements, duplicated passages, navigation text, and inconsistent formatting. Without thorough cleaning and normalization, this noise can distort language patterns and reduce model accuracy.
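
One simple heuristic for navigation text and other boilerplate is to drop lines that repeat across many pages of the same site. The sketch below assumes each page has already been converted to plain text with one block per line; the function name and the 50% threshold are illustrative choices, not a standard.

```python
from collections import Counter


def strip_repeated_lines(pages: list[str], max_share: float = 0.5) -> list[str]:
    """Remove lines that appear on more than max_share of the given pages."""
    line_counts = Counter()
    for page in pages:
        # Count each distinct line at most once per page.
        line_counts.update({line.strip() for line in page.splitlines() if line.strip()})

    threshold = max_share * len(pages)
    cleaned = []
    for page in pages:
        kept = [
            line.strip()
            for line in page.splitlines()
            if line.strip() and line_counts[line.strip()] <= threshold
        ]
        cleaned.append("\n".join(kept))
    return cleaned


# Hypothetical page texts sharing a header and footer.
pages = [
    "Home | Products | Contact\nThe battery lasts two days.\nSubscribe to our newsletter",
    "Home | Products | Contact\nShipping took a week.\nSubscribe to our newsletter",
    "Home | Products | Contact\nThe screen scratches easily.\nSubscribe to our newsletter",
]
result = strip_repeated_lines(pages)
```

A frequency filter like this only works when several pages from the same source are available, and it can remove legitimate repeated phrases, so the threshold is worth tuning per source.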

Limited Data Diversity and Bias

NLP models trained on narrow or homogeneous datasets tend to inherit bias and perform poorly outside their original domain. Relying on a small set of sources can limit linguistic variation, regional context, and writing styles, making it harder to build robust, generalizable models.
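
A rough first check on source diversity is to measure how much of a dataset each domain contributes. The following sketch assumes every record carries its source URL; the helper names and the 50% cutoff are hypothetical, and domain share is only a proxy for linguistic diversity.

```python
from collections import Counter
from urllib.parse import urlparse


def source_shares(urls: list[str]) -> dict[str, float]:
    """Return the fraction of records contributed by each domain."""
    domains = Counter(urlparse(url).netloc for url in urls)
    total = sum(domains.values())
    return {domain: count / total for domain, count in domains.items()}


def dominant_sources(urls: list[str], max_share: float = 0.5) -> list[str]:
    """List domains that contribute more than max_share of all records."""
    return sorted(d for d, share in source_shares(urls).items() if share > max_share)


# Hypothetical source URLs for a small dataset.
urls = [
    "https://reviews.example.com/item/1",
    "https://reviews.example.com/item/2",
    "https://reviews.example.com/item/3",
    "https://forum.example.org/thread/9",
]
shares = source_shares(urls)
flagged = dominant_sources(urls)
```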

Scalability Constraints

Manual collection methods or small-scale scripts may work during prototyping, but they struggle to keep up with production-level requirements. Scaling data collection across thousands of pages or multiple sources introduces challenges related to throughput, scheduling, and infrastructure management.

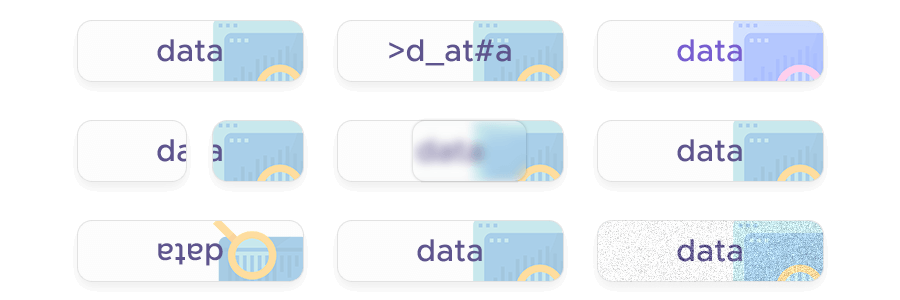

Website Complexity and Dynamic Content

Modern websites frequently rely on JavaScript rendering, infinite scroll, and dynamically loaded content. These elements complicate data extraction and often require more advanced handling than traditional static HTML parsing.

Blocking, Rate Limits, and Anti-Bot Measures

Many websites actively limit automated access through IP blocking, CAPTCHAs, and rate limits. For NLP teams, these restrictions can interrupt data pipelines, reduce coverage, and make consistent data collection difficult without dedicated mitigation strategies.

Data Freshness and Maintenance

Language evolves quickly, especially in domains like e-commerce, news, and social platforms. Static datasets become outdated over time, forcing teams to continuously refresh their data. Maintaining long-term collection workflows adds another layer of operational overhead.

Compliance and Ethical Considerations

Collecting text data also involves legal and ethical responsibilities. Teams must consider website terms of service, regional regulations, and data privacy requirements, particularly when working with user-generated content or location-specific sources.
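
One concrete, automatable part of this is honoring a site's robots.txt file. The sketch below uses Python's standard `urllib.robotparser` module; the robots.txt content and the `nlp-research-bot` user agent are made up for illustration, and in practice the file would be fetched from the target site. Passing this check does not replace a review of the site's terms of service or applicable privacy regulations.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; normally fetched from https://<site>/robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Crawl-delay: 5
"""

USER_AGENT = "nlp-research-bot"  # assumed crawler name for this sketch

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(url: str) -> bool:
    """Return True if robots.txt permits this user agent to fetch the URL."""
    return parser.can_fetch(USER_AGENT, url)


reviews_ok = is_allowed("https://example.com/reviews/1")
account_ok = is_allowed("https://example.com/account/settings")
delay_seconds = parser.crawl_delay(USER_AGENT)  # None if the site sets no delay
```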

Web Data as a Primary Source for NLP Training

The open web has become one of the most important data sources for modern NLP systems. From news articles and product reviews to forums and documentation, the web reflects how language is used across industries, regions, and contexts. For many NLP applications, web data offers a level of scale and diversity that is difficult to achieve through static or proprietary datasets alone.

Scale and Linguistic Diversity

Web content spans countless topics, writing styles, and levels of formality. This breadth allows NLP models to learn richer language patterns, idiomatic expressions, and domain-specific terminology. Access to large volumes of text is especially valuable for tasks such as language modeling, topic discovery, and semantic analysis, where coverage directly influences model performance.

Domain-Specific and Real-World Language

Unlike curated datasets, web data often captures language as it is used in real situations. Reviews reveal customer sentiment, forums highlight common questions and pain points, and technical blogs document emerging trends. This makes web data particularly useful for training NLP models tailored to specific industries, products, or user groups.

Multilingual and Regional Coverage

The web provides extensive multilingual content, enabling NLP teams to collect text in different languages and regional variants. Geo-specific sources also help capture local vocabulary, spelling variations, and cultural context, which are essential for building accurate multilingual or region-aware NLP systems.

Continuously Updated Content

Language evolves rapidly, and static datasets can quickly become outdated. Web sources are constantly refreshed with new articles, discussions, and user-generated content. By incorporating regularly updated web data, NLP teams can keep their models aligned with current terminology, trends, and usage patterns.

Structured Access to Unstructured Data

Although most web content is unstructured, it often follows repeatable layouts and patterns. When extracted systematically, this text can be transformed into structured datasets suitable for NLP pipelines. Metadata such as publication dates, authors, ratings, or categories can further enrich the training data and improve downstream analysis.
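
As an illustration of turning a repeatable layout into a structured record, the sketch below uses Python's built-in `html.parser` to pull a title, paragraph text, and two metadata fields from a page. The sample HTML and the `author` and `date` meta names are assumptions for this example; real sites differ, and each layout typically needs its own extraction rules.

```python
from html.parser import HTMLParser


class ArticleParser(HTMLParser):
    """Collects the <title>, selected <meta> tags, and <p> text from one page."""

    META_FIELDS = {"author", "date"}  # hypothetical meta names for this sketch

    def __init__(self):
        super().__init__()
        self.record = {"title": "", "body": []}
        self._current = None  # tag whose text is currently being captured

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("title", "p"):
            self._current = tag
            if tag == "p":
                self.record["body"].append("")
        elif tag == "meta" and attrs.get("name") in self.META_FIELDS:
            self.record[attrs["name"]] = attrs.get("content", "")

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.record["title"] += data
        elif self._current == "p":
            self.record["body"][-1] += data


def extract_record(html: str) -> dict:
    parser = ArticleParser()
    parser.feed(html)
    parser.close()
    record = dict(parser.record)
    record["title"] = record["title"].strip()
    record["body"] = " ".join(p.strip() for p in record["body"] if p.strip())
    return record


SAMPLE_HTML = """
<html><head><title>Review: Acme Kettle</title>
<meta name="author" content="J. Doe">
<meta name="date" content="2024-05-01">
</head><body><nav>Home</nav>
<p>Boils water <em>fast</em>.</p><p>Lid feels flimsy.</p></body></html>
"""
record = extract_record(SAMPLE_HTML)
```

Text outside `<title>` and `<p>` elements, such as the navigation link, is ignored, while inline markup inside a paragraph is flattened into its text.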

Scalable NLP Data Collection with Web Scraping and APIs

As NLP projects grow in scope and complexity, manual data gathering and one-off scripts quickly become bottlenecks. Web scraping provides a practical way to collect large volumes of text data from diverse online sources and transform them into structured datasets suitable for NLP workflows. When implemented through APIs, this approach becomes easier to scale, maintain, and integrate into production pipelines.

Automating Text Data Extraction from the Web

Web scraping enables the automated extraction of text and metadata from websites that publish valuable language data. This includes articles, reviews, forum discussions, documentation, and other content that reflects real-world language usage.

For NLP teams, automated scraping makes it possible to:

- Collect text at scale across thousands of pages or multiple domains

- Extract consistent fields such as titles, body text, timestamps, and categories

- Schedule recurring crawls to keep datasets fresh

- Handle both static and dynamic, JavaScript-rendered content

Overcoming Technical Barriers at Scale

Large-scale web data collection introduces technical challenges that go beyond simple HTML parsing. Websites often use dynamic layouts, rate limits, and anti-bot mechanisms that can interrupt extraction workflows.

Modern web scraping solutions are designed to address these obstacles by:

- Managing request rates and concurrency

- Supporting rendering for dynamic pages

- Rotating IP addresses and handling access restrictions

- Providing reliable extraction even as website structures change

These capabilities are particularly important for NLP pipelines that depend on consistent data delivery over time.
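
To make the request-rate point concrete, here is a minimal sketch of retrying with exponential backoff when a site signals throttling. The `RateLimited` exception and the `fetch` callable are placeholders for whatever HTTP client a team uses; this does not describe how any particular scraping product works internally.

```python
import random
import time


class RateLimited(Exception):
    """Raised by the fetch callable when the server signals throttling (e.g. HTTP 429)."""


def fetch_with_backoff(fetch, url, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), waiting 1x, 2x, 4x... base_delay (plus jitter) between retries."""
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # give up after the final attempt
            delay = base_delay * (2 ** attempt)
            # Small random jitter keeps parallel workers from retrying in lockstep.
            sleep(delay + random.uniform(0, delay * 0.1))


# Simulated fetcher that is throttled twice before succeeding.
attempts = {"count": 0}


def flaky_fetch(url):
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RateLimited(url)
    return f"<html>content of {url}</html>"


waits = []  # record the delays instead of actually sleeping
page = fetch_with_backoff(flaky_fetch, "https://example.com/reviews", sleep=waits.append)
```

Injecting the `sleep` function keeps the example testable without real waiting; in a live crawler the default `time.sleep` would apply.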

Why APIs Are Well-Suited for NLP Pipelines

A web scraper API abstracts away much of the complexity involved in building and maintaining custom scraping infrastructure. Instead of managing browsers, proxies, and parsing logic internally, NLP teams can access web data through simple, programmatic requests.

API-based data collection offers several advantages:

- Faster setup compared to in-house scraping tools

- Reduced maintenance and operational overhead

- Built-in scalability for large or growing datasets

- Easier integration with data processing, labeling, and ML pipelines

How Infatica Supports NLP Data Collection

Infatica’s Web Scraper API helps NLP teams collect large volumes of real-world text data from web sources without building or maintaining custom scraping infrastructure. It supports reliable extraction from complex websites, geo-targeted collection for multilingual and regional data, and structured outputs that integrate smoothly into NLP pipelines, from preprocessing to model training.