Website bot detection is getting more attention nowadays: bots can be useful or harmful, depending on their purpose and design. In this article, you will learn what bots do on the internet, why bot detection is important, and how bots try to bypass detection measures. You will also discover some bot detection tools and techniques for online businesses.

What is Bot Traffic?

Bot traffic is the non-human traffic on websites and apps generated by automated software programs known as bots. This type of traffic is quite common and can be both beneficial and harmful, depending on the bots' purposes. Bots are extensively used for automated tasks because they can operate around the clock and perform actions much quicker than humans. They're designed to handle repetitive and simple tasks efficiently.

The Importance of Bot Detection for Businesses

For many businesses, detecting bot traffic is a crucial objective: bot attacks, account takeovers, and payment fraud are real automated threats that they have to deal with constantly. At the same time, they have to make sure that their security solutions don’t affect legitimate users. Let’s analyze these reasons a bit further:

Protection Against Bot Attacks

Bot attacks can compromise security and data integrity: for instance, malicious bots can cause data breaches, unauthorized access to sensitive information, and financial losses. Bots can also perform credential stuffing, using stolen credentials to access user accounts, which leads to identity theft and further financial damage. Finally, Distributed Denial of Service (DDoS) attacks can overwhelm servers, making services inaccessible and damaging a company's reputation.

User experience and brand reputation can also be at risk: successful bot-driven attacks frustrate customers and erode their trust in the brand. To counter this, businesses use a variety of monitoring and defensive measures that we’ll explore later in this article.

Fraud Prevention

Online payment fraud is a major problem for online businesses. Bot detection tools can flag transactions that deviate from normal patterns, which may indicate fraud. Additionally, they can block bots that attempt to use stolen credit card information or exploit payment systems.

Account creation fraud can be prevented by analyzing behavior patterns, which helps distinguish between legitimate users and bots during the account creation process. Requiring verification steps like phone or email confirmation can deter bots from successfully creating fake accounts.

To combat signup promo abuse, companies use behavioral analytics, which can spot unusual redemption patterns or multiple sign-ups from the same IP address that indicate potential abuse. Some systems can be set to restrict the number of times a promo code can be used, preventing mass coupon abuse by bots. Finally, monitoring and controlling how coupons are distributed can prevent bots from obtaining and exploiting them.

Content Protection

Protecting intellectual property is vital: proprietary data, whether it's written content, media, or code, is often copyrighted material, and bot detection helps prevent unauthorized copying and distribution. Moreover, unique content gives businesses a competitive edge, and bot detection helps ensure that competitors can't scrape and use this content to their advantage.

Content scraping can negatively impact the company’s revenue streams. Many websites rely on exclusive content for revenue, such as subscription services or pay-per-view media on social media platforms. Blocking scraper bots helps protect these revenue models by ensuring only paying customers have access. Also, websites that rely on ad revenue need human traffic for views and clicks: bots do not contribute to ad revenue, and without good bot detection they can skew analytics, leading to less effective ad targeting.

User Experience and Performance

Anti-bot systems are a critical component of a website's defense strategy. To maintain website performance, bot detection tools can identify bot traffic and block malicious bots, preventing them from consuming valuable server resources and bandwidth. Additionally, by filtering out bot traffic, servers can more effectively manage legitimate user traffic, maintaining stability and performance.

Preserving user experience is equally important: by preventing bots from overloading servers, bot detection helps the website avoid errors and slow load times and remain fast for legitimate users.

Analytics Accuracy

For accurate website analytics data, filtering out bot-driven traffic ensures that metrics like page views, sessions, and conversion rates reflect real human user interactions, not those of automated programs. This way, businesses can make informed decisions about marketing strategies, content optimization, and user experience improvements.

Enhanced marketing strategies can also be realized: Understanding true user behavior allows for more effective targeting and personalization of marketing campaigns. Businesses can accurately measure the return on investment for their marketing efforts when they use bot detection techniques to avoid skewed data. Last but not least, these insights can reveal how users interact with different features, guiding the development of APIs that cater to actual user needs.

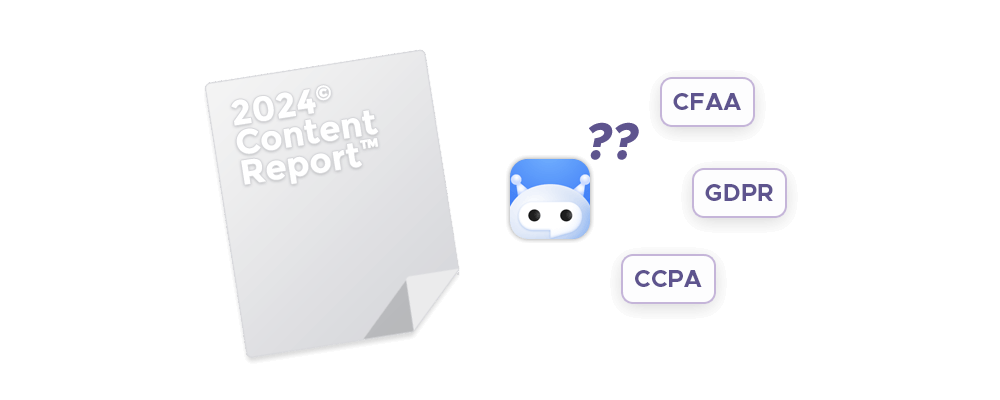

Compliance

In regulated industries such as finance, healthcare, and education, bot detection is essential for several key reasons. Firstly, data privacy and security compliance: organizations in these industries must comply with strict data protection regulations like GDPR and CCPA. Anti-bot systems help ensure that only authorized human users access sensitive user data.

Secondly, protection against fraud and cyber threats: in finance, attackers can use bots for fraudulent activities like account takeover or transaction fraud. Monitoring system activity helps prevent these activities by identifying malicious bots and keeping access records reliable for audits.

Why are bots used?

There are various reasons for using bots, with some being a net positive for their industry (e.g. search engine monitoring, price comparison platforms), while others pose security and privacy threats (e.g. credential stuffing or spam distribution).

Malicious Bots

- Credential Stuffing: Bad bots automate the process of logging in with stolen credentials to take over accounts.

- DDoS Attacks: They flood servers with traffic to disrupt services and take websites offline.

- Ad Fraud: They simulate clicks on digital ads, leading to fraudulent advertising costs.

- Fake Account Creation: Even a single bad bot can automate the creation of fake accounts, which can be used for spam or to inflate user numbers.

- Account Takeover: They use brute force attacks to guess users’ credentials and gain unauthorized access to accounts across websites.

Legitimate Bots (e.g. crawlers)

- Customer Support: Good bots can handle customer inquiries, provide instant responses, and improve overall customer service.

- Data Collection: They can gather information from various sources, aiding in market research and decision-making processes.

- SEO Optimization: Search engine bots crawl and index web pages, helping websites rank better in search results.

- Healthcare Assistance: In healthcare, an advanced bot can schedule appointments, send medication reminders, and collect patient data.

- Financial Management: Bots offer financial advice, track expenses, and send balance notifications to users.

- E-commerce: They assist in order processing, product recommendations, and customer feedback collection.

How Does Bot Detection Work?

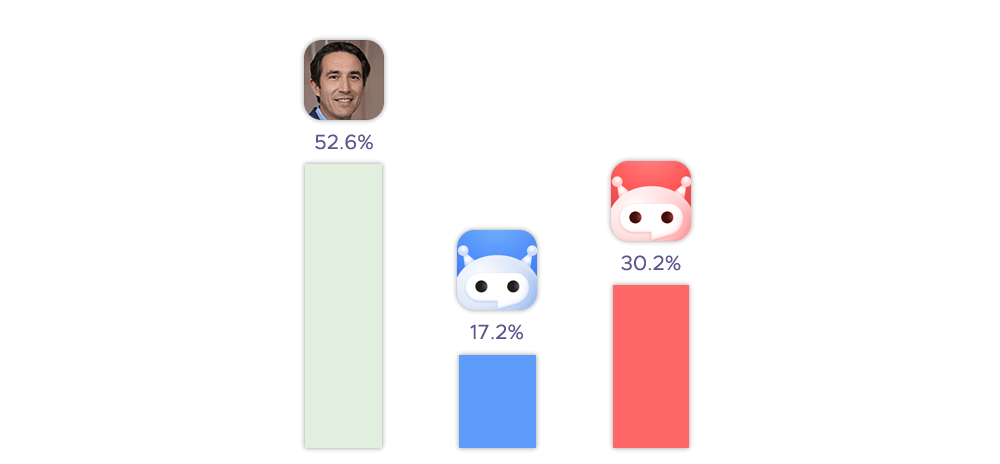

Bot detection is a sophisticated process that distinguishes between human and bot activity, as well as between benign and malicious bots. It typically involves comparing visitor characteristics against the baseline behavior of genuine users.

Abnormal traffic volume and rate are an important marker: even basic bots can generate massive traffic volume in a short period, unlike humans who browse at a more moderate pace. Also, bots often lack typical mouse movements, whereas human users move the pointer irregularly and interact with content more slowly.
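
The traffic-rate marker can be illustrated with a sliding-window counter. This is a minimal sketch, not a production detector: the `RateMonitor` class, the limit of 5 requests per second, and the sample IP addresses are all assumptions chosen for illustration.

```python
from collections import defaultdict, deque


class RateMonitor:
    """Flags clients whose request count in a sliding window exceeds a limit."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests      # hypothetical threshold
        self.window_seconds = window_seconds  # hypothetical window length
        self._hits = defaultdict(deque)       # client id -> recent timestamps

    def record(self, client_id: str, timestamp: float) -> bool:
        """Record one request; return True if the client now looks suspicious."""
        hits = self._hits[client_id]
        hits.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        while hits and timestamp - hits[0] > self.window_seconds:
            hits.popleft()
        return len(hits) > self.max_requests


monitor = RateMonitor(max_requests=5, window_seconds=1.0)

# Ten requests within half a second from one address (made-up traffic).
burst_flags = [monitor.record("203.0.113.7", i * 0.05) for i in range(10)]

# A visitor who clicks once every three seconds.
human_flags = [monitor.record("198.51.100.2", i * 3.0) for i in range(10)]
```

In this sketch the burst is flagged from its sixth request onward, while the slower visitor is never flagged. Real systems usually combine such a counter with other signals, since legitimate users behind a shared IP address can also produce bursts.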

An unusual session duration – either extremely short or unusually long – may indicate a bot visiting a page and then immediately leaving, or accessing a lot of data points.

Traffic from regions that do not match the usual customer base, especially when the visitors' language differs from what the site normally serves, can be a sign of bot activity. Requests originating from known malicious domains are also often associated with malicious bot traffic.

Finally, unusual behavior patterns, like increases in login failures, password resets, failed transactions, and high-volume new account creations can signal attacks from bot operators.
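
One simple way to notice such an increase is to compare the current count against a historical baseline. The sketch below assumes hourly login-failure counts are already being collected; the `is_spike` helper, the threshold of three standard deviations, and the sample numbers are illustrative assumptions, not a recommended configuration.

```python
from statistics import mean, pstdev


def is_spike(history: list[int], current: int, threshold: float = 3.0) -> bool:
    """Return True if `current` lies more than `threshold` standard
    deviations above the mean of `history`."""
    baseline = mean(history)
    spread = pstdev(history)
    if spread == 0:
        # A perfectly flat history: any increase counts as a spike.
        return current > baseline
    return (current - baseline) / spread > threshold


# Hypothetical login failures per hour during normal operation.
hourly_login_failures = [12, 9, 15, 11, 10, 13, 14, 12]

normal_hour = is_spike(hourly_login_failures, 14)   # within the usual range
attack_hour = is_spike(hourly_login_failures, 240)  # far above the baseline
```

The same comparison could be applied to password resets, failed transactions, or new account creations, each with its own baseline.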

How Do Bots Avoid Bot Detection?

Bots are constantly evolving and adapting to new situations and challenges. They use various techniques and methods to avoid detection and appear like human users. Some of the common ways that bots try to bypass common bot detection techniques are:

Using proxies or VPNs: Proxies and VPNs are services that allow users to hide or change their IP address and location. Bots can use proxies or VPNs to mask their identity and origin, and to rotate their IP address frequently.

Spoofing headers or user agents: Headers and user agents are information that browsers send to servers when making requests. They contain data such as the browser name, version, operating system, language, etc. Evasive bots can spoof headers or user agents to mimic different browsers or devices, and to rotate them randomly.
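
From the defender's side, a basic header sanity check shows why spoofing matters. The sketch below is a heuristic under stated assumptions: the `header_warnings` function and its rules are hypothetical, and the token list only covers the default user agents of a few well-known tools (python-requests, curl, headless Chrome).

```python
# Substrings that appear in the default User-Agent of some automation tools.
AUTOMATION_TOKENS = ("python-requests", "curl/", "headlesschrome")


def header_warnings(headers: dict[str, str]) -> list[str]:
    """Return a list of reasons why the request headers look automated."""
    normalized = {name.lower(): value for name, value in headers.items()}
    user_agent = normalized.get("user-agent", "").lower()
    warnings = []
    if not user_agent:
        warnings.append("missing User-Agent")
    elif any(token in user_agent for token in AUTOMATION_TOKENS):
        warnings.append("User-Agent names an automation tool")
    elif "mozilla/" in user_agent and "accept-language" not in normalized:
        # Assumption: mainstream browsers normally send Accept-Language.
        warnings.append("browser-like User-Agent without Accept-Language")
    return warnings


script_result = header_warnings({"User-Agent": "python-requests/2.31.0"})
browser_result = header_warnings({
    "User-Agent": "Mozilla/5.0 (X11; Linux x86_64)",
    "Accept-Language": "en-US,en;q=0.9",
})
```

A bot that copies a complete, consistent set of browser headers passes this check, which is why header inspection is usually treated as only one signal among several.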

Solving verification challenges: CAPTCHAs and similar puzzles are designed to be easy for human users and hard for automated programs, so they filter out bots that cannot pass the test. However, bots can use artificial intelligence, optical character recognition, or human-operated CAPTCHA farms to solve verification challenges.

Avoiding honeypots: Honeypots are traps designed to trick bots into revealing themselves. They are hidden elements on a web page, such as invisible links or forms, that humans would not interact with, but bots would. Advanced bots can use techniques to detect and avoid honeypots.
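
On the server side, a honeypot check can be as small as the sketch below. It assumes the form contains an extra field that is hidden from human visitors with CSS; the field name `website` and the `is_honeypot_triggered` helper are hypothetical.

```python
HONEYPOT_FIELD = "website"  # hypothetical name of the hidden form field


def is_honeypot_triggered(form_data: dict[str, str],
                          trap_field: str = HONEYPOT_FIELD) -> bool:
    """Return True if the hidden trap field was filled in.

    Human visitors never see the field, so it should arrive empty or absent.
    """
    return bool(form_data.get(trap_field, "").strip())


human_submission = {"email": "user@example.com", "website": ""}
bot_submission = {"email": "spam@example.com", "website": "http://spam.example"}

human_flagged = is_honeypot_triggered(human_submission)
bot_flagged = is_honeypot_triggered(bot_submission)
```

A bot that fills in every field it finds trips the trap, while an advanced bot that inspects element visibility before submitting would not, which matches the evasion described above.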

Mimicking human behavior: Behavioral analysis is the process of monitoring and evaluating the actions and patterns of users on a website or app. It is used to detect users that exhibit typical bot behavior, such as high request frequency, low dwell time, or repetitive actions. Sophisticated bots can use algorithms to mimic human behavior by randomizing their timing, scrolling, clicking, and typing.

Generating noise or confusion: This means creating or manipulating data to mislead a bot detection solution. It targets machine learning models, which learn from patterns in data to make predictions. Bots can use adversarial techniques such as adding irrelevant or false data, modifying existing data, or creating fake feedback loops, which can help them bypass machine learning-based blocking.

Preventing Bot Traffic

Website owners can employ a variety of bot prevention methods and bot detection systems to protect their sites from automated attacks. Here are some effective strategies:

| Method | Purpose | How it works |
|---|---|---|
| Creating robots.txt files | Instruct bots which parts of the site they can or cannot crawl. | A robots.txt file is placed in the root directory of the website, specifying which user agents (bots) are allowed or disallowed from accessing certain parts of the site. |
| Implementing CAPTCHA tests | Distinguish between human users and bots by presenting challenges that are difficult for bots to solve. | CAPTCHAs can be text-based, image-based, or involve other interactive challenges that a user must solve to proceed. |
| Setting request rate limits | Prevent excessive requests from a single IP address, which could indicate bot activity. | Rate limiting restricts the number of requests an IP can make within a certain timeframe, blocking or slowing down incoming traffic that exceeds these limits. |
| Using honeypot traps | Lure and detect malicious bots by setting traps that are invisible to human users but detectable by bots. | Honeypots can be hidden form fields or links that, when interacted with, flag the activity as bot-related. |
| Deploying Web Application Firewalls (WAFs) | Protect web and mobile applications by filtering and monitoring HTTP traffic between the application and the Internet. | Web application firewalls use a set of rules to block common attack patterns and can be configured to manage bot traffic. |
| Implementing dedicated bot detection software | Analyze traffic to identify bots and block malicious ones while allowing legitimate visitors. | These systems use techniques like behavioral analysis, IP reputation, machine learning, and device fingerprinting to distinguish between bots and human users. |
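
To make the robots.txt method concrete, the sketch below parses a small example file with Python's standard `urllib.robotparser` module and checks what a compliant crawler would be allowed to fetch. The file contents, the bot names `GoodBot` and `BadBot`, and the `example.com` URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: everyone is kept out of /admin/,
# and one named bot is asked to stay away from the whole site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

User-agent: BadBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

blog_allowed = parser.can_fetch("GoodBot", "https://example.com/blog/post")
admin_allowed = parser.can_fetch("GoodBot", "https://example.com/admin/users")
badbot_allowed = parser.can_fetch("BadBot", "https://example.com/blog/post")
```

Keep in mind that robots.txt is advisory: well-behaved crawlers honor it, but a malicious bot can simply ignore the file, so it needs to be combined with the enforcement methods in the table.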

Solutions for Block-free Web Scraping

Infatica's Scraper API is designed to facilitate block-free web scraping: it can help users collect data from websites without being blocked by common anti-scraping mechanisms. Here are its key features:

- Robust Proxy Network: Scraper API uses a large pool of residential proxies that can decrease CAPTCHAs, request blocks, and blacklists, allowing for uninterrupted scraping.

- JavaScript Rendering: The API features full JavaScript rendering, Ajax support, and pagination handlers, enabling the scraping of dynamic content and complex websites.

- User-Friendly: Infatica aims to make web scraping efficient for power users and intuitive for home users, handling the technical aspects like proxy management.

- Data Extraction: Users can extract data from websites in any format of their choice, streamlining the data collection process and avoiding dynamic fingerprinting.

- Multiple Formats: The API supports exporting data in popular formats like CSV, XLSX, and JSON, providing flexibility in how the data is organized and analyzed.

- Stable and Reliable: Infatica has designed its Scraper API with performance and connection stability in mind, ensuring consistent and reliable scraping operations.