With the vast amount of information available online, scraping has become an essential tool for businesses, researchers, and developers looking to gather data for analysis, monitoring, or automation. In this article, we’ll delve into how to use Excel VBA to perform web scraping. While Excel is primarily known as a spreadsheet application, its built-in VBA scripting language offers powerful capabilities for automating tasks within Excel, including web scraping. By leveraging VBA, you can automate the process of extracting data from websites directly into your Excel workbook.

Prerequisites

Excel VBA is an attractive option for beginners and intermediate users because it’s relatively easy to use and doesn’t require any additional software. With just a basic understanding of Excel and VBA, you can begin scraping and organizing data with minimal setup. Moreover, it seamlessly integrates with the Excel environment, making it easy to process and analyze the scraped data right away.

Before diving into web scraping with Excel VBA, there are a few essential prerequisites to ensure a smooth experience:

1. Basic Knowledge of Excel and VBA

To effectively use VBA for web scraping, you should have a basic understanding of:

- Excel functions: Familiarity with Excel’s interface and working with worksheets.

- VBA basics: Understanding how to open the VBA editor, write simple macros, and debug code. If you’re new to VBA, consider exploring Excel’s built-in macro recorder and reviewing some beginner-friendly tutorials.

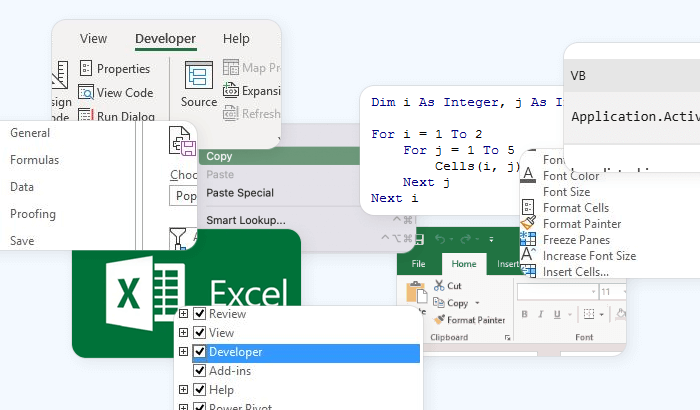

2. Excel Developer Tab

The Developer tab provides access to the VBA editor and tools needed to write and manage your scraping scripts. If the Developer tab is not visible in your Excel ribbon, follow these steps to enable it:

- Go to File > Options.

- Select Customize Ribbon.

- In the right pane, check the box for Developer and click OK.

3. Inspecting Web Pages

To scrape data from a website, you’ll need to understand the structure of its HTML. Most modern web browsers include developer tools to inspect the HTML of a webpage – through these tools, you can locate the specific HTML elements containing the data you want to extract:

- In Google Chrome: Right-click on any part of the page and select Inspect.

- In Microsoft Edge: Use the same steps as Chrome.

- In Mozilla Firefox: Right-click and choose Inspect Element.

4. Limitations and Expectations

While Excel VBA is a powerful tool for small-scale scraping tasks, it has its limitations:

- Performance: VBA is not optimized for high-speed or large-scale scraping compared to languages like Python or Go.

- JavaScript handling: VBA struggles with JavaScript-heavy websites. Additional tools like Selenium or manual adjustments may be required for such cases.

- Website restrictions: Always respect the terms of service of the websites you’re scraping and ensure your scraping activities are ethical and legal.

Setting Up the Environment

Now that you’re familiar with the prerequisites, it’s time to set up your Excel VBA environment for web scraping.

1. Open the VBA Editor

The VBA Editor is where you’ll write and manage your code. To open it:

- Navigate to the Developer tab.

- Click Visual Basic, or press

Alt + F11.

This will open the VBA Editor in a new window, providing an interface for creating and editing your macros.

2. Create a New Module

To write your web scraping code, you’ll need to insert a new module:

- In the VBA Editor, go to Insert > Module.

- A blank code window will appear, ready for your VBA script.

You can rename the module by right-clicking on it in the Project Explorer and selecting Rename. Choose a name relevant to your project, such as WebScraper.

3. Add Necessary References

VBA requires certain libraries to handle HTTP requests and HTML parsing. Enable these references in your VBA project:

- In the VBA Editor, click Tools > References.

- In the list of available references, check the boxes for

Microsoft HTML Object LibraryandMicrosoft XML, v6.0(or the latest version available on your system). - Click OK to save.

4. Organize Your Workbook

Before you begin coding, organize your Excel workbook for easy data storage. Create a new worksheet named ScrapedData or something similar. Use the first row for column headers that describe the data you’ll scrape, such as Title, Price, URL, or any other fields relevant to your project.

5. Test the Setup

To ensure everything is set up correctly, create a simple test macro. In your newly created module, enter the following VBA code:

Sub TestSetup()

MsgBox "VBA environment is ready!"

End Sub

Close the VBA Editor and return to Excel. 3. In the Developer tab, click Macros, select TestSetup, and click Run. If everything is working correctly, a message box saying "VBA environment is ready!" should appear.

Understanding the Basics of Web Scraping in VBA

Web scraping involves extracting data from websites by sending requests, receiving responses, and processing the resulting content – and Excel VBA offers tools to accomplish this using built-in libraries.

1. Core Components of Web Scraping in VBA

To scrape data using VBA, you need to understand the following components:

HTTP requests and responses: Websites deliver data through the HTTP protocol. VBA can send requests to servers using the MSXML2.XMLHTTP object and receive responses containing the HTML of a webpage.

HTML parsing: Web pages are written in HTML, a structured language used to define the layout and content of a page. Using the HTMLDocument object in VBA, you can parse and navigate through the HTML to extract the data you need.

DOM (Document Object Model): The DOM represents a webpage’s structure as a tree of elements. Each element corresponds to an HTML tag (e.g., <div>, <a>, <span>). VBA interacts with these elements to find and extract data.

2. Common VBA Objects for Web Scraping

Here are the key VBA objects and methods used for web scraping:

MSXML2.XMLHTTP: This object allows VBA to send HTTP requests and retrieve the HTML content of web pages. It supports methods such as Open, Send, and ResponseText to communicate with web servers. Here's an example code snippet:

Dim http As Object

Set http = CreateObject("MSXML2.XMLHTTP")

http.Open "GET", "https://example.com", False

http.Send

MsgBox http.ResponseText ' Displays the HTML of the page

HTMLDocument: The HTMLDocument object, part of the Microsoft HTML Object Library, enables VBA to parse and navigate HTML content. You can use it to access elements by tag, class, or ID.

Dim html As Object

Set html = CreateObject("HTMLFile")

html.body.innerHTML = http.ResponseText

MsgBox html.getElementsByTagName("title")(0).innerText ' Extracts the page title

QueryTables: This method can fetch data from simpler, table-based websites directly into Excel. It’s less flexible than XMLHTTP but suitable for some tasks.

With ActiveSheet.QueryTables.Add(Connection:="URL;https://example.com", Destination:=Range("A1"))

.Refresh

End With

Step-by-Step Guide to Web Scraping with Excel VBA

Let’s break the process into four manageable steps: inspecting the webpage, making HTTP requests, parsing and extracting data, and storing the data in Excel:

1. Open a Website and Inspect Its Structure

Before writing any VBA code, you need to understand the structure of the webpage you want to scrape. For this example, we’ll scrape a hypothetical product listing page.

1. Open the webpage: Navigate to the webpage containing the data you want to scrape, such as product names and prices.

2. Inspect elements using developer tools: Right-click on an element of interest (e.g., a product name or price) and select Inspect (or Inspect Element in some browsers). In the developer tools pane, look for the HTML tags surrounding the data. For instance:

<h2 class="product-name">Sample Product</h2>

<span class="product-price">$19.99</span>

Note the tags (<h2>, <span>) and any identifying attributes like class or id.

3. Plan your extraction: Identify the specific attributes (e.g., class="product-name") that make the data unique. Determine how you’ll access multiple items, such as looping through a list of products.

2. Write VBA Code to Make HTTP Requests

Now, let’s fetch the HTML content of the webpage using VBA.

1. Open the VBA Editor: Press Alt + F11 in Excel to open the VBA Editor, then insert a new module (Insert > Module).

2. Set Up the HTTP Request: Use the MSXML2.XMLHTTP object to send a GET request to the target website. Add the following code:

Sub FetchWebPage()

Dim http As Object

Dim url As String

Dim response As String

' Define the target URL

url = "https://example.com/products"

' Create an XMLHTTP object

Set http = CreateObject("MSXML2.XMLHTTP")

http.Open "GET", url, False

http.Send

' Store the response HTML

response = http.ResponseText

' Display the HTML (optional)

MsgBox response

End Sub

This code snippet sends an HTTP GET request to the URL – and then retrieves the HTML content of the page and stores it in the response variable.

🏸 Tip: Test the code by running it (go to the Developer tab, click Macros, select FetchWebPage, and click Run). A message box will display the page’s HTML.

3. Parse and Extract Specific Data Using the HTML Document Object Model (DOM)

After fetching the webpage, the next step is to parse its HTML and extract the desired elements.

1. Set up the HTML parsing: Use the HTMLDocument object to load and process the HTML. Modify the previous code to include parsing:

Sub ExtractData()

Dim http As Object

Dim html As Object

Dim productNames As Object

Dim productPrices As Object

Dim url As String

Dim i As Integer

' Define the target URL

url = "https://example.com/products"

' Fetch the HTML content

Set http = CreateObject("MSXML2.XMLHTTP")

http.Open "GET", url, False

http.Send

' Load the HTML into an HTMLDocument object

Set html = CreateObject("HTMLFile")

html.body.innerHTML = http.ResponseText

' Find elements by class name

Set productNames = html.getElementsByClassName("product-name")

Set productPrices = html.getElementsByClassName("product-price")

' Test the extraction (display the first product name and price)

MsgBox "First product: " & productNames(0).innerText & " - " & productPrices(0).innerText

End Sub

2. Iterate over multiple items: To extract all products, loop through the lists of product names and prices:

For i = 0 To productNames.Length - 1

Debug.Print productNames(i).innerText & " - " & productPrices(i).innerText

Next i

This will output the names and prices to the Immediate Window in the VBA Editor (Ctrl + G to view).

4. Store Extracted Data in Excel

Finally, store the extracted data in your Excel worksheet.

1. Add data to a worksheet: Modify the code to write the product names and prices into the ScrapedData worksheet:

Sub ScrapeAndStore()

Dim http As Object

Dim html As Object

Dim productNames As Object

Dim productPrices As Object

Dim i As Integer

Dim url As String

Dim ws As Worksheet

' Define the target URL

url = "https://example.com/products"

' Set the worksheet

Set ws = ThisWorkbook.Sheets("ScrapedData")

ws.Cells.Clear

ws.Cells(1, 1).Value = "Product Name"

ws.Cells(1, 2).Value = "Price"

' Fetch and parse the webpage

Set http = CreateObject("MSXML2.XMLHTTP")

http.Open "GET", url, False

http.Send

Set html = CreateObject("HTMLFile")

html.body.innerHTML = http.ResponseText

' Extract and store the data

Set productNames = html.getElementsByClassName("product-name")

Set productPrices = html.getElementsByClassName("product-price")

For i = 0 To productNames.Length - 1

ws.Cells(i + 2, 1).Value = productNames(i).innerText

ws.Cells(i + 2, 2).Value = productPrices(i).innerText

Next i

MsgBox "Data successfully scraped and stored in Excel!", vbInformation

End Sub

2. Run the script: Ensure you have a worksheet named ScrapedData. Run the ScrapeAndStore macro. The product names and prices will be populated in the worksheet.

Troubleshooting and Common Issues

While web scraping in VBA can be straightforward, there are several challenges that may arise during the scraping process. In this chapter, we will explore common issues you might encounter, such as handling timeouts, errors, and dealing with JavaScript-heavy websites or blocked requests.

1. Handling Timeouts and Errors

When scraping websites, issues like timeouts or unexpected errors are common. Let's learn how we can handle them effectively!

Timeouts occur when the server takes too long to respond to your request. By default, VBA’s MSXML2.XMLHTTP object may not wait long enough for a response, especially on slower websites. You can modify the timeout settings by using the setTimeouts method of the XMLHTTP object. Here’s an example:

Sub FetchWithTimeout()

Dim http As Object

Dim url As String

url = "https://example.com/products"

Set http = CreateObject("MSXML2.XMLHTTP")

' Set the timeout values in milliseconds: (resolve timeout, connect timeout, send timeout, receive timeout)

http.setTimeouts 5000, 5000, 5000, 5000 ' 5 seconds for each

On Error GoTo HandleError

http.Open "GET", url, False

http.Send

MsgBox "Page fetched successfully!"

Exit Sub

HandleError:

MsgBox "An error occurred: " & Err.Description, vbCritical

End Sub

In this code, the setTimeouts method is used to define the time limits for the connection, request, and response stages. The On Error GoTo HandleError line captures and handles any errors that might occur during the HTTP request.

For general error handling, VBA's On Error statement is invaluable. It helps prevent the script from failing abruptly and allows you to capture error details.

Sub FetchWithErrorHandling()

On Error GoTo ErrorHandler

' Code that may cause an error

Dim http As Object

Set http = CreateObject("MSXML2.XMLHTTP")

http.Open "GET", "https://example.com/products", False

http.Send

MsgBox "Request sent successfully!"

Exit Sub

ErrorHandler:

MsgBox "An error occurred: " & Err.Description, vbCritical

End Sub

This will display a message box with the error description if something goes wrong.

2. Working Around JavaScript-Heavy Sites

Many modern websites use JavaScript to load content dynamically after the page is initially loaded. Since VBA’s XMLHTTP object only fetches the raw HTML and doesn’t execute JavaScript, you may not be able to extract content from these sites directly.

Solution A: Use a headless browser or automation tools. VBA cannot directly execute JavaScript, but you can use a tool like Selenium to control a browser and scrape data from JavaScript-heavy websites. Selenium can interact with JavaScript content by rendering the page in a browser. Here’s an example using Selenium with VBA:

- Install

Selenium WebDriverandSeleniumBasicpackage, which include a set of libraries for controlling the browser.

2. Basic Selenium VBA Script:

Sub ScrapeWithSelenium()

Dim driver As New WebDriver

Dim url As String

' Set URL

url = "https://example.com/products"

' Start Chrome browser (or use other browser drivers like Firefox)

driver.Start "chrome"

' Navigate to the URL

driver.Get url

' Wait for the page to load fully (adjust the waiting time as needed)

driver.Wait 5000

' Extract the product names and prices

Dim productNames As Object

Set productNames = driver.FindElementsByClass("product-name")

Dim i As Integer

For i = 0 To productNames.Count - 1

Debug.Print productNames.Item(i).Text

Next i

' Quit the driver (close the browser)

driver.Quit

End Sub

In this example, we launch Chrome using Selenium, navigate to the page, and extract the product names. Selenium waits for JavaScript to load content before scraping.

Solution B: Look for an API. Sometimes websites that use JavaScript to load content also provide an API (Application Programming Interface) that returns the same data in a structured format like JSON. You can use VBA’s XMLHTTP object to send requests to the API and retrieve the data without having to render the page.

3. Dealing with Blocked Requests or CAPTCHA

Some websites may block scraping requests if they detect unusual activity, such as multiple rapid requests from the same IP address. They may also serve CAPTCHA challenges to prevent automated scraping.

Solution A: Use proxies. Websites may block your IP if they notice too many requests coming from the same source. Using a proxy server can help distribute the requests across multiple IP addresses, making it harder for the site to detect and block scraping activity.

Here’s an example of using a proxy:

http.setProxy "http", "proxyserver.com", 8080

Solution B: Randomizing User-Agent headers. One way to avoid being blocked is by using a random or rotating User-Agent header. This simulates different browsers, making it harder for the site to detect a bot.

Example code snippet:

Sub ScrapeWithUserAgent()

Dim http As Object

Dim url As String

Dim userAgent As String

url = "https://example.com/products"

' Create an XMLHTTP object

Set http = CreateObject("MSXML2.XMLHTTP")

' Randomize the User-Agent header

userAgent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36"

http.Open "GET", url, False

http.setRequestHeader "User-Agent", userAgent

http.Send

MsgBox "Page fetched with User-Agent header"

End Sub

Frequently Asked Questions

VBA's XMLHTTP object cannot execute JavaScript, so dynamic content rendered by JavaScript won’t be accessible. To scrape such sites, consider using a tool like Selenium, which controls a browser and can interact with JavaScript-driven pages to extract the data.

You can easily store scraped data in Excel by writing the extracted data to a worksheet using VBA. Use the Cells(i, j).Value method to place data into specific rows and columns. For more advanced storage, consider using Excel tables or exporting the data to a CSV file.