Web scraping often involves combining several tools and techniques to extract and manipulate data, and curl is one of the best tools you can master. In this guide, we’ll explore how to use curl for web scraping, covering everything from basic commands to more advanced techniques. After completing this tutorial, you’ll have a solid understanding of how to efficiently and ethically scrape data from the web using curl.

What is curl?

Curl is a versatile and powerful command-line tool used to transfer data to or from a server, using a wide variety of protocols such as HTTP, HTTPS, FTP, and more. Its name stands for "Client URL", and it’s commonly used by developers and system administrators for tasks ranging from simple file downloads to complex API interactions. One of the most useful applications of curl is web scraping, where it can be used to automate the extraction of data from websites.

Web scraping allows you to:

- Automate repetitive tasks: Instead of manually copying and pasting information, curl can automate the process of data collection.

- Access large datasets: You can gather large amounts of data from multiple pages or websites, which can be valuable for research and analysis.

- Monitor website changes: Curl can help you keep track of changes on websites, such as price drops, new products, or updated content.

Understanding HTTP requests

To effectively use curl for web scraping, it’s essential to understand how HTTP requests work. HTTP (Hypertext Transfer Protocol) is the foundation of data communication on the web, and knowing how to interact with it will allow you to retrieve and manipulate the data you need.

Common HTTP methods

HTTP methods are used to indicate the desired action to be performed on a resource. The most common methods you’ll use with curl for web scraping are GET and POST, but it’s helpful to understand the others as well:

GET: The GET method is used to request data from a specified resource. This is the most common method used in web scraping because it retrieves the content of a web page.

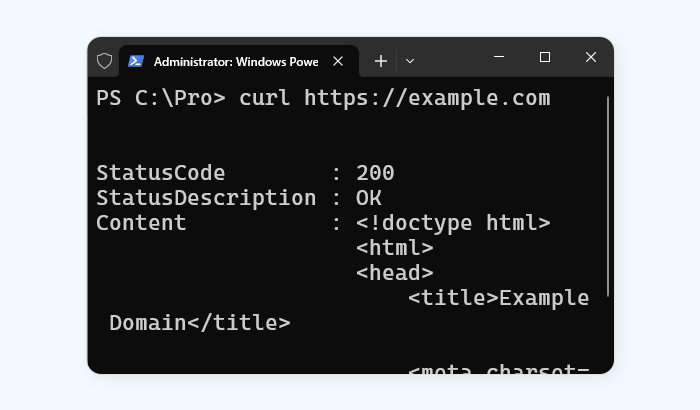

curl https://example.com

POST: The POST method is used to send data to a server to create or update a resource. This is often used when submitting forms on a website.

curl -X POST -d "param1=value1&param2=value2" https://example.com/form

PUT: The PUT method replaces all current representations of the target resource with the uploaded content. While less common in web scraping, it’s useful for API interactions.

DELETE: The DELETE method removes the specified resource. Like PUT, it’s more relevant to API usage but is good to know.

Curl allows you to specify the HTTP method using the -X flag, followed by the method name (e.g., -X POST).

Headers and cookies

HTTP headers provide essential metadata about the request or response. In web scraping, headers can be crucial for mimicking a browser’s request to avoid detection or to pass necessary information.

Custom headers: You can use the -H flag to include custom headers in your requests. For example, the User-Agent header identifies the client making the request. By default, curl uses its own user agent, but you can change it to mimic a browser.

curl -H "User-Agent: Mozilla/5.0" https://example.com

Cookies: Cookies are small pieces of data stored by the browser to maintain session information. Many websites require cookies to function correctly, especially if you’re logging in or navigating between pages. To send cookies with curl, use the -b flag followed by the cookie string or file:

curl -b "name=value" https://example.com

You can also save cookies to a file using the -c flag and reuse them later:

curl -c cookies.txt https://example.com

curl -b cookies.txt https://example.com/next-page

User-Agent and Referer

Websites often rely on headers like User-Agent and Referer to identify and track users. Modifying these headers can help you avoid detection or access resources that are restricted based on these values.

User-Agent: As mentioned, the User-Agent header lets the server know what client is making the request. Many websites block requests with unknown or missing user agents. By setting the User-Agent to mimic a popular browser, you can often bypass these restrictions.

curl -H "User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64)" https://example.com

Referer: The Referer header indicates the URL of the page that led to the request. Some websites restrict access to certain pages unless the request comes from a specific referer. You can set this header with the -e flag:

curl -e https://example.com https://example.com/target-page

Extracting data from web pages

Once you’ve successfully made an HTTP request using curl, the next step in web scraping is to extract the relevant data from the response. This section covers saving the response data, parsing HTML, and handling JSON, the two formats you’ll encounter most often.

Saving response data

After making a request with curl, the response data (such as the HTML content of a web page) is typically displayed directly in your terminal. However, for web scraping purposes, it’s often more useful to save this data to a file so that you can analyze or process it further.

Saving to a file: You can save the output of a curl request to a file using the -o (lowercase) or -O (uppercase) options.

-o: This option allows you to specify a filename for the output.

curl https://example.com -o output.html

-O: This option saves the file under its remote name, which curl takes from the last part of the URL path (page.html in the example below).

curl -O https://example.com/page.html

Appending data to a file: If you need to append data to an existing file, redirect curl’s output with the shell’s >> operator.

curl https://example.com >> output.html

Parsing HTML

The most common format you’ll encounter when web scraping is HTML, which structures the content of web pages. Extracting specific data from HTML requires parsing, and while curl itself doesn’t have built-in parsing capabilities, you can combine it with other command-line tools like grep, awk, and sed.

Using grep: grep is a powerful tool for searching through text. You can use it to filter out lines of HTML that contain specific tags or keywords.

curl https://example.com | grep "<title>"

This command prints every line of the HTML that contains an opening title tag.

Using sed: sed is a stream editor that can be used to manipulate text. It’s useful for stripping out unwanted HTML tags or extracting specific content.

curl https://example.com | sed -n 's/.*<title>\(.*\)<\/title>.*/\1/p'

This command extracts just the text inside the title tag.

Using awk: awk is a versatile programming language for pattern scanning and processing. It can be used to extract more complex patterns or manipulate multiple fields of data.

curl https://example.com | awk -F'[<>]' '/<title>/ {print $3}'

This command also extracts the text within the title tag, but awk can be extended to handle more complex cases.
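
The same pipe-based approach extends to other elements. As a minimal sketch, the snippet below pulls every href value out of a page. The sample HTML is made up and stands in for the output of curl -s https://example.com, and the pattern assumes double-quoted attributes. Regex-based extraction like this tends to be fragile on real-world markup, so treat it as a starting point rather than a general HTML parser.

```shell
# Made-up sample HTML standing in for: html=$(curl -s https://example.com)
html='<html><head><title>Example Domain</title></head>
<body><a href="/about">About</a> <a href="https://example.com/contact">Contact</a></body></html>'

# grep -o prints every match on its own line; sed strips the href="..." wrapper.
links=$(printf '%s\n' "$html" | grep -o 'href="[^"]*"' | sed 's/^href="//; s/"$//')

printf '%s\n' "$links"
```

Both links sit on the same line of the sample, which is why grep -o (one match per output line) is used rather than plain grep.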

Dealing with JSON

Another common format you might encounter, especially when interacting with APIs, is JSON (JavaScript Object Notation). JSON is a lightweight data-interchange format that’s easy for both humans and machines to read and write.

Using jq: jq is a powerful command-line tool for processing JSON. You can use it in conjunction with curl to parse and filter JSON data.

curl https://api.example.com/data | jq '.key'

This command extracts the value of the top-level field named key from the JSON response.

Filtering JSON data: You can use jq to filter specific fields or even transform JSON data.

curl https://api.example.com/data | jq '.[] | {name: .name, value: .value}'

This command would extract and display only the name and value fields from each object in a JSON array.

Saving JSON data: Just like with HTML, you can save JSON responses to a file for later processing.

curl https://api.example.com/data -o data.json
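
To illustrate filtering on a condition, here is a small sketch that keeps only the objects whose value exceeds a threshold and prints them as plain name=value lines. The JSON payload is invented for the example and stands in for a real response, and the script checks for jq first because jq is a separate tool that may not be installed.

```shell
# Made-up sample payload standing in for: json=$(curl -s https://api.example.com/data)
json='[{"name":"cpu","value":42},{"name":"disk","value":87},{"name":"ram","value":63}]'

if command -v jq >/dev/null 2>&1; then
  # select() keeps objects whose value exceeds 50; -r prints raw strings without quotes.
  high=$(printf '%s' "$json" | jq -r '.[] | select(.value > 50) | "\(.name)=\(.value)"')
  printf '%s\n' "$high"
else
  echo "jq is not installed" >&2
fi
```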

Extracting and working with text data

Sometimes, the data you need may not be in HTML or JSON, but rather in plain text or CSV format. Curl can still be useful in these cases, and you can use similar command-line tools to parse and manipulate this data.

Extracting text: For text data, you might simply need to filter out the relevant lines or columns.

curl https://example.com/data.txt | grep "keyword"

Handling CSV files: If you’re scraping data in CSV format, you can use tools like awk or csvkit to process the data.

curl https://example.com/data.csv | awk -F',' '{print $1, $2}'

This command extracts and displays the first two columns of a CSV file.
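
awk can also filter rows while it selects columns. The sketch below skips the header row and keeps only the products that are in stock. The CSV content and its column layout (name, price, stock) are assumptions made for the example, and splitting on commas only works when no field contains a quoted comma; for such files a CSV-aware tool like csvkit is the safer choice.

```shell
# Made-up sample data standing in for: csv=$(curl -s https://example.com/data.csv)
csv='name,price,stock
widget,4.50,10
gadget,12.00,0
gizmo,7.25,3'

# NR > 1 skips the header row; $3 > 0 keeps rows whose third column is positive.
in_stock=$(printf '%s\n' "$csv" | awk -F',' 'NR > 1 && $3 > 0 {print $1 "\t" $2}')

printf '%s\n' "$in_stock"
```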

Handling complex requests

As you progress with web scraping using curl, you’ll encounter scenarios where simple GET requests are not sufficient to retrieve the desired data. Websites may require form submissions, session management, or involve redirects. Let’s see how to handle these more complex requests, allowing you to scrape data from more sophisticated and dynamic web pages.

Forms and POST requests

Many websites use forms to collect input from users, which are then sent to the server via POST requests. Scraping such sites requires replicating the form submission process.

Submitting a simple form: To submit a form with curl, use the -d option to pass the form data as key-value pairs. The -X POST option sets the method explicitly, but it is redundant here because curl switches to POST automatically whenever you use -d.

curl -d "username=user&password=pass" -X POST https://example.com/login

This example simulates a login form submission with a username and password.

Submitting forms with multiple fields: For forms with multiple fields, simply list all the key-value pairs, separating them with &.

curl -d "field1=value1&field2=value2&field3=value3" https://example.com/form

Sending form data in JSON format: Some modern web applications expect form data in JSON format. You can send JSON data using the -H option to specify the Content-Type as application/json, and then use -d to send the JSON data.

curl -H "Content-Type: application/json" -d '{"key1":"value1","key2":"value2"}' https://example.com/api
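
Form values that contain spaces, ampersands, or other reserved characters need to be URL-encoded, which plain -d does not do for you. One way to handle this is curl’s --data-urlencode option, sketched below. The helper name submit_search, the URL, and the field names are placeholders chosen for illustration.

```shell
# submit_search is a hypothetical helper; the URL and field names are placeholders.
submit_search() {
  # --data-urlencode percent-encodes each value, so spaces and & arrive intact.
  # Several --data-urlencode options are joined with & into one POST body.
  curl -s \
    --data-urlencode "query=$1" \
    --data-urlencode "category=$2" \
    https://example.com/search
}

# Usage (performs a real POST request):
# submit_search "blue & green widgets" "home/garden"
```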

Handling sessions and cookies

Web scraping often involves interacting with websites that require a login or maintain user sessions. Curl allows you to manage sessions using cookies, enabling you to maintain the same session across multiple requests.

Saving cookies: When you log in to a website, the server usually sends back a cookie that identifies your session. You can save this cookie to a file using the -c option.

curl -c cookies.txt -d "username=user&password=pass" https://example.com/login

Using saved cookies: Once you’ve saved the cookies, you can reuse them in subsequent requests by using the -b option. This allows you to perform actions that require authentication, such as accessing user-specific pages.

curl -b cookies.txt https://example.com/dashboard

Maintaining session continuity: By combining -c and -b, you can continuously update and use the same cookie file, maintaining session continuity across multiple requests.

curl -c cookies.txt -b cookies.txt https://example.com/page

Handling redirects

Web pages often use redirects to forward users from one URL to another. Curl, by default, doesn’t follow redirects, but you can enable this behavior with a simple option.

Following redirects: To automatically follow redirects, use the -L option. This tells curl to follow any HTTP 3xx responses (like 301 or 302) and fetch the final destination URL.

curl -L https://example.com/redirect

Limiting the number of redirects: With -L enabled, curl follows up to 50 redirects by default. If you need to lower this limit, you can use the --max-redirs option.

curl -L --max-redirs 5 https://example.com/redirect
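
When following redirects, it can help to see where a request actually ended up. A possible sketch uses curl’s -w (write-out) option to print the final status code, the number of redirects followed, and the final URL. The helper name final_url is made up for this example.

```shell
# final_url is a hypothetical helper name: final_url URL
final_url() {
  # -o /dev/null discards the body; -w prints selected transfer details afterwards.
  curl -sL --max-redirs 5 -o /dev/null \
    -w '%{http_code} %{num_redirects} %{url_effective}\n' "$1"
}

# Usage (performs a real request):
# final_url https://example.com/redirect
```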

Dealing with JavaScript-heavy sites

One of the limitations of curl is that it cannot execute JavaScript. This means that any data loaded dynamically by JavaScript after the initial page load will not be captured by curl. However, there are some workarounds you can use:

Look for alternative endpoints: Sometimes, JavaScript loads data from separate API endpoints that return JSON or other data formats. Inspecting the network traffic using browser developer tools can help you identify these endpoints, which you can then query directly with curl.

curl https://example.com/api/data

Use headless browsers or other tools: For more complex cases where JavaScript execution is essential, you might need to use headless browsers like Puppeteer or tools like Selenium. These tools can automate browser behavior, including JavaScript execution, though they are more complex and require additional setup beyond curl.

Handling rate limiting and CAPTCHAs

As you scrape more complex sites, you might encounter rate limiting or CAPTCHAs, which are designed to prevent automated access.

Rate limiting: To avoid being blocked for sending too many requests in a short time, you can introduce delays between requests using a simple loop in a shell script.

for i in {1..10}; do

curl https://example.com/page$i

sleep 5

done

This script scrapes 10 pages, pausing for 5 seconds between each request.
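
A fixed delay does not tell you whether the server is already pushing back. As a rough sketch, the loop can be wrapped in a function that also inspects the HTTP status and stops as soon as the server answers 429 (Too Many Requests). The function name fetch_pages and its argument order are assumptions for illustration, and not every site signals rate limiting with a 429.

```shell
# fetch_pages is a hypothetical helper: fetch_pages BASE_URL FIRST LAST DELAY_SECONDS
fetch_pages() {
  i=$2
  while [ "$i" -le "$3" ]; do
    # -w '%{http_code}' prints the status code while -o saves the page body.
    code=$(curl -s -o "page$i.html" -w '%{http_code}' "$1$i")
    if [ "$code" = "429" ]; then
      echo "rate limited at page $i, stopping" >&2
      return 1
    fi
    i=$((i + 1))
    # Pause before the next request, but not after the last one.
    [ "$i" -le "$3" ] && sleep "$4"
  done
  return 0
}

# Usage (performs real requests):
# fetch_pages https://example.com/page 1 10 5
```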

Dealing with CAPTCHAs: CAPTCHAs are designed to differentiate between human users and bots, making them difficult to bypass with curl alone. If you encounter a CAPTCHA, you’ll likely need to either manually solve it or use a CAPTCHA-solving service, which typically involves more advanced scripting and additional tools beyond curl.

Combining techniques for complex scraping tasks: In real-world scenarios, you might need to combine several of these techniques to accomplish your scraping goals. For example, you could use a combination of session management, form submission, and redirect handling to log into a website, navigate to a specific page, and extract data from it.

# Step 1: Log in and save cookies

curl -c cookies.txt -d "username=user&password=pass" https://example.com/login

# Step 2: Follow redirect to the dashboard

curl -b cookies.txt -L https://example.com/dashboard

# Step 3: Scrape data from the user-specific page

curl -b cookies.txt https://example.com/user/data -o userdata.html
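
The three steps can also be chained so that a failed login stops the run before any data is requested. The following is a sketch under several assumptions: the helper name scrape_account is invented, the URLs and form fields are the placeholders used above, and the site is assumed to answer a rejected login with an HTTP error status. Many sites instead return a normal page containing an error message, in which case you would need to inspect the response body.

```shell
# scrape_account is a hypothetical helper: scrape_account USERNAME PASSWORD
scrape_account() {
  jar=$(mktemp) || return 1

  # -f turns HTTP errors (status 400 and above) into a non-zero exit code,
  # so a rejected login stops the chain before the data request is made.
  if curl -fsSL -c "$jar" \
        --data-urlencode "username=$1" --data-urlencode "password=$2" \
        -o /dev/null https://example.com/login &&
     curl -fsSL -b "$jar" https://example.com/user/data -o userdata.html
  then
    status=0
  else
    status=1
  fi

  rm -f "$jar"   # the jar holds a live session, so remove it when done
  return "$status"
}

# Usage (performs real requests):
# scrape_account user pass
```

Keeping the cookie jar in a temporary file and deleting it afterwards avoids leaving session cookies in the working directory.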

Practical examples

To solidify your understanding of web scraping with curl, let’s use these step-by-step guides for scraping different types of websites and API data.

Example A: Scraping a static website

Static websites are the simplest to scrape since their content is fully loaded when the page is requested. This example walks you through scraping the article titles from the homepage of a blog.

1. Identify the target URL: Start by identifying the URL of the page you want to scrape. For this example, let’s assume the blog’s homepage is https://example-blog.com.

2. Inspect the HTML structure: Open the page in your browser and use developer tools (usually accessible with F12 or right-clicking and selecting “Inspect”) to inspect the HTML structure. Look for the HTML tags that contain the article titles. Assume they are within <h2> tags with a class of article-title.

3. Make the HTTP request: Use curl to fetch the page content.

curl https://example-blog.com -o homepage.html

This command saves the HTML of the homepage to a file named homepage.html.

4. Extract the desired content: Use command-line tools like grep or sed to extract the titles from the saved HTML file.

grep -o '<h2 class="article-title">[^<]*</h2>' homepage.html | sed 's/<[^>]*>//g'

This command finds all <h2> tags with the class article-title and then strips the HTML tags, leaving only the text content.

5. Output the results: The titles of the articles are now displayed in your terminal. You can redirect this output to a file if needed:

grep -o '<h2 class="article-title">[^<]*</h2>' homepage.html | sed 's/<[^>]*>//g' > titles.txt

The extracted titles are now saved in titles.txt.
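
Steps 3 to 5 can be collapsed into a single reusable pipeline. The sketch below wraps the extraction in a function that reads HTML on standard input, trims stray whitespace, and removes duplicate titles. The function name extract_titles and the sample markup are made up for the example, and the pattern still assumes the exact class attribute described in step 2.

```shell
# extract_titles is a hypothetical helper that reads HTML on stdin.
extract_titles() {
  # 1) keep only the matching <h2> elements, 2) strip tags and trim whitespace,
  # 3) sort and drop duplicate titles.
  grep -o '<h2 class="article-title">[^<]*</h2>' |
    sed -e 's/<[^>]*>//g' -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//' |
    sort -u
}

# Made-up sample markup standing in for homepage.html
sample='<h2 class="article-title"> Second post </h2>
<h2 class="other">Not a title</h2>
<h2 class="article-title">First post</h2>'

titles=$(printf '%s\n' "$sample" | extract_titles)
printf '%s\n' "$titles"
```

Against the live site, the same function could be used as curl -s https://example-blog.com | extract_titles > titles.txt.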

Example B: Scraping a dynamic website with login

Some websites require you to log in before you can access certain data. This example shows how to log in to a website and scrape an account balance from a user-specific page.

1. Identify the login form: Inspect the login page to identify the form fields. Assume the login form at https://example-site.com/login has fields username and password.

2. Submit the login form: Use curl to submit the login credentials and save the session cookies.

curl -c cookies.txt -d "username=myuser&password=mypass" https://example-site.com/login

This command sends the login data and saves the session cookies to `cookies.txt`.

3. Access the user-specific page: Once logged in, navigate to the page that contains the account balance. Assume this page is at https://example-site.com/account.

curl -b cookies.txt https://example-site.com/account -o account.html

This command uses the saved cookies to maintain the session and downloads the account page to account.html.

4. Extract the account balance: Inspect the account.html file to find the HTML element containing the balance. Assume it’s within a <span> tag with an ID of balance.

grep -o '<span id="balance">[^<]*</span>' account.html | sed 's/<[^>]*>//g'

This command extracts the balance value from the HTML.

5. Output the results: The account balance is displayed in your terminal. Save it to a file if needed:

grep -o '<span id="balance">[^<]*</span>' account.html | sed 's/<[^>]*>//g' > balance.txt

The balance is now saved in balance.txt.

Example C: API data extraction

APIs often provide a structured and efficient way to access data from a website. This example demonstrates how to use curl to extract current weather data from an API endpoint.

1. Find the API endpoint: Identify the API endpoint for the weather data. Assume the endpoint is https://api.weather.com/v3/wx/conditions/current, and it requires parameters like location and apiKey.

2. Make the API request: Use curl to send a GET request to the API with the necessary parameters.

curl "https://api.weather.com/v3/wx/conditions/current?location=12345&apiKey=your_api_key"

This command sends the request and displays the response in JSON format.

3. Filter the desired data: Use the jq tool to parse the JSON response and extract specific fields, like the temperature and weather description.

curl "https://api.weather.com/v3/wx/conditions/current?location=12345&apiKey=your_api_key" | jq '.temperature, .weatherDescription'

This command extracts and displays the temperature and weather description.

4. Save the results: If you want to save the output to a file, redirect it as follows:

curl "https://api.weather.com/v3/wx/conditions/current?location=12345&apiKey=your_api_key" | jq '.temperature, .weatherDescription' > weather.txt

The extracted weather data is now saved in weather.txt.

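5. Handling errors: If the API returns an HTTP error (status 400 or higher), curl’s -f option makes curl exit with a non-zero status and suppress the error body. The exit status of a pipeline is that of its last command, so check curl’s result before handing the response to jq:

response=$(curl -fsS "https://api.weather.com/v3/wx/conditions/current?location=12345&apiKey=your_api_key") && echo "$response" | jq '.temperature, .weatherDescription' || echo "API request failed."

This command prints an error message if the request (or the jq filter) fails. Piping curl -f straight into jq would not, because jq exits successfully when it receives empty input.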
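
If you want to know which error occurred rather than just that one did, one option is to capture the HTTP status code separately from the body. The sketch below saves the response to a file and treats anything outside the 2xx range as a failure. The helper name fetch_json is an assumption made for this example.

```shell
# fetch_json is a hypothetical helper: fetch_json URL OUTPUT_FILE
fetch_json() {
  # -w '%{http_code}' prints the status code on stdout while -o saves the body.
  code=$(curl -sS -o "$2" -w '%{http_code}' "$1") || return 1
  if [ "$code" -ge 200 ] && [ "$code" -lt 300 ]; then
    return 0
  fi
  echo "request failed with HTTP $code" >&2
  return 1
}

# Usage (performs a real request):
# fetch_json "https://api.weather.com/v3/wx/conditions/current?location=12345&apiKey=your_api_key" weather.json \
#   && jq '.temperature, .weatherDescription' weather.json
```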

Automating curl requests

Web scraping often involves repetitive tasks, such as making multiple requests to the same website at different times or scraping data regularly. Automating these tasks can save time and ensure consistency. Let’s learn how to use bash scripting to automate curl requests and how to schedule these tasks using cron on Unix-based systems.

Scripting with bash

Bash scripts allow you to automate sequences of commands, making it easier to perform complex tasks with a single command. This section guides you through creating simple bash scripts to automate your curl requests.

1. Start with a basic script: Let’s say you want to automate the process of downloading a webpage. Start by creating a new file with a .sh extension.

nano scrape_site.sh

2. Add the curl command: Inside the script, add the curl command you wish to automate. For example, to download the homepage of a website:

#!/bin/bash

curl https://example.com -o homepage.html

The #!/bin/bash at the top is known as a shebang, which tells the system that this file should be executed using the bash shell.

3. Make the script executable: Save and close the file, then make the script executable using the chmod command.

chmod +x scrape_site.sh

4. Run the script: Now, you can run your script anytime by executing the following command:

./scrape_site.sh

Automating multiple requests

If you need to make multiple requests, you can use loops in your script. For example, to download multiple pages from a website:

#!/bin/bash

for i in {1..10}

do

curl "https://example.com/page$i" -o "page$i.html"

done

This script downloads pages 1 through 10 from the website and saves each page to a separate file.

Handling errors in scripts

To make your scripts more robust, you can add error handling. For example, using if statements to check the success of a curl request:

#!/bin/bash

for i in {1..10}

do

if curl -f "https://example.com/page$i" -o "page$i.html"; then

echo "Downloaded page$i.html successfully."

else

echo "Failed to download page$i.html" >&2

fi

done

This script logs a success message if a page is downloaded successfully and an error message if it fails.
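
Transient failures are often worth retrying before giving up. As one possible approach, the function below retries a download a limited number of times and doubles the pause after each failed attempt. The name fetch_with_retry, the default of three attempts, and the one-second starting delay are arbitrary choices for illustration.

```shell
# fetch_with_retry is a hypothetical helper:
#   fetch_with_retry URL OUTPUT_FILE [MAX_ATTEMPTS]
fetch_with_retry() {
  attempt=1
  max=${3:-3}
  delay=1
  while [ "$attempt" -le "$max" ]; do
    if curl -fsS "$1" -o "$2"; then
      return 0
    fi
    echo "attempt $attempt of $max failed for $1" >&2
    attempt=$((attempt + 1))
    # Wait before retrying, doubling the pause each time (1s, 2s, 4s, ...).
    if [ "$attempt" -le "$max" ]; then
      sleep "$delay"
      delay=$((delay * 2))
    fi
  done
  return 1
}

# Usage (performs real requests):
# fetch_with_retry https://example.com/page1 page1.html 5
```

curl also has built-in --retry and --retry-delay options; a hand-written loop is mainly useful when you want custom logging or a growing delay.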

Scheduling scrapes with cron

Once you’ve created a bash script for your curl requests, you might want to run it automatically at regular intervals. This can be accomplished using cron, a time-based job scheduler on Unix-based systems.

1. Access the cron table: To schedule a task, you need to add it to your `crontab` (cron table). Open the crontab editor using:

crontab -e

2. Define the schedule: The cron syntax is made up of five fields: minute, hour, day of the month, month, and day of the week. Each field can be set to a specific value or a wildcard (*) to match any value. For example, to run your script every day at midnight:

0 0 * * * /path/to/scrape_site.sh

This line tells cron to execute scrape_site.sh every day at 00:00 hours.

3. Save and exit: After adding your cron job, save and exit the editor. The cron job is now scheduled, and your script will run automatically at the specified time.

Monitoring and logging cron jobs

To ensure your cron jobs run successfully, it’s important to monitor their output. Cron does not show a job’s output in a terminal; depending on how the system is configured, it is mailed to the owner of the crontab or discarded. To keep a record, redirect the output to a log file:

0 0 * * * /path/to/scrape_site.sh >> /path/to/scrape_log.txt 2>&1

This redirects both standard output and error messages to scrape_log.txt.
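
A log file is easier to read when every line says when it was written. A small sketch of a logging helper that could go at the top of scrape_site.sh is shown below; the function name log and the UTC timestamp format are assumptions, not something cron requires.

```shell
# log is a hypothetical helper that prefixes each message with a UTC timestamp.
log() {
  printf '%s %s\n' "$(date -u '+%Y-%m-%dT%H:%M:%SZ')" "$*"
}

log "scrape started"
# ... curl commands go here ...
log "scrape finished"
```

With the redirection shown above, these lines end up in scrape_log.txt alongside any error messages from curl.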