Scrapy is a powerful and flexible framework that simplifies the process of web scraping. Using proxies with Scrapy is essential for any serious web scraping project, especially when dealing with sites that implement rate limiting, IP blocking, or geo-restrictions. In this article, we’ll take a closer look at various proxy types you can use with Scrapy: By setting up proxies correctly, you can enhance your scraping capabilities, avoid detection, and access a wider range of data.

What is Scrapy?

Scrapy is an open-source web crawling framework written in Python, designed for the purpose of extracting data from websites and processing it in various formats, such as CSV, JSON, and XML. It’s a powerful tool that developers and data scientists widely use for web scraping, data mining, and automating the process of collecting data from the web. Scrapy handles tasks such as sending HTTP requests, following links, parsing HTML content, and storing the extracted data in the desired format.

One of Scrapy’s key strengths lies in its flexibility and scalability. It can efficiently handle large-scale data extraction tasks, which makes it suitable for various applications ranging from simple data collection projects to complex web scraping operations.

How Scrapy works

Scrapy operates based on a simple yet powerful architecture. The framework revolves around a few key components:

- Spiders: These are classes that define how a certain site (or a group of sites) will be scraped, including how to follow links and how to extract data from the pages.

- Selectors: Scrapy uses CSS and XPath selectors to identify the parts of the HTML document to extract data from.

- Item pipeline: After data is scraped, it goes through a pipeline where it can be processed, cleaned, validated, and ultimately stored in the desired format.

- Middlewares: These are hooks into Scrapy’s request/response processing, where you can modify requests or responses (e.g., to handle proxies, user agents, or retries).

Use Cases for Scrapy

1. Price monitoring and comparison: E-commerce businesses and individuals can use Scrapy to monitor prices across different online retailers. This data is essential for dynamic pricing strategies, competitive analysis, and finding the best deals.

2. Data mining for market research: Companies can leverage Scrapy to extract valuable information from competitors’ websites, forums, social media, and review sites. This data can provide insights into customer sentiment, product trends, and industry developments.

3. Lead generation: By scraping directories, forums, and social media sites, Scrapy can be used to compile lists of potential leads. This is particularly useful for sales and marketing teams looking to build targeted outreach campaigns.

4. Content aggregation: Scrapy is an excellent tool for aggregating content from multiple sources into a single repository. For instance, news aggregators can use Scrapy to collect articles from different news websites and present them in a unified format.

5. Sentiment analysis: Businesses can scrape reviews, comments, and social media posts using Scrapy to perform sentiment analysis. This allows them to gauge public opinion about their products, services, or brand.

6. Real estate listings: Scrapy can be used to scrape real estate websites to collect data on property listings, including prices, locations, and features. This data can then be used for investment analysis, market research, or building real estate comparison platforms.

7. Academic research: Researchers often need large datasets from various sources to support their studies. Scrapy helps automate the collection of these datasets, which can be critical for research in fields like economics, social sciences, and data science.

8. Job scraping: Job boards and recruitment firms can use Scrapy to scrape job postings from various websites. This data can then be used to build a job aggregation service or to analyze job market trends.

Benefits of Using Scrapy

Efficiency: Scrapy is designed to be fast and efficient, handling multiple requests simultaneously. This allows users to scrape large amounts of data in a relatively short time.

Ease of use: Despite its powerful features, Scrapy is relatively easy to set up and use. It has an active community, comprehensive documentation, and numerous tutorials available, making it accessible even for those new to web scraping.

Extensibility: Scrapy is highly customizable. Users can extend its functionality through middlewares, pipelines, and custom components to suit their specific needs.

Built-in features: Scrapy comes with a range of built-in features such as handling cookies, sessions, redirects, and following links automatically. This reduces the amount of boilerplate code developers need to write.

Data integrity: The framework includes mechanisms to ensure data integrity and allows for error handling, making it robust and reliable for production use.

Community support: Being open-source with a large community of developers, Scrapy is continuously improved and updated. This also means that a wealth of resources and support is available for troubleshooting and learning.

Supports complex scraping projects: With its ability to manage multiple spiders, Scrapy can handle complex scraping tasks across multiple websites in parallel, making it ideal for projects that require data from various sources.

Importance of Proxies for Scrapy

When it comes to web scraping, especially with a powerful tool like Scrapy, using proxies is not just an option—it’s often a necessity. Proxies act as intermediaries between your Scrapy spiders and the target websites, masking your real IP address and providing several advantages that make them indispensable in web scraping. Here’s why using proxies with Scrapy is so popular:

Avoiding IP bans

One of the primary reasons to use proxies with Scrapy is to avoid getting your IP address banned by target websites. Many websites have measures in place to detect and block IP addresses that make too many requests in a short period. If you scrape without proxies, your real IP could be blocked, halting your scraping project. Proxies help you distribute requests across multiple IP addresses, making it less likely that any single IP will be flagged or banned.

Bypassing geo-restrictions

Some websites display different content based on the user’s geographical location. For instance, e-commerce sites might show different prices or products depending on where you’re browsing from. By using proxies with IP addresses from different locations, you can access geo-restricted content and scrape data as if you were in those locations. This is particularly useful for price comparison, market research, and monitoring international competitors.

Enhancing anonymity

Proxies enhance the anonymity of your scraping activities by hiding your real IP address. This is crucial when you need to scrape data without revealing your identity, especially when dealing with sensitive or competitive data. Proxies can make it difficult for websites to track your activities or link multiple requests back to a single entity.

Handling rate limits

Many websites implement rate limiting, restricting the number of requests that can be made from a single IP address within a certain time frame. By using a pool of proxies, Scrapy can distribute requests across multiple IPs, allowing you to scrape at a faster rate without hitting these limits. This means you can collect more data in less time, increasing the efficiency of your scraping operations.

Avoiding captcha challenges

Websites often use CAPTCHA challenges to distinguish between human users and bots. When Scrapy sends numerous requests from a single IP address, it’s more likely to trigger these challenges. By rotating proxies, you can reduce the likelihood of encountering CAPTCHAs, as your requests appear to come from different users. Some proxy providers even offer CAPTCHA-solving services as part of their offering, further streamlining the scraping process.

Accessing blocked websites

In some cases, your IP address might be blocked from accessing certain websites entirely, either due to previous scraping activities or because of restrictions imposed by your network or country. Proxies can help bypass these blocks by providing an alternative IP address that isn’t subject to the same restrictions, allowing you to continue scraping without interruption.

Simulating real-world user behavior

Proxies can be used to simulate real-world user behavior by rotating through different IP addresses, user agents, and session cookies. This makes your scraping activities appear more like genuine browsing activity, reducing the likelihood of detection and improving the quality of the data collected. For instance, using residential proxies with different browser fingerprints can help mimic how real users access a website.

Scraping from multiple accounts

If your scraping project involves interacting with a website that requires login credentials, such as scraping data from multiple user accounts, proxies allow you to log in from different IP addresses. This prevents the website from detecting multiple logins from the same IP, which could trigger security alerts or lead to account bans.

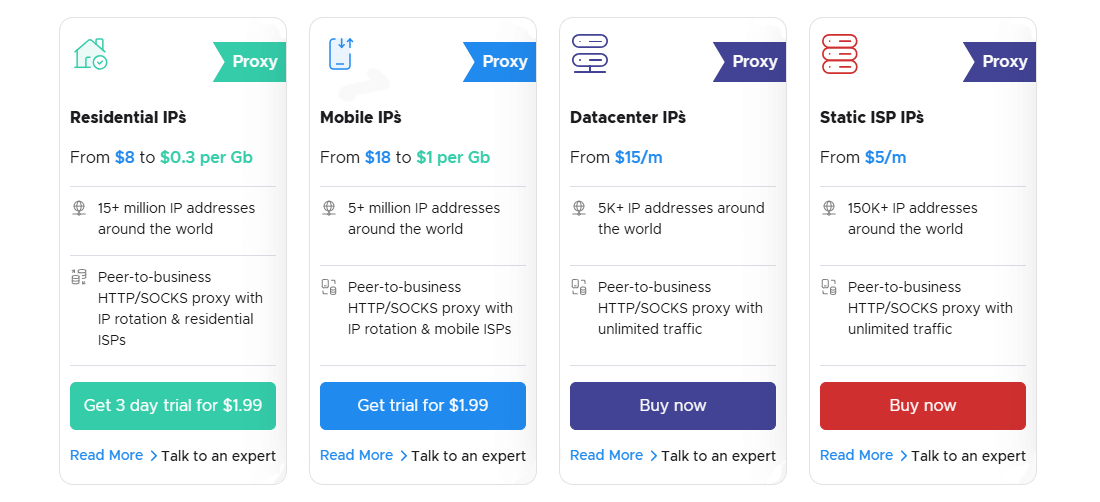

The Best Proxy for Scrapy

| Criteria | Residential Proxies | Mobile Proxies | Datacenter Proxies | ISP Proxies |

|---|---|---|---|---|

| IP source | Real user devices in residential areas | Real mobile devices (3G/4G/5G networks) | Data centers (server farms) | Real ISP-assigned IPs from data centers |

| Anonymity | High | Very High | Moderate | High |

| Speed | Moderate to High | Moderate to Low (due to mobile network speed) | Very High | High |

| Reliability | High (if from a trusted provider) | High (but can fluctuate with mobile network) | Moderate (can be detected by some websites) | High |

| Cost | Moderate to High | High | Low to Moderate | Moderate |

| IP rotation | Frequent (typically) | Frequent (as mobile IPs often change) | Optional (static or rotating available) | Optional (can be static or rotating) |

| Geo-location options | Extensive, often covers many residential areas | Extensive, but depends on mobile network coverage | Limited to data center locations | Moderate, depends on the ISP’s locations |

| Detection risk | Low (harder to detect and block) | Very Low (most difficult to detect and block) | High (easier to detect as non-residential IPs) | Low (similar to residential IPs) |

| Best use cases | Price monitoring. Market research. Social media scraping. | Sneaker sites.Social media automation.Geo-restricted content access. | Bulk data scraping. High-speed scraping. General-purpose web scraping. | E-commerce scraping. Ad verification. Streaming service access. |

| Drawbacks | Higher cost than datacenter proxies. Slower than datacenter proxies. | Expensive. Slower than datacenter or residential proxies. | Easier to block. Often flagged as non-residential by websites. | Less widespread than residential proxies. May have less geographic diversity than residential proxies. |

Quick summary

Choosing the best proxy type for Scrapy depends on the specific requirements of your scraping project, such as the level of anonymity needed, budget, speed, and the nature of the target websites.

Residential proxies: Ideal for tasks where anonymity is crucial and you need to avoid detection, such as price monitoring, market research, and social media scraping. They are more expensive but offer high reliability and low detection risk.

Mobile proxies: Best for highly secure operations where you need the lowest chance of detection, like accessing sneaker sites or managing multiple social media accounts. They are the most expensive and can be slower due to mobile network speed.

Datacenter proxies: Suitable for bulk data scraping where speed and cost are prioritized over anonymity. They are cost-effective but have a higher detection risk as they are easier to identify as non-residential IPs.

ISP proxies: A balanced option that offers high speed and anonymity, suitable for tasks like e-commerce scraping and ad verification. They are less common and more expensive than datacenter proxies but offer better detection resistance.

Using Proxies with Scrapy

Adding proxies to your Scrapy instance involves configuring your Scrapy spiders to route their requests through proxy servers. There are several methods to integrate proxies into your Scrapy project, including manually setting a proxy for each request, using a middleware, or leveraging third-party libraries.

Method 1: Manually setting a proxy for each request

One of the simplest ways to use a proxy in Scrapy is by manually setting the proxy for each request. This can be done by setting the meta dictionary in your spider.

import scrapy

class MySpider(scrapy.Spider):

name = "my_spider"

start_urls = ["https://example.com"]

def start_requests(self):

for url in self.start_urls:

yield scrapy.Request(url, callback=self.parse, meta={'proxy': 'http://yourproxy:port'})

def parse(self, response):

# Your parsing logic here

pass

In this example, replace http://yourproxy:port with the actual proxy URL and port you want to use.

Method 2: Using proxy middleware

For more complex scenarios, such as rotating proxies or using a pool of proxies, you can create or use existing middleware to manage proxies automatically.

Creating custom proxy middleware: You can create custom middleware to dynamically set proxies for each request.

class ProxyMiddleware:

def __init__(self):

self.proxies = [

'http://proxy1:port',

'http://proxy2:port',

# Add more proxies here

]

def process_request(self, request, spider):

proxy = random.choice(self.proxies)

request.meta['proxy'] = proxy

# In settings.py, add your middleware:

DOWNLOADER_MIDDLEWARES = {

'myproject.middlewares.ProxyMiddleware': 543,

}

Using third-party proxy middleware: Several third-party Scrapy middlewares are available that offer advanced features like automatic proxy rotation, failover, and integration with proxy services. One popular option is scrapy-rotating-proxies. First, install the middleware:

pip install scrapy-rotating-proxiesThen, configure it in your settings.py:

# settings.py

DOWNLOADER_MIDDLEWARES = {

'rotating_proxies.middlewares.RotatingProxyMiddleware': 610,

'rotating_proxies.middlewares.BanDetectionMiddleware': 620,

}

# List of proxies

ROTATING_PROXY_LIST = [

'http://proxy1:port',

'http://proxy2:port',

# Add more proxies here

]

With scrapy-rotating-proxies, Scrapy automatically rotates through the proxies in your list and handles retries for banned proxies.

Method 3: Using proxy services

If you're using a proxy service that provides an API, you can integrate it directly into your Scrapy project. Some proxy services offer automatic proxy rotation, CAPTCHA solving, and other features. For example, if your proxy service requires authentication:

# settings.py

# Configure the proxy and authentication

PROXY = "http://username:password@proxyserver:port"

# Configure Scrapy to use the proxy

DOWNLOADER_MIDDLEWARES = {

'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': 110,

}

# Inside your spider

class MySpider(scrapy.Spider):

name = "my_spider"

start_urls = ["https://example.com"]

def start_requests(self):

for url in self.start_urls:

yield scrapy.Request(url, callback=self.parse, meta={'proxy': PROXY})

def parse(self, response):

# Your parsing logic here

pass

Additional Considerations

- Handling proxy failures: Proxies can fail or get blocked. Implement retry logic in your middleware to switch to a new proxy when a request fails.

- Rotating user agents: To further reduce detection risk, rotate user agents along with proxies. You can use the

scrapy-user-agentspackage or create custom middleware for this purpose. - Respect robots.txt: Always respect a website’s

robots.txtfile and terms of service. Scraping can be legally and ethically sensitive, so ensure your activities comply with relevant laws and guidelines. - Logging and monitoring: Keep an eye on your Scrapy logs to monitor proxy performance and adjust your proxy list as needed. It’s also helpful to track request success rates to identify when proxies might be getting banned.

Scrapy Crash Course

Whether you’re a beginner or looking to refine your skills, this crash course will guide you through the basics of using Scrapy, from setting up your environment to building your first spider and extracting data.

Setting up Scrapy

Before you start using Scrapy, you need to set up your development environment.

Step 1: Install Scrapy: First, make sure you have Python installed (version 3.6 or later is recommended). Then, install Scrapy using pip:

pip install scrapy

Step 2: Create a Scrapy project: Navigate to the directory where you want to create your project and run the following command. Replace myproject with your desired project name. This command will create a directory structure for your Scrapy project, including a spiders directory where your spiders will live.

scrapy startproject myproject

Understanding Scrapy project structure

A typical Scrapy project has the following structure:

myproject/

scrapy.cfg

myproject/

__init__.py

items.py

middlewares.py

pipelines.py

settings.py

spiders/

__init__.py

my_spider.py

scrapy.cfg: The project’s configuration file.items.py: Define the structure of the data you want to scrape.middlewares.py: Custom middlewares for modifying requests and responses.pipelines.py: Process the scraped data (e.g., cleaning, validation, storage).settings.py: Configuration settings for your Scrapy project.spiders/: Directory to store your spiders (the scripts that define how to scrape a website).

Creating your first spider

Step 1: Define a spider: Create a new Python file in the spiders directory, e.g., quotes_spider.py, and start with this basic spider:

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

start_urls = [

'http://quotes.toscrape.com',

]

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css('span.text::text').get(),

'author': quote.css('span small::text').get(),

'tags': quote.css('div.tags a.tag::text').getall(),

}

next_page = response.css('li.next a::attr(href)').get()

if next_page is not None:

yield response.follow(next_page, self.parse)

name: The name of the spider. This is how you’ll refer to the spider when running it.start_urls: A list of URLs where the spider will begin to scrape.parse: A method that will be called with the downloaded response from the start URLs. It defines how to extract data from the page and follow links to the next pages.

Step 2: Run the spider: To run your spider, navigate to your project directory and use the following command, which will start the spider, and Scrapy will output the scraped data to the console.

scrapy crawl quotes

Exporting scraped data

You can easily export the scraped data to a file in various formats (JSON, CSV, XML). This command will save the output in quotes.json. You can change the file extension to .csv or .xml to get the data in those formats.

scrapy crawl quotes -o quotes.json

Using Scrapy shell for interactive testing

Scrapy Shell is a powerful tool for testing selectors and exploring web pages interactively. You can start Scrapy Shell with:

scrapy shell 'http://quotes.toscrape.com'

In the shell, you can try out CSS and XPath selectors to extract data. For example:

response.css('title::text').get() # Extracts the title of the page

response.xpath('//span[@class="text"]/text()').getall() # Extracts all quote texts

Advanced spider features

Handling pagination: In the example spider, pagination is handled by checking for the "next" link and following it:

next_page = response.css('li.next a::attr(href)').get()

if next_page is not None:

yield response.follow(next_page, self.parse)

Scraping multiple pages: You can extend the start_urls list or use a loop to generate multiple URLs dynamically:

start_urls = [f'http://quotes.toscrape.com/page/{i}/' for i in range(1, 11)]

Customizing requests: You can customize how requests are made by adding headers, cookies, or using proxies:

yield scrapy.Request(url, callback=self.parse, headers={'User-Agent': 'Mozilla/5.0'})

Item pipelines

Item pipelines allow you to process and store the scraped data. To enable and configure item pipelines, modify settings.py:

# settings.py

ITEM_PIPELINES = {

'myproject.pipelines.MyPipeline': 300,

}

Then, define the pipeline in pipelines.py:

class MyPipeline:

def process_item(self, item, spider):

# Process each item (e.g., clean data, validate)

return item

Scrapy settings and configurations

Scrapy is highly configurable through the settings.py file. Here are some common settings:

CONCURRENT_REQUESTS: Number of concurrent requests (default: 16).DOWNLOAD_DELAY: Delay between requests to the same domain (in seconds).USER_AGENT: Set a custom user agent for requests.ROBOTSTXT_OBEY: Whether to respectrobots.txtrules (default:True).LOG_LEVEL: Set the logging level (e.g.,INFO,DEBUG).

Debugging and logging

Scrapy’s logging system helps you debug your spiders. Use these log messages to monitor your spider’s progress and troubleshoot issues.

self.logger.info('A log message')

self.logger.debug('Debugging information')

Best practices

- Modularize code: Keep your spiders, pipelines, and middlewares modular for easy maintenance.

- Respect website terms: Always check a website’s

robots.txtand terms of service to ensure you are scraping legally and ethically. - Use proxies and user agents: Rotate proxies and user agents to avoid detection and IP bans.

- Test with scrapy shell: Before running a spider, test your selectors and logic with Scrapy Shell.

Frequently Asked Questions

Install Scrapy using pip install scrapy. To create a new project, navigate to your desired directory and run scrapy startproject yourprojectname. This generates a project structure with necessary files and folders, including a spiders directory where your scraping scripts are stored.

Scrapy allows you to handle pagination by following links to subsequent pages. In your spider's parse method, use response.follow() to navigate to the "next" page URL and recursively call the `parse` method to continue scraping data from additional pages.

Scrapy Shell is an interactive tool for testing and debugging scraping code. It allows you to load a webpage and experiment with CSS or XPath selectors to extract data. Launch it with scrapy shell 'URL', making it easier to develop and refine your spiders.