Web scraping relies on the ability to locate and extract specific elements from a webpage’s structure – and two of the most widely used methods for this task are XPath and CSS selectors. Both serve as locators, allowing developers to precisely identify and extract data from HTML documents. But which one should you use for your web scraping projects? In this article, we’ll compare XPath and CSS selectors, explaining their key differences, strengths, and use cases.

Overview of XPath

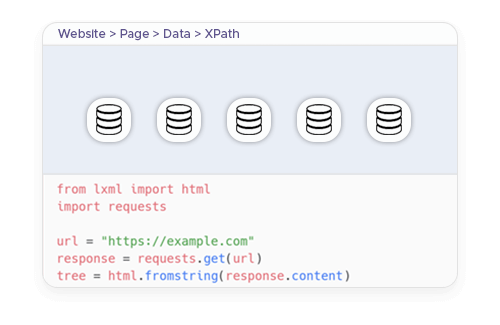

XPath (XML Path Language) is a query language used to navigate and extract elements from XML and HTML documents. It enables web scrapers to locate elements based on their structure rather than relying solely on class or ID attributes. XPath is commonly used in web scraping when working with libraries such as lxml, Scrapy, and Selenium.

How XPath Works

XPath provides a way to traverse an HTML document using different path expressions. It allows scrapers to:

- Select elements based on their tag name, attributes, or text content.

- Traverse both forward and backward through the DOM hierarchy.

- Use advanced functions and conditions to refine searches.
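To make these three capabilities concrete, here is a small sketch using lxml against an inline, made-up product listing rather than a live page. It selects a heading by its text, steps up to its parent with the `parent::` axis and sideways with `following-sibling::`, and filters with `contains()` and `starts-with()`.

```python
from lxml import html

# Hypothetical product listing, used only to exercise the expressions
doc = html.fromstring("""
<html><body>
  <div class="product"><h3>Blue Mug</h3><span class="price">$12</span></div>
  <div class="product"><h3>Red Kettle</h3><span class="price">$40</span></div>
</body></html>
""")

# 1. Select by tag, attribute, or text content
kettle_heading = doc.xpath('//h3[text()="Red Kettle"]')[0]

# 2. Move backward (up) to the enclosing product <div> ...
product = kettle_heading.xpath("parent::div")[0]
# ... and forward (sideways) to the price that follows the heading
price = kettle_heading.xpath('string(following-sibling::span[@class="price"])')

# 3. Refine with functions such as contains() and starts-with()
mug_titles = doc.xpath('//h3[contains(., "Mug")]/text()')
dollar_prices = doc.xpath('//span[starts-with(., "$")]/text()')

print(product.get("class"), price, mug_titles, dollar_prices)
```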

Basic XPath Syntax

XPath expressions resemble file system paths. Some common examples include:

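- `//div` – Selects all `<div>` elements in the document.

- `//a[@class="link"]` – Selects all `<a>` elements whose `class` attribute is exactly `link` (an element with `class="link external"` is not matched).

- `//ul/li[1]` – Selects the first `<li>` child of every `<ul>`.

- `//input[@type="text"]/following-sibling::button` – Selects `<button>` elements that come after a text input and share its parent.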
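Running these expressions against a tiny hypothetical fragment with lxml shows what each one returns. The markup is invented for illustration and contains one candidate for each expression.

```python
from lxml import html

# Hypothetical fragment with one candidate for each expression
doc = html.fromstring("""
<html><body>
  <div>
    <a class="link" href="/a">A</a>
    <a class="link external" href="/b">B</a>
    <ul><li>first</li><li>second</li></ul>
    <form><input type="text" name="q"><button>Search</button></form>
  </div>
</body></html>
""")

all_divs = doc.xpath("//div")
exact_class_links = doc.xpath('//a[@class="link"]')  # whole-attribute comparison
first_items = doc.xpath("//ul/li[1]/text()")
buttons = doc.xpath('//input[@type="text"]/following-sibling::button')

print(len(all_divs), len(exact_class_links), first_items, buttons[0].text)
```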

Pros and Cons of XPath in Web Scraping

Pros:

- Can select elements based on hierarchical relationships, making it useful for extracting structured data.

- Offers robust filtering with functions such as `contains()` and `starts-with()`, plus the `text()` node test for matching on text content.

- Works well with Selenium, which accepts XPath locators out of the box.

Cons:

- XPath queries can be complex and harder to read compared to CSS selectors.

- Performance can be slower in certain cases, especially when running queries in the browser (e.g., with Selenium).

- Some modern websites modify their DOM dynamically, making deep XPath queries unreliable.
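A common way to soften that last drawback is to avoid long absolute paths copied from the browser and anchor queries on stable attributes instead. The sketch below assumes two hypothetical versions of the same page, where the only change is an extra wrapper `<div>`; the `data-id` attribute is made up for illustration. This reduces breakage but does not guarantee stability.

```python
from lxml import html

# Hypothetical "before" and "after" versions of a page:
# the redesign only wraps the list in an extra <div>
before = html.fromstring(
    '<html><body><div><ul><li><a data-id="42" href="/item/42">Item</a></li></ul></div></body></html>'
)
after = html.fromstring(
    '<html><body><div><div class="wrapper"><ul><li><a data-id="42" href="/item/42">Item</a>'
    "</li></ul></div></div></body></html>"
)

# Absolute path: tied to the exact nesting of the page
absolute = "/html/body/div/ul/li/a/@href"
# Relative path anchored on a (hypothetical) stable attribute
relative = '//a[@data-id="42"]/@href'

for label, tree in (("before", before), ("after", after)):
    print(label, tree.xpath(absolute), tree.xpath(relative))
```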

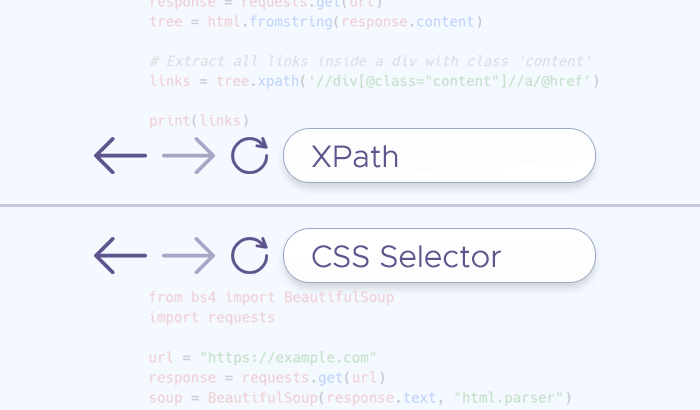

Example: Extracting Elements with XPath

Here’s an example of how to extract data using XPath with lxml in Python. This script fetches the page source, parses it, and collects the `href` attribute of every `<a>` element inside a `<div>` whose class is `content`.

```python
from lxml import html
import requests

url = "https://example.com"

response = requests.get(url, timeout=10)
response.raise_for_status()  # stop early on 4xx/5xx responses

tree = html.fromstring(response.content)

# Extract the href of every link inside a div with class 'content'
links = tree.xpath('//div[@class="content"]//a/@href')

print(links)
```
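Note that `@class="content"` compares the whole attribute value, so a `<div class="content main">` would be skipped. A widely used XPath 1.0 idiom matches a single class token instead. This sketch runs both versions on a hypothetical inline document instead of a live page:

```python
from lxml import html

# Hypothetical page: the second div carries an extra class
doc = html.fromstring("""
<html><body>
  <div class="content"><a href="/one">One</a></div>
  <div class="content main"><a href="/two">Two</a></div>
</body></html>
""")

# Exact string comparison: only matches class="content"
exact = doc.xpath('//div[@class="content"]//a/@href')

# Token match: pad the normalized class list with spaces, then look for " content "
token = doc.xpath(
    '//div[contains(concat(" ", normalize-space(@class), " "), " content ")]//a/@href'
)

print(exact)  # ['/one']
print(token)  # ['/one', '/two']
```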

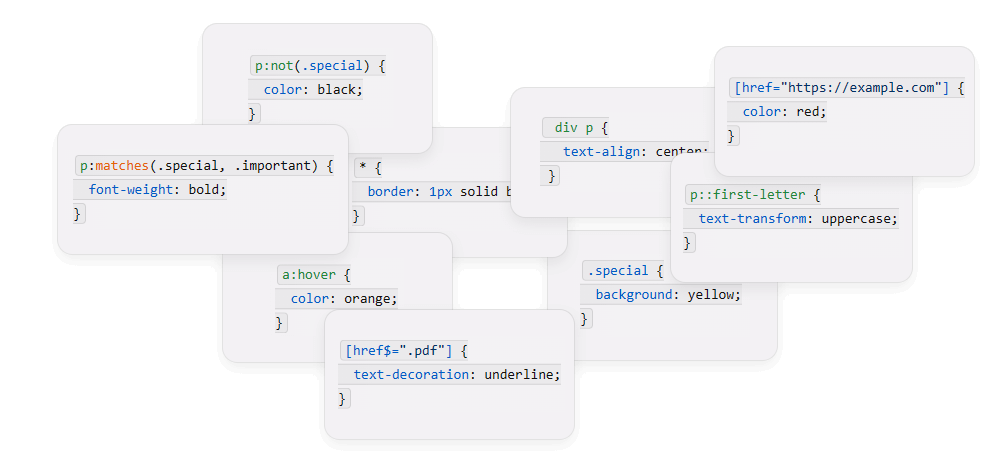

Overview of CSS Selectors

CSS selectors are patterns originally designed to select HTML elements for styling. In web scraping, they serve as a powerful way to locate elements within a webpage, particularly when using libraries like BeautifulSoup, frameworks like Scrapy, or browser automation tools like Puppeteer. Whereas XPath describes explicit paths and axes through the document tree, CSS selectors match elements by type, class, ID, attributes, and their relationships to other elements.

How CSS Selectors Work

CSS selectors define rules for selecting HTML elements based on attributes, position, and relationships. They offer a clean, concise syntax that many web developers and scrapers find easier to work with than XPath.

Basic CSS Selector Syntax

Here are some common CSS selector patterns:

- `div` – Selects all `<div>` elements.

- `.content` – Selects all elements whose class list includes `content`.

- `#main` – Selects the element with the ID `main`.

- `ul > li:first-child` – Selects each `<li>` that is the first child of a `<ul>`.

- `input[type="text"] + button` – Selects a `<button>` that immediately follows a text input.
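Running the selectors above against a small hypothetical fragment with BeautifulSoup, whose `select()` and `select_one()` methods accept CSS selectors, shows what each one returns. Unlike an XPath `@class="..."` comparison, `.content` matches any element whose class list includes `content`, so both the `<div>` and the `<p>` below are selected.

```python
from bs4 import BeautifulSoup

# Hypothetical fragment with a match for each selector listed above
soup = BeautifulSoup("""
<div id="main" class="content">
  <ul><li>first</li><li>second</li></ul>
  <form><input type="text" name="q"><button>Search</button></form>
</div>
<p class="content note">Note</p>
""", "html.parser")

divs = soup.select("div")
content_elements = soup.select(".content")  # class list includes "content"
main = soup.select_one("#main")
first_items = [li.get_text() for li in soup.select("ul > li:first-child")]
next_button = soup.select_one('input[type="text"] + button')

print(len(divs), len(content_elements), main.name, first_items, next_button.get_text())
```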

Pros and Cons of CSS Selectors in Web Scraping

Pros:

- Simpler and more readable syntax compared to XPath.

- Performs well in most setups: browsers evaluate CSS selectors natively, and Scrapy translates them to XPath under the hood, so in Scrapy their speed is essentially the same as XPath.

- Better compatibility with web technologies since CSS selectors are natively used by browsers and supported in many scraping tools.

Cons:

- Lacks some advanced filtering capabilities found in XPath, such as selecting elements based on text content.

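- Cannot step from a matched element back up the DOM (e.g., from a child to its parent). The `:has()` pseudo-class from Selectors Level 4 can select a parent by what it contains, but support varies between browsers and scraping libraries.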

- Harder to use for some deeply nested or cross-branch lookups, since CSS has no equivalent of XPath axes such as `ancestor::` or `preceding-sibling::`.
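Some libraries work around the text-filtering limitation with non-standard extensions. BeautifulSoup's selector engine, Soup Sieve, provides a `:-soup-contains()` pseudo-class in recent versions. It is not part of the CSS specification and will not work in browsers or other tools. A minimal comparison with XPath's `contains()` on a hypothetical navigation snippet:

```python
from bs4 import BeautifulSoup
from lxml import html

# Hypothetical navigation markup
markup = """
<html><body>
  <nav><a href="/">Home</a><a href="/page/2">Next page</a></nav>
</body></html>
"""

# XPath: text filtering is part of the language
tree = html.fromstring(markup)
xpath_hrefs = tree.xpath('//a[contains(text(), "Next")]/@href')

# CSS via Soup Sieve: :-soup-contains() is a BeautifulSoup-only extension
soup = BeautifulSoup(markup, "html.parser")
css_hrefs = [a["href"] for a in soup.select('a:-soup-contains("Next")')]

print(xpath_hrefs, css_hrefs)
```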

Example: Extracting Elements with CSS Selectors

Here’s an example of how to extract data using CSS selectors with BeautifulSoup in Python. This script fetches the webpage, parses it with BeautifulSoup, and collects the `href` of every `<a>` element inside a `<div>` with the class `content`, mirroring the XPath snippet above.

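```python
from bs4 import BeautifulSoup
import requests

url = "https://example.com"

response = requests.get(url, timeout=10)
response.raise_for_status()  # stop early on 4xx/5xx responses

soup = BeautifulSoup(response.text, "html.parser")

# Extract the href of every link inside a div with class 'content';
# a[href] skips anchors without an href, which would otherwise raise KeyError
links = [a["href"] for a in soup.select("div.content a[href]")]

print(links)
```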

XPath vs. CSS Selectors: Key Differences

| Feature | XPath | CSS Selectors |
|---|---|---|
| Syntax Complexity | More complex, especially for advanced queries (e.g., traversing the DOM backward) | Simpler and more readable, widely used in web development |
| Performance | Can be slower in browser automation (e.g., with Selenium), but efficient in lxml, which runs XPath in C via libxml2 | Evaluated natively by browsers; in Scrapy, CSS is translated to XPath, so performance there is about the same |
| Selecting by Text Content | Supports `text()`, `contains()`, and `starts-with()` to filter elements by text | No standard way to filter elements by text; some libraries add non-standard extensions |
| Parent Selection | Can navigate both forward and backward (e.g., selecting a parent element from a child) | Mostly forward (child, descendant, sibling); `:has()` can select a parent by its contents where supported |
| Attribute-Based Selection | Supports `[@attribute='value']` plus functions such as `contains(@attribute, 'text')` and `starts-with()` | Supports `[attribute=value]` plus the substring operators `^=` (starts with), `$=` (ends with), and `*=` (contains) |
| Browser Support | Supported in browsers through `document.evaluate()` (XPath 1.0) and in Selenium, but cannot be used in stylesheets | Natively supported in all modern browsers and used for CSS styling |
| Tool Compatibility | Works well with Selenium, lxml, and Scrapy | Works well with BeautifulSoup, Scrapy, and browser automation tools like Puppeteer |
| Best Use Cases | Best for complex DOM navigation, selecting elements by text, or handling deeply nested structures | Best for simpler, readable queries, especially when using modern scraping tools |
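For attribute matching in particular, CSS substring operators cover the most common XPath string tests. The sketch below runs equivalent pairs against a hypothetical list of links with lxml and BeautifulSoup. XPath 1.0, which lxml and browsers implement, has no `ends-with()`, so the suffix test is written with `substring()` instead.

```python
from bs4 import BeautifulSoup
from lxml import html

# Hypothetical list of links
markup = """
<html><body>
  <a href="https://shop.example/item/1">A</a>
  <a href="/docs/guide.pdf">B</a>
  <a href="/item/2?ref=home">C</a>
</body></html>
"""
tree = html.fromstring(markup)
soup = BeautifulSoup(markup, "html.parser")

# Pairs of (XPath, CSS) expressions that should select the same links
pairs = [
    ('//a[starts-with(@href, "https://")]', 'a[href^="https://"]'),
    ('//a[contains(@href, "/item/")]', 'a[href*="/item/"]'),
    # XPath 1.0 has no ends-with(), so compare the last four characters
    ('//a[substring(@href, string-length(@href) - 3) = ".pdf"]', 'a[href$=".pdf"]'),
]

for xpath_expr, css_expr in pairs:
    via_xpath = [a.get("href") for a in tree.xpath(xpath_expr)]
    via_css = [a["href"] for a in soup.select(css_expr)]
    print(via_xpath == via_css, via_xpath)
```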

Key takeaways:

- If you need simple, readable queries, especially with BeautifulSoup (which has no built-in XPath support) or Scrapy, CSS selectors are the better choice.

- If you need more control over element selection, such as filtering by text content or navigating backward in the DOM, XPath is more powerful.

- Selenium users may prefer XPath for its flexibility, while those using BeautifulSoup or Scrapy will likely find CSS selectors more convenient.

Practical Comparison in a Web Scraping Script

To better understand how XPath and CSS selectors perform in real-world web scraping, let’s compare them side by side using a Python script. We'll extract the same data using both methods and analyze the differences.

We’ll scrape a webpage that contains a list of articles, each inside a <div class="article"> element. Our goal is to extract:

- The article title (inside an `<h2>` tag).

- The URL of the article (inside an `<a>` tag).

- The publication date (inside a `<span class="date">` tag).

Using XPath with lxml

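```python
from lxml import html
import requests

url = "https://example.com"

response = requests.get(url, timeout=10)
response.raise_for_status()

tree = html.fromstring(response.content)

# Extract data using XPath: one <div class="article"> per article
articles = tree.xpath('//div[@class="article"]')

for article in articles:
    # string(...) returns "" instead of raising IndexError when a field is missing
    title = article.xpath("string(.//h2)").strip()
    link = article.xpath("string(.//a/@href)")  # named link to avoid overwriting url
    date = article.xpath('string(.//span[@class="date"])').strip()

    print(f"Title: {title}\nURL: {link}\nDate: {date}\n")
```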

Pros of using XPath in this scenario:

- Can extract multiple attributes and navigate through the DOM hierarchy efficiently.

- Allows selecting elements by text content if needed.

Cons of using XPath in this scenario:

- The syntax is slightly more complex than CSS selectors.

Using CSS Selectors with BeautifulSoup

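```python
from bs4 import BeautifulSoup
import requests

url = "https://example.com"

response = requests.get(url, timeout=10)
response.raise_for_status()

soup = BeautifulSoup(response.text, "html.parser")

# Extract data using CSS selectors: one <div class="article"> per article
articles = soup.select("div.article")

for article in articles:
    # select_one() returns None when nothing matches, so guard each lookup
    title_tag = article.select_one("h2")
    link_tag = article.select_one("a[href]")
    date_tag = article.select_one("span.date")

    title = title_tag.get_text(strip=True) if title_tag else ""
    link = link_tag["href"] if link_tag else ""  # named link to avoid overwriting url
    date = date_tag.get_text(strip=True) if date_tag else ""

    print(f"Title: {title}\nURL: {link}\nDate: {date}\n")
```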

Pros of using CSS selectors in this scenario:

- The syntax is cleaner and easier to understand.

- BeautifulSoup supports CSS selectors through `select()` and `select_one()` but has no XPath support, so CSS is the natural fit for simpler scraping tasks with this library.

Cons of using CSS selectors in this scenario:

- Cannot select elements based on text content directly.

- Cannot walk back up the DOM within a plain selector (e.g., from a child to its parent).
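With BeautifulSoup, the usual workarounds are the `:has()` pseudo-class, which its Soup Sieve engine supports, and Python-side navigation such as `find_parent()`. This minimal sketch, on a hypothetical snippet, finds the date of the article that links to `/posts/2` three ways and compares them with XPath's `ancestor::` axis.

```python
from bs4 import BeautifulSoup
from lxml import html

# Hypothetical article list
markup = """
<html><body>
  <div class="article"><h2>First post</h2><a href="/posts/1">Read</a><span class="date">2024-01-05</span></div>
  <div class="article"><h2>Second post</h2><a href="/posts/2">Read</a><span class="date">2024-02-10</span></div>
</body></html>
"""

# XPath: start at the link, walk up with ancestor::, then back down to the date
tree = html.fromstring(markup)
xpath_date = tree.xpath(
    'string(//a[@href="/posts/2"]/ancestor::div[@class="article"]/span[@class="date"])'
)

soup = BeautifulSoup(markup, "html.parser")
# CSS: :has() picks the article by the link it contains
css_article = soup.select_one('div.article:has(> a[href="/posts/2"])')
css_date = css_article.select_one("span.date").get_text()

# BeautifulSoup navigation: match the link, then step up with find_parent()
parent_article = soup.select_one('a[href="/posts/2"]').find_parent("div", class_="article")
nav_date = parent_article.select_one("span.date").get_text()

print(xpath_date, css_date, nav_date)
```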

Which One Should You Use for Web Scraping?

Now that we’ve compared XPath and CSS selectors in theory and practice, let’s break down which one to use based on different web scraping scenarios.

When to Use XPath

Best for:

- Scraping deeply nested elements in complex HTML structures.

- Selecting elements based on text content (text(), contains()).

- Navigating both forward and backward in the DOM (e.g., selecting parent elements).

- Working with Selenium, where XPath is often more flexible.

- Extracting structured data from XML-based pages.

⚠️ When to avoid:

- If performance is a concern (especially in browser-based scraping like Selenium).

- When a simpler solution like CSS selectors would suffice.

When to Use CSS Selectors

Best for:

- Scraping elements with BeautifulSoup (which has no built-in XPath support) or Scrapy (where CSS often reads more cleanly).

- Selecting elements based on class names, IDs, and attributes.

- Handling modern, JavaScript-heavy websites, especially when paired with Puppeteer or Playwright.

- Writing cleaner, more readable queries.

⚠️ When to avoid:

- If you need to filter elements by text content (standard CSS doesn’t support this).

- When navigating backward in the DOM is necessary.

Considerations for Different Scraping Tools

| Scraping Tool | Best Choice |
|---|---|
| BeautifulSoup | CSS Selectors (no built-in XPath support) |
| Scrapy | CSS Selectors for readability; XPath is equally supported (Scrapy translates CSS to XPath internally) |
| Selenium | XPath (more flexibility in navigating the DOM) |
| lxml | XPath (built in; CSS selectors require the separate cssselect package) |
| Puppeteer/Playwright | CSS Selectors (native support in browsers) |

How Proxies Complement Web Scraping

Regardless of whether you use XPath or CSS selectors, websites often implement anti-scraping measures, such as rate limiting, CAPTCHAs, and IP bans. This is where proxies come in:

- Rotating residential proxies can help distribute requests across multiple IPs.

- Datacenter proxies offer high-speed scraping for less restrictive sites.

- Mobile proxies are useful when scraping mobile-optimized pages.
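Here is a minimal sketch of client-side rotation with requests. It assumes you already have a list of proxy endpoints; the URLs below are placeholders, not real servers. The code cycles through the pool round-robin and passes the current proxy via the `proxies` argument of `requests.get()`. Many providers also offer a single rotating gateway address, in which case the provider rotates IPs and one entry is enough.

```python
from itertools import cycle

import requests

# Placeholder proxy endpoints: replace with the addresses and
# credentials supplied by your proxy provider
PROXY_URLS = [
    "http://user:pass@proxy1.example:8000",
    "http://user:pass@proxy2.example:8000",
    "http://user:pass@proxy3.example:8000",
]
_proxy_pool = cycle(PROXY_URLS)


def next_proxies() -> dict[str, str]:
    """Return a requests-style proxies mapping for the next proxy in the pool."""
    proxy = next(_proxy_pool)
    return {"http": proxy, "https": proxy}


def fetch(url: str) -> str:
    """Fetch a page through the next proxy in round-robin order."""
    response = requests.get(url, proxies=next_proxies(), timeout=10)
    response.raise_for_status()
    return response.text
```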

If you’re scraping Google or other protected websites, combining Infatica’s proxies with the right scraping techniques helps keep data collection smooth and uninterrupted.