Collecting web data has become a critical step for businesses and researchers who train AI systems. However, traditional web scraping often involves technical challenges, high costs, and resource-intensive processes. Infatica Scraper API offers a more efficient approach that streamlines data collection for AI projects, and in this article, we’ll explore why it’s worth trying.

Importance of Web Data for Machine Learning

Data serves as the foundation for machine learning: It enables models to learn patterns, make predictions, and adapt to various tasks. Without high-quality data, even the most advanced algorithms fail to deliver meaningful results.

Learning from Patterns

ML models learn by identifying patterns and relationships in the data provided – and the quality and quantity of data determine how well the model generalizes to unseen scenarios. For example:

- In image recognition, models learn visual features from labeled images.

- In NLP, models understand language nuances by analyzing text data.

Improving Model Accuracy

Large, diverse datasets allow models to better capture the variability in real-world scenarios. This helps reduce overfitting (when a model performs well on training data but poorly on new data) and improves overall accuracy.

- A speech recognition model trained on data from multiple accents performs better across diverse populations.

- A recommendation system with data from varied user behaviors provides more personalized suggestions.

Training Specific Use Cases

Data tailored to specific use cases allows ML models to focus on niche tasks. For instance:

- Autonomous driving models require high-quality sensor data from road scenarios.

- Fraud detection systems need transaction data with labeled fraudulent patterns.

Supporting Feature Engineering

Features, the measurable properties of data, are crucial for model training – and a rich dataset supports the extraction of meaningful features, which improves model performance:

- In predictive maintenance, time-series sensor data can be transformed into trends and thresholds to predict equipment failure.

- In customer segmentation, demographic and behavioral data enable the creation of meaningful customer profiles.
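
As a rough illustration of the predictive maintenance example above, the sketch below turns a short series of sensor readings into rolling-mean, trend, and threshold features. The readings, the window size, and the 80.0 threshold are made-up values chosen for illustration, and the function names are hypothetical rather than part of any particular library.

```python
from statistics import mean


def rolling_mean(values, window):
    """Return the mean of every full run of `window` consecutive readings."""
    return [
        mean(values[i - window + 1 : i + 1])
        for i in range(window - 1, len(values))
    ]


def build_features(readings, window=3, threshold=80.0):
    """Derive simple trend and threshold features from raw sensor readings."""
    smoothed = rolling_mean(readings, window)
    features = []
    for i, avg in enumerate(smoothed):
        # Trend: change in the rolling mean since the previous window
        # (0.0 for the first window, which has no predecessor).
        trend = avg - smoothed[i - 1] if i > 0 else 0.0
        features.append(
            {
                "rolling_mean": avg,
                "trend": trend,
                "over_threshold": avg > threshold,
            }
        )
    return features


# Hypothetical temperature readings from a single sensor.
readings = [70.0, 72.0, 74.0, 79.0, 85.0, 91.0]
features = build_features(readings)

for row in features:
    print(row)
```

Each dictionary in `features` could then serve as one input row for a failure-prediction model; a real pipeline would likely add more signals and tune the window and threshold per machine.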

Enhancing Robustness and Fairness

Diverse datasets help make models more robust and less biased, reducing errors caused by limited or skewed data:

- An AI hiring tool must be trained on inclusive data to avoid bias against gender or ethnicity.

- A healthcare model needs representative patient data to provide accurate diagnoses across demographics.

Enabling Continuous Learning

Modern AI systems benefit from continuous data collection to update models over time. This process, called retraining, allows models to adapt to changes in:

- Market trends (e.g., recommendation engines evolving with consumer preferences).

- User behavior (e.g., chatbots learning from interactions to provide better responses).

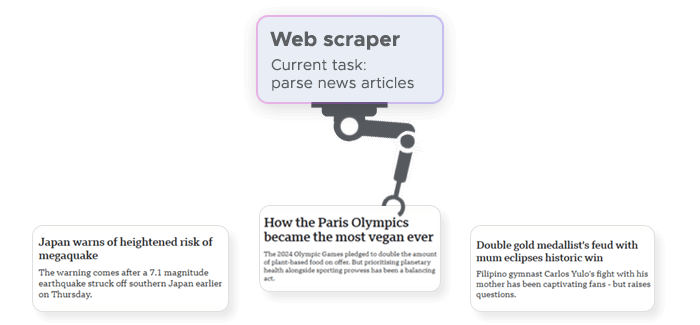

Types of Parsable HTTP Content for Machine Learning

Various web data types can be extracted from HTTP responses using tools like Infatica Scraper API, which simplifies data parsing and collection. Let’s take a closer look at the most common types of parsable HTTP content and their potential applications in machine learning.

1. HTML Content

HTML is the backbone of web pages, containing the structural and semantic markup that organizes content. Its applications include:

- Text analysis: Extracting articles, reviews, or product descriptions for natural language processing (NLP) tasks like sentiment analysis or summarization.

- Webpage categorization: Analyzing headings, meta tags, and content to classify websites.

- E-commerce insights: Parsing pricing, availability, and specifications for competitive analysis and recommendation systems.
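
To make the text analysis case more concrete, here is a minimal sketch that pulls heading and paragraph text out of an HTML document using Python's standard `html.parser` module. The HTML is a hard-coded sample standing in for a scraped response, and the `TextExtractor` class is a hypothetical helper; how the page is actually fetched is outside the scope of this sketch.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect the text content of <h1>, <h2>, and <p> elements."""

    TARGET_TAGS = {"h1", "h2", "p"}

    def __init__(self):
        super().__init__()
        self._current = None   # tag currently being captured, if any
        self._buffer = []      # text fragments seen inside that tag
        self.blocks = []       # collected (tag, text) pairs

    def handle_starttag(self, tag, attrs):
        if tag in self.TARGET_TAGS:
            self._current = tag
            self._buffer = []

    def handle_data(self, data):
        if self._current is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            # Join fragments and collapse runs of whitespace.
            text = " ".join("".join(self._buffer).split())
            if text:
                self.blocks.append((tag, text))
            self._current = None


# Sample markup standing in for a scraped product page.
sample_html = """
<html><body>
  <h1>Wireless Headphones</h1>
  <p>Great sound, <b>long</b> battery life.</p>
  <p>Price: $59.99</p>
</body></html>
"""

extractor = TextExtractor()
extractor.feed(sample_html)
paragraphs = [text for tag, text in extractor.blocks if tag == "p"]
print(paragraphs)
```

The resulting `paragraphs` list is plain text that could be passed to a sentiment or summarization model. For messy real-world pages, a dedicated HTML parsing library may be more forgiving than this stdlib approach.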

2. JSON and XML APIs

Many modern websites and services provide data through APIs in JSON or XML formats, which are structured and easy to parse. Their applications include:

- Structured data collection: Gathering clean, hierarchical datasets for training models, such as user profiles or transaction histories.

- Dynamic updates: Training models on time-sensitive data, such as stock prices or weather forecasts.

- Knowledge graphs: Building entity-relation models for tasks like question answering or semantic search.
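
The sketch below shows one way structured API responses might be flattened into training rows using only the standard library. Both payloads are invented examples (a stock price feed in JSON and a weather forecast in XML), so the field names are assumptions rather than any real service's schema.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical JSON response from a stock price API.
json_payload = """
{"symbol": "ACME",
 "prices": [{"date": "2024-01-02", "close": 10.5},
            {"date": "2024-01-03", "close": 11.0}]}
"""

# Hypothetical XML response from a weather API.
xml_payload = """<forecast city="Berlin">
  <day date="2024-01-02"><temp unit="C">3.5</temp></day>
  <day date="2024-01-03"><temp unit="C">1.0</temp></day>
</forecast>"""


def rows_from_json(text):
    """Flatten the nested price list into one flat record per day."""
    data = json.loads(text)
    return [
        {"symbol": data["symbol"], "date": p["date"], "close": float(p["close"])}
        for p in data["prices"]
    ]


def rows_from_xml(text):
    """Flatten each <day> element into one flat record."""
    root = ET.fromstring(text)
    return [
        {
            "city": root.get("city"),
            "date": day.get("date"),
            "temp_c": float(day.findtext("temp")),
        }
        for day in root.findall("day")
    ]


json_rows = rows_from_json(json_payload)
xml_rows = rows_from_xml(xml_payload)
print(json_rows)
print(xml_rows)
```

Flattening nested responses into uniform records like these is usually the first step before loading them into a dataframe or feature store.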

3. Images and Multimedia Links

Many webpages embed images, videos, and audio files linked in their HTML or served as separate media. Their applications include:

- Computer vision: Training image classification, object detection, or facial recognition models.

- Multimodal learning: Combining text and image data for tasks like visual question answering (VQA).

- Video analytics: Analyzing video metadata or frames for applications like surveillance or entertainment recommendations.
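
Before media files can feed a computer vision pipeline, their URLs have to be found and resolved. The following sketch collects `src` attributes from media tags and converts relative paths to absolute URLs with `urllib.parse.urljoin`. The page URL and markup are placeholders on `example.com`, and `MediaLinkExtractor` is a hypothetical helper name.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class MediaLinkExtractor(HTMLParser):
    """Collect absolute URLs from the src attribute of media tags."""

    MEDIA_TAGS = {"img", "video", "audio", "source"}

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []  # collected (tag, absolute_url) pairs

    def handle_starttag(self, tag, attrs):
        if tag in self.MEDIA_TAGS:
            src = dict(attrs).get("src")
            if src:
                # Resolve relative paths against the page URL.
                self.links.append((tag, urljoin(self.base_url, src)))


# Placeholder page URL and markup.
page_url = "https://example.com/products/page.html"
sample_html = """
<img src="/static/cat.jpg" alt="A cat">
<img src="dog.png" alt="A dog">
<video controls><source src="https://cdn.example.com/clip.mp4"></video>
"""

extractor = MediaLinkExtractor(page_url)
extractor.feed(sample_html)
for tag, url in extractor.links:
    print(tag, url)
```

Keeping the `alt` text alongside each image URL would be a natural extension for multimodal datasets, since it provides a rough caption for free.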

4. Tabular Data

Found in HTML tables or as downloadable formats like CSV or Excel files, tabular data is highly structured. Its applications include:

- Data science: Feeding clean, organized datasets into ML models for regression, classification, or clustering tasks.

- Financial analysis: Extracting datasets like historical stock prices for predictive modeling.

- Operational ML: Training models to optimize logistics, inventory, or other structured processes.
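
As a small example of preparing tabular data for modeling, the sketch below parses CSV text with the standard `csv` module, converts the columns to numeric types, and splits them into a feature matrix and a target vector. The price figures are invented, and the choice of `open` and `volume` as features for predicting `close` is only an illustrative assumption.

```python
import csv
import io

# Invented CSV content standing in for a downloaded price history file.
csv_text = """date,open,close,volume
2024-01-02,10.0,10.5,1200
2024-01-03,10.5,11.0,1500
2024-01-04,11.0,10.8,900
"""


def load_rows(text):
    """Parse CSV text into typed records (CSV values arrive as strings)."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        {
            "date": r["date"],
            "open": float(r["open"]),
            "close": float(r["close"]),
            "volume": int(r["volume"]),
        }
        for r in reader
    ]


rows = load_rows(csv_text)

# Feature matrix X and target vector y, as most ML libraries expect them.
X = [[r["open"], r["volume"]] for r in rows]
y = [r["close"] for r in rows]
print(X)
print(y)
```

The explicit type conversion matters here: leaving values as strings is a common source of silent errors once the data reaches a regression or classification model.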

5. Logs and Transactional Data

Some websites and services expose logs or transactional records via HTTP endpoints; these records typically include timestamps, IP addresses, or user actions. Their applications include:

- Behavioral analytics: Training models to detect user behavior patterns or anomalies.

- Cybersecurity: Building intrusion detection systems using logs of suspicious activity.

- Recommendation engines: Utilizing clickstream data to suggest relevant products or services.
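
To illustrate how raw logs become model inputs, here is a minimal sketch that parses access log lines with a regular expression and counts failed login attempts per IP address, a simple signal that an intrusion detection model could use. It assumes lines in the widely used Common Log Format; the sample lines use documentation-reserved IP addresses, and real logs may follow a different layout.

```python
import re
from collections import Counter

# Pattern for Common Log Format lines (an assumption about the log layout).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<size>\d+|-)'
)

# Invented sample log, including one malformed line.
sample_log = """\
203.0.113.5 - - [02/Jan/2024:10:00:01 +0000] "GET /products/42 HTTP/1.1" 200 512
203.0.113.5 - - [02/Jan/2024:10:00:02 +0000] "POST /login HTTP/1.1" 401 128
198.51.100.7 - - [02/Jan/2024:10:00:03 +0000] "GET /products/7 HTTP/1.1" 200 640
203.0.113.5 - - [02/Jan/2024:10:00:04 +0000] "POST /login HTTP/1.1" 401 128
malformed line
"""


def parse_log(text):
    """Return one dict per well-formed line; skip lines that do not match."""
    records = []
    for line in text.splitlines():
        match = LOG_PATTERN.match(line)
        if match:
            record = match.groupdict()
            record["status"] = int(record["status"])
            records.append(record)
    return records


records = parse_log(sample_log)

# Count 401 responses on the login path per client IP.
failed_logins = Counter(
    r["ip"] for r in records if r["path"] == "/login" and r["status"] == 401
)
print(failed_logins)
```

Skipping lines that fail to match, rather than raising an error, keeps the parser usable on noisy logs, though in practice it is worth tracking how many lines were dropped.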

6. Metadata

Metadata appears in HTTP headers or in embedded tags, such as Open Graph, Twitter Cards, or schema.org markup. Its applications include:

- SEO analysis: Training models to optimize search engine rankings.

- Content classification: Identifying relevant properties like authorship or content type.

- Knowledge extraction: Augmenting datasets with structured semantic data.
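
The sketch below shows how embedded metadata might be collected from a page: it reads Open Graph `<meta>` tags and schema.org data in a JSON-LD `<script>` block using the standard library. The sample markup and the `MetadataExtractor` class are illustrative assumptions, and it expects each JSON-LD block to contain valid JSON.

```python
import json
from html.parser import HTMLParser


class MetadataExtractor(HTMLParser):
    """Collect Open Graph properties and JSON-LD blocks from a page."""

    def __init__(self):
        super().__init__()
        self.open_graph = {}      # e.g. {"og:title": "..."}
        self.json_ld = []         # parsed JSON-LD objects
        self._in_json_ld = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        prop = attrs.get("property") or ""
        if tag == "meta" and prop.startswith("og:"):
            self.open_graph[prop] = attrs.get("content") or ""
        elif tag == "script" and attrs.get("type") == "application/ld+json":
            self._in_json_ld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_json_ld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json_ld:
            # Assumes the block holds valid JSON; real pages may not.
            self.json_ld.append(json.loads("".join(self._buffer)))
            self._in_json_ld = False


# Invented sample markup for a product page.
sample_html = """
<head>
  <meta property="og:title" content="Wireless Headphones">
  <meta property="og:type" content="product">
  <script type="application/ld+json">
    {"@type": "Product", "name": "Wireless Headphones", "brand": "Acme"}
  </script>
</head>
"""

extractor = MetadataExtractor()
extractor.feed(sample_html)
print(extractor.open_graph)
print(extractor.json_ld)
```

Fields such as `og:type` or the JSON-LD `@type` can act as ready-made labels for content classification, which is one reason metadata is useful for augmenting datasets.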

Manual Web Data Collection vs. Infatica Scraper API

| Aspect | Manual/In-House Data Collection | Infatica Scraper API |
|---|---|---|
| Setup and Infrastructure | Requires building and maintaining a web scraping framework, including servers, proxies, and storage. | Turnkey solution with no need for additional infrastructure investment. |
| Cost | High upfront and ongoing costs for hardware, proxy networks, and development resources. | Cost-efficient pricing with predictable operational expenses. |
| Scalability | Limited by internal resources and infrastructure; scaling often requires significant upgrades. | Easily scalable to handle large volumes of data requests without additional effort. |
| Expertise | Requires hiring or training skilled developers and staying updated on web scraping techniques. | No specialized expertise required; Infatica handles the complexities. |
| Reliability | Risk of encountering blocked requests, CAPTCHAs, and IP bans, leading to interruptions. | Built-in mechanisms to bypass blocks, handle CAPTCHAs, and ensure uninterrupted data collection. |
| Geolocation Flexibility | Difficult to access data from multiple locations without a global proxy network. | Extensive global proxy pool ensures seamless access to geolocation-specific data. |
| Legal and Compliance Risks | Must ensure compliance with web scraping regulations independently, which can be complex. | Infatica provides solutions designed to adhere to compliance standards. |
| Development Time | Time-intensive to build, test, and maintain custom scraping scripts. | Ready-to-use API that minimizes time-to-market. |
| Data Quality | Inconsistent results due to reliance on custom-built, error-prone tools. | High-quality data with robust error handling. |
| Adaptability | Manual updates required to handle changes in website structure or dynamic content. | Automatically adapts to website changes with minimal user intervention. |
| Focus on Core Business | Diverts internal resources and focus away from core business goals. | Frees up resources to concentrate on business-critical tasks. |