Web scraping and web crawling are terms that are often used interchangeably. However, these are two different activities even though they share the same goal — to bring you organized and high-quality information. Therefore, it’s important to figure out the difference between crawling and scraping if you want to better understand the web scraping process. In this article, we’re taking a closer look at web crawling vs. web scraping, highlighting their use cases, and analyzing their role in the data gathering pipeline.

What is Web Crawling?

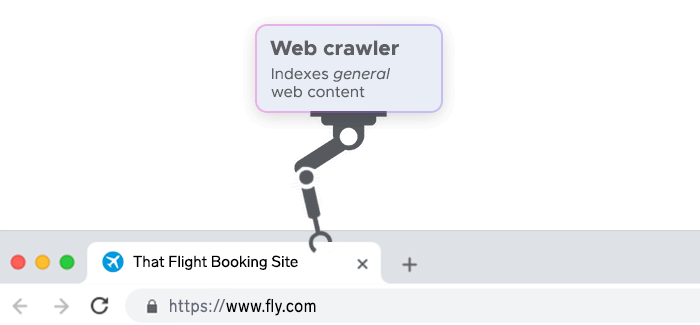

Crawling means going through a web page, understanding and indexing its content. The most prominent example of this activity is what any search engine like Google does — it sends special bots (collectively called Googlebot, in Google's case) to websites.

These bots are usually called crawlers or spiders (because spiders crawl, too.) They go through the content of each single page, all the while trying to analyze the page's purpose — and then storing indexed data. After that, the search engine can quickly find the relevant websites for its users when they look something up online.

In its essence, it’s a process of recognizing what the given web page is about and cataloging this information.

How Web Crawlers Work

A web crawler typically starts with a list of seed URLs, which are the initial pages to visit. As the crawler visits each page, it extracts the content and meta tags of the page, such as the title, keywords, links embedded, images, etc. The crawler also follows the hyperlinks on each page and adds them to a queue of collected URLs to visit later. This way, the crawler can discover new pages and expand its coverage of the web. The crawler stores the collected information in a database or a file system, where it can be processed and indexed for later use.

Purpose of Web Crawling

Its main purpose is to index the web pages for search engines, such as Google and Bing. By crawling the web, search engines can create a large and up-to-date database of web pages and their content, which they can use to provide relevant and fast results for user queries. It can also help search engines to rank the web pages according to their popularity, quality, and authority, based on various factors such as the number and quality of links, the frequency and recency of updates, the user behavior, etc.

What are the advantages of Web Crawling?

It has several advantages for both the search engines and the users. Some of the advantages are:

- Enabling search engines to provide comprehensive and accurate information about the web, which can benefit users who are looking for specific information or topics. It can also help users to discover new and relevant web pages that they might not be aware of otherwise.

- Allowing search engines to keep track of the changes and updates on the web, which can improve the freshness and timeliness of the search results. The web crawling process also helps search engines to detect and remove new links that are broken, duplicate content, spam, and malicious pages, which can enhance the quality and security of the search results.

- Helping in other scenarios, such as web analytics, web archiving, web mining, web testing, web monitoring, etc. It can help to gather and analyze data sets from the web, such as the trends, patterns, behaviors, opinions, sentiments, etc., which can provide valuable insights for various domains and applications.

What is Web Scraping?

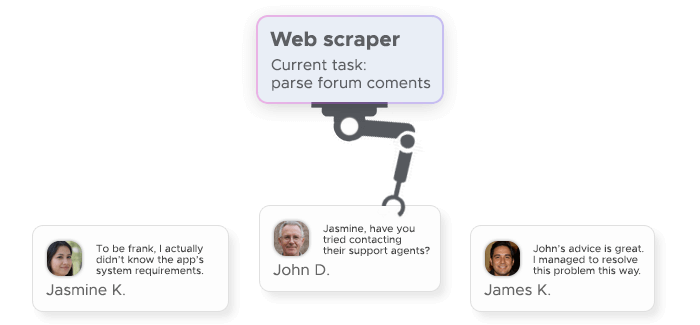

This process is similar to crawling — we could even say that crawling is a part of scraping. Bots (scrapers) go through the content of a web page — crawl through it — to extract data. Then, the scraper processes the obtained information, transforms it into a human-friendly format, and brings the results to you.

Some scrapers need precise data to fetch required results — you must provide them with the keywords that are relevant to the information you need, and often even with source websites. However, an advanced scraper extracts data more or less autonomously: They use artificial intelligence to figure out the relevant sources where they could gather the data you need.

As you can see, the web crawling vs web scraping difference is significant. The latter serves as an indexing activity, while the former is useful for data gathering.

How Web Scraping Works

Data scraping tools can access web pages through the Hypertext Transfer Protocol (HTTP) or a web browser and then parse the HTML code of the page to locate and extract the data of interest. The scraper's input field can also follow the links on each page and scrape data from multiple web pages. The scraped data can then be stored in a local file or a database for further processing and analysis.

Purpose of Web Scraping

Its purpose is to collect large amounts of data from various websites and transform it into a structured and usable format. It can be used for various use cases, such as market research, competitor analysis, price comparison, lead generation, sentiment analysis, and more. It can also help users to access data that is not available through an Application Programming Interface (API) or that is restricted by the website owner.

What are the advantages of Web Scraping?

It offers several advantages for both the data collectors and the data users. Some of the advantages are:

- Saving time and resources by using an automated script for the data collection process and eliminating the need for manual copying and pasting. Web scrapers can also collect data faster and more efficiently than humans.

- Providing access to a large amount of data from various sources and domains, which can enhance the diversity and quality of the data.

- Enable data analysis and visualization, which can help users to discover patterns, trends, and insights from the data. It can also support data-driven decision making and problem solving for many businesses and researchers.

When Is Web Crawling Used?

It’s used when the goal is to discover and index all the web pages on the internet or a specific domain. It is essential for indexing within search engines, such as Google and Bing, that need to provide relevant and up-to-date results for user queries. It is also used for web archiving and updating content, which preserves the historical snapshots of web pages for future reference. Crawling can also be used for web analytics, which measures and analyzes the traffic and behavior of web users, helping to understand the structure, content, and popularity of websites.

When Is Web Scraping Used?

While crawling is a tool that’s primarily used by search engines, web scraping has many more business uses. Anyone — from a simple student to a scientist and to a business — can benefit from this technology and use it for research, pricing analysis, social media, HR, recruiting, and more. However, you might experience some delays because of certain restrictions. We'll discuss the issues and solutions later.

Academic research

To conduct academic research the right way, the research team needs data — and the more of it, the better: This enables scientists to draw more accurate conclusions. The internet has no shortage of data, but gaining access to it may be tricky — especially for non-technical professionals.

Web scraper can quickly fetch and parse any information the user needs. Simply tell the scraper which data to look for — and the bot will go sniffing around the internet.

Market research

An essential process that every company should adopt is market research: A continuous analysis of the company's offer and how it compares against the competition. Here are some typical questions to answer – with the right data and tools, businesses can find answers to any question:

- Are you sure that your business really offers the best price for the given product?

- Is there someone who has already implemented the idea you came up with last night?

- What are the conditions of service your competitors offer to their customers?

Marketing research

This use case might seem similar to the previous one, but it's somewhat different: Marketing managers can analyze data about retail marketing campaigns of competitors, target audience of a business they’re working with, the challenges of competition, and much more. Scraping can bring marketing managers unparalleled intelligence that will let them improve their strategies.

Machine learning

Artificial intelligence, along with its subset, machine learning, requires a lot of data to learn and advance. Web data extraction can supply the ML system with a sufficient amount of information without creating a hassle for developers — that’s why scrapers are an integral part of machine learning.

At its core, it’s useful whenever we need accurate and extensive data to work with, so that’s why this technology has become so popular over the past few years: It simplifies and streamlines data gathering significantly.

E-commerce Price Monitoring

Extracting data can help e-commerce businesses to monitor and compare product details with their competitors. By scraping data from various e-commerce websites, they can gain insights into the market trends, customer preferences, and pricing strategies. It can also help them to optimize their pricing, increase their sales, and improve their customer satisfaction.

Real Estate and Property Data

Real estate businesses and investors, too, can collect and analyze property data from various sources. By scraping data from websites such as Zillow, Realtor, Redfin, etc., they can access information such as property type, location, size, price, amenities, ratings, etc. Using this data, they can also find potential leads, evaluate market opportunities, and make data-driven decisions.

Job Market Analysis

Recruiters and job seekers can gather and examine job data from various websites and platforms. By scraping data from websites such as Indeed, Monster, Glassdoor, etc., they can obtain information such as job title, description, location, salary, skills, etc. They can also track and compare the job market trends, identify the best candidates or employers, and optimize their hiring or job search process.

Financial Data Extraction

Financial businesses and analysts can extract and aggregate financial data from various websites and sources. By scraping data from websites such as Yahoo Finance, Bloomberg, Nasdaq, etc., they can access information such as stock prices, market indices, company data, news, etc. They can also help them to conduct financial research, analysis, and forecasting, and generate valuable insights and reports.

Social Media Monitoring

Businesses and marketers can monitor and measure their social media activity and performance. By scraping data from social media platforms such as Facebook, Twitter, Instagram, etc., they can collect information such as user profiles, posts, comments, likes, shares, etc. They can also track and analyze their brand reputation, customer feedback, market trends, and competitor strategies.

Key Differences in Approach

Let’s analyze these two concepts of data collection in a convenient comparison table:

| Criteria | Web Scraping | Web Crawling |

|---|---|---|

| Purpose | To extract specific data from one or more target websites and transform it into a structured and usable format. | To discover and index all the web pages on the internet or a specific domain (typically used by search engines). |

| Score | Focused and selective. Only scrapes the data that is relevant to the project. | Broad and comprehensive. Crawls every page and link that it can find. |

| Frequency | Depends on the project requirements and the data source. Can be done once or periodically. | Usually done continuously or at regular intervals to keep the index updated and fresh. |

| Data type | Structured or semi-structured. Can be in various formats such as JSON, XML, CSV, Excel, etc. |

Unstructured or raw. Usually in HTML format. |

| Tools and technologies | Web scraping tools such as ProWebScraper, Webscraper.io, Scrapy, BeautifulSoup, etc. |

Tools such as Googlebot, Bingbot, Apache Nutch, Heritrix, etc. |

| User-Agent identification | Often uses a custom or fake user-agent to avoid detection and blocking by the website owner. | Usually uses a legitimate and identifiable user-agent to respect the website owner’s preferences and policies. |

Is web scraping legal?

In the mind of most web data enthusiasts, their activity is perfectly legal: "There is no law that would forbid online users to gather publicly available information!" US courts, however, have been drawing a different conclusion — and to this day, there's been no legal consensus on this matter: Different judges have different opinions regarding the legality of crawling and web scraping.

In the end, there’s no quick answer: It all comes to the privacy (and convenience) of other users. As long as you’re not trying to reach private product data or use gathered information with malicious intentions, you’re not breaking any law. If your web scraping projects simply gather data that you could find by yourself (with respect towards connection request limits, of course), you’re not violating anyone’s privacy.

Issues you might face during web scraping

Many website owners don't want their content to get scraped simply because they’re not pleased with giving advantage to their competitors. That’s why most sites are protected from scraping with various techniques. Here are the problems that might slow your data gathering process down.

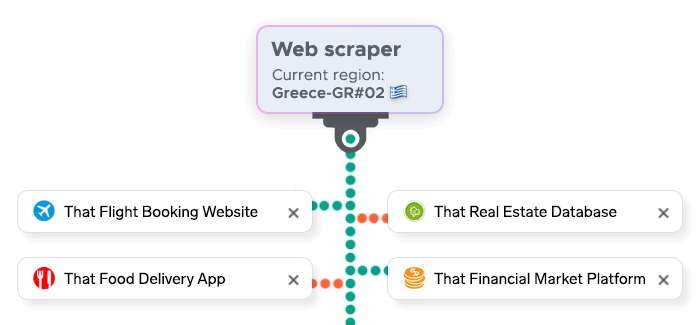

Location-based restrictions

Some websites won’t allow users from certain countries to view the content: This happens because IP addresses from these countries are the most common "offenders" (as the websites themselves see it.) Noticing an influx of web scraping bots from Region N, many websites find it easier to restrict access to users from said region altogether, although it's unfair to regular users.

Anti-scraping measures

Most websites can detect the activity of bots and deny them access to the content to protect it from getting scraped. In both scraping and web crawling, CAPTCHAs are one of the anti-scraping technologies you might need to deal with during automated data gathering.

The behavior of a scraper

A web scraper is a robot, and it behaves like one. This makes it easy to detect for websites, so if you run the scraper without improving the way it works, your data gathering process will get jammed.

How to fix web scraping-related problems?

Each potential problem has a solution, and data collection ones are no exception.

Use proxies

Residential proxies will let you bypass geo-restrictions. Also, they will let your bot avoid IP blocks. Without proxies, the scraper will send requests to the destination servers from the same IP address. Proxies will provide IP rotation, allowing the bot to set a new IP for each request. Then, its activity will look less suspicious.

Use headers libraries

Requests from real users contain headers that tell the destination website about the browser, operating system, and so on. For both crawling and web scraping, you can find ready-to-use libraries with headers — feed them to your scraper so that it doesn’t send suspiciously empty requests.

Slow down

A slow pace will bring you further. Don’t overwhelm servers with hundreds of requests per second. Set your scraper to send fewer inquiries so that its activity doesn’t look like a DDoS attack.

Web scraping is a useful but complex process that requires expertise and additional tools. That’s why many businesses outsource data gathering to data scientists. But despite the technical complexity, scraping became a popular approach to gaining some kind of intelligence.

How Infatica can solve these problems

Use Infatica's proxies with your crawlers and scrapers and avoid restrictions thanks to these features:

- 99,9% uptime and low response time: Infatica guarantees a high availability and reliability of its proxy network, with a 99,9% uptime and a low response time. This means that you can access the web data you need without interruptions or delays.

- Top speed and performance: Infatica delivers a top speed and performance for your web scraping and crawling projects, with a fast and stable connection and a large bandwidth. This means that you can collect and process large amounts of web data in a short time and with a high quality.

- Privacy and security: Infatica protects your privacy and security by providing you with anonymous and secure proxies, with a strong encryption and a strict no-logs policy. This means that you can browse the web without revealing your identity or compromising your data.

Conclusion

In this article, we have covered the key points of web crawling vs web scraping, the two methods of extracting web data for various purposes. We have also compared and contrasted their features, advantages, and use cases. We hope this article has helped you to understand the difference in web crawling and scraping, and how they can be used as web data partners to provide solutions for your projects.