Images are an essential part of modern digital projects – whether you’re building datasets for machine learning, conducting academic research, or simply curating content for personal use. While Google Images provides one of the largest and most accessible repositories of pictures, manually collecting them is time-consuming and inefficient. That’s where web scraping comes in. In this guide, we’ll walk through how to scrape Google Images using Python. You’ll learn how to write a script that can fetch and download images automatically, giving you a repeatable and scalable way to collect visual data.

Understanding the Basics of Google Image Scraping

Before we dive into code, it’s important to understand how Google Images works under the hood – and why scraping it can be challenging.

Unlike a simple static website, Google Images loads its results dynamically. When you search for a keyword, only a handful of image thumbnails are delivered at first. As you scroll down, more images load in the background via JavaScript. This means:

- A simple `requests.get()` won’t be enough to capture everything.

- To scrape multiple images, you’ll often need tools that can handle JavaScript rendering (e.g., Selenium or Playwright).

Tools and Libraries You’ll Need

To scrape Google Images effectively, you’ll need a few Python libraries and supporting tools. Here’s a quick overview of what we’ll use in this tutorial:

Core Python Libraries

- `requests` – For making HTTP requests. Useful for simpler scraping tasks and fetching image files.

- `BeautifulSoup` (from `bs4`) – For parsing HTML and extracting data like image URLs.

- `os` / `pathlib` – For handling file paths and saving downloaded images.

Handling Dynamic Content

Since Google Images relies heavily on JavaScript to load more results as you scroll, a simple request won’t capture everything. That’s where browser automation tools, which can drive a regular or headless browser, come in:

- `selenium` – Automates a browser (like Chrome or Firefox) to simulate real user behavior such as scrolling, clicking, and loading dynamic content.

- `playwright` (optional) – A newer alternative to Selenium, offering faster and more modern browser automation.

Data Storage and Organization

- `pandas` (optional) – If you want to keep track of your results (e.g., image URLs, metadata, and download status) in a structured format.

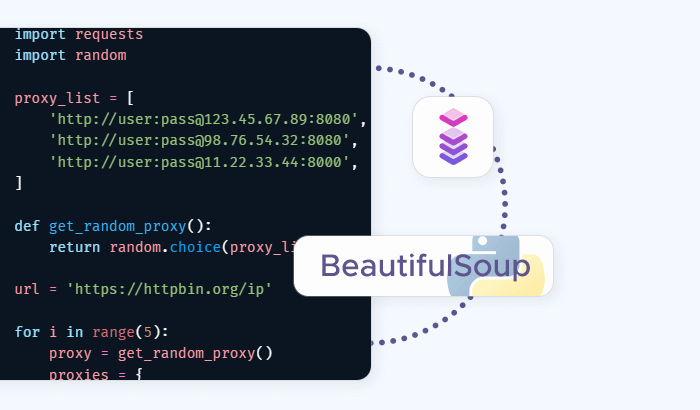

Proxies and Request Management

When scraping at scale, using a single IP address can quickly get you blocked. That’s why many developers integrate:

- Proxy services – to rotate IPs and avoid detection.

- User-Agent randomization libraries (e.g., fake_useragent) – to make requests appear as though they come from different browsers.

👉 For example, Infatica’s residential proxies can be plugged directly into your Python requests or Selenium configuration, ensuring your scraper doesn’t stall after a handful of requests.
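The User-Agent rotation mentioned above can be sketched in a few lines. This is a minimal illustration, not a definitive implementation: the pool of strings and the helper name `random_headers` are assumptions made for the example, and a library such as fake_useragent could supply the strings instead.

```python
import random

# Illustrative pool (an assumption for this sketch); keep such strings up to date.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def random_headers(rng=random):
    """Return a headers dict with a randomly chosen User-Agent."""
    return {"User-Agent": rng.choice(USER_AGENTS)}
```

The result can be passed straight to a request, for example `requests.get(url, headers=random_headers())`.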

Step-by-Step Tutorial: Scraping Google Images With Python

Now that you have your tools ready, let’s walk through how to build a simple Google Images scraper in Python. We’ll start small – fetching a handful of images – and then expand to handle more advanced scenarios like scrolling and proxies.

Step 1: Set Up Your Environment

You can install the basics with:

    pip install requests beautifulsoup4 selenium pandas

Playwright requires a separate setup step: `pip install playwright`, followed by `playwright install`.

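Selenium also needs a browser driver. Selenium 4.6 and later include Selenium Manager, which downloads a matching driver automatically. With older versions, download ChromeDriver yourself, make sure it matches your Chrome version, and put it on your PATH.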

Step 2: Basic Script to Fetch Image Search Results

Google Images is dynamic, but even a simple requests approach can get us started with thumbnails.

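    from urllib.parse import quote_plus

    import requests
    from bs4 import BeautifulSoup

    query = "golden retriever puppy"
    # URL-encode the query so spaces and special characters are safe
    url = f"https://www.google.com/search?q={quote_plus(query)}&tbm=isch"
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
    }

    response = requests.get(url, headers=headers, timeout=10)
    response.raise_for_status()  # stop early on an HTTP error
    soup = BeautifulSoup(response.text, "html.parser")

    # Extract image elements
    images = soup.find_all("img")
    for i, img in enumerate(images[:5]):  # limit to 5 results
        # .get() avoids a KeyError for <img> tags without a src attribute
        print(f"{i+1}: {img.get('src')}")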

This prints out image sources. Many of these are thumbnails or base64-encoded images, but it’s a good first step.
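Because some of these `src` values are inline base64 data rather than URLs, it helps to separate the two kinds before doing anything else. The sketch below assumes the values look like ordinary `http(s)://` links or `data:image/...;base64,...` URIs; the function names `split_sources` and `decode_data_uri` are hypothetical helpers introduced for this example.

```python
import base64


def split_sources(srcs):
    """Separate regular http(s) URLs from inline data: URIs."""
    http_urls, data_uris = [], []
    for src in srcs:
        if not src:
            continue  # <img> tags without a usable src
        if src.startswith(("http://", "https://")):
            http_urls.append(src)
        elif src.startswith("data:image/"):
            data_uris.append(src)
    return http_urls, data_uris


def decode_data_uri(uri):
    """Return (mime_type, raw_bytes) for a base64-encoded data: URI."""
    header, _, payload = uri.partition(",")
    if not header.endswith(";base64"):
        raise ValueError("only base64 data URIs are handled in this sketch")
    mime_type = header[len("data:"):-len(";base64")]
    return mime_type, base64.b64decode(payload)
```

The decoded bytes of a data URI can be written to disk directly, while the `http(s)` URLs still need to be fetched with a separate request.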

Step 3: Using Selenium for Dynamic Loading

To get more complete results, we’ll use Selenium to load the page, scroll down, and collect image URLs.

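    import time
    from urllib.parse import quote_plus

    from selenium import webdriver
    from selenium.webdriver.common.by import By

    query = "golden retriever puppy"
    url = f"https://www.google.com/search?q={quote_plus(query)}&tbm=isch"

    driver = webdriver.Chrome()  # older Selenium versions need chromedriver on PATH
    try:
        driver.get(url)

        # Scroll to load more images
        for _ in range(3):
            driver.execute_script("window.scrollBy(0, document.body.scrollHeight);")
            time.sleep(2)

        # Read the src attributes while the browser is still open
        images = driver.find_elements(By.TAG_NAME, "img")
        image_urls = [img.get_attribute("src") for img in images]
        image_urls = [u for u in image_urls if u]  # drop missing or empty values
    finally:
        driver.quit()

    for i, img_url in enumerate(image_urls[:10]):
        print(f"{i+1}: {img_url}")

Now we’re collecting more results as the page loads them dynamically. Note that most of these values still point to thumbnails rather than full-resolution images.

Step 4: Downloading Images Locally

Once you have image URLs (the `image_urls` list from Step 3), you can download them with requests.

    import os

    import requests

    save_dir = "images"
    os.makedirs(save_dir, exist_ok=True)

    for i, img_url in enumerate(image_urls[:10]):
        # Inline base64 thumbnails (data: URIs) cannot be fetched over HTTP
        if not img_url.startswith("http"):
            continue
        try:
            response = requests.get(img_url, timeout=10)
            response.raise_for_status()
            with open(os.path.join(save_dir, f"img_{i}.jpg"), "wb") as f:
                f.write(response.content)
            print(f"Saved img_{i}.jpg")
        except (requests.RequestException, OSError) as e:
            print(f"Could not save image {i}: {e}")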
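The loop above saves every file with a `.jpg` extension, even though a server may return PNG, GIF, or WebP data. One way to handle this, sketched here under the assumption that the server sends an accurate `Content-Type` header, is to map that header to an extension. The mapping and the name `extension_for` are illustrative, not part of any library.

```python
# Hypothetical mapping for this sketch; extend it for other formats you expect.
EXTENSIONS = {
    "image/jpeg": ".jpg",
    "image/png": ".png",
    "image/gif": ".gif",
    "image/webp": ".webp",
}


def extension_for(content_type, default=".jpg"):
    """Map a Content-Type header value to a file extension."""
    if not content_type:
        return default
    # Strip parameters such as "; charset=binary" and normalise case
    mime_type = content_type.split(";", 1)[0].strip().lower()
    return EXTENSIONS.get(mime_type, default)
```

With requests, the header is available as `response.headers.get("Content-Type")`, so the file name could be built as `f"img_{i}{extension_for(...)}"`.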

Step 5: Adding Proxies (Optional but Recommended)

If you run this script repeatedly, Google may block your IP. To reduce the risk:

- Add random delays (`time.sleep`) between requests.

- Rotate headers and user agents.

- Use proxy servers for IP rotation.

Example with requests:

    proxies = {
        "http": "http://username:password@proxy_host:proxy_port",
        "https": "http://username:password@proxy_host:proxy_port"
    }

    response = requests.get(url, headers=headers, proxies=proxies)
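If you manage your own list of proxies, rotation can be as simple as cycling through that list and building a fresh `proxies` dictionary for each request. The following is a rough sketch: the endpoints in `PROXY_POOL` are placeholders and `proxy_rotation` is a hypothetical helper name.

```python
from itertools import cycle

# Placeholder endpoints (assumptions); replace with proxies you are allowed to use.
PROXY_POOL = [
    "http://username:password@proxy1.example.com:8000",
    "http://username:password@proxy2.example.com:8000",
]


def proxy_rotation(pool):
    """Yield requests-style proxies dicts, cycling through the pool forever."""
    for proxy in cycle(pool):
        yield {"http": proxy, "https": proxy}
```

A typical use would be `rotation = proxy_rotation(PROXY_POOL)` once, then `requests.get(url, headers=headers, proxies=next(rotation))` for each request.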

👉 Services like Infatica’s residential proxies provide automatic IP rotation, so you don’t need to manage proxy lists manually. This ensures your scraper remains stable even when scaling up to thousands of requests.

Handling Roadblocks: Avoiding Bans and CAPTCHAs

Scraping Google Images works fine in small bursts, but when you scale up, you’ll almost certainly hit roadblocks. Let’s look at the most common issues and how to solve them.

Problem 1: CAPTCHAs Breaking Your Script

If Google suspects you’re a bot, it will present a CAPTCHA challenge. Since these require manual solving, they can interrupt your automation. Solution:

- Reduce request frequency.

- Rotate user agents and headers so requests look like they come from different devices.

- Use browser automation tools (like Selenium or Playwright), which execute JavaScript and behave more like a real browser session than raw HTTP requests.

- Employ proxy rotation to make traffic appear natural.
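Reducing request frequency can be combined with the random delays suggested in Step 5. The sketch below shows one possible shape for that: the default values (2 seconds plus up to 1.5 seconds of jitter) are arbitrary assumptions, and `jittered_delay` and `throttled` are hypothetical helper names.

```python
import random
import time


def jittered_delay(base=2.0, jitter=1.5, rng=random):
    """Return a delay in seconds drawn uniformly from [base, base + jitter]."""
    return base + rng.uniform(0, jitter)


def throttled(items, base=2.0, jitter=1.5, sleep=time.sleep):
    """Yield items one by one, pausing a random interval between them."""
    for index, item in enumerate(items):
        if index:  # no pause before the first item
            sleep(jittered_delay(base, jitter))
        yield item
```

Wrapping a list of URLs as `for img_url in throttled(image_urls): ...` spaces out the requests without changing the body of the loop.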

Problem 2: Incomplete or Low-Quality Image Results

Sometimes you’ll scrape the page but only capture low-resolution thumbnails or a limited set of images. Solution:

- Use Selenium to simulate scrolling – more images will load dynamically.

- Click on thumbnails programmatically to reveal higher-resolution versions.

- Ensure your scraper waits long enough for images to load.
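Rather than scrolling a fixed number of times, one option is to keep scrolling until the page height stops growing. The sketch below expresses that loop independently of any browser library by taking two callables. The name `scroll_until_stable` and its parameters are assumptions for this example, and the stopping rule is a heuristic that may end early on a slow connection.

```python
import time


def scroll_until_stable(get_height, scroll_to_bottom, max_rounds=20,
                        pause=2.0, sleep=time.sleep):
    """Scroll until the page height stops growing or max_rounds is reached.

    Returns the number of scrolls that loaded new content.
    """
    productive = 0
    last_height = get_height()
    for _ in range(max_rounds):
        scroll_to_bottom()
        sleep(pause)  # give lazy-loaded images time to appear
        new_height = get_height()
        if new_height == last_height:
            break  # nothing new loaded, assume we reached the end
        last_height = new_height
        productive += 1
    return productive
```

With Selenium, the two callables could be `lambda: driver.execute_script("return document.body.scrollHeight")` and `lambda: driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")`.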

Problem 3: Scaling to Thousands of Images

Collecting just a few dozen images is easy. But if you’re building a dataset with thousands of pictures, you’ll need a more robust setup. Solution:

- Automate retries for failed requests.

- Save metadata (URLs, download status) in a database to avoid duplicates.

- Use rotating residential proxies to distribute traffic and avoid hitting rate limits.
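Automated retries are often implemented with exponential backoff, where each failed attempt waits twice as long as the previous one. The helper below is a minimal sketch of that idea; `with_retries` and its default values are illustrative assumptions rather than a standard API.

```python
import time


def with_retries(func, attempts=3, base_delay=1.0, sleep=time.sleep,
                 exceptions=(Exception,)):
    """Call func(); on failure wait base_delay * 2**n and try again."""
    for attempt in range(attempts):
        try:
            return func()
        except exceptions:
            if attempt == attempts - 1:
                raise  # out of attempts, surface the last error
            sleep(base_delay * 2 ** attempt)
```

A download could then be wrapped as `with_retries(lambda: requests.get(img_url, timeout=10), exceptions=(requests.RequestException,))`.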

Storing and Using Your Scraped Images

Once you’ve successfully scraped Google Images, the next step is deciding what to do with them. Proper storage and organization will save you time and make your dataset easier to use.

Saving Images Locally

The simplest approach is to save images directly onto your machine. Organize them in folders based on your search queries:

    import os

    def save_image(content, folder, filename):
        os.makedirs(folder, exist_ok=True)
        with open(os.path.join(folder, filename), "wb") as f:
            f.write(content)

This structure is especially useful if you’re training an image classifier, since many machine learning frameworks can infer labels from a one-folder-per-class layout (for example, torchvision’s `ImageFolder`).
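To get one folder per search query, the query text first has to become a safe folder name. The helper below is one possible approach, assuming that lowercase ASCII letters, digits, and underscores are enough for your labels; `folder_for_query` is a hypothetical name.

```python
import re
from pathlib import Path


def folder_for_query(query, root="images"):
    """Turn a search query into a filesystem-friendly folder path."""
    # Replace every run of other characters with a single underscore
    slug = re.sub(r"[^a-z0-9]+", "_", query.lower()).strip("_")
    return Path(root) / (slug or "unnamed")
```

The result can be passed as the `folder` argument of `save_image`, for example `save_image(content, folder_for_query(query), "img_0.jpg")`.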

Using a Database or Metadata File

Instead of (or in addition to) just saving image files, you might want to keep track of metadata such as: original image URL, file name and local path, download timestamp, and source query.

You can store this in a CSV file with pandas:

    import pandas as pd

    # image_urls is assumed to be a list of image URL strings collected earlier
    data = {
        "url": image_urls,
        "filename": [f"img_{i}.jpg" for i in range(len(image_urls))],
    }

    df = pd.DataFrame(data)
    df.to_csv("images_metadata.csv", index=False)

For larger projects, a database (e.g., SQLite, PostgreSQL) may be more efficient.
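As a rough sketch of the database option, the standard-library `sqlite3` module can store the same metadata and reject duplicate URLs at insert time. The table layout, column names, and the helpers `open_metadata_db` and `record_image` are assumptions made for this example.

```python
import sqlite3
from datetime import datetime, timezone


def open_metadata_db(path=":memory:"):
    """Open (or create) the metadata database and its single table."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS images (
               url TEXT PRIMARY KEY,
               filename TEXT,
               query TEXT,
               downloaded_at TEXT
           )"""
    )
    return conn


def record_image(conn, url, filename, query):
    """Insert one row; return False if the URL was already recorded."""
    timestamp = datetime.now(timezone.utc).isoformat()
    cursor = conn.execute(
        "INSERT OR IGNORE INTO images VALUES (?, ?, ?, ?)",
        (url, filename, query, timestamp),
    )
    conn.commit()
    return cursor.rowcount == 1
```

Passing a file path such as `"images.db"` instead of the default `":memory:"` keeps the records between runs, so a scraper can skip URLs it has already downloaded.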

Cloud Storage for Large Datasets

If you’re scraping thousands of images, local storage may not be practical. Instead, consider:

- AWS S3 or Google Cloud Storage for scalable hosting.

- Version control with DVC (Data Version Control) to manage dataset updates.

Conclusion

While a simple script is enough for small-scale projects, scaling up requires more careful planning. Google’s defenses against automated scraping are strong, and without safeguards, your scraper may break after just a few requests. That’s why techniques like request throttling, user-agent randomization, and – most importantly – using rotating proxies are essential for reliability.

If you want to take your scraping projects further, consider using a proxy provider like Infatica. Our residential, datacenter, ISP, and mobile proxies are built to keep scrapers running smoothly, even at scale. With automatic IP rotation and global coverage, you can focus on your project instead of fighting against blocks and CAPTCHAs.