Web scraping is a powerful technique for extracting data from websites for purposes such as market research, price comparison, and data analysis. However, web scraping can also be challenging and risky, especially when websites use anti-scraping measures that can block or ban web scrapers.

One common way to avoid being blocked is to use residential proxies, which are servers that act as intermediaries between web scrapers and websites. Residential proxies can help web scrapers hide their origin and identity, bypass geo-restrictions and rate limits, and access more accurate and relevant data. In this guide, you will learn what residential proxies are and how they work, what their use cases in web scraping are, how to pick the right proxy for scraping, and what the downsides of using free residential proxies are.

What are residential proxies?

Residential proxies are proxy servers whose IP addresses are assigned by internet service providers (ISPs) to real residential connections. They are often used for web scraping because traffic from these real IP addresses is less likely to be detected or blocked by websites.

How do residential proxies work?

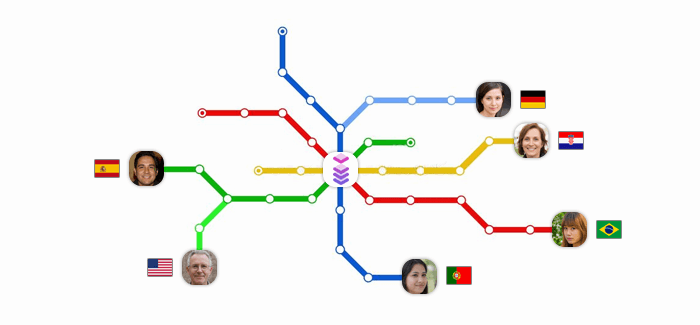

Residential proxies work by routing your internet traffic through an intermediary server (the proxy server) before reaching its intended destination. As a result, the destination website sees the IP address of the proxy server instead of your actual IP address.
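To make the routing step concrete, here is a minimal sketch that uses only Python's standard library. The proxy address and credentials in `PROXY_URL` are placeholders rather than a real endpoint, and the helper names `build_proxy_opener` and `fetch` are made up for this illustration.

```python
import urllib.request

# Hypothetical proxy endpoint: replace the host, port, and credentials
# with the values issued by your proxy provider.
PROXY_URL = "http://user:password@proxy.example.com:8080"


def build_proxy_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends HTTP and HTTPS requests through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(url: str, proxy_url: str = PROXY_URL, timeout: float = 10.0) -> bytes:
    """Fetch url through the proxy and return the raw response body."""
    opener = build_proxy_opener(proxy_url)
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

With a working proxy address in place, calling `fetch("https://example.com")` sends the request to the proxy first, so the target site sees the proxy's IP address rather than yours.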

A residential web scraping proxy can also rotate the IP address used for each request. This means that each request is sent from a different IP address drawn from a large and diverse pool of residential IPs, which makes it even harder for websites to block or track your requests.

Residential proxies can provide you with IP addresses from various locations and countries. This can help you bypass geo-restrictions and access content that is only available in certain regions. It can also help you access more accurate and relevant data by mimicking real users from different areas.
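Rotation can be pictured as cycling through a list of proxy addresses, one per request. The sketch below does this on the client side with a round-robin order; the addresses are placeholders and the function names are hypothetical. A proxy provider may instead handle rotation on its own side, in which case none of this code is needed.

```python
import itertools
from typing import Iterator, List, Sequence, Tuple


def rotating_proxies(pool: Sequence[str]) -> Iterator[str]:
    """Yield proxies from the pool in round-robin order, forever."""
    if not pool:
        raise ValueError("proxy pool must not be empty")
    return itertools.cycle(pool)


def assign_proxies(urls: Sequence[str], pool: Sequence[str]) -> List[Tuple[str, str]]:
    """Pair each URL with the next proxy in the rotation."""
    proxies = rotating_proxies(pool)
    return [(url, next(proxies)) for url in urls]


# Placeholder addresses, for illustration only.
EXAMPLE_POOL = [
    "http://proxy-1.example.com:8080",
    "http://proxy-2.example.com:8080",
]
```

Here consecutive requests go out through different proxies, and the rotation wraps around once the pool is exhausted.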
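How a location is selected depends on the provider, so the following is only a sketch under an assumed setup: one proxy endpoint per country, kept in a lookup table. The hostnames and the `proxy_for_country` helper are hypothetical.

```python
from typing import Dict

# Hypothetical country-specific endpoints. Real providers expose
# geo-targeting in their own way, so check their documentation.
GEO_PROXIES: Dict[str, str] = {
    "us": "http://us.proxy.example.com:8080",
    "de": "http://de.proxy.example.com:8080",
    "jp": "http://jp.proxy.example.com:8080",
}


def proxy_for_country(country_code: str, proxies: Dict[str, str] = GEO_PROXIES) -> str:
    """Return the proxy endpoint configured for a two-letter country code."""
    key = country_code.strip().lower()
    try:
        return proxies[key]
    except KeyError:
        raise ValueError(f"No proxy configured for country {country_code!r}") from None
```

A scraper that needs German search results, for example, would route its requests through `proxy_for_country("de")`.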

Residential proxies use cases in web scraping

As noted above, anti-scraping measures can block or ban web scrapers, and a scrape proxy (a server that acts as an intermediary between the scraper and the website) is a common way around them. Equipped with residential proxies, the scraper can hide its origin and identity, bypass geo-restrictions and rate limits, and access more accurate and relevant data. Some of the use cases of scraper proxies are:

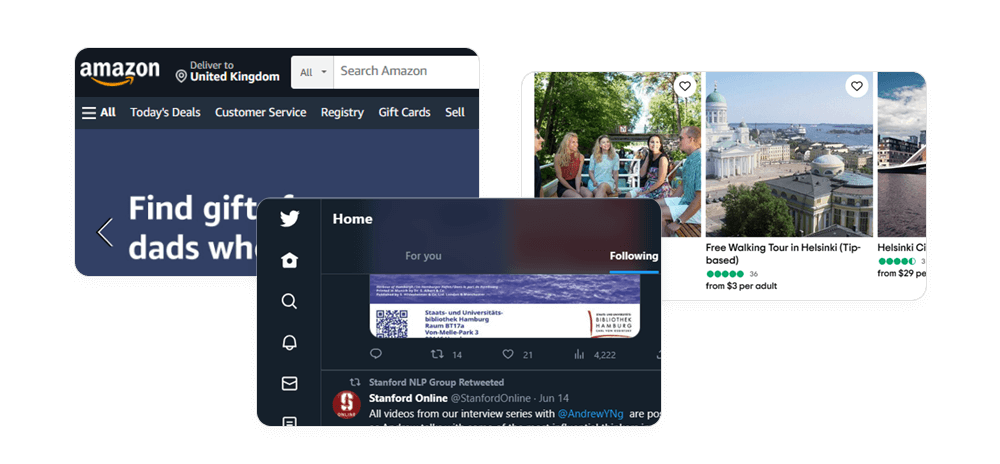

E-commerce: Web scraping can help you collect data from e-commerce websites, such as product details, prices, reviews, and ratings. This lets you compare products, monitor competitors, and analyze customer feedback. A web crawler proxy can help you access e-commerce websites without getting blocked or banned by using real IP addresses from different locations.

Social media: Web scraping can help you collect data from social media platforms, such as posts, comments, likes, and shares. This lets you analyze social media trends, sentiment, and influence. A web scraping proxy service can help you access social media platforms without getting blocked or banned by using real IP addresses from different devices.

Travel: Web scraping can help you collect data from travel websites, such as flights, hotels, and car rentals. This lets you compare prices, find deals, and book services. Web scraping proxies can help you access travel websites without getting blocked or banned by using real IP addresses from different regions.

How to pick the right proxy for scraping?

There are heaps of scraping proxy providers, and each comes with its own strengths and downsides. Here are the key factors you should focus on when shopping for residential proxies:

IP address pool. This is the total number of unique IPs offered by the scraping proxy service. As your web scraping operation goes on, some IP addresses may get blocked, and a large IP pool makes IP rotation easier and reduces downtime. A good starting point is 3 million IPs; as for Infatica, we’re currently offering over 10 million residential IP addresses.
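To see why pool size matters, consider a scraper that retires every address a website blocks: the larger the pool, the longer it can keep going. The `ProxyPool` class below is a hypothetical helper written for this illustration, not part of any provider's SDK.

```python
import random
from typing import Iterable, List, Optional, Set


class ProxyPool:
    """Track which proxies are still usable and pick one at random."""

    def __init__(self, proxies: Iterable[str], seed: Optional[int] = None) -> None:
        # dict.fromkeys removes duplicates while preserving order.
        self._active: List[str] = list(dict.fromkeys(proxies))
        self._blocked: Set[str] = set()
        self._rng = random.Random(seed)

    @property
    def available(self) -> int:
        """Number of proxies that have not been marked as blocked."""
        return len(self._active)

    def pick(self) -> str:
        """Return a random usable proxy, or raise if none are left."""
        if not self._active:
            raise RuntimeError("no usable proxies left in the pool")
        return self._rng.choice(self._active)

    def mark_blocked(self, proxy: str) -> None:
        """Retire a proxy after the target website has blocked it."""
        if proxy in self._active:
            self._active.remove(proxy)
            self._blocked.add(proxy)
```

A scraper would call `mark_blocked` when a request through a given proxy fails with a block response, then call `pick` again for the retry. With a small pool, `pick` starts raising quickly; with a large one, blocked addresses barely dent the supply.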

Global coverage. Using country-specific IPs is necessary for entering local markets – and some providers of proxies for scraping can help you with that. For example, Infatica offers over 150 geolocations.

IP sourcing ethics. This factor covers whether the proxy service for scraping acquires its IP addresses ethically, which includes:

- Obtaining informed consent from proxy network users and

- Rewarding them for sharing their IPs.

Although this factor may seem like an afterthought, an ethically-sourced residential proxy network is actually more reliable and offers better performance. You can read Infatica’s white paper to learn more about this topic:

Infatica’s Residential Proxy Pool Handbook

Are “free proxies” OK for web scraping?

Proxies come in two tiers: free ones and their paid counterparts. Free proxies for bots are available to any web scraper without cost or registration, and they are often listed on various websites or forums. Free proxy lists may seem tempting and convenient for web scraping, but they are actually a bad idea for several reasons:

Security concerns: Free scraping proxies are often insecure and unreliable. They may expose your data and activities to malicious third parties, such as hackers, spammers, or scammers. They may also inject ads, malware, or viruses into your web traffic, or steal personal and sensitive information such as passwords and credit card numbers.

Subpar infrastructure: Free proxies often have subpar infrastructure because they are shared by a large number of users. They may suffer from slow speed, low bandwidth, high latency, and frequent downtime, which hurts performance and stability. A free proxy for crawling may also fail to handle complex or dynamic websites that require JavaScript or cookies.

Small IP address pools: Free proxies for scraping often have small IP address pools that are easily detected or blocked by websites. Their IP addresses may have low diversity and availability, and they may rotate so frequently that they trigger website defenses. The same IP addresses are also likely to be shared with many other users at once.

Therefore, using a free proxy for scraping is a bad idea that can compromise your security, data quality, and efficiency. It is better to use a paid provider that offers high-quality proxies and service. Paid proxies are secure, fast, reliable, and diverse, which helps you perform web scraping with far less hassle and risk.

So, how can you get proxies for free? If you don’t want to put your online security at risk, contact a paid proxy provider and ask for a test period: in most cases, you’ll get a free or cheap trial with a set amount of web traffic.

Conclusion

Residential proxies can help web scrapers perform various tasks, such as collecting product details, prices, reviews, and ratings from e-commerce websites; collecting posts, comments, likes, and shares from social media platforms; and collecting data on flights, hotels, and car rentals from travel websites.

However, using free residential proxies for web scraping is a bad idea that can compromise your security, data quality, and efficiency. Free residential proxies are often insecure, unreliable, and limited. It is better to use a paid provider that offers high-quality residential proxies and service. Paid residential proxies are secure, fast, reliable, and diverse, which helps you perform web scraping with far less hassle and risk.

Frequently Asked Questions

Some best practices and tips for using residential proxies effectively in web scraping are:

- Use a reputable and reliable proxy provider that offers high-quality proxies and service.

- Use a proxy rotation or management tool that can automatically switch and balance proxies based on various criteria.

- Use a web scraping tool or API that can handle proxies, browsers, and captchas efficiently.

- Use a reasonable request rate and delay that can avoid triggering website defenses.
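The last tip, a reasonable request rate and delay, can be sketched as a loop that pauses for a randomized interval between requests. The default delay values are illustrative assumptions, not recommendations from any particular website, and `fetch` stands for whatever function your scraper uses to download a page.

```python
import random
import time
from typing import Callable, List, Sequence


def polite_delay(base_seconds: float, jitter_seconds: float) -> float:
    """Return the base delay plus a random extra of up to jitter_seconds."""
    return base_seconds + random.uniform(0, jitter_seconds)


def crawl(
    urls: Sequence[str],
    fetch: Callable[[str], bytes],
    base_seconds: float = 2.0,
    jitter_seconds: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[bytes]:
    """Fetch each URL in order, pausing between consecutive requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            # Wait before every request except the first one.
            sleep(polite_delay(base_seconds, jitter_seconds))
        results.append(fetch(url))
    return results
```

The random jitter keeps requests from arriving at perfectly regular intervals, and the `sleep` parameter exists so the pause can be swapped out in tests.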