With the rise of digital technologies and mobile devices, news data is ever-present in our lives, and news scraping can help us collect it. But how should a news scraping pipeline be organized to work effectively? And why collect news data at all? In this article, we’ll take a closer look at these questions and show that news scraping can be an important source of actionable data.

What is news scraping?

News scraping is the automated process of extracting news articles, headlines, and other related content from online news websites. This technique involves using web crawlers or bots to navigate through web pages, identify relevant data such as article titles, publication dates, authors, and full texts, and then extract and store this information in a structured format, such as databases or files. News scraping is commonly used for aggregating news from multiple sources, conducting sentiment analysis, tracking trends, or creating customized news feeds.
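
To make the extraction step concrete, here is a minimal sketch that pulls a title, author, and publication date out of a page's `<head>`. It uses only Python's standard-library `html.parser`. The meta tag names in `WANTED` and the `SAMPLE` page are assumptions for illustration; real news sites differ in which tags they expose, so treat this as a starting point rather than a general solution.

```python
from html.parser import HTMLParser


class ArticleMetaParser(HTMLParser):
    """Collects the <title> text and a few <meta> tags from a page."""

    # Assumed meta names/properties; real sites vary widely.
    WANTED = {
        "og:title": "title",
        "author": "author",
        "article:published_time": "published",
    }

    def __init__(self):
        super().__init__()
        self.fields = {}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            key = attrs.get("property") or attrs.get("name")
            if key in self.WANTED and attrs.get("content"):
                # A matching meta tag overwrites any value taken from <title>.
                self.fields[self.WANTED[key]] = attrs["content"].strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # <title> text is used only when no og:title has been stored.
        if self._in_title and data.strip():
            self.fields.setdefault("title", data.strip())


def extract_metadata(html: str) -> dict:
    """Return the structured fields found in the given HTML string."""
    parser = ArticleMetaParser()
    parser.feed(html)
    return parser.fields


# Hypothetical page used only for illustration.
SAMPLE = """<html><head>
<title>Example headline | Example News</title>
<meta property="og:title" content="Example headline">
<meta name="author" content="Jane Doe">
<meta property="article:published_time" content="2024-05-01T09:30:00+00:00">
</head><body><p>Body text.</p></body></html>"""
```

Calling `extract_metadata(SAMPLE)` returns a dictionary with `title`, `author`, and `published` keys, which can then be stored in a file or database.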

How news scraping works

A news scraping pipeline is a systematic approach to extracting, processing, and storing news articles from various online sources. Here’s an overview of how such a pipeline typically works:

1. Source identification: Determine the websites from which you want to scrape news articles. These can include news portals, blogs, or specific sections of websites. Often, news sites provide RSS feeds which can be a straightforward source for scraping the latest articles.

2. Fetching data: Implement web crawlers to navigate and retrieve the HTML content of web pages. Tools like Scrapy, Requests, and Selenium are commonly used. Some news websites and aggregators offer APIs (e.g., News API, NY Times API) which provide structured access to their content without the need to scrape HTML.

3. Parsing and extracting content: Use HTML parsers (like BeautifulSoup or lxml) to parse the HTML content and extract relevant data such as titles, publication dates, authors, article texts, and metadata.

4. Cleaning and normalizing data: Remove unwanted elements like ads, navigation menus, or scripts, which may involve regex and string operations. Then standardize data formats, such as date formats and text encoding.

5. Storing data: Store the cleaned and structured data in a database (SQL or NoSQL). Databases like MongoDB, MySQL, or PostgreSQL are popular choices. Sometimes, storing data in files (like JSON or CSV) is sufficient for smaller datasets or simpler projects.

6. Deduplication: Implement mechanisms to detect and remove duplicate articles to ensure the uniqueness of stored data.

7. Updating and monitoring: Set up cron jobs or other scheduling systems to run the scraping process at regular intervals, ensuring that the news database stays current.

8. Data enrichment and analysis: Enrich articles with additional metadata such as sentiment scores, extracted keywords, and categories, then use the collected data for analytical purposes like trend analysis and topic modeling.
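
The cleaning, deduplication, and storage steps above can be sketched in a few lines. This example uses only the standard library and SQLite rather than the databases named in step 5, simply to stay self-contained. The field names, the table layout, and the hash-based fingerprint are assumptions chosen for illustration. Exact-match hashing only catches articles whose normalized text is identical, so near-duplicates would need a fuzzier technique.

```python
import hashlib
import re
import sqlite3
from datetime import datetime, timezone


def normalize(article: dict) -> dict:
    """Collapse whitespace and convert the date to ISO 8601 in UTC."""
    text = re.sub(r"\s+", " ", article["text"]).strip()
    # Assumes the source date is ISO 8601 with an explicit UTC offset.
    published = datetime.fromisoformat(article["published"]).astimezone(timezone.utc)
    return {
        "url": article["url"],
        "title": article["title"].strip(),
        "published": published.isoformat(),
        "text": text,
    }


def fingerprint(article: dict) -> str:
    """Hash of the case-folded title and text, used to spot duplicates."""
    payload = (article["title"] + "\n" + article["text"]).casefold()
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def store(conn: sqlite3.Connection, articles: list) -> int:
    """Insert articles, skipping any whose fingerprint is already stored."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS articles ("
        "fingerprint TEXT PRIMARY KEY, url TEXT, title TEXT, "
        "published TEXT, text TEXT)"
    )
    inserted = 0
    for raw in articles:
        art = normalize(raw)
        cur = conn.execute(
            "INSERT OR IGNORE INTO articles VALUES (?, ?, ?, ?, ?)",
            (fingerprint(art), art["url"], art["title"], art["published"], art["text"]),
        )
        inserted += cur.rowcount  # 1 if the row was new, 0 if it was ignored
    conn.commit()
    return inserted


# Hypothetical records: the same story syndicated under two URLs.
SAMPLE = [
    {"url": "https://example.com/a", "title": "Markets rally ",
     "published": "2024-05-01T11:30:00+02:00", "text": "Stocks  rose\n sharply."},
    {"url": "https://example.org/b", "title": "Markets Rally",
     "published": "2024-05-01T09:30:00+00:00", "text": "Stocks rose sharply."},
]
```

With `conn = sqlite3.connect(":memory:")`, calling `store(conn, SAMPLE)` keeps only one of the two records, because both normalize to the same title and text. Running the same function from a scheduler (step 7) then adds only articles that have not been seen before.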

What news data can you collect?

During news scraping, various types of data can be collected from news articles and websites. Here are some of the key data elements typically extracted:

| Data type | Description | Use cases |
|---|---|---|
| Article title | The headline of the news article. | Provides a quick summary of the article’s content and is essential for indexing and search purposes. |
| Publication date and time | The date and time when the article was published. | Critical for time-sensitive analyses, trending topics, and historical data organization. |
| Author(s) | The name(s) of the person(s) who wrote the article. | Useful for attributing content, tracking author-specific trends, and credibility assessments. |
| Article URL | The web address of the news article. | Necessary for source verification, referencing, and future visits. |
| Main content | The full text of the article. | Central to content analysis, sentiment analysis, keyword extraction, and other text-based analyses. |
| Images and media | Photos, videos, and other multimedia elements included in the article. | Enhances understanding of the content and provides material for visual analysis. |
| Tags and categories | Keywords, tags, or categories assigned to the article by the publisher. | Facilitates content organization, search, and filtering by topic. |
| Meta description and keywords | SEO-related metadata that provides a summary and keywords for the article. | Useful for search engine optimization (SEO) analyses and understanding how articles are marketed. |
| Comments and user interactions | Comments left by readers, likes, shares, and other forms of user interaction. | Provides insights into audience engagement and opinions. |
| Source name and domain | The name of the news outlet and its domain (e.g., nytimes.com). | Essential for source attribution, credibility assessment, and domain-specific analyses. |
| Related articles and links | Links to related articles, either within the same website or external sources. | Useful for understanding the context and connections between different pieces of content. |
| Geolocation data | Information about the geographical location relevant to the news article. | Important for regional analysis, mapping news trends, and localized content delivery. |
| Language | The language in which the article is written. | Necessary for language-specific processing and multi-lingual analysis. |
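
One way to keep these fields consistent across sources is to define a single record type up front. The sketch below is a hypothetical schema covering a subset of the table. The class name, field names, and the choice of which fields are optional are all assumptions, not a standard.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class Article:
    """Hypothetical record for one scraped article."""

    url: str
    title: str
    published: str  # ISO 8601 timestamp
    source_domain: str
    text: str
    # Fields that many pages omit get empty defaults.
    authors: List[str] = field(default_factory=list)
    tags: List[str] = field(default_factory=list)
    language: Optional[str] = None


def to_json(article: Article) -> str:
    """Serialize a record for storage in a JSON file or document database."""
    return json.dumps(asdict(article), ensure_ascii=False)
```

Required fields fail loudly at construction time when a scraper misses them, while optional ones default to empty values instead of breaking the pipeline.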

News scraping use cases

News scraping has a wide range of use cases across various industries and applications. Here are some of the most common use cases:

1. Media monitoring and competitive analysis involves tracking mentions of a company, product, or competitor across different news sources. Businesses use news scraping to monitor their brand's presence in the media, analyze competitors’ media coverage, and stay informed about industry trends and market developments.

2. Sentiment analysis involves analyzing the tone and sentiment of news articles to gauge public opinion. Organizations use sentiment analysis to understand public perception of their brand, products, or key issues. This can help in shaping marketing strategies and public relations efforts.

3. Content aggregation and curation involves collecting articles from various sources to create comprehensive news feeds or newsletters. News aggregators and content curators use scraping to provide users with customized news feeds based on their interests. This can also be used to power news sections of websites or apps.

4. Trend analysis and market research involves identifying emerging trends and insights by analyzing large volumes of news data. Researchers and analysts use news scraping to identify trends, track the popularity of certain topics over time, and conduct market research.

5. Academic research involves gathering data for academic studies in fields like journalism, communication, and political science. Scholars use news scraping to collect data for studies on media bias, the dissemination of information, and the impact of news on public opinion.

6. Financial and investment analysis involves monitoring financial news and market developments to inform investment decisions. Investors and financial analysts use news scraping to stay updated on market trends, company announcements, and economic indicators that can impact investment strategies.

7. Event detection and real-time alerts involve detecting and alerting users to significant events as they happen. News scraping can be used to create real-time alert systems that notify users of breaking news, natural disasters, or other significant events.

8. Data journalism involves using scraped data to support investigative journalism and create data-driven stories. Journalists use news scraping to gather data for in-depth reports, uncover patterns, and support their storytelling with empirical evidence.

9. Legal and compliance monitoring involves keeping track of regulatory news and legal developments. Legal professionals and compliance officers use news scraping to stay informed about new laws, regulations, and legal precedents that could impact their clients or organizations.

10. Crisis management involves monitoring news coverage of crises to manage response strategies. Organizations use news scraping during crises to monitor media coverage, understand the public narrative, and adapt their communication strategies accordingly.

11. Archiving and historical research involves creating archives of news articles for historical reference and research. Libraries, historians, and researchers use news scraping to build archives of news content that can be accessed for historical analysis and research purposes.

12. Advertising and marketing insights involve analyzing news content to understand advertising trends and media placements. Marketing professionals use news scraping to analyze how and where competitors are advertising, and to identify potential opportunities for their own media campaigns.
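
As a small illustration of the media monitoring and trend analysis use cases, the sketch below counts how many articles mention a keyword on each day. The record layout (`title`, `text`, and an ISO 8601 `published` string) and the sample data are assumptions. The match is a plain case-insensitive substring test, so it will also count longer words that contain the keyword.

```python
from collections import Counter


def mentions_per_day(articles, keyword):
    """Count articles per publication day whose title or text mentions keyword."""
    needle = keyword.casefold()
    counts = Counter()
    for art in articles:
        haystack = (art["title"] + " " + art["text"]).casefold()
        if needle in haystack:
            # The first 10 characters of an ISO 8601 timestamp are YYYY-MM-DD.
            counts[art["published"][:10]] += 1
    return dict(sorted(counts.items()))


# Hypothetical scraped records about a made-up company.
SAMPLE_ARTICLES = [
    {"title": "Acme launches product", "text": "Details inside.",
     "published": "2024-05-01T09:30:00+00:00"},
    {"title": "Markets today", "text": "Shares of ACME rose.",
     "published": "2024-05-01T15:00:00+00:00"},
    {"title": "Weather", "text": "Sunny.",
     "published": "2024-05-02T08:00:00+00:00"},
    {"title": "Acme recall", "text": "Units affected.",
     "published": "2024-05-03T07:45:00+00:00"},
]
```

Here `mentions_per_day(SAMPLE_ARTICLES, "acme")` reports two mentions on 2024-05-01 and one on 2024-05-03. The same counts could feed a dashboard or a real-time alert threshold.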

Challenges in news scraping

However, news scraping comes with several challenges, and tools like Infatica proxies can help mitigate some of them. Here are some common challenges in news scraping and how Infatica proxies can help:

IP Blocking and Rate Limiting

Websites often implement measures to detect and block IP addresses that make too many requests in a short period. This is done to prevent abuse and overloading of servers.

Infatica proxies can distribute requests across a pool of IP addresses, reducing the frequency of requests from any single IP and avoiding detection.
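
A rough sketch of client-side rotation is shown below, using only the standard library's `urllib.request`. The proxy URLs are placeholders, not real Infatica endpoints; actual hosts, ports, and credentials come from the provider's dashboard. Some providers also rotate IPs behind a single gateway address, in which case this round-robin logic is unnecessary.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints used only for illustration.
PROXIES = [
    "http://user:pass@proxy1.example.com:8080",
    "http://user:pass@proxy2.example.com:8080",
    "http://user:pass@proxy3.example.com:8080",
]


class ProxyRotator:
    """Hands out proxies in round-robin order."""

    def __init__(self, proxies):
        self._cycle = itertools.cycle(proxies)

    def next_opener(self):
        """Return the next proxy URL and an opener that routes through it."""
        proxy = next(self._cycle)
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)


def fetch(rotator, url, timeout=10.0):
    """Fetch a URL through the next proxy in the rotation."""
    _proxy, opener = rotator.next_opener()
    with opener.open(url, timeout=timeout) as resp:
        return resp.read()
```

Each call to `fetch` goes out through a different proxy, so with three proxies any single IP sees roughly a third of the requests.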

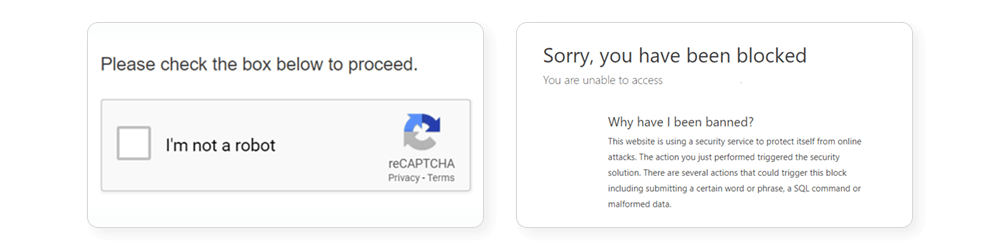

CAPTCHA and Anti-Bot Measures

Many websites use CAPTCHAs and other anti-bot mechanisms to prevent automated scraping.

Infatica proxies can help by rotating IP addresses, which may reduce the likelihood of encountering CAPTCHAs. In some cases, combining proxies with CAPTCHA-solving services can be effective.

Geographical Restrictions

Some websites restrict access to content based on the user’s geographic location.

Infatica offers a network of proxies from various geographic locations, allowing scrapers to access region-specific content by routing requests through proxies in the desired location.

Dynamic and JavaScript-Rendered Content

Websites that heavily rely on JavaScript to render content can be challenging to scrape using traditional methods.

While proxies alone can’t solve this problem, combining Infatica proxies with tools like Selenium or Puppeteer can help access and scrape dynamic content by rendering it as a user would in a real browser.

Ensuring Anonymity

Scraping activities can sometimes be traced back to the scraper’s IP address, leading to potential blocking or legal issues.

Infatica proxies provide anonymity by masking the scraper’s IP address, making it difficult for websites to trace the requests back to the original source.

Maintaining Performance and Speed

High-frequency scraping can lead to performance issues, including slow response times and potential server bans.

Using Infatica’s proxy network can help distribute the load, maintaining performance and speed by avoiding throttling from target websites.
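
Proxies aside, a scraper can also avoid throttling by slowing down when a site pushes back. The sketch below retries with exponential backoff plus a little random jitter. The retry count, delay values, and the choice to retry only on HTTP 429 and 503 are assumptions that should be tuned per target site.

```python
import random
import time
import urllib.error
import urllib.request


def backoff_delays(retries, base=1.0, cap=30.0):
    """Exponential delays: base, 2*base, 4*base, ... capped at cap seconds."""
    return [min(cap, base * 2 ** attempt) for attempt in range(retries)]


def fetch_with_retry(url, retries=4, opener=urllib.request.urlopen, sleep=time.sleep):
    """Fetch a URL, waiting longer after each throttled or failed attempt."""
    last_error = None
    for delay in backoff_delays(retries):
        try:
            with opener(url, timeout=10) as resp:
                return resp.read()
        except urllib.error.HTTPError as err:
            # Retry only on "too many requests" or "service unavailable".
            if err.code not in (429, 503):
                raise
            last_error = err
        except urllib.error.URLError as err:
            last_error = err  # Network-level failure, worth another try.
        # Jitter keeps parallel workers from retrying in lockstep.
        sleep(delay + random.uniform(0, 0.5))
    raise RuntimeError(f"giving up on {url}") from last_error
```

The `opener` and `sleep` parameters exist so the function can be combined with a proxy-aware opener or exercised in tests without real network calls or waiting.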

How Infatica Proxies Can Help With News Scraping

By leveraging Infatica proxies, you can address many of the common challenges in news scraping, ensuring more efficient, reliable, and anonymous data extraction from news websites. This allows you to focus on analyzing and utilizing the scraped data without worrying about technical obstacles and restrictions.

1. IP rotation: Infatica provides a large pool of residential and data center proxies. This allows for automatic IP rotation, which distributes requests across multiple IPs, mimicking natural browsing behavior and reducing the risk of getting blocked.

2. High availability and reliability: Infatica’s proxy network is designed to be highly available and reliable, ensuring continuous access to target websites without interruptions due to IP bans.

3. Global coverage: Infatica offers proxies from various countries and regions, enabling scrapers to bypass geo-restrictions and access localized content that would otherwise be unavailable.

4. Scalability: Infatica’s infrastructure supports scalable scraping operations, making it suitable for both small-scale projects and large-scale data extraction needs.

5. Enhanced security: Using Infatica proxies enhances security by masking the scraper’s IP address, reducing the risk of being traced or targeted by anti-scraping measures.

Tools and technologies for news scraping

| Technology | Description | Features |
|---|---|---|
| Scrapy | Open-source and collaborative web crawling framework for Python. It is designed for web scraping but can also be used to extract data using APIs or as a general-purpose web crawler. | Easy to extend with a robust ecosystem of plugins. Built-in support for handling requests, parsing responses, and following links. Can handle multiple pages and concurrent requests efficiently. |
| BeautifulSoup | Python library for parsing HTML and XML documents. It creates a parse tree for parsed pages that can be used to extract data easily. | User-friendly, with a simple and intuitive syntax. Supports different parsers (e.g., lxml, html.parser). Excellent for navigating, searching, and modifying the parse tree. |
| Selenium | Browser automation tool used to control web browsers through programs and automate web application testing. It is also used for scraping dynamic content rendered by JavaScript. | Supports multiple browsers (Chrome, Firefox, Safari, etc.). Can handle JavaScript-heavy websites that require interaction to load content. Useful for automated testing and simulating user interactions. |
| Requests | Simple and elegant HTTP library for Python. It is designed to make HTTP requests simpler and more human-friendly. | Easy to send HTTP/1.1 requests using methods like GET, POST, PUT, DELETE. Supports cookies, sessions, and multipart file uploads. Handles URL query strings and form-encoded data easily. |
| Puppeteer | Node.js library that provides a high-level API to control Chrome or Chromium over the DevTools Protocol. It is primarily used for automating web page interaction. | Can generate screenshots and PDFs of web pages. Supports automated form submission, UI testing, keyboard input, etc. Excellent for scraping and interacting with JavaScript-rendered content. |
| lxml | Python library that provides a comprehensive API for parsing and manipulating XML and HTML documents. It is known for its performance and ease of use. | Fast and memory-efficient. Supports XPath and XSLT for powerful document querying and transformation. Integrates well with other Python libraries like BeautifulSoup. |
| Newspaper3k | Python library specifically designed for extracting and curating articles. It’s a simple yet powerful tool for scraping news articles. | Automatically extracts article text, authors, publish date, and keywords. Provides an easy-to-use API for downloading and parsing articles. Supports article categorization and summary generation. |
| RSS feeds | Many news websites offer RSS feeds, which are XML files that provide summaries of web content. These feeds can be parsed and processed to extract news articles. | Easy to access and parse using libraries like feedparser. Provides structured and regularly updated data. Reduces the need for complex scraping of HTML content. |
| Airflow | Open-source platform to programmatically author, schedule, and monitor workflows. It is often used to automate and manage web scraping pipelines. | Supports defining workflows as code, making them easy to maintain and version control. Provides scheduling and monitoring capabilities. Scales well with large and complex workflows. |
| MongoDB | NoSQL database that stores data in flexible, JSON-like documents. It is often used for storing scraped data due to its scalability and flexibility. | Schema-less design allows for storing diverse data structures. Scales horizontally, making it suitable for large datasets. Provides powerful querying capabilities and easy integration with various programming languages. |
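
As a quick illustration of the RSS route from the table, the sketch below parses an RSS 2.0 document with the standard library's `xml.etree.ElementTree` instead of feedparser, purely to avoid a third-party dependency. The sample feed is made up, and the code assumes plain RSS 2.0 elements (`item`, `title`, `link`, `pubDate`). Atom feeds and namespaced extensions would need extra handling, which is where a dedicated library helps.

```python
import xml.etree.ElementTree as ET
from email.utils import parsedate_to_datetime


def parse_rss(xml_text):
    """Return title, URL, and ISO 8601 date for each item in an RSS 2.0 feed."""
    root = ET.fromstring(xml_text)
    items = []
    for item in root.iter("item"):
        pub = item.findtext("pubDate")
        items.append({
            "title": (item.findtext("title") or "").strip(),
            "url": (item.findtext("link") or "").strip(),
            # RSS dates use the RFC 822 style, e.g. "Wed, 01 May 2024 09:30:00 +0000".
            "published": parsedate_to_datetime(pub).isoformat() if pub else None,
        })
    return items


# Hypothetical feed used only for illustration.
SAMPLE_FEED = """<rss version="2.0">
  <channel>
    <title>Example News</title>
    <item>
      <title>Example headline</title>
      <link>https://example.com/news/1</link>
      <pubDate>Wed, 01 May 2024 09:30:00 +0000</pubDate>
    </item>
    <item>
      <title>Undated story</title>
      <link>https://example.com/news/2</link>
    </item>
  </channel>
</rss>"""
```

`parse_rss(SAMPLE_FEED)` yields two records, the second with `published` set to `None`. The resulting URLs can then be handed to a fetcher and HTML parser to retrieve full article text.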