Google is an ever-changing organism – and changes to its backend structure are no surprise. However, their recent update was particularly important: Dubbed “data blackout” by the web scraping community, it created a significant disruption in many data collection pipelines.

Here’s the good news: Infatica’s Google scraping services remain fully operational. In this article, we’ll explore this Google update in greater detail – and learn how Infatica experts managed to bypass it.

Google January Update Explained

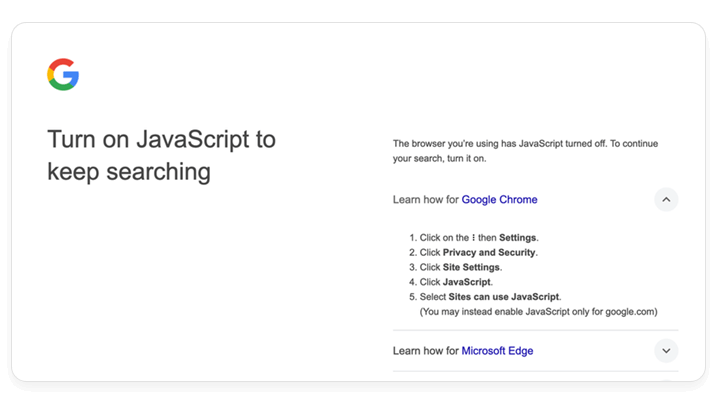

On January 15, 2025, Google rolled out a significant update that prevented users from accessing Google Search if they had JavaScript disabled. JavaScript plays a crucial role in rendering and delivering dynamic web content. Unlike static HTML pages, modern websites – including Google Search – use JavaScript to generate and modify elements in real time, load additional data asynchronously, and improve user experience through interactivity. Search engines, e-commerce sites, and social media platforms heavily depend on JavaScript to present content efficiently and prevent abuse.

By enforcing JavaScript execution, Google strengthened its control over how search results are accessed and interacted with. However, Google had to sacrifice some of its usability – more on that below.

The Impact of These Changes

Google asserts that “fewer than 0.1%” of searches are performed without JavaScript enabled. While this seems like a negligible percentage, in absolute numbers, it still represents millions of searches per day. This update disproportionately affects privacy-conscious users, specialized browsers, and automated systems like SEO tools and web scrapers that rely on traditional HTML-based parsing.

Many accessibility-dependent users rely on lightweight browsers, screen readers, or simplified browsing environments where JavaScript is disabled to improve readability and reduce distractions. With this update, those users now face barriers in accessing Google Search, limiting their ability to retrieve essential information.

Similarly, privacy-conscious users who disable JavaScript to minimize tracking and fingerprinting found themselves locked out of Google Search, forcing them to either enable JavaScript – compromising their privacy – or seek alternative search engines.

Ultimately, while Google frames this as a security and UX improvement, it also serves their broader goal of controlling how their search data is accessed and monetized.

The Fallout for SEO Tools and Automated Systems

Beyond everyday users, this change had a major impact on businesses and services that rely on automated web data collection, particularly SEO tools, rank trackers, and competitive intelligence platforms. Many of these tools operate in headless browsing environments with JavaScript disabled to improve efficiency, reduce resource consumption, and process large volumes of search queries at scale. However, with Google enforcing JavaScript execution, these tools either stopped working altogether or experienced drastic slowdowns as they had to switch to resource-intensive methods involving full browser automation.

For companies that rely on search data to track rankings, analyze trends, and optimize their online presence, this update disrupted key workflows, forcing them to either adopt expensive workarounds or risk falling behind their competitors.

How Infatica Overcame These Changes

While many web scraping solutions struggled with Google’s new JavaScript requirement, Infatica’s scrapers remained operational thanks to advanced JavaScript rendering and anti-blocking mechanisms. Our technology allows us to fully execute JavaScript while maintaining a low detection footprint, ensuring seamless data extraction from Google Search.

JavaScript Rendering: Executing Google’s Search Logic

Since Google now requires JavaScript execution to access search results, our scrapers use headless browsers that fully render web pages, just like a real user’s browser would. This allows us to:

- Load dynamic search result pages, including JavaScript-powered elements like “People Also Ask” and auto-generated snippets.

- Accurately extract search rankings and metadata that would otherwise be hidden in raw HTML.

- Adapt to Google’s ever-changing frontend logic, ensuring consistent scraping performance.

Advanced Anti-Blocking Mechanisms

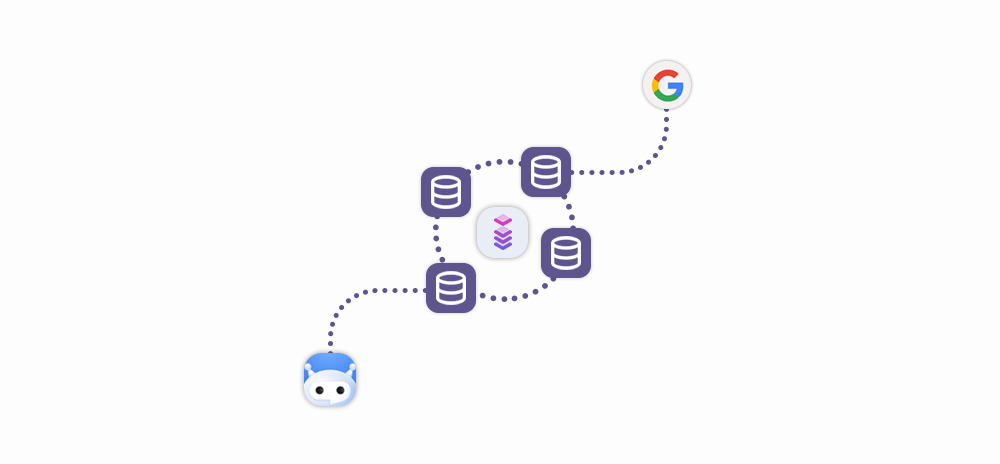

To avoid detection and maintain uninterrupted access to Google Search, Infatica employs multiple anti-blocking techniques:

- IP rotation: Our system automatically cycles through a large pool of residential and datacenter IPs, preventing Google from detecting repeated automated requests from the same address. This mimics real user behavior and reduces the risk of captchas or bans.

- User-Agent simulation: We dynamically alter user-agent strings to match legitimate browser requests, making our scrapers indistinguishable from normal human users. This prevents Google from flagging requests as automated.

- Residential proxies: Unlike datacenter proxies, residential proxies use real IP addresses assigned by ISPs, making them much harder for Google to detect and block. By leveraging a diverse residential proxy network, we ensure that our requests appear natural and distributed across multiple locations.

Ready to unlock the power of Google data? Infatica’s scraping solutions are simple, efficient, and tailored to your needs. Get started now and see the difference.