In today’s data-driven world, organizations rely on information from countless sources – internal systems, third-party databases, and the web – to make decisions. But not all data is equally reliable. Inaccurate, incomplete, or outdated data can quickly lead to flawed analyses, misguided strategies, and lost opportunities.

Let’s explore data verification: how it differs from data validation, why it plays a vital role in maintaining data integrity, and, more importantly, how reliable collection infrastructure – like Infatica’s Web Scraper API – helps minimize errors at the source.

Data Verification vs. Data Validation

| Aspect | Data Validation | Data Verification |
|---|---|---|
| Definition | Checks whether data is correctly formatted, complete, and compliant before processing. | Confirms that data is accurate, reliable, and reflects the real-world truth after collection or processing. |
| Main Purpose | Ensures data meets required rules, formats, and constraints. | Ensures data is correct, trustworthy, and suitable for decision-making. |
| Key Questions Answered | “Is this data acceptable?” “Does it follow the required structure?” | “Is this data true?” “Does it match reality?” |
| Typical Checks | Format checks, type checks, mandatory field checks, range checks, schema validation. | Cross-referencing with authoritative sources, deduplication, anomaly detection, consistency checks. |
| Stage in Workflow | Early stage: during data entry, ingestion, or scraping. | Later stage: after collection, cleaning, or integration. |
| Sources Used | Internal rules, schemas, and business logic. | External truth sources, historical data, multi-source comparison. |
| Common Tools | Input validators, ETL/ELT pipelines, schema validators. | Data quality platforms, rule engines, monitoring systems. |
| Relevance to Web-Scraped Data | Ensures scraped data is complete and properly structured. | Confirms scraped data reflects real-world values despite site changes, geo differences, or collection errors. |
| Outcome | Clean, well-structured data ready for further processing. | Accurate, trustworthy data ready for analytics, automation, and decision-making. |

The Core Principles of Data Verification

Data verification ensures that the information flowing into analytics systems, automation pipelines, and business tools is accurate, trustworthy, and fully aligned with real-world values. While specific verification workflows differ across industries and datasets, most rely on several universal principles. Understanding these principles helps teams design more reliable data pipelines – especially when working with dynamic, fast-changing web data.

Accuracy

Accuracy measures how closely the collected data matches the real-world truth. For example, if a hotel room’s price changes overnight, verified data must reflect the most recent value rather than a cached or outdated version. In web-scraping scenarios, accuracy often depends on handling dynamic content, redirects, and location-based variations.

Consistency

Consistency ensures that data remains uniform across sources, formats, and time periods. A dataset that uses different naming conventions or conflicting values for the same entity can cause errors in downstream systems. Verification ensures that the same rules and definitions apply universally – essential for multi-source scraping pipelines or datasets aggregated from different websites.
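A minimal Python sketch of one consistency check: group scraped records by a shared entity identifier and flag entities whose values disagree across sources. The field names (`product_id`, `brand`, `source`) and the case/whitespace normalization are assumptions for illustration.

```python
from collections import defaultdict

def find_conflicts(records, key="product_id", field="brand"):
    """Return entity keys whose `field` value differs across sources."""
    seen = defaultdict(set)
    for rec in records:
        # Normalize case and whitespace so "Acme " and "acme" count as the same value.
        value = str(rec.get(field, "")).strip().lower()
        seen[rec[key]].add(value)
    return sorted(k for k, values in seen.items() if len(values) > 1)

records = [
    {"product_id": "A1", "brand": "Acme", "source": "site-a"},
    {"product_id": "A1", "brand": "acme ", "source": "site-b"},
    {"product_id": "B2", "brand": "Globex", "source": "site-a"},
    {"product_id": "B2", "brand": "Initech", "source": "site-b"},
]
print(find_conflicts(records))  # ['B2']
```

Flagged entities still need a resolution rule, such as preferring a designated trusted source, which this sketch leaves open.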

Timeliness

Data loses value as it gets older. Timeliness verification ensures that records stay fresh and relevant to the use case – critical for pricing intelligence, market monitoring, and real-time dashboards. When scraping websites where values change frequently, automated timestamping and re-validation help maintain up-to-date information.
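As an illustration of a timeliness check, this sketch assumes each record carries a hypothetical `scraped_at` timestamp (timezone-aware UTC) written at collection time, and flags records older than a use-case-specific maximum age so they can be re-collected.

```python
from datetime import datetime, timedelta, timezone

def stale_records(records, max_age, now=None):
    """Return records whose 'scraped_at' timestamp is older than max_age."""
    if now is None:
        now = datetime.now(timezone.utc)
    return [r for r in records if now - r["scraped_at"] > max_age]

# Fixed "now" so the example is reproducible.
now = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
records = [
    {"sku": "X1", "price": 19.99, "scraped_at": now - timedelta(hours=2)},
    {"sku": "X2", "price": 5.49, "scraped_at": now - timedelta(days=3)},
]
print([r["sku"] for r in stale_records(records, max_age=timedelta(hours=24), now=now)])  # ['X2']
```

For pricing intelligence the maximum age might be hours; for slower-moving catalogs it could be days.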

Completeness

Completeness checks whether all required data fields are present and correctly populated. Missing product attributes, empty tables, or truncated HTML responses often indicate scraping issues. Verification processes catch these gaps early, preventing incomplete datasets from entering production workflows.
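A minimal completeness check, assuming a hypothetical list of required fields. It treats missing keys, `None`, and whitespace-only strings as gaps, but deliberately keeps falsy values such as a price of `0`, which may be legitimate.

```python
# Hypothetical set of fields every product record must carry.
REQUIRED_FIELDS = ("sku", "title", "price", "currency")

def is_blank(value):
    """None and whitespace-only strings count as missing; 0 and False do not."""
    return value is None or (isinstance(value, str) and not value.strip())

def missing_fields(record, required=REQUIRED_FIELDS):
    """Return the required fields that are absent or blank in this record."""
    return [f for f in required if is_blank(record.get(f))]

record = {"sku": "X1", "title": "  ", "price": 19.99}  # title came back empty, currency absent
print(missing_fields(record))  # ['title', 'currency']
```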

Uniqueness

Uniqueness ensures that each record appears only once. Duplicates commonly arise when scraping paginated content, infinite scroll pages, or multiple sources referring to the same entity. Verification processes use identifiers and rules to detect and remove duplicates, preserving clean, deduplicated datasets.
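A sketch of identifier-based deduplication, assuming the combination of a hypothetical `source` and `product_id` uniquely identifies an item. The first occurrence wins, which preserves the original page order.

```python
def deduplicate(records, key_fields=("source", "product_id")):
    """Keep the first record seen for each identifier tuple."""
    seen = set()
    unique = []
    for rec in records:
        key = tuple(rec.get(f) for f in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique

page1 = [{"source": "shop", "product_id": 1}, {"source": "shop", "product_id": 2}]
page2 = [{"source": "shop", "product_id": 2}, {"source": "shop", "product_id": 3}]  # item 2 repeated across pages
print([r["product_id"] for r in deduplicate(page1 + page2)])  # [1, 2, 3]
```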

Integrity

Integrity refers to the correctness of relationships between data points – such as matching product IDs, category names, SKUs, or attributes. For scraped data, broken relationships often arise from HTML structure changes, inconsistent selectors, or partial page loads. Verification ensures that the data model remains coherent and logically connected.
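One way to check integrity is a referential check: every product must point to a category that actually exists. The `category_id` field and the two tables below are hypothetical.

```python
def orphaned_products(products, categories):
    """Return products whose category_id does not exist in the category table."""
    known = {c["category_id"] for c in categories}
    return [p for p in products if p.get("category_id") not in known]

categories = [{"category_id": "c1", "name": "Laptops"}, {"category_id": "c2", "name": "Phones"}]
products = [
    {"sku": "P1", "category_id": "c1"},
    {"sku": "P2", "category_id": "c9"},  # broken link, e.g. from a changed selector
    {"sku": "P3"},                       # category missing entirely
]
print([p["sku"] for p in orphaned_products(products, categories)])  # ['P2', 'P3']
```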

How Data Verification Works: Methods & Techniques

Data verification combines automated checks, rule-based logic, and sometimes manual review to ensure that collected information is accurate and trustworthy. Because no single method can catch every issue, verification typically uses multiple complementary techniques.

Automated Verification Techniques

Automated methods form the backbone of modern verification workflows. They scale well, run continuously, and detect issues long before they impact downstream systems.

Pattern & format checks: Automation can verify that data fields match expected structures – such as date formats, numeric ranges, currency symbols, or product identifiers. These checks catch malformed values early.
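A sketch of pattern checks using Python's `re` module. The patterns, especially the SKU layout, are assumptions; a real pipeline would derive them from each source's actual formats. Only fields that are present are checked, since missing fields are a completeness issue.

```python
import re

# Hypothetical per-field patterns; adjust to the formats your sources actually use.
PATTERNS = {
    "date": re.compile(r"\d{4}-\d{2}-\d{2}"),  # ISO 8601 calendar date
    "price": re.compile(r"\d+(\.\d{2})?"),     # e.g. 19 or 19.99
    "currency": re.compile(r"[A-Z]{3}"),       # three-letter code such as USD
    "sku": re.compile(r"[A-Z]{2}-\d{4,}"),     # assumed SKU layout
}

def format_errors(record):
    """Return the present fields whose value does not fully match its pattern."""
    return [f for f, pattern in PATTERNS.items()
            if f in record and not pattern.fullmatch(str(record[f]))]

print(format_errors({"date": "2024-05-01", "price": "19,99", "currency": "usd", "sku": "AB-12345"}))
# ['price', 'currency']
```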

Schema & type validation: Systems compare incoming data to a defined schema, ensuring that all fields exist and contain the correct types (strings, numbers, arrays, objects). This prevents structural inconsistencies from breaking analytics pipelines.
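A minimal hand-rolled schema and type check; the schema itself is hypothetical. In production, a dedicated library such as `jsonschema` or `pydantic` would typically take this role, but the idea is the same: compare each record to a declared structure and report every deviation.

```python
# Hypothetical schema: field name -> expected Python type.
SCHEMA = {"sku": str, "price": float, "in_stock": bool, "tags": list}

def schema_errors(record, schema=SCHEMA):
    """Return a human-readable error for every missing or mistyped field."""
    errors = []
    for field, expected in schema.items():
        if field not in record:
            errors.append(f"{field}: missing")
        elif not isinstance(record[field], expected):
            errors.append(f"{field}: expected {expected.__name__}, got {type(record[field]).__name__}")
    return errors

print(schema_errors({"sku": "X1", "price": "19.99", "tags": []}))
# ['price: expected float, got str', 'in_stock: missing']
```

Under this strict schema an integer price such as `20` is also reported; whether to accept it is a design choice for each pipeline.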

Deduplication algorithms: Algorithms compare new records to existing entries using unique identifiers, fuzzy matching, or hashing. This helps eliminate duplicates created during pagination or multi-source scraping.
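This sketch combines two of the approaches named above: a SHA-256 fingerprint over normalized fields for exact duplicates, and `difflib.SequenceMatcher` for fuzzy title matching. The chosen fields and the 0.9 similarity threshold are assumptions to be tuned per dataset.

```python
import hashlib
from difflib import SequenceMatcher

def content_hash(record, fields=("title", "price")):
    """Fingerprint for exact duplicates: hash of case- and whitespace-normalized fields."""
    raw = "|".join(str(record.get(f, "")).strip().lower() for f in fields)
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()

def near_duplicate_titles(a, b, threshold=0.9):
    """Fuzzy match: treat titles as the same item when their similarity ratio >= threshold."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio() >= threshold

a = {"title": "Acme Mouse ", "price": "19.99"}
b = {"title": "acme mouse", "price": "19.99"}
print(content_hash(a) == content_hash(b))  # True
print(near_duplicate_titles("Acme Wireless Mouse M100", "ACME Wireless Mouse - M100"))  # True
```

Pairwise fuzzy comparison grows quadratically with dataset size, so large pipelines usually compare only within candidate groups, such as items of the same brand or category.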

Cross-referencing with known sources: Automated systems can match collected values against internal reference tables or trusted external datasets. This method verifies accuracy by comparing data to authoritative benchmarks.
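A sketch of cross-referencing against a trusted reference table keyed by SKU, using `math.isclose` with a relative tolerance to absorb rounding differences. The 1% tolerance and the sample values are illustrative assumptions.

```python
import math

def cross_reference(scraped, reference, rel_tol=0.01):
    """Compare scraped SKU -> price values with a trusted reference table."""
    issues = {}
    for sku, value in scraped.items():
        if sku not in reference:
            issues[sku] = "not in reference"
        elif not math.isclose(value, reference[sku], rel_tol=rel_tol):
            issues[sku] = f"scraped {value} vs reference {reference[sku]}"
    return issues

scraped = {"A1": 19.99, "B2": 45.00, "C3": 7.50}
reference = {"A1": 19.99, "B2": 49.00}
print(cross_reference(scraped, reference))
# {'B2': 'scraped 45.0 vs reference 49.0', 'C3': 'not in reference'}
```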

Anomaly & outlier detection: Machine learning or rule-based logic flags values that deviate from historical patterns – for example, a sudden price drop of 90% or a missing SKU attribute. These anomalies often indicate scraping failures or incorrect extraction.
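A rule-based sketch of the 90% price-drop example, comparing each current price with the median of that SKU's recorded price history. The history structure and the threshold are assumptions; a statistical or ML-based detector could replace the rule.

```python
from statistics import median

def price_anomalies(history, current, max_drop=0.9):
    """Flag SKUs whose current price fell by at least max_drop versus their historical median."""
    flagged = []
    for sku, price in current.items():
        past = history.get(sku)
        if not past:
            continue  # no baseline yet, so there is nothing to compare against
        baseline = median(past)
        if baseline > 0 and (baseline - price) / baseline >= max_drop:
            flagged.append(sku)
    return flagged

history = {"A1": [100.0, 98.0, 102.0], "B2": [20.0, 21.0, 19.5]}
current = {"A1": 9.99, "B2": 19.0}
print(price_anomalies(history, current))  # ['A1']
```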

Manual and Hybrid Verification

Despite advances in automation, human review remains a key component of verification – especially for ambiguous cases or new data sources.

Spot checks: Analysts manually inspect random samples to confirm accuracy, validity, and contextual correctness. This is especially useful when onboarding new websites or extraction rules.
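A sketch of reproducible spot-check sampling, assuming each record has a hypothetical `source` field. Sampling per source keeps a newly onboarded website represented even when it contributes few records, and a fixed seed lets a reviewer regenerate the exact same sample.

```python
import random
from collections import defaultdict

def spot_check_sample(records, per_source=3, seed=None):
    """Draw up to `per_source` random records from each source for manual review."""
    rng = random.Random(seed)  # a fixed seed makes the sample reproducible for audits
    by_source = defaultdict(list)
    for rec in records:
        by_source[rec["source"]].append(rec)
    sample = []
    for source in sorted(by_source):  # stable order so the same seed yields the same sample
        group = by_source[source]
        sample.extend(rng.sample(group, min(per_source, len(group))))
    return sample

records = ([{"source": "new-site", "id": i} for i in range(50)]
           + [{"source": "legacy-site", "id": i} for i in range(2)])
sample = spot_check_sample(records, per_source=3, seed=7)
print(len(sample))  # 5: both legacy-site records plus three from new-site
```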

Review of edge cases: Humans resolve exceptions where automated systems lack enough context – such as atypical product descriptions, regional naming differences, or unexpected page layouts.

Human-in-the-loop systems: Some workflows integrate human feedback directly into automated models, improving the accuracy of future verification cycles.

Why Data Verification Is Crucial for Web-Scraped Data

Unlike structured internal datasets, web data is messy, inconsistent, and constantly changing. This makes verification a critical component of any scraping workflow. Without it, organizations risk basing decisions on incomplete, outdated, or simply incorrect information:

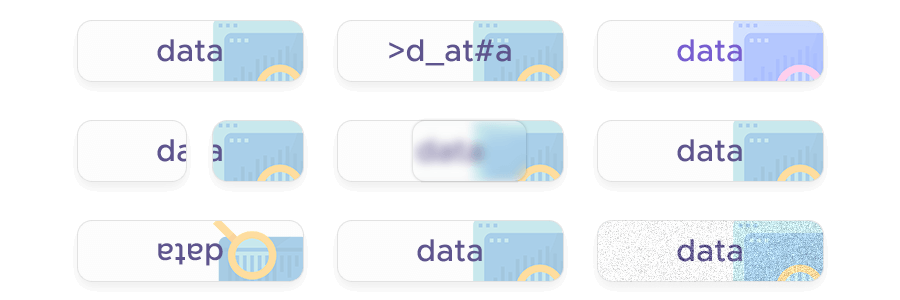

Websites Change Frequently

Websites update layouts, element IDs, and data structures with little or no warning. Even a minor change can break selectors or create misaligned fields. Verification helps detect:

- Missing or shifted fields

- Empty responses disguised as valid pages

- Incorrectly extracted values due to layout changes
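Layout changes often show up first as a drop in how many records in a batch have a given field filled. This sketch computes per-field fill rates and flags fields below an assumed 95% threshold; both the threshold and the field names are illustrative.

```python
def fill_rates(records, fields):
    """Fraction of records in the batch with a non-empty value for each field."""
    total = len(records)
    return {
        f: (sum(1 for r in records if r.get(f) not in (None, "")) / total if total else 0.0)
        for f in fields
    }

def broken_fields(records, fields, min_rate=0.95):
    """Fields whose fill rate fell below min_rate, a common sign of a broken selector."""
    return [f for f, rate in fill_rates(records, fields).items() if rate < min_rate]

batch = [{"title": "A", "price": "9.99"}, {"title": "B", "price": ""},
         {"title": "C"}, {"title": "D", "price": "4.50"}]
print(fill_rates(batch, ["title", "price"]))     # {'title': 1.0, 'price': 0.5}
print(broken_fields(batch, ["title", "price"]))  # ['price']
```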

Geolocation Affects the Returned Data

Many sites display different values depending on where the request originates – prices, availability, currency, inventory levels, or even entire product lists. Verification is essential for confirming that:

- Data from each region matches expected patterns

- Location-specific differences are intentional, not extraction errors

- Geotargeting is configured correctly
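A sketch of a geotargeting sanity check, assuming each record stores the hypothetical `region` it was requested from and the `currency` it displayed. The region-to-currency mapping is an assumption: some sites legitimately show other currencies, so mismatches are candidates for review rather than automatic errors.

```python
# Hypothetical mapping from request region to the currency we expect to see.
EXPECTED_CURRENCY = {"US": "USD", "DE": "EUR", "GB": "GBP", "JP": "JPY"}

def geo_mismatches(records, expected=EXPECTED_CURRENCY):
    """Return records whose displayed currency does not match their request region."""
    flagged = []
    for rec in records:
        want = expected.get(rec["region"])
        if want is None:
            continue  # unknown region: no rule to apply
        if rec.get("currency") != want:
            flagged.append(rec)
    return flagged

records = [
    {"sku": "A1", "region": "DE", "currency": "EUR", "price": 21.90},
    {"sku": "A1", "region": "GB", "currency": "USD", "price": 23.50},  # e.g. request exited in the wrong country
]
print([(r["sku"], r["region"]) for r in geo_mismatches(records)])  # [('A1', 'GB')]
```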

Anti-Bot Systems Introduce Hidden Failures

CAPTCHAs, IP blocks, throttling, and stealth bans can generate partial or misleading responses. Pages may load without key elements, or HTML may appear complete while containing no usable data. Verification identifies:

- Suspiciously small payloads

- Missing tables or product cards

- CAPTCHA or challenge pages returned as “success” (HTTP 200) responses
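These signals can be sketched as a simple response classifier. The challenge phrases, the 5,000-byte minimum, and the `product-card` marker are all assumptions that would need tuning per target site; a legitimate page that mentions "captcha" would be misclassified, so the result is a filter for review, not a verdict.

```python
# Assumed phrases; real challenge pages vary by vendor and language.
CHALLENGE_MARKERS = ("captcha", "verify you are human", "unusual traffic", "access denied")

def classify_response(status, html, min_bytes=5_000, content_marker='class="product-card"'):
    """Heuristically classify a scraped page before its data is accepted."""
    if status != 200:
        return "http_error"
    lowered = html.lower()
    if any(marker in lowered for marker in CHALLENGE_MARKERS):
        return "challenge_page"   # a block page served with a success status
    if len(html.encode("utf-8")) < min_bytes:
        return "too_small"        # likely truncated or an empty shell
    if content_marker not in html:
        return "missing_content"  # page loaded, but without the expected product cards
    return "ok"

page = "<html>" + '<div class="product-card">...</div>' * 200 + "</html>"
print(classify_response(200, page))  # ok
print(classify_response(200, "<html><body>Please verify you are human</body></html>"))  # challenge_page
```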

Multi-Source Aggregation Introduces Variability

When collecting data from multiple websites, differences in formatting, definitions, and content structure create inconsistencies. Verification ensures:

- Harmonized attributes across sources

- Standardized units and naming conventions

- Correct mapping to internal data models
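A sketch of harmonizing attributes across sources: rename source-specific field names to one internal name and convert weights to grams. The alias table and the accepted unit formats (for example `1.5 kg` or `250g`, with a dot as the decimal separator) are assumptions.

```python
import re

# Hypothetical alias table mapping each source's attribute names to the internal model.
FIELD_ALIASES = {"weight": "weight_g", "net_weight": "weight_g", "item weight": "weight_g"}
UNIT_TO_GRAMS = {"g": 1.0, "kg": 1000.0, "mg": 0.001, "lb": 453.59237, "oz": 28.349523125}
QUANTITY = re.compile(r"\s*(\d+(?:\.\d+)?)\s*([a-z]+)\s*")

def to_grams(text):
    """Parse strings like '1.5 kg' or '250g' into a float number of grams."""
    m = QUANTITY.fullmatch(text.lower())
    if m is None or m.group(2) not in UNIT_TO_GRAMS:
        raise ValueError(f"unrecognized weight: {text!r}")
    return float(m.group(1)) * UNIT_TO_GRAMS[m.group(2)]

def harmonize(record):
    """Rename aliased fields and normalize weights; other fields pass through unchanged."""
    out = {}
    for key, value in record.items():
        canonical = FIELD_ALIASES.get(key.lower(), key)
        out[canonical] = to_grams(value) if canonical == "weight_g" else value
    return out

print(harmonize({"sku": "A1", "Net_Weight": "1.5 kg"}))  # {'sku': 'A1', 'weight_g': 1500.0}
print(harmonize({"sku": "B2", "Item Weight": "250g"}))   # {'sku': 'B2', 'weight_g': 250.0}
```

Raising on unrecognized units, rather than guessing, routes unfamiliar formats to review instead of silently storing wrong values.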

Verification Protects Against Silent Data Drift

Data drift – slow, subtle changes in website behavior or page structure – can cause quality to degrade over time. Verification processes detect these shifts early, enabling fast fixes before problems scale.
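A sketch of drift detection that compares a current window of records with a baseline window on two signals: how often a field still yields a numeric value, and how far its median has moved. The thresholds and the `price` field are illustrative assumptions; in practice the baseline would typically be a rolling window of recent, already verified batches.

```python
from statistics import median

def detect_drift(baseline, current, field="price", max_shift=0.25, max_coverage_drop=0.3):
    """Compare a current window of records with a baseline window and return alerts."""
    def numeric(records):
        return [r[field] for r in records if isinstance(r.get(field), (int, float))]

    alerts = []
    base_vals, cur_vals = numeric(baseline), numeric(current)

    # Coverage: share of records that still yield a numeric value for the field.
    base_cov = len(base_vals) / len(baseline) if baseline else 0.0
    cur_cov = len(cur_vals) / len(current) if current else 0.0
    if base_cov - cur_cov > max_coverage_drop:
        alerts.append(f"{field} coverage fell from {base_cov:.0%} to {cur_cov:.0%}")

    # Level shift: relative change of the median value between the two windows.
    if base_vals and cur_vals:
        base_med, cur_med = median(base_vals), median(cur_vals)
        if base_med != 0 and abs(cur_med - base_med) / abs(base_med) > max_shift:
            alerts.append(f"median {field} moved from {base_med} to {cur_med}")
    return alerts

baseline = [{"price": p} for p in (10.0, 12.0, 11.0, 9.0)]
current = [{"price": 10.5}, {"price": "N/A"}, {"price": None}, {"price": 11.0}]
print(detect_drift(baseline, current))  # ['price coverage fell from 100% to 50%']
```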

Ready to Strengthen Your Data Quality?

Reliable verification starts with reliable data. Infatica’s Web Scraper API provides stable collection infrastructure, automated retries, JavaScript rendering, and global geotargeting – helping you gather cleaner, more consistent datasets from day one.

Build data you can trust – explore Infatica’s Web Scraper API today.