- What is Data Mining?

- Data Mining Steps

- Types of Data Mining Techniques

- Examples of Data Mining

- What Are the Business Benefits of Data Mining?

- Challenges of Implementation in Data Mining

- Data Mining Software and Tools

- How to Collect Data For Data Mining?

- How To Use Proxies For Data Mining?

- Frequently Asked Questions

Unlocking the potential of your data doesn’t have to be too complicated. In this article, we’ll delve into data mining, exploring its various techniques and applications. Discover how data mining works – and how businesses harness the power of data to generate valuable insights, make data-driven decisions, and stay ahead of the competition. From understanding the steps of a data mining pipeline to exploring the benefits and overcoming challenges, we’ll try to help you transform raw data into actionable intelligence.

What is Data Mining?

Data mining is the process of analyzing data to discover patterns, correlations, and insights using statistical, mathematical, and computational techniques. It involves extracting intelligence from raw data to uncover hidden patterns and establish relationships that can inform decision-making and provide a competitive advantage. Data mining draws on machine learning, artificial intelligence, database management, and data visualization to store and analyze data, and then transform it into valuable knowledge.

Data Mining Steps

1. Understand the Business

This initial step involves comprehensively understanding the business problem or project objectives that need to be addressed. It includes identifying the company situation: key stakeholders, their goals, and how data-driven insights can help achieve these goals. This step ensures that the data mining efforts are aligned with business needs and that the project has a clear direction and purpose.

2. Understand the Data

In this step, data mining professionals explore the data to understand its structure, content, and quality. This involves identifying the data sources, assessing their relevance to the business problem, and recognizing potential issues such as missing values or inconsistencies. This step helps determine whether the data at hand is sufficient and suitable for the intended analysis.

3. Prepare the Data

Data preparation is a critical step where raw data is cleaned, transformed, and formatted to make it suitable for modeling. This includes handling missing values, normalizing data, encoding categorical variables, and creating new features that may enhance the predictive power of the model. Proper data preparation ensures that the model receives high-quality input and doesn’t run into data quality issues.

4. Model the Data

During this step, data mining algorithms and techniques are applied to the prepared data to create a model. This involves selecting appropriate modeling techniques, training the models on the data, and testing them to optimize performance. The choice of model depends on the business problem and the characteristics of the data, such as its size, its type, and whether labeled examples are available.

5. Evaluate the Model

After modeling, the model is evaluated using various metrics and refined iteratively. This step assesses how well the model performs on unseen data and checks that it generalizes to new, real-world scenarios. Evaluation helps identify the most effective model and provides insights into areas where the model may need improvement.

6. Deploy the Solution

The final step involves the deployment of the chosen data mining model into a production environment where it can be used to make real-time or batch predictions. This includes integrating the model into existing systems, ensuring it can handle the required data volumes, and setting up monitoring processes to track its performance over time. Successful deployment turns the data mining process into a practical solution for better decision making.
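To make steps 3 through 5 more concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not a production pipeline: the churn records, the `prepare` and `predict` helpers, and the choice of a 1-nearest-neighbour model are all hypothetical, and in practice a library such as Scikit-learn would usually handle these steps.

```python
from statistics import mean

# Hypothetical records: (monthly_spend, support_tickets, churned). None = missing value.
records = [
    (20.0, 5.0, 1), (25.0, 4.0, 1), (None, 6.0, 1), (22.0, 5.0, 1),
    (80.0, 0.0, 0), (75.0, 1.0, 0), (90.0, None, 0), (85.0, 1.0, 0),
    (30.0, 4.0, 1), (70.0, 1.0, 0),
]

def prepare(rows):
    """Step 3: fill missing values with the column mean, then min-max scale to [0, 1]."""
    n_features = len(rows[0]) - 1
    columns = []
    for j in range(n_features):
        known = [r[j] for r in rows if r[j] is not None]
        fill = mean(known)
        col = [r[j] if r[j] is not None else fill for r in rows]
        lo, hi = min(col), max(col)
        columns.append([(v - lo) / (hi - lo) for v in col])
    features = list(zip(*columns))
    labels = [r[-1] for r in rows]
    return features, labels

def predict(train_x, train_y, point):
    """Step 4: 1-nearest-neighbour classifier using squared Euclidean distance."""
    dists = [sum((a - b) ** 2 for a, b in zip(x, point)) for x in train_x]
    return train_y[dists.index(min(dists))]

# For brevity the scaling statistics use all rows; a real pipeline would
# compute them on the training split only to avoid leaking test information.
features, labels = prepare(records)

# Step 5: hold out the last two records and measure accuracy on them.
train_x, test_x = features[:8], features[8:]
train_y, test_y = labels[:8], labels[8:]
predictions = [predict(train_x, train_y, p) for p in test_x]
accuracy = sum(p == t for p, t in zip(predictions, test_y)) / len(test_y)
print(predictions, accuracy)
```

With only ten records the accuracy figure means very little; the point is the shape of the workflow: clean the data, fit a model, and judge it on records it has not seen.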

Types of Data Mining Techniques

Association rule mining is used to discover interesting relationships or associations between variables in large datasets. It identifies frequent itemsets and generates rules that highlight how the occurrence of one item is associated with the occurrence of another. Commonly used in market basket analysis, it helps businesses understand product purchase patterns. For example, an association rule might reveal that customers who buy bread also often buy butter, which can inform product placement and promotional strategies.
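As a rough illustration of the bread-and-butter rule, the sketch below computes the three measures usually attached to an association rule: support, confidence, and lift. The transactions are made up, and the helper names are hypothetical; dedicated libraries implement full algorithms such as Apriori for large datasets.

```python
# Hypothetical shopping baskets, one set of items per transaction.
transactions = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "jam"},
    {"milk", "eggs"},
    {"bread", "butter", "eggs"},
]

def count(itemset, baskets):
    """Number of baskets that contain every item in the itemset."""
    return sum(itemset <= basket for basket in baskets)

def support(itemset, baskets):
    """Share of all baskets that contain the itemset."""
    return count(itemset, baskets) / len(baskets)

def confidence(antecedent, consequent, baskets):
    """Share of baskets with the antecedent that also contain the consequent."""
    return count(antecedent | consequent, baskets) / count(antecedent, baskets)

def lift(antecedent, consequent, baskets):
    """Confidence relative to how common the consequent is overall (>1 = positive association)."""
    return confidence(antecedent, consequent, baskets) / support(consequent, baskets)

rule_support = support({"bread", "butter"}, transactions)
rule_confidence = confidence({"bread"}, {"butter"}, transactions)
rule_lift = lift({"bread"}, {"butter"}, transactions)
print(rule_support, rule_confidence, rule_lift)
```

In this toy data, bread and butter appear together in 3 of 5 baskets, and 3 of the 4 baskets with bread also contain butter, so the rule "bread → butter" has a confidence of 0.75.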

Classification is a supervised learning technique used to assign data into predefined categories or classes based on input features. The process involves training a model on a labeled dataset, where the class labels are known, and then using this model to predict the class labels of new, unseen data. Techniques such as decision trees, random forests, and support vector machines are commonly used for classification tasks. In this area, data mining applications include spam email detection, medical diagnosis, and credit scoring.
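To show what training on labeled data looks like, here is a small sketch of a spam filter. It swaps in a multinomial naive Bayes classifier with add-one smoothing, which is not one of the techniques named above but is short enough to write by hand. The four training messages and the `classify` helper are hypothetical.

```python
import math
from collections import Counter

# Hypothetical labeled training data: (message, class label).
training = [
    ("win money now", "spam"),
    ("free money offer", "spam"),
    ("meeting schedule today", "ham"),
    ("project meeting notes", "ham"),
]

# Training: count words per class and messages per class.
word_counts = {"spam": Counter(), "ham": Counter()}
doc_counts = Counter()
for text, label in training:
    word_counts[label].update(text.split())
    doc_counts[label] += 1
vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])

def classify(text):
    """Return the class with the highest log-probability for the message."""
    scores = {}
    for label, counts in word_counts.items():
        total = sum(counts.values())
        # Start from the class prior, then add one term per known word.
        score = math.log(doc_counts[label] / len(training))
        for word in text.split():
            if word in vocabulary:  # words never seen in training are ignored
                # Add-one (Laplace) smoothing avoids log(0) for unseen pairs.
                score += math.log((counts[word] + 1) / (total + len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)

print(classify("free money"))     # expected to lean towards spam
print(classify("meeting today"))  # expected to lean towards ham
```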

Clustering is an unsupervised learning technique that groups data points into clusters based on their similarities, with the aim that data points within the same cluster are more similar to each other than to those in different clusters. This technique does not require labeled data. Common algorithms include k-means, hierarchical clustering, and DBSCAN. Clustering is used in customer segmentation, image analysis, and identifying patterns in genomic data.
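The following sketch shows the core loop of k-means on a handful of two-dimensional points. It is a simplified version under a few assumptions: the points are invented, the starting centroids are picked by hand rather than at random, and the loop runs a fixed number of iterations instead of checking for convergence.

```python
from statistics import mean

def kmeans(points, centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid and moving centroids."""
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            distances = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            clusters[distances.index(min(distances))].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        centroids = [
            tuple(mean(dim) for dim in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
    return centroids, clusters

# Hypothetical customers described by two already-scaled features.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
centroids, clusters = kmeans(points, centroids=[points[0], points[3]])
print(centroids)
print(clusters)
```

No labels are passed in at any point: the two groups emerge purely from the distances between points, which is what makes clustering an unsupervised technique.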

Regression analysis is used to predict a continuous numerical value based on input features. It models the relationship between a dependent variable and one or more independent variables. Linear regression and polynomial regression are popular techniques; logistic regression, despite its name, predicts class probabilities and is typically used for classification. Regression is widely used in forecasting, such as predicting sales data, stock prices, or economic indicators, and in evaluating relationships between variables.
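For a single input variable, linear regression has a closed-form least-squares solution, sketched below. The advertising and sales figures are invented for illustration, and `fit_line` is a hypothetical helper name.

```python
from statistics import mean

def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept with one input variable."""
    x_bar, y_bar = mean(xs), mean(ys)
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    slope = s_xy / s_xx
    intercept = y_bar - slope * x_bar
    return slope, intercept

# Hypothetical data: advertising spend (x) and resulting sales (y), same units.
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [2.9, 5.1, 7.0, 9.1, 10.9]

slope, intercept = fit_line(ad_spend, sales)
forecast = slope * 6.0 + intercept  # predicted sales for a spend of 6.0
print(slope, intercept, forecast)
```

The fitted slope says how much the dependent variable changes per unit of the independent variable, which is the "relationship between variables" that regression is used to evaluate.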

Sequence and path analysis focuses on identifying and analyzing sequences or patterns in data over time. It examines temporal data or event sequences to uncover trends, cycles, or other temporal structures. Sequence analysis is commonly applied in web usage mining to understand user navigation paths, in bioinformatics to analyze DNA sequences, and in finance to detect patterns in stock price movements.
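A very simple form of path analysis for web usage is counting which page-to-page transitions occur most often. The sketch below does this for a few hypothetical browsing sessions; real sequence mining algorithms also look at longer patterns and timing.

```python
from collections import Counter

# Hypothetical navigation paths, one list of visited pages per user session.
sessions = [
    ["home", "search", "product", "cart"],
    ["home", "product", "cart", "checkout"],
    ["home", "search", "product"],
    ["search", "product", "cart", "checkout"],
    ["home", "product", "cart"],
]

# Count every consecutive (from_page, to_page) pair across all sessions.
transitions = Counter()
for path in sessions:
    transitions.update(zip(path, path[1:]))

top_transition, top_count = transitions.most_common(1)[0]
print(top_transition, top_count)
```

Here the most frequent step is from a product page to the cart, which is the kind of finding that can guide where to place recommendations or simplify navigation.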

Neural networks are a set of algorithms inspired by the human brain that are designed to recognize patterns. They consist of layers of interconnected nodes (neurons) that process input data to identify patterns and make predictions. Deep learning uses neural networks with multiple hidden layers, which enable the model to learn intricate patterns and representations. Neural networks are used in a variety of applications, including image and speech recognition, natural language processing, and autonomous driving.

Examples of Data Mining

Shopping Market Analysis

Market basket analysis involves examining large volumes of retail transaction data to discover patterns and trends in customer purchasing behavior. Association rule mining can identify items that are frequently bought together, helping retailers optimize product placements and promotions. Clustering can segment customers into different groups based on their buying habits, enabling targeted marketing campaigns. This analysis helps retailers improve inventory management, enhance customer satisfaction, and increase sales.

Weather Forecasting Analysis

In weather forecasting, the data mining process is used to analyze vast amounts of historical data for the prediction of future weather conditions. Regression models can forecast temperature, precipitation, and other weather variables by identifying patterns in historical data. Clustering can categorize weather patterns, while sequence analysis can detect recurring trends and anomalies. These data mining techniques help meteorologists provide accurate forecasts, issue timely warnings for severe weather events, and aid in planning activities affected by weather conditions.

Stock Market Analysis

In stock market analysis, data mining techniques process massive datasets of historical stock prices, trading volumes, and financial indicators to predict future market trends and investment opportunities. Techniques like regression can forecast stock prices, while classification models can identify potential buy or sell signals. Clustering can segment stocks into different categories based on performance, and neural networks can analyze complex, non-linear relationships in the data. This analysis assists investors in making informed decisions and developing trading strategies.

Intrusion Detection

Intrusion detection is another data mining use case: intrusion detection systems (IDS) identify suspicious activity and potential security breaches in network traffic. Classification algorithms can distinguish between normal and malicious traffic by analyzing patterns and features of network packets. Anomaly detection techniques can identify unusual behaviors that may indicate an intrusion. By continuously monitoring network data, IDS can provide real-time alerts, helping organizations protect their systems from cyber threats and attacks.

Fraud Detection

Data mining is crucial in fraud detection, where it helps identify fraudulent activities in financial transactions, insurance claims, and other domains. Techniques such as classification can differentiate between legitimate and fraudulent transactions based on historical data. Anomaly detection can flag transactions that deviate from normal behavior, while association rule learning can uncover hidden relationships indicative of fraud. This helps organizations minimize financial losses, enhance security, and maintain customer trust.
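One simple way to flag values that deviate from normal behavior is a modified z-score based on the median, which is less distorted by the outliers themselves than a mean-based score. The sketch below is an assumption-laden illustration: the transaction amounts are made up, the 3.5 cutoff is a commonly used rule of thumb rather than a universal setting, and real fraud systems combine many more signals.

```python
from statistics import median

# Hypothetical transaction amounts for one customer.
amounts = [42.0, 38.5, 45.0, 40.0, 39.5, 41.0, 980.0, 43.5]

def modified_z_scores(values):
    """Score each value by its distance from the median, scaled by the
    median absolute deviation (MAD). Assumes the MAD is not zero."""
    center = median(values)
    mad = median([abs(v - center) for v in values])
    return [0.6745 * (v - center) / mad for v in values]

THRESHOLD = 3.5  # rule-of-thumb cutoff; tune for the data at hand
scores = modified_z_scores(amounts)
flagged = [v for v, z in zip(amounts, scores) if abs(z) > THRESHOLD]
print(flagged)
```

A flagged transaction is only a candidate for review: an unusually large purchase can be perfectly legitimate, so anomaly scores are usually combined with other checks.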

Surveillance

In surveillance, data mining analyzes data from various sources such as video feeds, social media, and sensors to detect suspicious activities and ensure public safety. Pattern recognition and classification techniques can identify and track objects or individuals, while anomaly detection can flag unusual behaviors. By integrating and analyzing large volumes of data in real time, surveillance systems can provide early warnings and support law enforcement efforts in preventing and responding to potential threats.

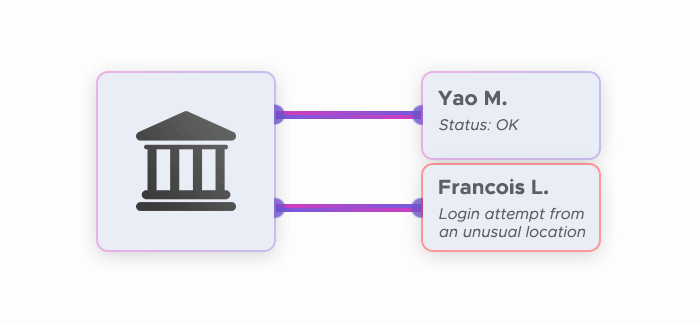

Banking

The data mining process in financial banking involves analyzing correlations, transactions, and patterns to improve banking services and decision-making. Techniques like customer segmentation through clustering can identify different customer groups for targeted marketing efforts. Predictive models using regression and classification can assess credit risk, detect fraudulent activities, and forecast financial performance. This enables banks to enhance customer experience, manage business challenges, and optimize their operations.

Academic

Nowadays, we have access to tremendous volumes of data. In its unstructured form, however, it is of little use. Data mining speeds up academic research and makes it more precise and reliable. Educators can use data mining to track and predict the performance of their students and identify who might need more help than others.

Insurance Companies

Using data mining, insurance agencies can manage risks, keep compliance under control, and detect fraud more easily. Such companies can also use data mining to study their customers and strengthen their position in the market.

Manufacturing

It’s difficult to forecast demand precisely, but data mining makes this process more accurate and straightforward, because structured information gives manufacturers the opportunity to analyze trends. Using this data, they can optimize their processes by aligning them with demand. With the help of data mining, they can also detect fraud more easily, strengthen their position in the market, and make sure they comply with all applicable regulations.

What Are the Business Benefits of Data Mining?

Generating business insights: Data mining can help uncover hidden patterns and correlations and predict trends within the target dataset that would be difficult to detect manually. These insights can reveal customer preferences, market trends, and operational inefficiencies, enabling businesses to gain a deeper understanding of their operations and environment. By leveraging these insights, companies can innovate, improve customer satisfaction, and maintain a competitive edge in their market.

Cost-effective analysis: Data mining provides a cost-effective means of analyzing vast amounts of data quickly and accurately. Automated data analysis reduces the need for manual data processing, saving time and resources. This efficiency allows businesses to allocate their budget and manpower more effectively, focusing on strategic initiatives rather than manual data processing, ultimately leading to improved productivity and profitability.

Data-driven decisions: With data mining, decisions are based on solid data rather than intuition or guesswork. By analyzing historical data and identifying patterns, businesses can make informed predictions about future trends and outcomes. This data-driven approach makes decisions more accurate, improves risk mitigation, and increases the likelihood of resolving problems, leading to better strategic planning and operational execution.

Flexible deployment: Data mining tools can run in a variety of environments, whether on-premises, in the cloud, or in hybrid setups, so businesses can choose the deployment model that best fits their needs. This adaptability means that data mining tools can be integrated with existing systems and scaled according to the organization's growth and changing requirements. Flexible deployment options make it easier for businesses to implement data mining solutions without significant disruption and to adapt to new challenges and opportunities as they arise.

Challenges of Implementation in Data Mining

Distributed Data

Managing and analyzing decentralized data that is distributed across different locations, systems, or platforms presents a significant challenge in data mining. Integrating and synchronizing disparate datasets requires robust distributed mining algorithms and can involve complex data transfer and storage solutions. Ensuring data consistency, accuracy, and real-time access while dealing with different data formats and sources complicates the process, impacting the efficiency and effectiveness of data mining activities.

Complex Data

Data mining often involves working with complex and unstructured data, such as text, images, video, and social media feeds. Processing and analyzing such data require advanced algorithms and computational power, which can be difficult to implement and manage. Due to the variety of data, there are also challenges in information extraction, pattern recognition, and model building, necessitating specialized techniques and tools to handle the intricacies involved.

Domain Knowledge

Effective data mining requires a deep understanding of the specific domain in which it is applied. Without domain expertise, interpreting the data mining results and translating them into actionable insights can be challenging. Domain knowledge helps in selecting the right data, choosing appropriate algorithms, and understanding the context of the patterns discovered, so that the analysis uses relevant data and is meaningful to the business objectives.

Data Visualization

Presenting the results of data mining in a clear and comprehensible manner is crucial for decision-making. Data visualization transforms complex data into intuitive graphical representations, but designing effective visualizations can be challenging. It requires balancing detail and interpretability, choosing the right type of chart or graph, and ensuring that the visualization accurately conveys the insights without overwhelming or misleading the audience.

Incomplete Data

Incomplete or missing data can significantly hinder the data mining process. Missing values, incomplete records, or inconsistencies can lead to biased or inaccurate analysis. Handling noisy data also requires sophisticated data mining techniques for data imputation, cleaning, and preprocessing to fill gaps and ensure that the resulting dataset is robust and reliable for analysis, which can be time-consuming and complex.

Security and Privacy

Ensuring the security and privacy of sensitive data is a major concern in data mining. Protecting data from unauthorized access, breaches, and privacy risks is essential to maintain trust and comply with regulatory requirements. Implementing robust security measures, such as encryption, access controls, and confidentiality policies, while balancing the need for data accessibility and usability, presents an ongoing challenge for organizations.

Higher Costs

Data mining can involve substantial costs related to software, hardware, and skilled personnel. Implementing advanced data mining solutions requires significant investment in technology and infrastructure, as well as continuous training and development of staff. Balancing the costs with the expected benefits and ensuring a return on investment is a critical challenge for businesses considering data mining initiatives.

Performance Issues

Processing and analyzing large datasets can lead to performance issues, such as slow processing times and high computational resource requirements. Optimization techniques and methods to handle big data analytics efficiently are essential to ensure timely insights and decisions. Performance bottlenecks can affect the scalability and responsiveness of data mining solutions, necessitating continuous monitoring and optimizations.

User Interface

Designing user-friendly interfaces for data mining tools is crucial for their adoption and effective use. A complex or unintuitive interface can keep users from fully leveraging the capabilities of data mining solutions. Creating interfaces that are accessible, avoid unnecessary design trends, and respect the needs of various users, from data miners to data analysts, is essential for seamless interaction and a good user experience.

Data Mining Software and Tools

Python with libraries (Pandas, Scikit-learn, TensorFlow, Keras): Python is a popular programming language for data science, equipped with numerous libraries for data mining and machine learning. Pandas is used for data manipulation and analysis, Scikit-learn for machine learning algorithms, and TensorFlow and Keras for deep learning models. These libraries make Python a powerful and flexible choice for building and deploying data mining solutions.

R and RStudio: R is a programming language and software environment for statistical analysis and graphics, widely used for data mining and machine learning tasks by numerous data scientists. RStudio is an integrated development environment (IDE) for R that provides a user-friendly interface for writing and executing R scripts. R and RStudio offer extensive libraries and packages for data analysis, making them highly versatile tools for data engineering.

Orange: An open-source data visualization and analysis tool for both novices and experts. It provides a visual programming interface for data mining and machine learning workflows. Orange is particularly well-suited for educational purposes and rapid prototyping, offering a wide range of widgets for data preprocessing, visualization, and modeling.

Microsoft Azure Machine Learning: A cloud-based service that provides a comprehensive environment for building, deploying, and managing machine learning models. It supports almost any data mining technique and integrates with other Azure services, enabling scalable and efficient data processing and analysis.

IBM SPSS Modeler: A data mining application from IBM. It provides a visual interface for building predictive models and performing advanced analytics. SPSS Modeler supports various data mining techniques and integrates well with IBM's other data products, making it a popular choice for data analytics and research.

How to Collect Data For Data Mining?

Web scraping serves as a powerful tool for data mining by providing access to vast amounts of online data that can be systematically extracted and analyzed to uncover valuable insights and support decision-making across various domains:

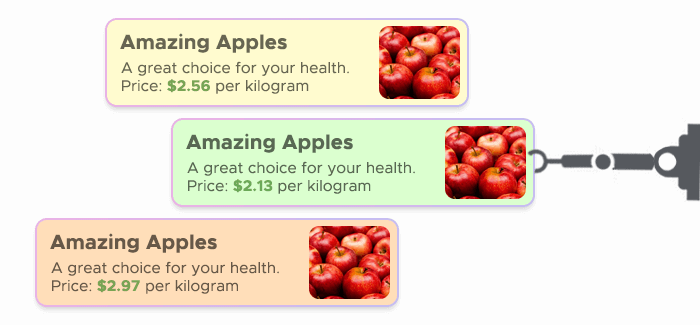

Market research: Businesses can use web scraping to gather information on competitors, industry trends, and consumers. By scraping information such as product offerings, pricing strategies, and customer feedback from competitor websites, companies can gain insights into market dynamics and identify opportunities for differentiation and competitive advantage.

Social media analysis: Web scraping can be employed to extract data from social media platforms, such as user posts, comments, likes, and shares. This data can be analyzed to understand public sentiment, track brand reputation, and identify emerging trends. For instance, scraping Twitter or Facebook for mentions of a brand or product can help businesses gauge customer opinions and reactions in real time.

Real estate analysis: Real estate companies can use web scraping to gather data on property listings, prices, locations, and amenities from real estate websites. This data can be mined to identify market trends, assess property values, and make informed investment decisions. For example, analyzing scraped data can help determine the best locations for new developments based on price trends and demand patterns.
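To give a feel for the extraction step, here is a minimal sketch that parses property listings out of HTML using only Python's standard library. Everything about the page is an assumption: the markup, the `listing`, `city`, and `price` class names, and the `ListingParser` helper are hypothetical, and the HTML is an inline sample rather than a page fetched from a real site.

```python
from html.parser import HTMLParser

# Inline sample standing in for a downloaded page; the structure is hypothetical.
SAMPLE_HTML = """
<ul>
  <li class="listing"><span class="city">Austin</span><span class="price">$450,000</span></li>
  <li class="listing"><span class="city">Denver</span><span class="price">$520,000</span></li>
</ul>
"""

class ListingParser(HTMLParser):
    """Collect the city and price text of every <li class="listing"> element."""

    def __init__(self):
        super().__init__()
        self.current_field = None  # which span we are currently inside, if any
        self.listings = []

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "listing":
            self.listings.append({})
        elif tag == "span" and css_class in ("city", "price"):
            self.current_field = css_class

    def handle_data(self, data):
        if self.current_field and self.listings:
            self.listings[-1][self.current_field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self.current_field = None

parser = ListingParser()
parser.feed(SAMPLE_HTML)

# Clean the raw strings into typed values that are ready for analysis.
listings = [
    {"city": item["city"], "price": int(item["price"].lstrip("$").replace(",", ""))}
    for item in parser.listings
]
print(listings)
```

Once the listings are in this structured form, they can feed directly into the techniques described earlier, for example a regression on prices or clustering by location.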

How To Use Proxies For Data Mining?

There are three kinds of proxies: residential, data center, and mobile. For data gathering, we need the first type. Residential proxies are real devices that you can route your traffic through. By doing so, you pick up the IP address of the proxy device, which masks your real one.

Then once you access the destination website, its servers will see the IP of a proxy, not your real one. And since residential proxies are real devices, you will appear as a resident of a certain location to a destination website — not as a person who is using proxies.

So if you apply a number of residential proxies to your scraper and set it up so that the bot changes IPs with every request, you can expect the data gathering to be smooth. The only thing to watch out for in this situation is the quality of proxies.
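The rotation idea can be sketched with Python's standard library as follows. The proxy addresses and credentials below are placeholders from a documentation IP range, not real endpoints, and `opener_for_next_proxy` is a hypothetical helper; the sketch builds the request openers but deliberately does not send any traffic.

```python
from itertools import cycle
import urllib.request

# Placeholder proxy endpoints (hypothetical); replace with the ones from your provider.
PROXIES = [
    "http://user:pass@198.51.100.10:8000",
    "http://user:pass@198.51.100.11:8000",
    "http://user:pass@198.51.100.12:8000",
]

# cycle() hands out the proxies in order and starts over after the last one.
proxy_pool = cycle(PROXIES)

def opener_for_next_proxy():
    """Return the next proxy in the rotation and an opener that routes through it."""
    proxy = next(proxy_pool)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return proxy, urllib.request.build_opener(handler)

# Each request would take a fresh opener, so consecutive requests use different IPs.
used = []
for _ in range(4):
    proxy, opener = opener_for_next_proxy()
    used.append(proxy)
    # With real proxies, the request itself would look like:
    # html = opener.open("https://example.com", timeout=10).read()

print(used)
```

A simple round-robin like this is only one possible policy; scrapers often also retire proxies that start returning errors and add delays between requests.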

You can find free residential proxies, but we advise against using them. It’s not easy to manage a network of proxies, let alone obtain residential IPs, so you can’t expect such a complex service to be both free and high-quality. Quite likely, once you get those free proxies, you will see that most of them are blocked already.

Infatica offers affordable residential proxies that won’t be a burden for your budget. You can choose the number of IPs and locations you need — our pricing plans are flexible enough to fit any needs. And if you feel like none of the options we offer truly meet your requirements, just contact us so that we create a custom pricing plan for you. Easy as that! Our proxies are always ready to work with as we expand the pool of IPs constantly and replace blocked ones quickly.