- What is data extraction?

- How does data extraction work?

- What is the purpose of extracting data?

- Data extraction example

- Types of data you can extract

- Data extraction methods

- Data extraction ETL

- Why do businesses need automated data extraction?

- How can different businesses leverage data extraction?

- Frequently Asked Questions

Data extraction is an important component of a web scraping pipeline: it saves professionals time by reducing manual work. So how do you set up this workflow efficiently? In this article, we’ll take a closer look at various data extraction tools and the two major types of data extraction. This way, you’ll be better equipped to choose between them in the long run.

What is data extraction?

Data extraction refers to the process of systematically pulling usable, targeted information from various sources, including structured databases and unstructured sources like logs, emails, social media, audio recordings, and more. The goal is to identify and pull out specific data elements that are relevant for analysis, reporting, or further processing. This process is essential for businesses to gain insights into customer behavior, market trends, operational efficiencies, and more.

Structured extraction typically involves querying databases using SQL or similar languages to retrieve information such as financial numbers, contact information, usage habits, and demographics. This type of data is organized into predefined formats, making it relatively straightforward to extract and analyze. On the other hand, unstructured data extraction requires more advanced techniques, such as natural language processing (NLP) and text data mining, to extract meaningful information from sources like social media posts, emails, and audio recordings. These sources contain valuable but less organized data that, when extracted and analyzed, can provide rich insights into customer sentiments, product feedback, and emerging trends.
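
To make the contrast concrete, here is a minimal sketch using only Python's standard library. The table, the sample rows, and the feedback text are made up for illustration, and the regular expression is only a simple stand-in for the NLP techniques mentioned above.

```python
import re
import sqlite3

# Structured source: an in-memory SQLite table with a fixed schema (hypothetical data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (name TEXT, email TEXT, country TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [("Ada", "ada@example.com", "UK"), ("Linus", "linus@example.com", "FI")],
)

# One declarative query is enough because every row follows the same format.
uk_emails = [
    row[0]
    for row in conn.execute("SELECT email FROM customers WHERE country = ?", ("UK",))
]
conn.close()

# Unstructured source: free text with no schema, so we search for patterns instead.
feedback = "Loved the product! Reach me at sam@example.org or call later. - Sam"
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
found_emails = EMAIL_RE.findall(feedback)

print(uk_emails)
print(found_emails)
```

The structured half needs no knowledge of what the text looks like, only of the schema. The unstructured half depends entirely on how well the pattern (or, in real projects, the language model) matches the way people actually write.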

How does data extraction work?

A data extraction pipeline is a series of processes that are used to collect data from various sources, transform it into a usable format, and load it into a destination system for analysis or further processing:

1. Choose data sources: structured sources (databases, spreadsheets, and other organized data formats) or unstructured sources (text files, web pages, social media posts, and other formats that do not follow a specific schema).

2. Extract data: Some options include pulling data directly from databases using SQL queries; extracting data from web pages using data extraction software like BeautifulSoup, Scrapy, or Selenium; using HTTP requests to get data from external APIs; and reading data from Excel spreadsheets, JSON, XML, or other file formats.

3. Transform data: Data transformation involves removing or correcting erroneous data, handling missing values, and filtering out irrelevant information – and converting data into the desired format, such as JSON, CSV, or a database table.

4. Load data: Use a combination of data warehouses (centralized repositories for storing large volumes of structured data), data lakes (storage systems that can handle large volumes of structured and unstructured data), databases (SQL or NoSQL databases that make the data available for querying and analysis), and business intelligence tools (e.g., Tableau, Power BI, or Looker for data visualization and analysis).
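
The four steps above can be sketched in a few lines of Python. This is a simplified illustration rather than a production pipeline: the JSON payload stands in for an API response, the field names are invented, and an in-memory SQLite database plays the role of the destination system.

```python
import json
import sqlite3

# Hypothetical API response body; in practice this would come from an HTTP request.
RAW_RESPONSE = """
[
  {"id": 1, "name": " Alice ", "email": "ALICE@EXAMPLE.COM", "spend": "120.50"},
  {"id": 2, "name": "Bob", "email": null, "spend": "80"},
  {"id": 3, "name": "Carol", "email": "carol@example.com", "spend": "n/a"}
]
"""


def extract(raw):
    """Extract: parse the raw JSON payload into Python dictionaries."""
    return json.loads(raw)


def transform(records):
    """Transform: drop records without an email, normalize text, fix types."""
    cleaned = []
    for rec in records:
        if not rec.get("email"):
            continue  # filter out records missing a required field
        try:
            spend = float(rec["spend"])
        except ValueError:
            spend = None  # assumption: store unparseable amounts as missing
        cleaned.append((rec["id"], rec["name"].strip(), rec["email"].lower(), spend))
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into a destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT, email TEXT, spend REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_RESPONSE)), conn)
loaded = conn.execute(
    "SELECT id, name, email, spend FROM customers ORDER BY id"
).fetchall()
print(loaded)
```

Keeping each stage in its own function makes it easy to swap the source (a web page instead of an API) or the destination (a data warehouse instead of SQLite) without touching the other stages.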

What is the purpose of extracting data?

The purpose of extracting data is to gather valuable information from large, hard-to-use data sources to enable informed decision-making and strategic planning. Organizations often deal with data stored in various difficult-to-parse formats and locations, such as databases, tables, APIs, web pages, and other files. By extracting this data, they can consolidate it into a central repository and then process and analyze it effectively. This process helps in identifying patterns, trends, and critical insights that are crucial for business intelligence, enhancing operational efficiency, and driving growth. One example is extracting customer data from multiple touchpoints: this gives a company a comprehensive, actionable view of customer behavior, preferences, and needs.

Additionally, data extraction supports compliance and reporting requirements by ensuring that accurate and up-to-date information is readily available. Many industries are subject to regulations that mandate regular reporting and audits. Extracting data systematically ensures that organizations can distill datasets and compile necessary reports and demonstrate compliance without significant manual effort. Furthermore, data extraction enables integration between various systems and applications, fostering better collaboration and data sharing across departments. This integration is vital for maintaining data consistency, reducing redundancy, and ensuring that all stakeholders can access data, thereby enhancing overall organizational effectiveness.

Data extraction example

Let's break down a sample scenario: the goal is to pull specific information (e.g., contact details or refined URLs) from hundreds of user-submitted PDFs and save the results to an output file (e.g., an Excel spreadsheet) using a data extraction pipeline.

1. Identify data sources: PDF files stored in a local directory, cloud storage, or received via email.

2. Extraction: We can use a PDF processing library or tool like PyPDF2, PDFMiner, or Adobe Acrobat API to read and extract data from PDFs – and write a script in Python (or another suitable programming language) to automate the process. Moreover, we can set up a cloud-based trigger (e.g., AWS Lambda or Google Cloud Functions) to run the script when new PDFs are added to the centralized location.

3. Transformation: We’ll need to ensure the extracted data is correctly formatted and free of errors (e.g., remove duplicate entries, validate email formats) and standardize the format of key fields (e.g., phone numbers, names).

4. Loading: We can use a library like Pandas in Python to load the cleaned and standardized data into a spreadsheet (e.g., Excel or Google Sheets). We can also implement logging within the script to track changes during the extraction and loading process and keep the spreadsheet up to date.
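
Below is a rough sketch of the transformation and loading steps for this scenario. It assumes a PDF library has already converted each file to plain text, so the `extracted_text` dictionary, the file names, and the patterns are all hypothetical. To stay dependency-free, it writes CSV (which Excel and Google Sheets can open) instead of using Pandas.

```python
import csv
import io
import logging
import re

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pdf_pipeline")

# Assumption: a PDF library has already turned each file into plain text.
# The file names and contents below are made up for illustration.
extracted_text = {
    "form_001.pdf": "Contact: jane.doe@example.com, phone +1 (555) 010-2030",
    "form_002.pdf": "Email JANE.DOE@example.com or call 555.010.9999",
    "form_003.pdf": "Reach us at not-an-email@ or 555 010 4040",
}

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{6,}\d")


def normalize_phone(raw):
    """Standardize a phone number by keeping digits only."""
    return re.sub(r"\D", "", raw)


rows, seen_emails = [], set()
for filename, text in extracted_text.items():
    emails = [e.lower() for e in EMAIL_RE.findall(text)]  # only valid-looking emails
    phones = [normalize_phone(p) for p in PHONE_RE.findall(text)]
    email = emails[0] if emails else ""
    if email in seen_emails:
        log.info("skipped duplicate contact in %s", filename)
        continue
    if email:
        seen_emails.add(email)
    rows.append(
        {"source": filename, "email": email, "phone": phones[0] if phones else ""}
    )
    log.info("captured contact from %s", filename)

# Write the cleaned rows as CSV; a real script would write to a file on disk.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["source", "email", "phone"])
writer.writeheader()
writer.writerows(rows)
print(buffer.getvalue())
```

Here the second file is dropped as a duplicate because its email matches the first one after lowercasing, and the third file keeps its phone number even though its email fails validation. Whether to keep or discard such partial records is a design decision that depends on how the spreadsheet will be used.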

Types of data you can extract

We’re generally working with two main types: structured data and unstructured data. Understanding these types is crucial for designing an effective information extraction pipeline.

| | Structured data | Unstructured data |
|---|---|---|
| Characteristics | Follows defined parameters or format, making it highly crawlable and easily searchable. | Does not follow a specific schema, making it more complex to process and analyze. |
| Examples | Databases, spreadsheets, CSV files, and XML files. | Text documents, emails, social media posts, images, videos, audio files, and web pages. |
| Searchability | Easily searchable using queries. For instance, SQL can be used to search and manipulate data stored in relational databases. | Requires additional processing such as text mining, natural language processing (NLP), and machine learning to extract meaningful information. |
| Data types | Commonly includes numerical data, dates, and text that adhere to a fixed structure (e.g., tables with rows and columns). | Includes a wide variety of content like text, multimedia, and loosely formatted information. |
| Example scenario | A company maintains a database with customer information, including names, email addresses, phone numbers, and purchase history. Extracting this data is straightforward because each record follows the same format and can be queried directly. | A company collects customer feedback through various channels such as social media, emails, and customer reviews. This feedback is in different formats and requires text analysis to identify key sentiments, common issues, and suggestions. |

Data extraction methods

Incremental extraction

Incremental data extraction involves pulling only the data that has changed or been added since the last extraction from the existing dataset. This method is efficient and minimizes the amount of data transferred, reducing processing time and resource usage. It is particularly useful for maintaining up-to-date datasets without reprocessing the entire dataset each time. For example, in a database, this might involve extracting records with a timestamp greater than the last extraction time. Incremental extraction is ideal for applications requiring frequent updates, such as data warehousing and real-time analytics, as it ensures data freshness while optimizing performance.
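
As a minimal sketch of the timestamp approach, the snippet below keeps a "watermark" (the latest timestamp seen so far) and asks the source only for newer rows. The `orders` table, its `updated_at` column, and the sample dates are assumptions made for illustration.

```python
import sqlite3

# Hypothetical source table with an `updated_at` column.
# ISO 8601 strings are used because they sort in chronological order.
source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL, updated_at TEXT)"
)
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, 19.99, "2024-05-01T09:00:00"),
        (2, 5.00, "2024-05-02T12:30:00"),
        (3, 42.10, "2024-05-03T08:15:00"),
    ],
)


def extract_incremental(conn, last_extracted_at):
    """Return rows changed after the watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, total, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_extracted_at,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else last_extracted_at
    return rows, new_watermark


# The previous run finished at the end of 1 May, so only later rows are pulled.
rows, watermark = extract_incremental(source, "2024-05-01T23:59:59")
print(rows)
print(watermark)

# Running again with the saved watermark returns nothing until the source changes.
rows_again, _ = extract_incremental(source, watermark)
print(rows_again)
```

In a real pipeline the watermark would be stored between runs (in a file or a metadata table), and the source needs a reliable "last modified" column for this approach to work. Note that rows deleted at the source are not detected by a timestamp filter.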

Full extraction

Full extraction entails retrieving the entire dataset from the source each time the extraction process is run. This method is straightforward and ensures that the extracted data is complete and consistent, but it can be resource-intensive and time-consuming, especially with large datasets. Full extraction is typically used when the dataset is relatively small, when changes are difficult to track, or during the initial load of a data warehouse. It ensures that no data is missed and can be useful for situations where data integrity and completeness are critical, but may not be suitable for frequent updates due to its higher overhead.
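
For comparison, here is a small sketch of a full extraction that replaces the target's contents on every run. The `products` table and its rows are hypothetical, and two in-memory SQLite databases stand in for the source and the destination.

```python
import sqlite3

# Hypothetical source system.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
source.executemany(
    "INSERT INTO products VALUES (?, ?)", [(1, "kettle"), (2, "toaster")]
)

# Hypothetical destination that still holds data from an earlier run.
target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
target.execute("INSERT INTO products VALUES (99, 'stale row from an earlier run')")
target.commit()


def full_extract(src, dst):
    """Copy every source row and replace the target's contents in one transaction."""
    rows = src.execute("SELECT id, name FROM products").fetchall()
    with dst:  # commits on success, rolls back if an exception is raised
        dst.execute("DELETE FROM products")
        dst.executemany("INSERT INTO products VALUES (?, ?)", rows)
    return len(rows)


copied = full_extract(source, target)
snapshot = target.execute("SELECT id, name FROM products ORDER BY id").fetchall()
print(copied, snapshot)
```

Because the target is rebuilt from scratch, stale or deleted rows disappear without any change tracking. The cost is that every run reads and writes the whole dataset, which is why this approach fits small tables and initial loads best.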

Data Extraction ETL

| Feature/Aspect | Data extraction with ETL (extract, transform, load) | Data extraction without ETL |
|---|---|---|
| Process complexity | Involves multiple stages: extraction, transformation, and loading. Often requires specialized ETL data extraction tools. | Typically simpler, focusing only on extracting data from sources. |
| Data transformation | Includes transformation processes to clean, format, and enrich data. | Limited or no transformation; raw data is extracted. |
| Data quality | High, due to cleaning and normalization during the transformation phase. | Variable, as data is not necessarily cleaned or normalized. |
| Performance | Can be optimized for large-scale operations, but may require significant resources. | Faster for small-scale operations, but may suffer with large volumes or complex queries. |
| Use cases | Ideal for data warehousing, business intelligence, and integrating disparate data sources. | Suitable for simple data collection, reporting, or applications where raw data is sufficient. |
| Data extraction software | Uses ETL data extraction tools like Talend, Informatica, Apache NiFi, Microsoft SSIS. | Can use basic open-source tools and scripts, like Python scripts, SQL queries, or simple APIs. |
| Data integration process | Facilitates integration of data from multiple sources into actionable data. | Generally extracts from single sources, integration is limited or manual. |
| Maintenance | Requires ongoing maintenance to handle changes in data sources, formats, and transformation rules. | Easier to maintain due to its simplicity, but may require manual adjustments for changes in data sources. |
| Initial setup | More complex and time-consuming to set up due to the need for defining transformation rules and loading processes. | Quicker and simpler to set up, focusing only on the extraction process. |
| Scalability | Highly scalable for complete data integration across multiple sources. | Limited scalability, better suited for smaller datasets or simple extraction tasks. |

Why Do Businesses Need Automated Data Extraction?

The more information you have, the better decisions you can make. By gathering relevant data, you can get insights that clarify the current situation and help forecast possible events. This allows business owners to make data-driven decisions and predict outcomes more accurately.

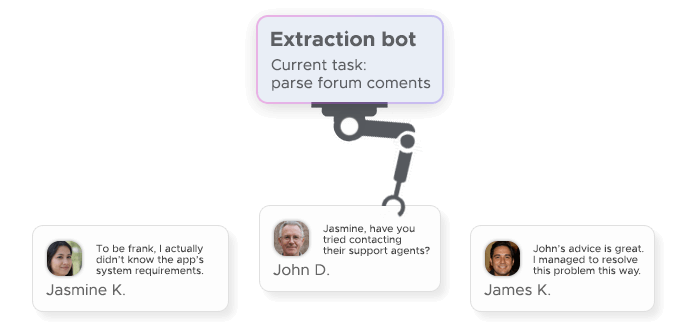

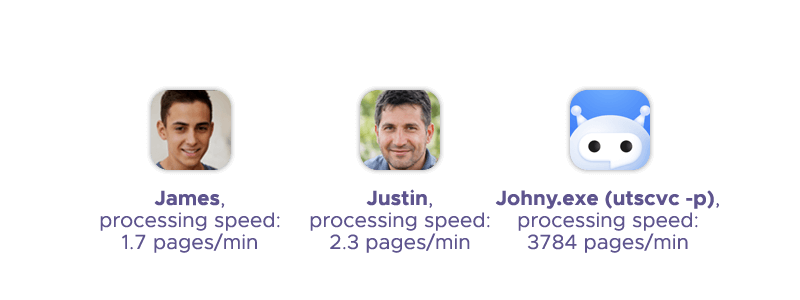

If you automate the data extraction process, you will receive information much faster. Manual work is also more expensive. Today, businesses have an alternative: scrapers. They can cut costs considerably while doing the job faster than people can. A properly configured scraper applies the same rules on every run, so it avoids the slips that creep into manual work. In the end, you receive more consistent, higher-quality insights.

Increased Accuracy

Increased accuracy in data extraction significantly enhances the reliability and quality of business insights. Accurate data extraction ensures that the information collected from various sources is precise and free from errors, which is crucial for making informed decisions. This precision helps businesses minimize the risks associated with incorrect data interpretations, such as flawed financial data, misguided marketing strategies, or poor customer service. By maintaining high accuracy in information extraction, companies can trust their data-driven processes, leading to more effective decision-making, optimized operations, and improved overall performance.

Reducing manual work

Reducing manual work through automated data extraction processes brings substantial efficiency and productivity benefits to businesses. Manual data extraction is often time-consuming and labor-intensive, requiring employees with technical backgrounds to spend countless hours on repetitive tasks such as reformatting data layouts, entering data from supplier invoices, or scanning documents for relevant details. By automating these processes, businesses can free up valuable employee time, allowing staff to focus on more strategic and creative tasks that require human expertise and decision-making. This shift not only enhances overall productivity but also boosts employee morale by reducing the tedium and potential for burnout associated with monotonous work.

Moreover, automation minimizes the risk of human error, which is a common issue in manual data entry and processing. Errors in data can lead to significant downstream issues, such as inaccurate reports, misguided business strategies, and regulatory compliance problems. By implementing automated extraction solutions, businesses can ensure that data is consistently accurate and reliable. This reliability enhances the quality of business insights and decision-making, ultimately leading to better outcomes and a competitive edge in the market. Additionally, the reduction in manual work can result in cost savings, as businesses can achieve more with fewer resources and potentially lower staffing costs for data-intensive tasks.

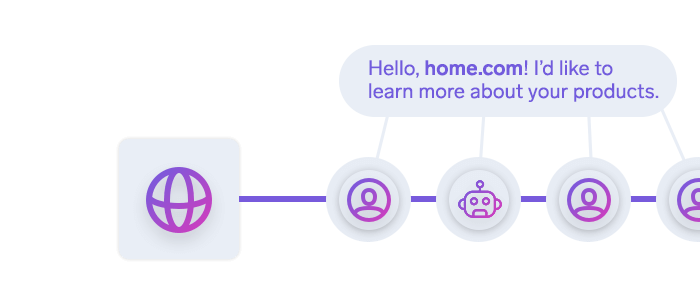

Connecting data from various sources

Connecting data from various sources and file types provides a comprehensive view of an organization’s operations, customers, and market environment. By integrating data from different departments, such as sales, marketing, finance, and customer service, businesses can break down silos and enable cross-functional data analysis. This holistic perspective allows for a deeper understanding of relationships and trends that might not be evident when data is isolated. For example, correlating sales data with customer feedback and marketing campaign performance can reveal customer insights and behaviors, helping businesses tailor their strategies to better meet market demands and enhance customer satisfaction.

Moreover, connecting data from various sources supports more informed and strategic decision-making. When executives and managers have access to a complete dataset, they can make decisions based on a full picture of the business landscape. This integration facilitates real-time analytics, predictive modeling, and more accurate forecasting. For instance, integrating supply chain data with sales forecasts can help optimize inventory management, reduce costs, and prevent stockouts or overstock situations. Additionally, the ability to quickly access and analyze important data from multiple sources empowers businesses to respond quickly to changing market conditions, identify new opportunities, and mitigate risks effectively. This interconnected approach to data enhances overall organizational agility and competitiveness.

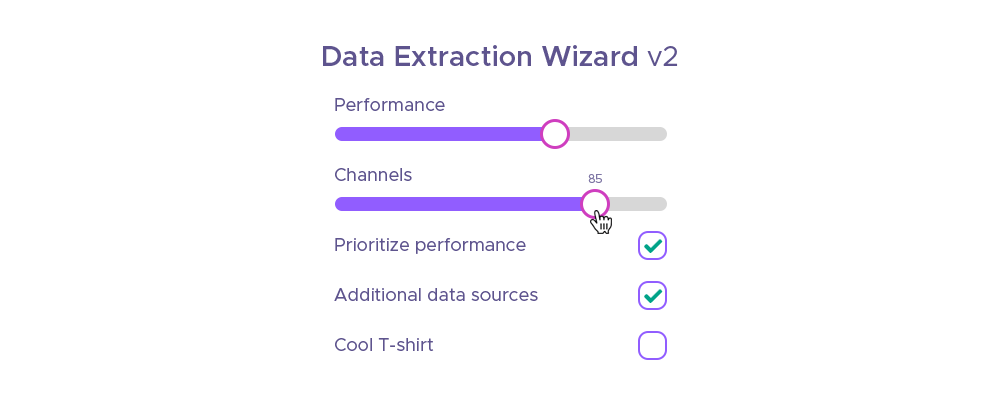

Scalability

Scalability in data extraction allows businesses to efficiently handle growing volumes of data without compromising performance or accuracy. As organizations expand and their data needs increase, scalable data extraction solutions ensure that systems can seamlessly accommodate this growth, whether it's due to higher transaction volumes, more data sources, or increased complexity in data processing. This adaptability prevents bottlenecks and ensures consistent data quality and speed of extraction, enabling businesses to continue leveraging data for decision-making and strategic planning. By implementing scalable data extraction processes, companies can future-proof their operations, ensuring they can meet evolving data demands and maintain competitive advantages as they grow.

Cost-effectiveness

Cost-effectiveness in data extraction stems from reduced manual effort, improved operational efficiency, and optimized resource utilization. By automating data extraction processes, businesses can lower labor costs associated with manual data entry and processing. Automated systems also minimize the risk of errors, which can lead to costly mistakes in decision-making or compliance. Furthermore, efficient extraction allows organizations to allocate resources more effectively, focusing investments on strategic initiatives rather than routine data management tasks. Additionally, scalable extraction solutions can grow with the organization's needs without requiring significant additional investment in infrastructure or personnel, thus maximizing return on investment (ROI) and overall cost-effectiveness.

Ensuring data security

Ensuring data security through robust extraction processes is crucial for protecting sensitive data from unauthorized access, breaches, or loss. Secure extraction frameworks implement encryption techniques and access controls to safeguard data both in transit and at rest. By centralizing data extraction through secure channels and adhering to industry standards and regulations (such as GDPR or HIPAA), businesses can mitigate risks associated with data breaches and maintain compliance. This not only protects the organization's reputation but also instills trust among customers, partners, and stakeholders, fostering long-term relationships and business continuity. Furthermore, secure extraction practices enable businesses to confidently leverage data for future analysis, decision-making, and innovation without compromising confidentiality, integrity, or availability.

How Can Different Businesses Leverage Data Extraction?

The use of scraping depends on the company’s goals and its industry, of course. Many firms come up with their own approaches and techniques, and it’s hard to mention each of them, so we will talk about the most widespread ones.

How e-commerce businesses use scraping

Prices rise all the time. But how does a seller keep their customers while raising prices? By tracking competitors’ prices. Using extraction, e-commerce businesses can follow price changes and strike the right balance to stay competitive. Also, when researching the prices of other retailers, a seller can detect certain patterns and get inspiration for a better pricing strategy.

Another use of scraping for e-commerce firms is studying products to determine which ones are popular among their target audience. With this research, a seller can offer buyers exactly what they want, thus increasing the revenue of the online store.

Finally, extraction eases the management of numerous distribution channels. It’s quite effortless to monitor all of them with scraping and detect if someone violates the rules of a manufacturer.

Data science and scraping

Data gathering lies at the core of data science. Specialists use scraping to supply machine learning models with the data they need to perform better. Data scientists also use scraping to study trends and predict future outcomes, offering businesses useful insights.

Data extraction for marketing

A good marketing strategy relies heavily on information. Marketing managers use scraping to monitor competitors’ activity and get useful insights and inspiration. They also gather data to track a brand’s search engine rankings and improve its SEO strategy. Scraping can also bring a business a lot of leads, since it’s quite easy to gather emails or other contact information of the target audience from the internet. Finally, extraction can give marketers a source of inspiration for the content they create for the brand they’re promoting.

Data extraction for finance

Investors and companies that work in finance love scraping, as it can bring them all the information they need. Using data extraction, it’s easy for them to follow the trends in different markets, stay aware of news and changes, and accelerate due diligence. The biggest part of an investor’s everyday job is studying different kinds of information. So why not let a bot do this job for you to save lots of time and effort? Then you can simply go through the most essential, structured information to make a well-informed, data-driven decision.

Data extraction for real estate

Real estate agencies can benefit greatly from data extraction as it will bring them all the information on available properties, details about them, prices, current needs of buyers, and much more. Using web scraping, real estate agents can follow the activity of their competitors to make the right move and close deals faster.

Conclusion

Information is valuable for all companies, and it’s hard and quite pointless to list each industry. The approaches we’ve mentioned are the most popular ones, and we hope they will inspire you to create your own practice. Infatica specialists are always ready to advise you on how to use scraping for your business: many of our customers use our proxies for scraping, so we have dealt with numerous different approaches. We will also help you choose a suitable plan for your business. Simply drop us a line and we will assist you.