Data aggregation plays a vital role in gaining meaningful insights. Whether it’s monitoring real estate listings, tracking social media sentiment, or compiling news from various sources, aggregation ensures that businesses and analysts can access organized, actionable data – and Infatica’s proxy solutions can supercharge your data aggregation pipeline. Let’s see how!

What is Data Aggregation?

Data aggregation involves collecting, cleaning, transforming, and combining data from multiple sources to create a unified and structured dataset. This can involve anything from compiling financial records from different institutions to scraping product listings from various e-commerce websites and merging them into a single database.

At its core, aggregation helps turn scattered, raw information into a more organized and usable format. It can be as simple as gathering weather reports from different cities into a single dashboard or as complex as compiling real-time stock market data from multiple exchanges to generate financial insights.

Data Aggregation Steps

Step 1: Data Collection (Scraping & Extraction)

The first step in data aggregation is gathering raw information from various sources. This often involves web scraping, where automated scripts extract data from websites, APIs, or other online platforms. The choice of scraping method depends on the structure of the target site – some websites allow direct API access, while others require HTML parsing.
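When a site offers no API, extraction means parsing its HTML. Below is a minimal sketch using Python's built-in `html.parser`. The `product-name` and `price` class names are hypothetical, because every site uses its own markup. Libraries such as BeautifulSoup or Scrapy provide more convenient selectors for the same job.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collects text from elements whose class is 'product-name' or 'price'.

    The class names are hypothetical; adapt them to the target site's markup.
    """

    def __init__(self):
        super().__init__()
        self.records = []
        self._field = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None.
        classes = (dict(attrs).get("class") or "").split()
        if "product-name" in classes:
            self._field = "name"
            self.records.append({})  # a product name starts a new record
        elif "price" in classes:
            self._field = "price"

    def handle_endtag(self, tag):
        self._field = None

    def handle_data(self, data):
        if self._field and self.records and data.strip():
            self.records[-1][self._field] = data.strip()


html = """
<div class="item"><h2 class="product-name">Desk Lamp</h2><span class="price">$24.99</span></div>
<div class="item"><h2 class="product-name">Office Chair</h2><span class="price">$129.00</span></div>
"""
parser = ProductParser()
parser.feed(html)
print(parser.records)
# [{'name': 'Desk Lamp', 'price': '$24.99'}, {'name': 'Office Chair', 'price': '$129.00'}]
```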

To avoid detection and bans, web scrapers often use proxies to rotate IP addresses, mimicking organic user behavior. Residential and datacenter proxies are commonly used for this purpose. Browser automation tools such as Puppeteer or Selenium drive real browsers, often in headless mode, and can help get past bot-detection mechanisms by simulating real user interactions. Scrapers must also handle CAPTCHAs, JavaScript-rendered content, and rate limits to keep data collection smooth and continuous.
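Here is a minimal sketch of round-robin IP rotation that uses only the standard library's `urllib`. Each call to `fetch` goes out through the next proxy in the pool. The proxy URLs and credentials are placeholders. Some providers also rotate IPs on their side behind a single gateway endpoint, and in that case one entry is enough.

```python
import itertools
import urllib.request

# Placeholder endpoints: substitute the gateway addresses and credentials
# issued by your proxy provider.
PROXIES = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
    "http://user:pass@proxy3.example.com:8000",
]

_proxy_cycle = itertools.cycle(PROXIES)


def next_opener():
    """Build a urllib opener that routes traffic through the next proxy in the pool."""
    proxy = next(_proxy_cycle)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    opener = urllib.request.build_opener(handler)
    # A browser-like User-Agent; Python's default one is easy to flag.
    opener.addheaders = [("User-Agent", "Mozilla/5.0 (X11; Linux x86_64)")]
    return opener, proxy


def fetch(url, timeout=10):
    """Fetch a URL, using a different proxy for each call (round-robin rotation)."""
    opener, _ = next_opener()
    with opener.open(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")
```

Round-robin is the simplest rotation policy. Random selection, or weighting proxies by recent success rate, are common alternatives.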

Step 2: Data Cleaning & Normalization

Once the raw data is extracted, it typically contains inconsistencies, missing values, or redundant entries. At this stage, data cleaning is performed to ensure accuracy and uniformity. Formatting discrepancies – such as different date formats, currency symbols, or unit measurements – are resolved so that all data follows a standardized structure.
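As an illustration, here is a minimal sketch that normalizes dates to ISO 8601 and splits prices into an amount plus an ISO 4217 currency code. The list of date formats, the day-first reading of `05/03/2024`, and the two-decimal-digit heuristic for spotting the decimal separator are all assumptions. Real data usually needs per-source rules.

```python
from datetime import datetime
from decimal import Decimal

# Date formats expected across sources (an assumption; day-first for slashes).
DATE_FORMATS = ["%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y"]

# Currency symbols mapped to ISO 4217 codes.
CURRENCY_SYMBOLS = {"$": "USD", "€": "EUR", "£": "GBP"}


def normalize_date(raw):
    """Return an ISO 8601 date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def normalize_price(raw):
    """Turn '€1.299,00' or '$1,299.00' into (Decimal amount, currency code or None)."""
    raw = raw.strip()
    currency = CURRENCY_SYMBOLS.get(raw[:1])
    digits = raw[1:] if currency else raw
    # Heuristic: if the last '.' or ',' is followed by exactly two digits, it is
    # the decimal mark; every other separator is a thousands separator.
    last = max(digits.rfind("."), digits.rfind(","))
    if last != -1 and len(digits) - last - 1 == 2:
        integer, fraction = digits[:last], digits[last + 1:]
    else:
        integer, fraction = digits, "00"
    integer = integer.replace(".", "").replace(",", "")
    return Decimal(f"{integer}.{fraction}"), currency


print(normalize_date("Mar 5, 2024"))   # 2024-03-05
print(normalize_price("€1.299,00"))    # (Decimal('1299.00'), 'EUR')
```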

Another critical part of this process is deduplication. Since data is often collected from multiple sources, there can be overlapping information, which must be identified and removed. Additionally, missing values may need to be addressed by imputing estimates, filling in gaps from other sources, or flagging incomplete records for review.
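A minimal sketch of deduplication with gap filling follows. It assumes every source exposes a shared identifier, here a hypothetical `sku` field. Records with the same SKU are merged, later duplicates only fill fields that are still empty, and rows that still miss required fields are flagged for review.

```python
REQUIRED_FIELDS = ("sku", "name", "price")


def is_missing(value):
    return value is None or (isinstance(value, str) and not value.strip())


def merge_records(records):
    """Deduplicate records by SKU, filling gaps from duplicates and flagging incomplete rows.

    Returns (merged, unkeyed): records without a SKU cannot be matched and are
    returned separately for manual review.
    """
    merged, unkeyed = {}, []
    for rec in records:
        sku = rec.get("sku")
        if is_missing(sku):
            unkeyed.append(rec)
            continue
        key = sku.strip().upper()  # normalize so 'ab-123' and 'AB-123 ' match
        target = merged.setdefault(key, {"sku": key})
        for field, value in rec.items():
            # The first non-empty value wins; later duplicates only fill gaps.
            if field != "sku" and not is_missing(value) and is_missing(target.get(field)):
                target[field] = value
    for rec in merged.values():
        rec["incomplete"] = any(is_missing(rec.get(f)) for f in REQUIRED_FIELDS)
    return list(merged.values()), unkeyed


records = [
    {"sku": "ab-123", "name": "Desk Lamp", "price": None, "source": "shop-a"},
    {"sku": "AB-123", "name": "Desk Lamp", "price": "24.99", "source": "shop-b"},
    {"sku": "cd-456", "name": "Office Chair", "source": "shop-a"},
    {"name": "Mystery Item", "price": "5.00", "source": "shop-b"},
]
merged, unkeyed = merge_records(records)
```

The "first non-empty value wins" rule is one possible policy. Preferring the most trusted or most recent source is an equally valid choice.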

Step 3: Data Transformation

Raw, unstructured data is often not immediately useful. Before it can be analyzed, it needs to be transformed into a structured format. This involves organizing extracted data into a common schema – whether it’s a relational database (SQL) format, a NoSQL document structure, or a simple CSV/JSON file.

If multiple sources are aggregated, their data must be aligned based on shared identifiers. For example, product listings scraped from different e-commerce sites may use different naming conventions for the same item, requiring mapping and standardization. At this stage, unnecessary data points can also be filtered out, ensuring that only relevant fields are retained.
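Mapping onto a common schema can be as simple as a per-source field map. In this sketch the source names, field names, and the use of a GTIN barcode as the shared identifier are all hypothetical. Unmapped fields, or fields mapped to `None`, are dropped, which covers the filtering step described above.

```python
# Per-source field mappings onto a common schema (hypothetical sources and fields).
FIELD_MAPS = {
    "shop_a": {"title": "name", "cost": "price", "ean": "gtin"},
    "shop_b": {"productName": "name", "priceAmount": "price", "barcode": "gtin", "sellerRating": None},
}

COMMON_SCHEMA = ("gtin", "name", "price", "source")


def to_common_schema(source, raw):
    """Rename a source's fields to the common schema and drop everything else."""
    mapping = FIELD_MAPS[source]
    row = {"source": source}
    for src_field, value in raw.items():
        target = mapping.get(src_field)
        if target:  # unmapped or explicitly None fields are filtered out
            row[target] = value
    return {field: row.get(field) for field in COMMON_SCHEMA}


def align_by_identifier(rows):
    """Group rows from different sources that share the same GTIN."""
    groups = {}
    for row in rows:
        groups.setdefault(row["gtin"], []).append(row)
    return groups


a = to_common_schema("shop_a", {"title": "Desk Lamp", "cost": 24.99, "ean": "4006381333931"})
b = to_common_schema("shop_b", {"productName": "LED Desk Lamp", "priceAmount": 22.50,
                                "barcode": "4006381333931", "sellerRating": 4.8})
groups = align_by_identifier([a, b])
```

When no shared identifier exists, matching usually falls back to fuzzy comparison of names and attributes, which is considerably harder to get right.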

Step 4: Data Storage

Once cleaned and structured, the data must be stored in a way that allows efficient access and retrieval. The choice of storage depends on the nature of the dataset. Structured data, such as customer records or financial transactions, often fits well into relational databases like MySQL or PostgreSQL. Unstructured or semi-structured data, such as scraped social media posts, may be better suited for NoSQL databases like MongoDB or Elasticsearch.

For large-scale aggregation projects, cloud storage solutions like AWS S3 or Google Cloud Storage provide scalable options for storing raw and processed data. Optimizations such as indexing and caching can significantly improve query performance, making retrieval faster when dealing with large datasets.
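Here is a minimal storage sketch. It uses Python's built-in `sqlite3` as a stand-in for a relational database such as MySQL or PostgreSQL. A composite primary key keeps one row per product per source, so a re-scrape replaces the old row instead of duplicating it. A secondary index supports queries that filter by source and date. The table layout is an assumption for illustration.

```python
import sqlite3

# In-memory SQLite keeps the sketch self-contained; use a file path, or a
# PostgreSQL/MySQL driver, for a real pipeline.
conn = sqlite3.connect(":memory:")
# The composite primary key allows one row per product per source.
conn.execute(
    """CREATE TABLE listings (
           gtin       TEXT NOT NULL,
           source     TEXT NOT NULL,
           name       TEXT,
           price      REAL,
           scraped_at TEXT,
           PRIMARY KEY (gtin, source)
       )"""
)
# Secondary index for queries that filter by source and date.
conn.execute("CREATE INDEX idx_listings_source_date ON listings (source, scraped_at)")

rows = [
    ("4006381333931", "shop_a", "Desk Lamp", 24.99, "2024-03-05"),
    ("4006381333931", "shop_b", "LED Desk Lamp", 22.50, "2024-03-05"),
    # A later re-scrape of the same listing replaces the earlier row.
    ("4006381333931", "shop_a", "Desk Lamp", 23.99, "2024-03-06"),
]
conn.executemany("INSERT OR REPLACE INTO listings VALUES (?, ?, ?, ?, ?)", rows)
conn.commit()

cheapest = conn.execute(
    "SELECT source, price FROM listings WHERE gtin = ? ORDER BY price LIMIT 1",
    ("4006381333931",),
).fetchone()
print(cheapest)  # ('shop_b', 22.5)
```

Replacing rows keeps only the latest snapshot. If you need price history, insert with `scraped_at` as part of the key instead.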

Step 5: Data Processing & Analysis

With all the data cleaned, structured, and stored, the next step is making sense of it. This can involve running analytical queries, generating reports, or detecting patterns in the data. Aggregation functions – such as calculating averages, identifying trends, or grouping data by category – help in transforming raw information into meaningful insights.
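The aggregation functions mentioned above boil down to grouping rows and summarizing each group. The plain-Python sketch below uses hypothetical listing data. In SQL, the same result would come from a `GROUP BY` with `COUNT`, `AVG`, `MIN`, and `MAX`.

```python
from collections import defaultdict
from statistics import mean

listings = [
    {"category": "lighting", "price": 24.99},
    {"category": "lighting", "price": 19.01},
    {"category": "furniture", "price": 129.00},
    {"category": "furniture", "price": 149.00},
    {"category": "furniture", "price": 99.00},
]


def summarize_by(rows, group_field, value_field):
    """Group rows by one field and compute count/avg/min/max of another."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_field]].append(row[value_field])
    return {
        key: {"count": len(vals), "avg": round(mean(vals), 2), "min": min(vals), "max": max(vals)}
        for key, vals in groups.items()
    }


summary = summarize_by(listings, "category", "price")
print(summary["furniture"])  # {'count': 3, 'avg': 125.67, 'min': 99.0, 'max': 149.0}
```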

Sometimes, datasets are enriched by cross-referencing them with external information. For example, scraped job postings might be combined with company profiles to provide deeper context about hiring trends. This stage also involves formatting the processed data into reports, visual summaries, or API-ready formats.
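Enrichment is essentially a join. The sketch below left-joins hypothetical job postings with company profiles on the company's domain, then counts postings per industry. Postings without a matching profile are kept and counted as `"unknown"` rather than dropped. The field names and the choice of domain as the join key are assumptions.

```python
from collections import Counter

postings = [
    {"title": "Data Engineer", "company_domain": "acme.example"},
    {"title": "ML Engineer", "company_domain": "acme.example"},
    {"title": "Analyst", "company_domain": "globex.example"},
    {"title": "Designer", "company_domain": "unknown.example"},
]
profiles = {
    "acme.example": {"industry": "Logistics", "employees": 1200},
    "globex.example": {"industry": "Energy", "employees": 350},
}


def enrich(postings, profiles):
    """Left-join postings with company profiles on the company's domain."""
    enriched = []
    for post in postings:
        profile = profiles.get(post["company_domain"], {})
        enriched.append({**post,
                         "industry": profile.get("industry"),
                         "employees": profile.get("employees")})
    return enriched


def hiring_by_industry(enriched):
    """Count postings per industry; unmatched companies count as 'unknown'."""
    return Counter(p["industry"] or "unknown" for p in enriched)


rows = enrich(postings, profiles)
print(hiring_by_industry(rows))
```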

Step 6: Data Delivery & Distribution

Finally, the aggregated data needs to be delivered in a usable format. Depending on the use case, this could mean serving data through an API, exporting structured files (CSV, JSON), or visualizing insights through dashboards and business intelligence tools. APIs allow real-time data access, enabling automated systems to fetch updates as needed. Alternatively, companies may offer periodic data dumps or reports that users can download and analyze.
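For file-based delivery, the standard library covers both formats mentioned above. This sketch writes records to CSV and wraps them in a JSON envelope with a timestamp and a record count. A JSON payload of this shape could also be what an API endpoint returns. The envelope fields are an assumption, not a standard.

```python
import csv
import io
import json

records = [
    {"gtin": "4006381333931", "source": "shop_a", "price": 23.99},
    {"gtin": "4006381333931", "source": "shop_b", "price": 22.5},
]


def to_csv(rows):
    """Serialize a list of dicts to CSV, using the first row's keys as the header."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def to_json(rows, generated_at):
    """Wrap rows in a small envelope with metadata for downstream consumers."""
    return json.dumps({"generated_at": generated_at, "count": len(rows), "data": rows}, indent=2)


csv_text = to_csv(records)
json_text = to_json(records, "2024-03-06T00:00:00Z")
```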

At this point, the aggregated dataset is ready to be used – whether for market analysis, competitive intelligence, or powering data-driven applications. The success of this entire process depends on maintaining data quality, ensuring efficient storage, and optimizing the delivery method based on user needs.

Why is Data Aggregation Important?

Data aggregation is crucial for businesses, researchers, and analysts because raw data is often fragmented, inconsistent, or incomplete. Without aggregation, valuable insights remain buried in disconnected data points, making it difficult to draw meaningful conclusions.

- Better decision-making: Businesses use aggregated data to identify trends, optimize strategies, and make data-driven decisions. For example, an e-commerce company might analyze pricing data from competitors to adjust its own pricing strategy.

- Market and competitive intelligence: Aggregated data helps companies monitor competitors, track industry trends, and adapt to changing market conditions. For instance, a web scraping-based aggregator can track product availability and price fluctuations across online retailers.

- Efficiency and automation: Rather than manually collecting and organizing data, aggregation automates the process, saving time and reducing human error. This is particularly useful for large-scale operations such as financial analytics, lead generation, or ad performance tracking.

- Enhanced data quality: By merging data from different sources, aggregation can fill gaps, correct inconsistencies, and provide a more complete picture of a given dataset. This is essential for industries like healthcare and finance, where accurate data is critical.

- Personalization and insights: Many modern applications, from recommendation engines to targeted advertising, rely on aggregated data to understand user behavior and preferences.

Picking the Right Data Aggregation Toolset

| Tool | Type | Features | Best For | Pricing Model |
|---|---|---|---|---|
| Apache NiFi | Open-source ETL | Real-time data ingestion, automation, UI-based workflow design | Enterprises needing scalable data pipelines | Free (Open Source) |
| Talend Data Integration | ETL & Aggregation | Drag-and-drop interface, cloud & on-premise support, big data compatibility | Enterprises handling structured & unstructured data | Freemium (Paid for full features) |
| Google BigQuery | Cloud Data Warehouse | SQL-based queries, real-time analytics, AI integration | Businesses needing fast cloud-based analytics | Pay-as-you-go |
| Microsoft Power BI | BI & Analytics | Data visualization, real-time dashboards, integration with Microsoft tools | Organizations requiring visual insights | Freemium (Paid for advanced features) |
| Web Scrapers (BeautifulSoup, Scrapy) | Web Scraping | HTML parsing, automation, API-based extraction | Developers & businesses collecting web data | Free (Open Source) |
| Mozenda | No-Code Web Scraping | Cloud-based, point-and-click scraping, structured data output | Non-technical users needing simple web scraping | Subscription-based |
| Supermetrics | Marketing Data Aggregation | Connects APIs from marketing platforms, automates reporting | Marketers and advertisers tracking performance | Subscription-based |

Challenges in Data Aggregation & How Infatica Helps

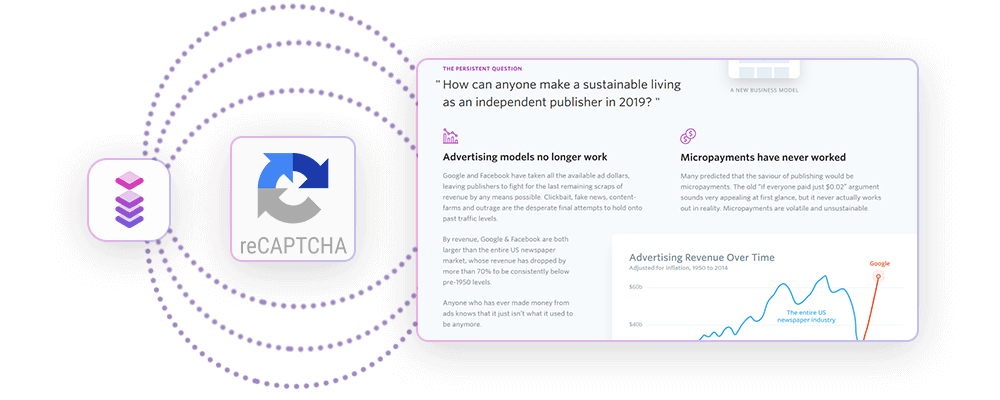

Website Restrictions & Anti-Bot Mechanisms

Many websites actively block automated data collection through CAPTCHAs, rate limiting, and fingerprinting techniques. This can prevent scrapers from consistently accessing and aggregating data. Here's how Infatica can help you:

- Rotating residential proxies provide a large pool of real residential IPs, helping scrapers mimic real user behavior and avoid detection.

- ISP and mobile proxies offer high trust scores, making them ideal for scraping sites that employ aggressive anti-bot measures.

- Automated IP rotation ensures that requests are routed through different IPs, reducing the risk of getting blocked.

Data Inconsistency Across Sources

When aggregating data from multiple websites, inconsistencies arise in formats, naming conventions, and data structures. For example, e-commerce platforms may display prices with different currencies, date formats, or unit measurements. Here's how Infatica can help you:

- Data normalization itself happens at the processing stage, but reliable access to diverse sources through Infatica's proxies lets aggregation scripts keep fetching fresh data to normalize.

- By preventing frequent blocks or incomplete scrapes, Infatica helps maintain data completeness, making it easier to standardize information.

Geolocation Barriers & Regional Data Variations

Some websites display different content depending on the visitor’s location, or block visitors from certain regions entirely (geo-blocking). This is especially problematic for aggregators monitoring prices, news, or the availability of region-specific services. Here's how Infatica can help you:

- Global proxy network allows scrapers to appear as local users in different countries, bypassing geo-restrictions.

- Thanks to IP targeting, users can choose specific geographic locations to ensure accurate, region-specific data collection.
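Country selection works differently from provider to provider. It is usually expressed through a gateway address or parameters in the proxy credentials, so check the provider's documentation for the exact format. The sketch below models the idea client-side with a hypothetical pool of proxies tagged by country. It fetches the same URL "from" each region so the results can be compared. The `fetch` function is injected, so any HTTP client can be plugged in.

```python
import random

# Hypothetical pool: each endpoint is tagged with the country of its exit IPs.
PROXY_POOL = [
    {"url": "http://user:pass@de1.proxy.example:8000", "country": "DE"},
    {"url": "http://user:pass@us1.proxy.example:8000", "country": "US"},
    {"url": "http://user:pass@us2.proxy.example:8000", "country": "US"},
    {"url": "http://user:pass@jp1.proxy.example:8000", "country": "JP"},
]


def proxies_for(country):
    """Return all proxy URLs whose exit IPs are in the given ISO country code."""
    matches = [p["url"] for p in PROXY_POOL if p["country"] == country.upper()]
    if not matches:
        raise LookupError(f"no proxies available for {country!r}")
    return matches


def collect_regional_prices(url, countries, fetch):
    """Fetch the same URL as seen from each country.

    `fetch(url, proxy)` is supplied by the caller (hypothetical interface).
    """
    return {c: fetch(url, random.choice(proxies_for(c))) for c in countries}
```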

High Traffic Volumes & Rate Limiting

Many websites impose rate limits on requests from the same IP address, slowing down data collection or blocking scrapers altogether. Here's how Infatica can help you:

- High-throughput datacenter proxies are ideal for bulk scraping operations where speed and stability are priorities.

- Session persistence lets you keep the same IP for a set duration, preventing frequent logouts or CAPTCHAs when scraping content behind a login.

- Load distribution spreads requests across multiple IPs so that no single address hits a rate limit.
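Sticky sessions and load distribution are provided on Infatica's side. The hypothetical `ProxyScheduler` below shows the same ideas client-side:

- Requests without a session key are spread round-robin across the pool.
- Requests with a session key reuse one proxy until `sticky_seconds` elapse.
- A per-proxy minimum interval keeps any single IP under a chosen request rate.

The clock is injectable so the behavior can be checked without real waiting.

```python
import itertools
import time


class ProxyScheduler:
    """Round-robin load distribution with sticky sessions and per-IP throttling."""

    def __init__(self, proxies, sticky_seconds=600, min_interval=1.0, clock=time.monotonic):
        self._cycle = itertools.cycle(proxies)
        self._sessions = {}   # session key -> (proxy, time assigned)
        self._last_used = {}  # proxy -> time of its last request
        self.sticky_seconds = sticky_seconds
        self.min_interval = min_interval
        self._clock = clock

    def pick(self, session=None):
        """Return a proxy: the session's sticky one if still valid, else the next in turn."""
        now = self._clock()
        if session is None:
            return next(self._cycle)
        proxy, assigned_at = self._sessions.get(session, (None, 0.0))
        if proxy is None or now - assigned_at >= self.sticky_seconds:
            proxy = next(self._cycle)  # new session, or the sticky period expired
            self._sessions[session] = (proxy, now)
        return proxy

    def wait_time(self, proxy):
        """Seconds to wait before `proxy` may send another request."""
        last = self._last_used.get(proxy)
        if last is None:
            return 0.0
        return max(0.0, self.min_interval - (self._clock() - last))

    def mark_used(self, proxy):
        """Record that a request was just sent through `proxy`."""
        self._last_used[proxy] = self._clock()
```

In a real loop, call `time.sleep(scheduler.wait_time(proxy))` before each request and `scheduler.mark_used(proxy)` right after it.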

CAPTCHAs & JavaScript-Rendered Content

Many sites use CAPTCHAs or rely heavily on JavaScript to load content dynamically, making scraping difficult. Here's how Infatica can help you:

- Proxies can be integrated with headless browsers (e.g., Puppeteer, Selenium) that execute JavaScript, so dynamically loaded content renders as it would for a real visitor. CAPTCHAs that still appear can be handled with automated solving services.

- Residential IPs with high trust scores look like regular users, so CAPTCHAs are triggered less often and scraping workflows see fewer disruptions.

Real-World Use Cases of Data Aggregation

Data aggregation is a critical process across numerous industries, enabling businesses to make data-driven decisions, enhance customer experiences, and optimize operations.

| Industry | Use Case | Example | Infatica's Impact |
|---|---|---|---|
| E-commerce & Retail | Price monitoring & competitive intelligence | Amazon tracks competitor prices to adjust dynamically | Rotating residential proxies prevent detection, IP rotation bypasses blocks |
| Finance & Trading | Financial data aggregation & stock market analysis | Bloomberg aggregates stock prices, news, and market sentiment | Low-latency datacenter proxies ensure real-time data access |
| Sales & Marketing | Lead generation & B2B data enrichment | A SaaS company scrapes LinkedIn to collect leads | Rotating proxies prevent bans, geo-targeting extracts region-specific data |
| Travel & Hospitality | Aggregating flight, hotel, and vacation data | Skyscanner compares airline ticket prices | Residential proxies bypass geo-restrictions, rotating IPs avoid detection |
| Journalism & Media | News aggregation | Google News aggregates articles from multiple publishers | Scraping-friendly proxies ensure uninterrupted access to news portals |
| Cybersecurity | Fraud detection & threat intelligence | Security firms monitor dark web activity for stolen credentials | Anonymous & residential proxies provide safe access to high-risk sources |
| Real Estate | Aggregating property listings & market trends | Zillow aggregates MLS data for real estate insights | Geo-targeted proxies help extract location-specific listings |
| Social Media & AI | Social media monitoring & sentiment analysis | Nike tracks Twitter & Instagram mentions to gauge product reception | Social media-compatible & mobile proxies ensure real-time data collection |