From startups to enterprise teams, APIs are essential for connecting systems, automating tasks, and – perhaps most crucially – accessing data. For businesses that rely on real-time insights, APIs offer a cleaner, faster alternative to traditional web scraping. Let’s look at how APIs work, walk through their different types, and show how they fit into the broader world of data collection – including how tools like proxies can help when APIs fall short.

What Does API Stand For?

API stands for Application Programming Interface. It might sound technical, but the concept is surprisingly simple: an API is a way for two software applications to communicate with each other using a predefined set of rules.

Think of it like ordering at a restaurant. You (the user) tell the waiter (the API) what you want from the kitchen (the system). The waiter takes your order, delivers it to the kitchen, and then brings your food back to you – all without you needing to know how to cook or what happens behind the scenes. That’s essentially how APIs work. In technical terms:

- The application is the software you’re interacting with.

- The interface is the set of rules that allows different software components to exchange information.

For example:

- When a weather app fetches current temperatures, it’s calling an API.

- When a business pulls product data from an e-commerce site, it might use that site’s public API.

- When developers automate data collection, they often rely on APIs for faster, structured access.

How Do APIs Work?

APIs work by defining a set of requests and responses that allow software systems to exchange information – without needing to know how the other system is built. They act as messengers that handle your request, talk to the system, and return a response.

The Basic Flow of an API Call

- Client sends a request to the API.

- The API processes the request and forwards it to the appropriate system.

- That system generates a response – usually in JSON or XML format.

- The API returns the response to the client.

For example, suppose you ask a weather API for the current conditions in Berlin. The API receives the request, checks the data source, and sends back:

    {
      "location": "Berlin",
      "temperature": "24°C",
      "condition": "Sunny"
    }
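On the client side, handling a response like this usually means parsing the JSON and reading the fields you need. The snippet below is a minimal sketch using only Python's standard library. It works on the sample response above as a hard-coded string rather than a live reply, and the helper name `summarize_weather` is made up for illustration.

```python
import json

# Sample response body from the text above (not fetched from a live service).
response_body = '{"location": "Berlin", "temperature": "24°C", "condition": "Sunny"}'

def summarize_weather(body: str) -> str:
    """Parse a JSON weather response and build a one-line summary."""
    data = json.loads(body)  # JSON text -> Python dict
    return f"{data['location']}: {data['temperature']}, {data['condition']}"

summary = summarize_weather(response_body)
print(summary)
```

Because the response is structured, each value is available by name and no text parsing is needed.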

Key API Components

- Endpoints – Specific URLs where data is accessed (e.g., /weather/berlin).

- HTTP Methods – Common ones include GET (to fetch data), POST (to send or update data), DELETE, and PUT.

- Headers – Include information like authentication keys.

- Query Parameters – Filters and options in the request (e.g., ?units=metric&lang=de).

- Rate Limits – Many APIs restrict how many requests you can send per minute or hour.
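To see how these components fit together, here is a rough sketch that assembles a request with Python's standard library without sending it. The host `https://api.example.com` is hypothetical, and the `Authorization: Bearer ...` header is only one common way to pass a key; a real API's documentation will say which header or parameter it expects.

```python
from urllib.parse import urlencode
from urllib.request import Request

BASE_URL = "https://api.example.com"                # hypothetical host
ENDPOINT = "/weather/berlin"                        # endpoint from the text
params = {"units": "metric", "lang": "de"}          # query parameters from the text
headers = {"Authorization": "Bearer YOUR_API_KEY"}  # placeholder credential (assumed scheme)

# Endpoint + query parameters form the full URL.
url = f"{BASE_URL}{ENDPOINT}?{urlencode(params)}"

# The Request object bundles URL, HTTP method, and headers.
request = Request(url, headers=headers, method="GET")

print(request.get_method())
print(request.full_url)
# To actually send it, pass the object to urllib.request.urlopen(request)
# once the URL points at a real API.
```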

Types of APIs

Not all APIs are created equal – different types serve different purposes and levels of access. Understanding these distinctions helps businesses and developers choose the right tools for the job, especially when building data pipelines or automation systems. Here are the most common types of APIs:

Open (or Public) APIs

These are available to anyone – often with just a quick sign-up and an API key. Companies use public APIs to increase adoption of their platforms or share useful data. Examples:

- OpenWeatherMap (weather data)

- CoinGecko (crypto data)

- Twitter/X public data (with limitations)

💡 Relevance: Public APIs are a great alternative to scraping – but they often include rate limits or return only partial data, prompting some businesses to supplement them with scraping solutions when they need more depth or frequency.

Partner APIs

These are shared only with specific business partners. They may offer deeper access to data or premium features but require approval or contractual agreements. Examples:

- Amazon or eBay partner APIs for vendors

- Financial APIs for fintech partners

💡 Relevance: Businesses often use proxies to simulate access across different regions or accounts when working with partner APIs that enforce restrictions.

Internal APIs

Used inside organizations to connect internal systems – e.g., linking a company’s inventory database to its sales dashboard. These APIs aren’t exposed to external users. Examples:

- HR tools integrating with internal databases

- Backend services sharing data within a company’s infrastructure

💡 Relevance: These don’t apply directly to scraping or public data access – but the same API concepts apply when businesses build their own scraping or data aggregation tools.

Composite APIs

These bundle multiple data sources or endpoints into a single request. They’re useful for complex workflows or improving efficiency. For example, an e-commerce dashboard API might fetch orders, customer info, and product stock in one request.

💡 Relevance: In web scraping or data aggregation, composite APIs are rare – but building something similar internally (with the help of proxies or scraping) can streamline large-scale data operations.
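The composite idea can be sketched in a few lines of Python. Everything here is hypothetical: the three `fetch_*` functions stand in for separate backend calls (or scrapers) and return canned data, and `dashboard` is the single entry point that combines them.

```python
from typing import Any, Dict, List

# Hypothetical stand-ins for three separate backend calls.
def fetch_orders(customer_id: int) -> List[Dict[str, Any]]:
    return [{"order_id": 1001, "sku": "A-1"}]

def fetch_customer(customer_id: int) -> Dict[str, Any]:
    return {"id": customer_id, "name": "Example Customer"}

def fetch_stock(sku: str) -> int:
    return {"A-1": 12}.get(sku, 0)

def dashboard(customer_id: int) -> Dict[str, Any]:
    """Composite call: gather several sources into one response."""
    orders = fetch_orders(customer_id)
    return {
        "customer": fetch_customer(customer_id),
        "orders": orders,
        # Look up stock for every SKU that appears in the orders.
        "stock": {o["sku"]: fetch_stock(o["sku"]) for o in orders},
    }

result = dashboard(42)
print(result)
```

The caller makes one request and gets one combined payload, while the fan-out to individual sources stays hidden behind `dashboard`.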

REST vs. SOAP APIs

These are two common approaches to building APIs: REST is an architectural style, while SOAP is a protocol.

- REST (Representational State Transfer) is the most common. It’s lightweight, fast, and typically uses JSON. Ideal for web and mobile apps – and scraping targets.

- SOAP (Simple Object Access Protocol) is heavier, uses XML, and is common in legacy enterprise systems.

💡 Relevance: Most public-facing web APIs are REST-based – and REST endpoints are often the first thing targeted by web scrapers or automated data collectors.
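The difference in weight shows up when you read the same value from each format. The sketch below is illustrative only: both payloads are hand-written, and the `GetWeatherResponse` element and the `http://example.com/weather` namespace are made up for the example (the SOAP 1.1 envelope namespace is the standard one).

```python
import json
import xml.etree.ElementTree as ET

# REST-style response: compact JSON.
rest_body = '{"location": "Berlin", "temperature": "24°C"}'

# SOAP-style response: the same data wrapped in an XML envelope.
soap_body = """<soap:Envelope xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">
  <soap:Body>
    <GetWeatherResponse xmlns="http://example.com/weather">
      <location>Berlin</location>
      <temperature>24°C</temperature>
    </GetWeatherResponse>
  </soap:Body>
</soap:Envelope>"""

rest_location = json.loads(rest_body)["location"]

# XML lookups need the namespaces spelled out.
ns = {
    "soap": "http://schemas.xmlsoap.org/soap/envelope/",
    "w": "http://example.com/weather",  # hypothetical service namespace
}
root = ET.fromstring(soap_body)
soap_location = root.find("soap:Body/w:GetWeatherResponse/w:location", ns).text

print(rest_location, soap_location)
```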

Choosing the Right API (or Alternative)

When APIs are available and reliable, they’re usually the best option. But when they’re restricted, incomplete, or missing altogether, businesses turn to web scraping to access the same data from a site’s front end.

That’s where Infatica’s tools come in: Whether you’re collecting data through a REST API or scraping it directly from a website, our proxy infrastructure helps ensure access, scalability, and reliability – even at high volumes.

Common Use Cases for APIs

APIs are everywhere – quietly powering countless tools, apps, and services we use every day. Whether you're ordering a taxi, checking exchange rates, or automating a report, there's likely an API making it happen behind the scenes.

Automation & Workflow Integration

APIs connect services and automate repetitive tasks, eliminating manual work and enabling real-time data flow – which is essential for modern, agile teams. Businesses often use them to streamline operations across platforms. Examples:

- Syncing data between CRMs and email tools

- Automatically generating reports from analytics platforms

- Triggering workflows via services like Zapier or Make

App & Software Development

Developers use APIs to add functionality without building everything from scratch – saving time and resources. APIs also enable rich user experiences and help products scale faster by connecting to existing infrastructure. Examples:

- Integrating maps via Google Maps API

- Embedding payment systems like Stripe or PayPal

- Adding weather, news, or social feeds into apps

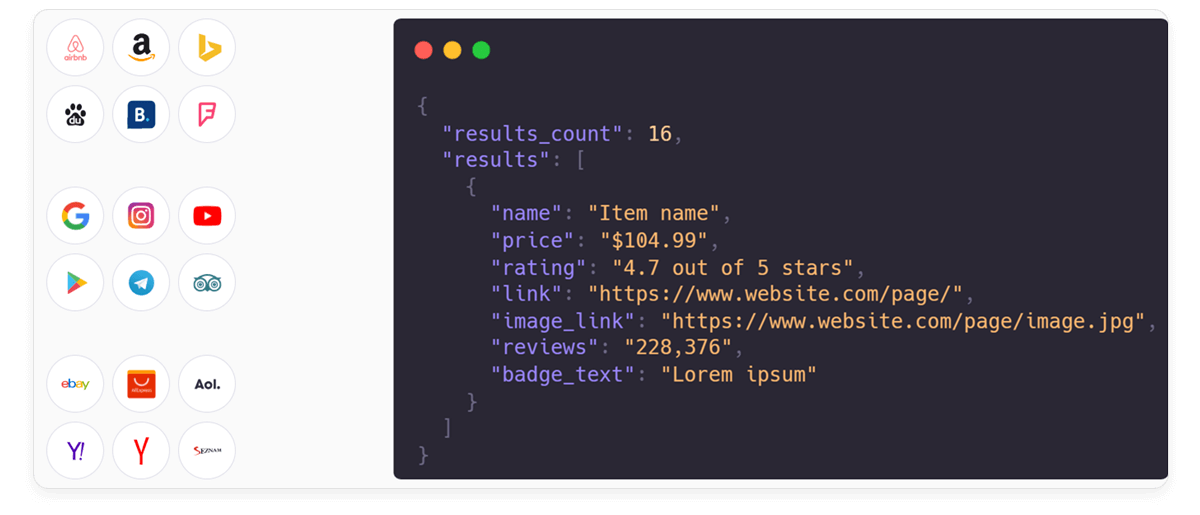

Data Collection & Market Intelligence

APIs are often the primary gateway to real-time or large-scale data collection – especially for businesses doing competitive analysis, price monitoring, or research. Examples:

- Monitoring product prices from e-commerce platforms

- Collecting SEO or ad performance data

- Aggregating financial data from public sources

This is where APIs and web scraping often intersect. When a site’s API is limited, outdated, or paywalled, scraping the website itself becomes the only option – and that's where proxy solutions like Infatica’s can bridge the gap.

Public Data Access & Open Government Initiatives

Many government bodies and public institutions provide open APIs to promote transparency and innovation. These APIs are often free but can come with rate limits or availability issues – making reliable, distributed access via proxies a useful fallback. Examples:

- COVID-19 case tracking APIs

- Public transport schedules

- Environmental and weather data

AI, Machine Learning & Big Data

Developers use APIs to access training datasets, real-time inputs, or integrate third-party AI tools. These applications require high-volume, uninterrupted access to structured data, which can be limited by API usage caps or IP restrictions – another strong use case for residential or mobile proxies. Examples:

- Sentiment analysis APIs

- Image and text recognition

- Streaming data from news or social media feeds

APIs and Web Scraping: What’s the Connection?

| Feature | APIs | Web Scraping |
|---|---|---|
| Data Format | Structured (JSON, XML) | Unstructured by default (HTML, text) |
| Reliability | More stable and consistent | Can break if website layout changes |
| Speed | Faster, optimized for data retrieval | Slower, requires parsing full web pages |
| Access Limitations | Often rate-limited, may require authentication | Limited only by IP bans and CAPTCHA |
| Data Availability | Only what API exposes | Can extract all visible data on web pages |
| Legal & Ethical Issues | Usually authorized and documented | May violate site terms if not careful |
| Use Cases | Integration, automation, app development | Competitive intelligence, data mining |
| Need for Proxies | Helpful to avoid rate limits and geo-blocks | Essential to avoid IP bans and CAPTCHAs |
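The "Data Format" and "Reliability" rows are easiest to appreciate side by side. In this sketch the JSON string and the HTML snippet are both invented for illustration, and `PriceParser` is a deliberately small example class rather than a production scraper.

```python
import json
from html.parser import HTMLParser

# Invented sample data: the same price as an API response and as page markup.
api_response = '{"product": "Widget", "price": 19.99}'
html_page = '<html><body><h1>Widget</h1><span class="price">$19.99</span></body></html>'

# API: the structure is already there, so one lookup is enough.
api_price = json.loads(api_response)["price"]

class PriceParser(HTMLParser):
    """Collect the text of the first element whose class is 'price'."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.price_text = None

    def handle_starttag(self, tag, attrs):
        if dict(attrs).get("class") == "price":
            self._in_price = True

    def handle_data(self, data):
        if self._in_price and self.price_text is None:
            self.price_text = data

    def handle_endtag(self, tag):
        self._in_price = False

# Scraping: walk the markup, find the right element, then clean the text.
parser = PriceParser()
parser.feed(html_page)
scraped_price = float(parser.price_text.lstrip("$"))

print(api_price, scraped_price)
```

Both paths arrive at the same number, but the scraper depends on the `class="price"` attribute staying put, which is why a layout change can break it.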

Challenges When Using APIs

APIs are often seen as the gold standard for accessing data – and for good reason. They’re structured, efficient, and built for developers. But in practice, working with APIs comes with its own set of challenges, especially when you’re dealing with large-scale or competitive data collection.

Rate Limits

Most APIs restrict how many requests you can send per minute, hour, or day. Once you hit the limit, you’ll need to wait or risk getting blocked. For example, a pricing API might only allow 100 requests/hour – not ideal if you’re tracking thousands of products in real time.
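To put numbers on it: at 100 requests per hour, a single pass over 5,000 products (an illustrative figure) would take 50 hours. When you do hit a limit, the "wait" option is usually implemented as retry with exponential backoff. The sketch below assumes the caller's request function raises a custom `RateLimited` exception when the API answers with HTTP 429 (Too Many Requests); that exception and the `fake_send` demo function are hypothetical.

```python
import time

class RateLimited(Exception):
    """Raised by the caller's request function when the API answers HTTP 429."""

def call_with_backoff(send, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call send(); on RateLimited, wait base_delay * 2**attempt and retry."""
    for attempt in range(max_attempts):
        try:
            return send()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller decide what to do
            sleep(base_delay * 2 ** attempt)

# Demo with a fake request that is rejected twice, then succeeds.
attempts = {"count": 0}

def fake_send():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RateLimited()
    return {"status": "ok"}

waits = []  # record the delays instead of really sleeping
result = call_with_backoff(fake_send, sleep=waits.append)
print(result, waits)
```

Passing `sleep` as a parameter keeps the demo instant; in real use the default `time.sleep` applies the delays.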

✅ Workaround: Use rotating proxies to distribute requests across multiple IPs. This helps avoid hitting rate limits that are enforced per IP address; limits tied to an API key or account still apply.
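A simple form of rotation is round-robin: each request goes out through the next proxy in a list. This is a sketch under stated assumptions, not a description of any provider's setup. The proxy addresses are placeholders from a documentation-only IP range, and `next_opener` is a made-up helper name.

```python
from itertools import cycle
from urllib.request import ProxyHandler, build_opener

# Placeholder proxy addresses; replace with the ones your provider gives you.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]
proxy_pool = cycle(PROXIES)  # endless round-robin iterator

def next_opener():
    """Return (proxy, opener) where the opener routes traffic via the next proxy."""
    proxy = next(proxy_pool)
    opener = build_opener(ProxyHandler({"http": proxy, "https": proxy}))
    return proxy, opener

# Four consecutive picks wrap around to the first proxy again.
used = [next_opener()[0] for _ in range(4)]
print(used)
# To send a request: opener.open(url) with a real API URL.
```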

Geo-Restrictions

Some APIs only allow access from certain regions or serve different content based on your location. For example, a flight API might display different fares to users in the U.S. vs. Europe.

✅ Workaround: Use geo-targeted residential or mobile proxies to access APIs as if you're in a specific country or region.

Authentication Complexity

Many APIs require API keys, OAuth tokens, or multi-step authentication – which can be difficult to manage at scale or across multiple accounts. For example, some social platforms restrict access unless you’ve registered as a verified partner, adding legal and technical overhead.

✅ Workaround: When API access isn’t feasible, scraping public frontend data via proxy-backed crawlers can offer a legal and effective alternative – especially if the data is publicly visible.

Incomplete or Missing Data

Some APIs intentionally limit access to critical information or provide only partial datasets unless you upgrade to a paid tier or become a business partner. For example, a retail API might only show high-level product info, omitting pricing history or seller details.

✅ Workaround: Many businesses use scraping to supplement API data – extracting what’s visible on the site but missing in the API.

IP Blocking and Detection

High-frequency API access from the same IP can trigger firewalls, bot detection systems, or even CAPTCHAs – especially with sensitive or competitive data. For example, you may get blocked or blacklisted after just a few hundred API calls from one server.

✅ Workaround: Infatica’s rotating proxy infrastructure helps prevent detection by distributing traffic across real residential and mobile IPs – keeping your operations smooth and ban-free.

Downtime and Stability

APIs aren’t always stable – they may go offline, return errors, or change without notice. For example, a public API might change its response format overnight, breaking your data pipeline.

✅ Workaround: Having a scraper fallback ready – or combining API + scraping – ensures your data access stays uninterrupted.
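One way to wire up such a fallback is to validate every API response and switch to the scraper when the call fails or the format looks wrong. In this sketch the required field names come from the weather example earlier in the article, and `get_data` plus the three demo functions are hypothetical.

```python
REQUIRED_FIELDS = {"location", "temperature", "condition"}

def get_data(fetch_api, fetch_scraper):
    """Prefer the API; fall back to the scraper on errors or format changes."""
    try:
        data = fetch_api()
        if not REQUIRED_FIELDS.issubset(data):
            raise ValueError("unexpected response format")
        return data, "api"
    except (OSError, ValueError, KeyError, TypeError):
        # OSError covers network failures such as ConnectionError.
        return fetch_scraper(), "scraper"

GOOD = {"location": "Berlin", "temperature": "24°C", "condition": "Sunny"}

def healthy_api():
    return dict(GOOD)

def changed_api():
    return {"loc": "Berlin", "temp": "24°C"}  # field names changed overnight

def down_api():
    raise ConnectionError("API unreachable")

def scraper():
    return dict(GOOD)

for api in (healthy_api, changed_api, down_api):
    data, source = get_data(api, scraper)
    print(api.__name__, "->", source)
```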

How Infatica Helps

Infatica’s proxy solutions are built with developers and data engineers in mind:

- Global proxy pool spanning 195+ countries

- Easy integration with API clients, scripts, and scraping tools

- Real-time rotation and session control

- Dedicated support for high-frequency and enterprise-grade data access

Whether you’re accessing a public API, building a scraper fallback, or scaling a data pipeline, Infatica helps you unlock reliable, compliant, and efficient access to the data that drives your business.