- What Is Data Parsing?

- What Data Can You Parse?

- How Can a Data Parser Improve Your Business Processes?

- Common Data Formats

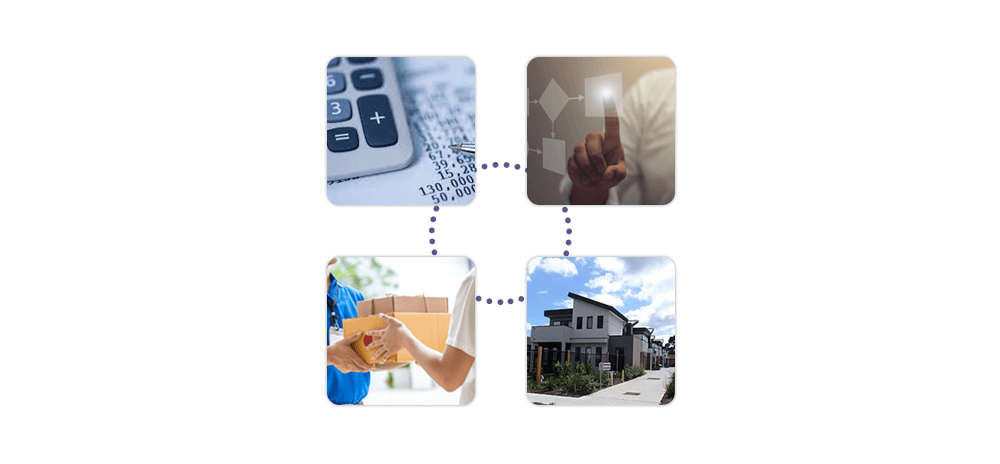

- Business Use Cases of Data Parsing

- Challenges in Data Parsing

- Tools and Technologies for Data Parsing

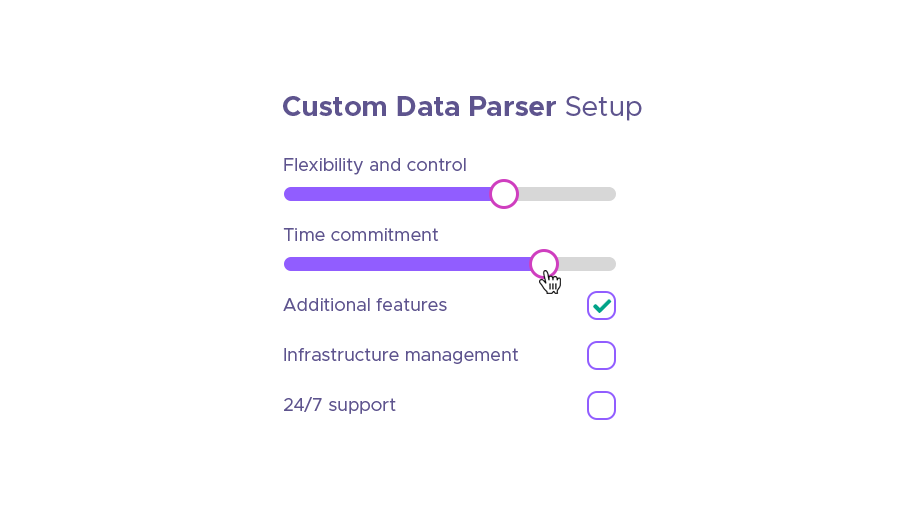

- Buy Or Build a Data Parser?

- How to Do Data Scraping With Infatica

- Frequently Asked Questions

Efficient data parsing is essential for businesses that want to unlock valuable insights from raw data. This article explores the fundamentals of how a data parser works, including the stages of the data parsing process, the types of data you can parse, and its benefits. We will delve into the advantages of data parsing for businesses, such as time and cost savings, improved data quality, and enhanced data analysis capabilities. Additionally, we will discuss common data formats, typical challenges in data parsing, and the pros and cons of building your own data parser versus buying a third-party one. Finally, we will highlight some essential data parsing tools and explain how services like Infatica can facilitate effective data scraping.

What is data parsing?

Data parsing is the process of analyzing a string of symbols (or text) and converting data into a structured format that can be easily understood and processed by a computer. This involves breaking down the input data into manageable pieces, extracting meaningful information, and organizing it in a way that can be utilized for further processing or analysis.

How does it work?

A data parsing pipeline is a system of pre-written rules and code that passes raw, unstructured data through several stages to transform it into a structured output. Here's a breakdown of a typical data parsing setup:

- Data ingestion: Raw data is collected from various sources such as files, databases, APIs, or user inputs.

- Preprocessing: The raw data undergoes initial cleaning and preprocessing. This might include removing unwanted characters, normalizing data formats, handling missing values, and performing initial validation.

- Lexical analysis: The preprocessed data is split into tokens, the smallest meaningful units such as words, numbers, and symbols. This step uses a lexical analyzer (tokenizer).

- Syntax analysis: Tokens are arranged into a hierarchical structure based on predefined grammar rules. A data parser or syntax analyzer creates a parse tree that represents the structure of the data.

- Semantic analysis: The parse tree is validated to ensure semantic correctness. The semantic analysis component might involve type checking, ensuring consistency of data relationships, and verifying business rules.

- Transformation: The validated data is transformed into the desired output format. This can involve converting data types, restructuring data, and formatting output data according to specifications.

- Output generation: The final structured data is produced. This could be in the form of JSON, XML, database entries, or any other data format suitable for the application.
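The stages above can be sketched end to end in Python. This is a minimal illustration, not a production parser: the `key = value` input format, the token names, and the rule that `age` must be numeric are hypothetical choices made for this example.

```python
import json
import re

# Hypothetical input format: one `key = value` pair per line,
# where each value is an integer or a double-quoted string.
RAW_INPUT = """
name = "John Doe"
age = 30
email = "john.doe@example.com"
"""

# One named group per token kind; MISMATCH catches anything unexpected.
TOKEN_RE = re.compile(
    r'(?P<NUMBER>\d+)|(?P<STRING>"[^"\n]*")|(?P<IDENT>[A-Za-z_]\w*)'
    r"|(?P<EQUALS>=)|(?P<NEWLINE>\n)|(?P<SKIP>[ \t]+)|(?P<MISMATCH>.)"
)


def preprocess(text):
    """Preprocessing: normalize line endings and drop blank lines."""
    lines = text.replace("\r\n", "\n").split("\n")
    return "\n".join(line for line in lines if line.strip()) + "\n"


def tokenize(text):
    """Lexical analysis: turn characters into (kind, value) tokens."""
    tokens = []
    for match in TOKEN_RE.finditer(text):
        kind = match.lastgroup
        if kind == "SKIP":
            continue
        if kind == "MISMATCH":
            raise SyntaxError(f"unexpected character {match.group()!r}")
        tokens.append((kind, match.group()))
    return tokens


def parse(tokens):
    """Syntax analysis: each line must be IDENT EQUALS (NUMBER | STRING) NEWLINE."""
    tree = []
    for i in range(0, len(tokens), 4):
        line = tokens[i:i + 4]
        kinds = [kind for kind, _ in line]
        if (len(line) != 4 or kinds[:2] != ["IDENT", "EQUALS"]
                or kinds[2] not in ("NUMBER", "STRING") or kinds[3] != "NEWLINE"):
            raise SyntaxError(f"malformed line: {line}")
        tree.append({"key": line[0][1], "type": kinds[2], "raw": line[2][1]})
    return tree


def analyze(tree):
    """Semantic analysis: reject duplicate keys and enforce a sample business rule."""
    seen = set()
    for node in tree:
        if node["key"] in seen:
            raise ValueError(f"duplicate key: {node['key']}")
        seen.add(node["key"])
        if node["key"] == "age" and node["type"] != "NUMBER":
            raise ValueError("age must be a number")
    return tree


def transform(tree):
    """Transformation: convert raw token text into native Python values."""
    return {
        node["key"]: int(node["raw"]) if node["type"] == "NUMBER" else node["raw"][1:-1]
        for node in tree
    }


def run_pipeline(raw_text):
    record = transform(analyze(parse(tokenize(preprocess(raw_text)))))
    return json.dumps(record)  # Output generation


print(run_pipeline(RAW_INPUT))
# {"name": "John Doe", "age": 30, "email": "john.doe@example.com"}
```

Keeping each stage in its own function makes it easy to test stages separately or swap one out (for example, a different output format) without touching the rest.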

What data can you parse?

Data parsing technologies support various types of data, depending on the format and structure of the input. Here are some common types of data that a data parser can process:

Text Data

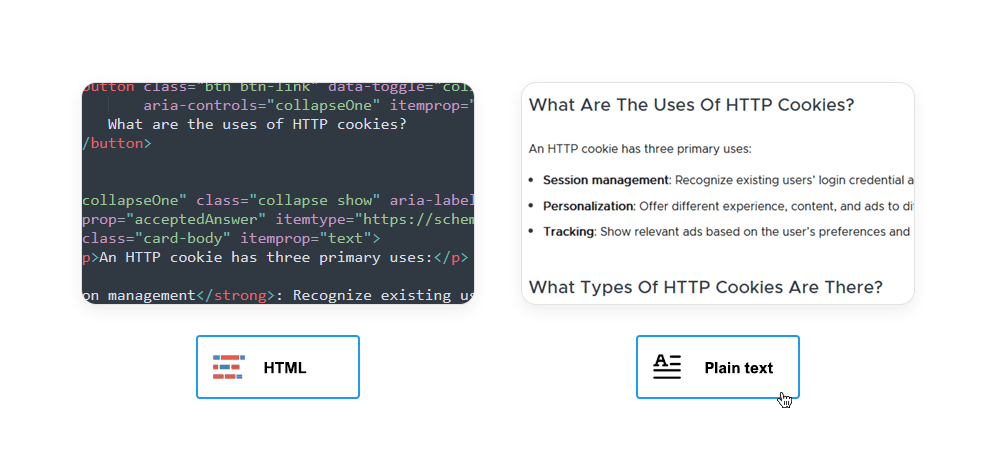

Plain text: Simple text files containing unformatted text. A data parsing tool identifies meaningful sections, such as lines or words.

Log files: These files often contain entries with a specific format, such as timestamps, error messages, and user actions. Parsing log data helps extract and analyze specific information.
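As a sketch of log parsing, the snippet below uses a regular expression with named groups to turn each log line into a dictionary. The log format (`<date> <time> <LEVEL> [<component>] <message>`) is a hypothetical example; real log formats differ, so the pattern must be adapted.

```python
import re

# Hypothetical log format: "<date> <time> <LEVEL> [<component>] <message>"
LOG_PATTERN = re.compile(
    r"(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) "
    r"(?P<level>[A-Z]+) "
    r"\[(?P<component>[^\]]+)\] "
    r"(?P<message>.*)"
)


def parse_log_line(line):
    """Return the named fields of a log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    return match.groupdict() if match else None


lines = [
    "2024-05-01 12:00:03 ERROR [auth] Login failed for user=alice",
    "2024-05-01 12:00:05 INFO [web] GET /index.html 200",
    "garbled line without the expected structure",
]

# Non-matching lines are dropped here; a real pipeline might log them for review.
entries = [entry for entry in map(parse_log_line, lines) if entry]
errors = [entry for entry in entries if entry["level"] == "ERROR"]
print(errors[0]["message"])  # Login failed for user=alice
```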

Markup Languages

HTML (HyperText Markup Language): The standard markup language used to create web pages. A data parsing tool processes an HTML string by extracting information from web pages, such as text content, links, and images.

Markdown: A lightweight markup language with plain text formatting syntax. A Markdown data parser converts it into HTML or another structured format.
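For HTML, a minimal extractor can be built on Python's standard-library `html.parser` module, which calls a handler method for each tag and text fragment it encounters. The `PageExtractor` class and the sample page below are illustrative; note that this sketch does not filter out `<script>` or `<style>` contents.

```python
from html.parser import HTMLParser


class PageExtractor(HTMLParser):
    """Collects link targets, image sources, and text content from an HTML string."""

    def __init__(self):
        super().__init__()
        self.links, self.images, self.text = [], [], []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)  # attrs arrives as a list of (name, value) pairs
        if tag == "a" and attributes.get("href"):
            self.links.append(attributes["href"])
        elif tag == "img" and attributes.get("src"):
            self.images.append(attributes["src"])

    def handle_data(self, data):
        if data.strip():  # skip whitespace-only text between tags
            self.text.append(data.strip())


page = """
<html><body>
  <h1>Catalog</h1>
  <p>See <a href="/products">our products</a> or <a href="https://example.com/about">about us</a>.</p>
  <img src="/logo.png" alt="Company logo">
</body></html>
"""

parser = PageExtractor()
parser.feed(page)
parser.close()  # flush any buffered input
print(parser.links)   # ['/products', 'https://example.com/about']
print(parser.images)  # ['/logo.png']
```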

Binary Data

Images: Binary files that contain image data. A data parser extracts metadata (e.g., dimensions, color space) and sometimes the actual pixel data.

Audio and video: Binary files containing multimedia content. Here, data parsing extracts metadata (e.g., duration, codec information) and separates the streams for playback or processing.
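Binary parsing usually means reading fields at known byte offsets. As an example, a PNG file begins with an 8-byte signature followed by an `IHDR` chunk that stores the width and height as big-endian 32-bit integers. The sketch below reads those fields with Python's `struct` module; `make_sample_header` is a hypothetical helper that builds just enough bytes to demonstrate the parser without a real image file.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def parse_png_header(data: bytes) -> dict:
    """Read width, height, bit depth, and color type from a PNG's IHDR chunk."""
    if len(data) < 26 or not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    # The first chunk must be IHDR: a 4-byte big-endian length, then the 4-byte type
    length, chunk_type = struct.unpack(">I4s", data[8:16])
    if chunk_type != b"IHDR" or length != 13:
        raise ValueError("missing or malformed IHDR chunk")
    width, height, bit_depth, color_type = struct.unpack(">IIBB", data[16:26])
    return {"width": width, "height": height, "bit_depth": bit_depth, "color_type": color_type}


def make_sample_header(width: int, height: int) -> bytes:
    """Build only the signature and IHDR chunk of an 8-bit RGB PNG (no pixel data)."""
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 2, 0, 0, 0)
    crc = zlib.crc32(b"IHDR" + ihdr)
    return PNG_SIGNATURE + struct.pack(">I", len(ihdr)) + b"IHDR" + ihdr + struct.pack(">I", crc)


print(parse_png_header(make_sample_header(640, 480)))
# {'width': 640, 'height': 480, 'bit_depth': 8, 'color_type': 2}
```

With a real file, reading the first 26 bytes is enough: `with open("image.png", "rb") as f: parse_png_header(f.read(26))`.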

Data from APIs

RESTful APIs: These APIs often return data in JSON or XML format. The data parsing process involves converting the response data into a usable format for further processing or display.

SOAP APIs: These APIs utilize XML-based messaging. We can use a data parser to read the XML response and extract the necessary information.

Natural Language

Sentences and phrases: Natural language processing involves breaking down sentences into parts of speech (e.g., nouns, verbs, adjectives) and understanding the grammatical structure.

Named entities: Identifying and classifying entities in text (e.g., names of people, organizations, locations) through Named Entity Recognition (NER).

Sensor Data

IoT devices: Data from Internet of Things (IoT) devices, often in JSON or binary formats. The data parsing process involves extracting sensor readings, timestamps, and other relevant information.

Telemetry data: Data collected from various sensors, typically involving time-series data. In this scenario, data parsing refers to reading the data streams and converting them into structured formats for analysis.
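For the IoT and telemetry cases above, binary payloads are typically decoded against a fixed, documented layout. The sketch below uses Python's `struct.iter_unpack` on a hypothetical 12-byte little-endian packet (timestamp, temperature × 100, humidity × 100, device ID) invented for illustration; a real device's documentation defines its own layout.

```python
import struct
from datetime import datetime, timezone

# Hypothetical 12-byte little-endian packet layout:
#   uint32 Unix timestamp | int16 temperature x100 | uint16 humidity x100 | uint32 device ID
PACKET_FORMAT = "<IhHI"
PACKET_SIZE = struct.calcsize(PACKET_FORMAT)  # 12 bytes


def parse_packets(stream: bytes) -> list[dict]:
    """Split a byte stream into fixed-size packets and decode each one."""
    if len(stream) % PACKET_SIZE:
        raise ValueError("stream length is not a multiple of the packet size")
    readings = []
    for timestamp, temp_raw, hum_raw, device_id in struct.iter_unpack(PACKET_FORMAT, stream):
        readings.append({
            "device_id": device_id,
            "time": datetime.fromtimestamp(timestamp, tz=timezone.utc).isoformat(),
            "temperature_c": temp_raw / 100,  # scaled integers avoid sending floats
            "humidity_pct": hum_raw / 100,
        })
    return readings


stream = (struct.pack(PACKET_FORMAT, 1714521600, 2150, 4575, 42)
          + struct.pack(PACKET_FORMAT, 1714521660, -525, 8010, 42))
readings = parse_packets(stream)
for reading in readings:
    print(reading)
```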

Financial Data

Market data: Stock prices, trading volumes, and other financial metrics, often in CSV or JSON formats. Here, data parsing refers to extracting and structuring the data for analysis.

Financial reports: Company financial statements in various formats (PDF, HTML). Data parsing involves extracting key financial metrics and information.

Scientific Data

Genomic data: DNA sequences and related data, often in FASTA or FASTQ formats. Data parsing involves reading the sequence data and metadata.

Climate data: Weather and climate records in various formats (e.g., netCDF). Data parsing involves extracting and structuring data for analysis.
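For the genomic case above, the FASTA format is simple enough to parse by hand: a line starting with `>` holds a record's header, and the following lines hold its sequence, possibly wrapped across several lines. The `parse_fasta` function below is an illustrative sketch that handles FASTA only (not FASTQ); for production work, a dedicated bioinformatics library is the usual choice.

```python
def parse_fasta(text):
    """Return a dict mapping each FASTA header to its full (unwrapped) sequence."""
    records = {}
    header = None
    seq_parts = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            if header is not None:
                records[header] = "".join(seq_parts)  # close the previous record
            header = line[1:].strip()
            seq_parts = []
        elif header is None:
            raise ValueError("sequence data found before the first header")
        else:
            seq_parts.append(line)
    if header is not None:
        records[header] = "".join(seq_parts)
    return records


sample = """>seq1 sample gene
ATGCGT
ACGT
>seq2
GGCC
"""
print(parse_fasta(sample))  # {'seq1 sample gene': 'ATGCGTACGT', 'seq2': 'GGCC'}
```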

How Can a Data Parser Improve Your Business Processes?

Data parsers can benefit business processes in several ways. Let's explore the most important ones:

Time and Money Saved

Data parsing automates the extraction and processing of data, significantly reducing the need for manual intervention. This automation accelerates data processing, enabling businesses to quickly access and use their data. As a result, employees spend less time on repetitive tasks and can focus on higher-value activities such as strategic planning and analysis. Automation also minimizes the risk of human error, leading to more accurate and reliable data, which further saves time and reduces the cost of correcting errors.

Greater Data Flexibility

Data parsing enables businesses to reuse data and convert data formats into standardized structures, enhancing data flexibility. With parsed data, organizations can easily integrate and use information from various sources, regardless of its original format. This standardization simplifies data manipulation, making it easier to perform operations such as filtering, sorting, and transforming data for different analytical purposes. As a result, businesses can adapt quickly to changing data requirements and leverage a wide range of data sources to gain comprehensive insights.

Higher Quality Data

Data parsing plays a vital role in improving data quality by helping to clean and standardize collected information. During the data parsing process, data is validated and transformed to meet predefined standards and formats, which helps identify and correct errors early on. This structured approach to data handling minimizes inconsistencies and discrepancies, leading to a higher degree of data integrity. High-quality data is essential for reliable analytics, as it ensures that insights and decisions are based on accurate and dependable information.

Simplified Data Integration

Data parsing simplifies the integration of information from multiple sources by converting raw data into a single format. This uniformity allows businesses to seamlessly integrate data from disparate systems, such as databases, APIs, and flat files, creating a consolidated view of information. Simplified data integration facilitates the development of comprehensive datasets that provide a holistic perspective on business operations, customer behavior, and market trends. As a result, organizations can perform more thorough and insightful analyses, driving better strategic decisions.

Improved Data Analysis

Data parsing enhances data analysis by providing well-structured and clean data that is ready for in-depth examination. Parsed data allows data analysts to focus on deriving insights rather than spending time on data cleaning and preparation. This streamlined approach enables more efficient and effective analysis, as high-quality, structured data is essential for accurate and meaningful results. An improved data analysis process leads to better understanding of business trends, customer preferences, and operational efficiencies, supporting informed decision-making.

Common Data Formats

When it comes to data parsing, the most popular data formats are JSON, XML, and CSV. Let's explore how each one is structured and parsed, and what it offers:

JSON (JavaScript Object Notation)

JSON is a lightweight data-interchange format that is easy for humans to read and write and easy for machines to parse and generate. It is primarily used for transmitting data in web applications between a server and a client.

JSON data is represented as key-value pairs. Keys are strings, and values can be strings, numbers, arrays, objects, true/false, or null. JSON objects are enclosed in curly braces {}, and arrays are enclosed in square brackets []. Parsing JSON involves converting the JSON string into a data structure like a dictionary in Python or an object in JavaScript. This allows easy access and manipulation of the data.

Example:

```json
{
  "name": "John Doe",
  "age": 30,
  "email": "john.doe@example.com",
  "hobbies": ["reading", "traveling", "swimming"]
}
```
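In Python, the standard-library `json` module performs the conversion described above: `json.loads` turns the example into a dictionary whose values already have native types. The snippet below is a short illustration, including what happens with malformed input.

```python
import json

raw = """
{
  "name": "John Doe",
  "age": 30,
  "email": "john.doe@example.com",
  "hobbies": ["reading", "traveling", "swimming"]
}
"""

person = json.loads(raw)  # JSON object -> dict, array -> list, number -> int/float
print(person["name"])         # John Doe
print(person["age"] + 1)      # 31 (already an int, no conversion needed)
print(person["hobbies"][-1])  # swimming

# Malformed input (here, a trailing comma) raises json.JSONDecodeError
try:
    json.loads('{"name": "John Doe",}')
except json.JSONDecodeError as exc:
    print(f"Invalid JSON at line {exc.lineno}, column {exc.colno}: {exc.msg}")
```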

JSON offers these benefits:

- Easily readable data.

- Supported by many programming languages.

- Ideal for hierarchical data representation.

- Widely used in web APIs and configuration files.

XML (eXtensible Markup Language)

XML is a markup language designed to store and transport data. It is both human-readable and machine-readable, with a focus on simplicity, generality, and usability across the Internet.

XML data is organized into a hierarchical structure with elements enclosed in tags. Each element has an opening tag <tag> and a closing tag </tag>. Elements can have attributes, text content, and nested child elements. Parsing XML involves reading the tags and attributes and either building an in-memory tree that can be traversed and modified, as DOM (Document Object Model) parsers do, or processing the document as a stream of events, as SAX (Simple API for XML) parsers do.

Example:

```xml
<person>
  <name>John Doe</name>
  <age>30</age>
  <email>john.doe@example.com</email>
  <hobbies>
    <hobby>reading</hobby>
    <hobby>traveling</hobby>
    <hobby>swimming</hobby>
  </hobbies>
</person>
```
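Python's built-in `xml.etree.ElementTree` module builds a tree from the example above, which can then be queried with simple paths. Unlike JSON, all XML text is a string, so numeric fields must be converted explicitly. This is a minimal sketch; for very large files, `ElementTree.iterparse` offers a streaming alternative.

```python
import xml.etree.ElementTree as ET

raw = """
<person>
  <name>John Doe</name>
  <age>30</age>
  <email>john.doe@example.com</email>
  <hobbies>
    <hobby>reading</hobby>
    <hobby>traveling</hobby>
    <hobby>swimming</hobby>
  </hobbies>
</person>
"""

root = ET.fromstring(raw.strip())  # root is the <person> element
person = {
    "name": root.findtext("name"),
    "age": int(root.findtext("age")),  # XML text is always a string
    "email": root.findtext("email"),
    "hobbies": [hobby.text for hobby in root.findall("hobbies/hobby")],
}
print(person)
# {'name': 'John Doe', 'age': 30, 'email': 'john.doe@example.com', 'hobbies': ['reading', 'traveling', 'swimming']}
```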

XML offers these benefits:

- Highly flexible and self-descriptive.

- Supports nested and complex data structures.

- Extensively used in web services (SOAP) and document storage.

- Facilitates data validation using DTD or XML Schema.

CSV (Comma-Separated Values)

CSV is a simple text format for storing tabular data, where each line represents a data record, and each record consists of fields separated by commas. It is widely used for data exchange between applications, especially spreadsheets and databases.

CSV data is structured in rows and columns. The first row often contains headers (column names), and subsequent rows contain data values. Each field is separated by a comma, and each row is a new line. Parsing CSV involves reading the file line by line, splitting each line into fields based on the delimiter (comma), and converting the data into a structured format like a list of dictionaries or a data frame. Libraries like Python's csv module or pandas make this process straightforward.

Example:

```csv
name,age,email,hobbies
John Doe,30,john.doe@example.com,"reading, traveling, swimming"
```

CSV offers these benefits:

- Easy to read and write using simple text editors.

- Supported by many data processing tools and applications.

- Ideal for flat, tabular data representation.

- Lightweight and easy to parse.
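The CSV example above also shows why a real CSV parser beats splitting on commas: the quoted `hobbies` field contains commas of its own. The sketch below compares a naive split with Python's `csv.DictReader`, which respects the quotes and maps each row to the header names.

```python
import csv
import io

raw = 'name,age,email,hobbies\nJohn Doe,30,john.doe@example.com,"reading, traveling, swimming"\n'

# A naive split on commas breaks the quoted "hobbies" field apart
naive = raw.splitlines()[1].split(",")
print(len(naive))  # 6 fields instead of 4

# csv.DictReader honors the quotes and uses the first row as keys
row = next(csv.DictReader(io.StringIO(raw)))
print(row["hobbies"])  # reading, traveling, swimming

age = int(row["age"])  # CSV values are always strings; convert explicitly
hobbies = [hobby.strip() for hobby in row["hobbies"].split(",")]
```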

Business Use Cases of Data Parsing

Resume parsing

In this scenario, a data parser extracts data from resumes and converts it into a structured format for easy analysis and integration into applicant tracking systems (ATS). This process identifies key details such as the candidate's name, contact information, work experience, education, skills, and certifications. By automating resume parsing, HR teams can quickly screen and sort candidates, significantly reducing the time and effort required for the initial stages of the recruiting process. This efficiency allows HR departments to focus on more strategic activities, such as interviewing and candidate engagement, while applying the same criteria consistently to every applicant.

Email parsing

Email data parsing automates the extraction of structured data from emails, converting unstructured data into meaningful information. This can include parsing order confirmations, support tickets, lead information, or any other data embedded within email content. Businesses use email parsing to streamline workflows by automatically processing incoming emails, updating databases, triggering actions, creating marketing campaigns, or integrating with CRM systems. For instance, support teams can use email data parsing to create tickets directly from customer inquiries, ensuring timely responses and better customer service. By reducing manual data entry, email parsing enhances productivity and accuracy in handling email communications.
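A simple email parser can combine Python's standard-library `email` package, which handles headers and MIME structure, with regular expressions for the body. In the sketch below, the order-confirmation email and its "Order number:" and "Total:" lines are hypothetical; real templates vary by sender, so the patterns would need adjusting.

```python
import re
from email import message_from_string
from email.policy import default

# Hypothetical order-confirmation email
RAW_EMAIL = """\
From: Example Shop <orders@example.com>
To: customer@example.com
Subject: Order confirmation A-10042
Content-Type: text/plain; charset="utf-8"

Thank you for your order!
Order number: A-10042
Total: 59.90 USD
"""


def parse_order_email(raw):
    msg = message_from_string(raw, policy=default)  # policy=default yields an EmailMessage
    body_part = msg.get_body(preferencelist=("plain",))  # best plain-text part, if any
    body = body_part.get_content() if body_part is not None else ""
    order = re.search(r"Order number:\s*(\S+)", body)
    total = re.search(r"Total:\s*([\d.]+)\s*([A-Z]{3})", body)
    return {
        "sender": msg["From"].addresses[0].addr_spec,
        "subject": str(msg["Subject"]),
        "order_number": order.group(1) if order else None,
        "total": float(total.group(1)) if total else None,
        "currency": total.group(2) if total else None,
    }


print(parse_order_email(RAW_EMAIL))
```

Returning `None` for missing fields, rather than raising, lets a workflow route incomplete emails to manual review instead of failing.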

Investments

In the investment sector, data parsing is used to extract and analyze financial information from diverse sources such as market feeds, financial reports, news articles, and regulatory filings. Parsing tools can quickly process large volumes of data to identify trends, patterns, and insights that inform investment strategies. For instance, parsing earnings reports and market news allows analysts to assess company performance and market conditions in real time. This timely and accurate information enables investors to make informed decisions, optimize portfolio management, and perform stock analysis.

Ecommerce and marketing

Data parsing in ecommerce and marketing involves extracting actionable insights from various data sources like customer reviews, product descriptions, sales data, and social media feeds. By parsing this data, businesses can gain a deeper understanding of customer preferences, market trends, and competitors’ performance. For example, analyzing customer reviews and feedback helps identify popular products and areas for improvement. Similarly, parsing sales data can reveal purchasing patterns and optimize inventory management. In marketing, parsed data supports targeted campaigns by identifying customer segments, optimizing content, and measuring campaign performance, ultimately driving higher engagement and sales.

Finance and Accounting

In finance and accounting, data parsing is used to automate the extraction and processing of financial data from documents like invoices, credit reports, bank statements, and tax forms. This automation reduces manual data entry, minimizes errors, and ensures that financial records are accurate and up-to-date. For example, parsing invoices can automate accounts payable processes by extracting vendor names, invoice numbers, amounts, and due dates and passing them to accounting software. Similarly, parsing bank statements helps reconcile transactions and maintain accurate records. By improving data accuracy and efficiency, data parsing supports better financial reporting, compliance, and decision-making.

Shipping and Logistics

Data parsing in shipping and logistics involves extracting and organizing data from sources such as shipping labels, tracking updates, delivery receipts, and inventory reports. With this data in a structured format, companies can track shipments, handle billing, and optimize delivery routes more effectively. For instance, parsing tracking updates allows real-time monitoring of shipments, providing accurate delivery estimates and enhancing customer communication. Parsing inventory reports helps keep stock levels accurate, reducing stockouts and overstock situations. By streamlining data handling, data parsing enhances operational efficiency, reduces costs, and improves customer satisfaction in the logistics sector.

Real Estate

In real estate, data parsing helps extract and analyze information from property listings, market reports, legal documents, emails, and customer inquiries. This data is crucial for real estate agents, investors, and property managers to make informed decisions. For example, parsing property listings helps agents quickly identify suitable properties for clients by extracting key details like price, location, and features. Parsing market reports provides insights into price trends and cash flow data, aiding investment decisions. Additionally, parsing legal documents such as lease agreements supports compliance and accurate record-keeping. By converting unstructured data into actionable insights, data parsing enhances efficiency and decision-making in the real estate industry.

Challenges in Data Parsing

However, data parsing isn't without its share of obstacles and challenges. The most common problems you may encounter are:

Handling Errors and Inconsistencies

One of the significant challenges in data parsing is managing errors and inconsistencies within the raw input data. Data often comes from various sources with differing standards and quality levels, leading to issues such as missing values, incorrect formats, and syntax errors. These inconsistencies can cause data parsing failures or incorrect data extraction, which in turn affects the reliability of the parsed data. Addressing this challenge requires implementing robust error handling and data validation techniques to identify, report, and correct errors during the data parsing process. Ensuring data quality through pre-processing steps, such as data cleaning and normalization, is crucial for maintaining accuracy and reliability in the parsed output.
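A common pattern for the error handling described above is to validate and normalize each record individually, collecting failures instead of letting one bad record stop the whole run. The sketch below uses hypothetical `id`, `price`, and `date` fields; its decimal-comma normalization is deliberately simple (values with thousands separators such as `1,234.50` would be reported as errors rather than guessed at).

```python
from datetime import date

raw_rows = [
    {"id": "1", "price": "19.99", "date": "2024-05-01"},
    {"id": "2", "price": "", "date": "2024-05-02"},        # missing value
    {"id": "3", "price": "12,50", "date": "2024-05-03"},   # decimal comma
    {"id": "4", "price": "7.00", "date": "05/04/2024"},    # unexpected date format
]


def normalize_price(value):
    value = value.strip().replace(",", ".")  # normalize decimal commas
    if not value:
        raise ValueError("missing price")
    return float(value)


def parse_row(row):
    return {
        "id": int(row["id"]),
        "price": normalize_price(row["price"]),
        "date": date.fromisoformat(row["date"]),  # only accepts ISO 8601 dates
    }


valid, errors = [], []
for row in raw_rows:
    try:
        valid.append(parse_row(row))
    except (KeyError, ValueError) as exc:
        errors.append((row.get("id"), str(exc)))  # report and continue

print(f"{len(valid)} valid rows, {len(errors)} rejected: {errors}")
```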

Dealing With Large Amounts of Data

Processing large volumes of data, such as web data collected at scale, can be challenging because of the significant computational resources and time required. High data volume can lead to performance bottlenecks, making it difficult to process data in a timely manner. Efficient algorithms and scalable data parsing solutions are essential to handle large datasets effectively. Techniques such as parallel processing, distributed computing, and optimizing data storage and retrieval methods can help manage large-scale data parsing. Additionally, leveraging cloud-based solutions and big data technologies can enhance the ability to process and parse data, ensuring that businesses can extract valuable insights without being hindered by data volume.
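One of the simplest techniques for large inputs is streaming: processing one record at a time instead of loading the whole file into memory. The sketch below aggregates a CSV with hypothetical `category` and `amount` columns; parallel and distributed approaches (for example, Apache Spark) build on the same idea but are beyond a short example.

```python
import csv
import io
from collections import defaultdict


def total_by_category(file_obj):
    """Aggregate amounts per category while reading one row at a time."""
    totals = defaultdict(float)
    # csv.DictReader pulls rows lazily from the file object, so memory use
    # depends on the number of categories, not on the number of rows
    for row in csv.DictReader(file_obj):
        totals[row["category"]] += float(row["amount"])
    return dict(totals)


# Simulated input held in memory for the demo; with a real file, pass
# open(path, newline="", encoding="utf-8") instead so it is read incrementally
lines = ["category,amount"] + [
    ("books" if i % 2 else "games") + ",1.5" for i in range(100_000)
]
big_file = io.StringIO("\n".join(lines) + "\n")
print(total_by_category(big_file))  # {'games': 75000.0, 'books': 75000.0}
```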

Handling Different Data Formats

Another challenge in data parsing is dealing with various data formats, each with its unique structure and data parsing requirements. Businesses often receive data in multiple formats such as JSON, XML, CSV, and proprietary formats, which necessitates different data parsing strategies. Ensuring compatibility and interoperability between these diverse formats, including differences in character encodings, can be complex and time-consuming. Developing flexible and adaptable data parsing tools that can handle multiple formats and seamlessly integrate them into a unified structure is crucial. Using libraries and frameworks that support each specific data format can simplify this process, enabling efficient and accurate data parsing across different sources.
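A flexible parser for several formats can be sketched as a dispatch table: one small function per format, all returning the same unified structure (here, a list of dictionaries). The `parse_any` function and the flat `<records><record>…</record></records>` XML layout are assumptions made for this illustration; real feeds need format-specific handling.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET


def parse_json(text):
    data = json.loads(text)
    return data if isinstance(data, list) else [data]


def parse_csv(text):
    return list(csv.DictReader(io.StringIO(text)))


def parse_xml(text):
    # Assumes a flat layout: <records><record><field>value</field>...</record></records>
    root = ET.fromstring(text)
    return [{child.tag: child.text for child in record} for record in root]


PARSERS = {"json": parse_json, "csv": parse_csv, "xml": parse_xml}


def parse_any(raw: bytes, fmt: str, encoding: str = "utf-8-sig"):
    """Decode bytes, then dispatch to the parser registered for the format."""
    # "utf-8-sig" also strips a leading byte-order mark, common in spreadsheet exports
    text = raw.decode(encoding)
    try:
        parser = PARSERS[fmt.lower()]
    except KeyError:
        raise ValueError(f"unsupported format: {fmt}") from None
    return parser(text)


records = (
    parse_any(b'[{"name": "Ann", "city": "Oslo"}]', "json")
    + parse_any("\ufeffname,city\nBob,Paris\n".encode("utf-8"), "csv")
    + parse_any(b"<records><record><name>Cid</name><city>Rome</city></record></records>", "xml")
)
print(records)
```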

Tools and Technologies for Data Parsing

| Tool | Description | Use case | Pros | Cons |
|---|---|---|---|---|
| BeautifulSoup | Python library for parsing HTML and XML documents. Creates a parse tree from a page, which can be used to extract data from HTML tags. | Web scraping to extract data from websites. | Easy to use, well documented, handles messy and malformed HTML. | Slower than some alternatives on large documents. |
| pandas | Python library for data manipulation and analysis. Provides the DataFrame and Series data structures for tabular data, plus tools for reading and writing CSV, Excel, SQL databases, JSON, and other formats. | Data analysis, manipulation, and transformation. | Versatile, integrates well with other Python libraries, handles large datasets efficiently. | Steeper learning curve for beginners. |
| Apache Spark | Unified analytics engine for large-scale data processing. Provides an interface for programming entire clusters with implicit data parallelism and fault tolerance. | Big data processing, distributed computing. | Very fast, handles large datasets, supports multiple languages (Python, Java, Scala, R). | Complex to set up, requires significant computational resources. |
| Scrapy | Open-source and collaborative web crawling framework for Python, designed to scrape and extract data from websites in a fast, simple, and extensible way. | Web scraping and crawling. | Powerful and flexible, handles complex websites, well documented. | Requires some programming knowledge; not as beginner-friendly as some other tools. |
| OpenRefine | Tool for working with messy data: cleaning it, transforming it from one format into another, and extending it with web services and external data. | Data cleaning and transformation. | User-friendly interface, powerful for data cleaning tasks. | Limited to data cleaning and transformation; not a full-fledged data analysis tool. |
| jsoup | Java library for working with real-world HTML documents. Provides a convenient API for extracting and manipulating data, using the best of DOM, CSS, and jQuery-like methods. | HTML parsing and web scraping. | Simple API, handles malformed HTML well, fast. | Limited to Java; not as versatile as some multi-purpose libraries. |
| Regex (regular expressions) | A regular expression is a sequence of characters that defines a search pattern, used for string matching and manipulation. | Text processing and extraction. | Powerful for pattern matching, available in many programming languages. | Complex patterns can be hard to write and understand; not suitable for very large or complex parsing tasks. |
| Logstash | Open-source server-side data processing pipeline that ingests data from many sources simultaneously, transforms it, and sends it to a “stash” such as Elasticsearch. | Log and event data processing. | Powerful and scalable, integrates well with the Elastic Stack, supports a wide range of input sources. | Can be resource-intensive; requires configuration and setup. |
| Talend | Open-source data integration platform that provides tools for data integration, data management, enterprise application integration, data quality, and big data. | ETL (Extract, Transform, Load) processes. | Comprehensive suite of tools, user-friendly interface, supports big data technologies. | Can be complex for beginners; some features are only available in the paid version. |
| xml.etree.ElementTree | Built-in Python library for parsing and creating XML data, with a simple and efficient API. | XML parsing and manipulation. | Lightweight, easy to use, included in the Python standard library. | Limited to XML; less powerful than dedicated XML libraries like lxml. |

Buy or build a data parser?

Choosing whether to build a parser yourself or to buy a parser from a third party depends on the specific needs, parser maintenance resources, and strategic goals of the business.

Benefits of Building a Data Parser

- Customization: Building a custom parser allows for complete customization to meet specific business needs and handle unique data formats and structures. You can tailor the data parser to integrate seamlessly with existing systems and workflows.

- Control: Having control over the data parser's functionality, updates, and enhancements ensures that your own data parser evolves according to your requirements. This can lead to better performance and more precise handling of specific data parsing tasks.

- Security: A custom parser can be designed with stringent security measures tailored to the business's specific needs, reducing the risk of vulnerabilities and data breaches.

Downsides of Building Your Own Data Parser

- Time-consuming: Building a data parser from scratch can be a lengthy process, requiring significant time for hiring developers, design, development, testing, and debugging.

- Resource-intensive: Building a data parser requires skilled developers, server infrastructure, and ongoing maintenance, which can strain resources, especially for small businesses with limited technical expertise.

- Complex: Handling different data formats and ensuring robust error handling introduces numerous design challenges, making it difficult to achieve a fully functional data parser without extensive testing and refinement.

Benefits of Buying a Data Parser

- Quick deployment: Premade data parsing tools can be deployed quickly, enabling businesses to start parsing data almost immediately without the long development timelines associated with custom builds.

- Cost-effective: Although there is an upfront purchase cost, premade solutions usually include maintenance and often prove more cost-effective in the long run by eliminating the need for dedicated development resources.

- Reliability: Established data parsers undergo constant testing, ensuring reliability and robustness. They also typically come with customer support and regular updates, enhancing their stability and performance.

Downsides of Buying a Data Parser

- Limited customization: Premade solutions may offer less control over customization, making it challenging to tailor the data parsing tool to specific business needs or unique data formats.

- Dependency: Relying on third-party solutions means dependency on the vendor for updates, support, and future enhancements. This can be problematic if the vendor discontinues the product or makes it much more expensive.

- Integration challenges: While many premade solutions offer broad compatibility, integrating them with existing systems and workflows can still pose challenges, potentially requiring additional customization or middleware to ensure seamless operation.

How to do data scraping with Infatica

Infatica is a proxy service provider that can significantly enhance data collection efforts by offering a range of features designed to improve efficiency, reliability, and anonymity. Here's how Infatica can help with data scraping:

- IP rotation: Infatica provides a large pool of residential IP addresses that can be rotated automatically. This helps in distributing requests across multiple IPs, reducing the risk of getting blocked by target websites.

- Residential proxies: Infatica offers residential proxies, which are IP addresses provided by ISPs to homeowners. These proxies are legitimate IP addresses assigned to real devices, so requests routed through them look like ordinary user traffic.

- High uptime and reliability: Infatica ensures high uptime and reliable proxy services, crucial for continuous and large-scale data scraping operations.

- Fast and secure connections: Infatica provides fast proxy servers with secure connections, ensuring that data scraping is both quick and safe from interception.

- User-friendly interface and support: Infatica offers an easy-to-use interface for managing proxies and provides robust customer support to assist with any issues or queries.
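To illustrate the IP rotation idea in general terms, the sketch below routes each request through the next proxy in a list using Python's standard `urllib.request.ProxyHandler`. The proxy URLs and credentials are hypothetical placeholders, not Infatica's actual endpoints; the real gateway address and authentication format come from your provider's documentation, and many providers rotate IPs on their side so a single gateway URL may be enough.

```python
import itertools
import urllib.request

# Hypothetical placeholder endpoints: replace with the values from your proxy provider
PROXIES = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
]
_proxy_cycle = itertools.cycle(PROXIES)  # simple client-side round-robin


def next_opener():
    """Return the next proxy URL and an opener that routes HTTP(S) through it."""
    proxy = next(_proxy_cycle)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return proxy, urllib.request.build_opener(handler)


def fetch(url, timeout=30):
    """Fetch a page through the next proxy in the rotation."""
    proxy, opener = next_opener()
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with opener.open(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

The fetched HTML can then be passed to any of the parsing approaches discussed earlier.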