With correct data as your company’s most important asset, data parsing can unleash its potential and help you create better products. The problem, however, is using data parsing tools effectively – and choosing the right ones for the job. In this article, we’re taking a closer look at data parsing’s under-the-hood mechanisms: How do data parsers work? How can they be useful? What is data-driven data parsing? Which is the better option: building a custom parser or using a SaaS solution? Let’s dive into data parsers and see their strengths and weaknesses.

What is Data Parsing?

Data parsing is the process of converting data from one format into another.

Why is data parsing important? The ability to juggle different data formats may seem insignificant, but there is a real difference between human-readable and machine-readable data. For a computer to store and process data efficiently, the data needs to be organized in a strict (i.e. predictable) way, so that a program can work with complex data by following specific instructions.

HTML (HyperText Markup Language) is a good example of this concept: It uses tags to mark up and organize the contents of every web page. For instance, a table containing product prices will be enclosed in a <table> element, allowing web scraping tools to quickly locate tables on any page.
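As a rough illustration of how predictable markup helps a program, the sketch below uses Python's standard `html.parser` module to collect the cells of a price table. The `TableExtractor` class and the sample page fragment are invented for this example, and real pages are usually messier than this.

```python
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collect the text of every cell found inside <table> elements."""

    def __init__(self):
        super().__init__()
        self.rows = []      # finished rows, each a list of cell strings
        self._depth = 0     # how many <table> elements are currently open
        self._row = None    # row being built, or None
        self._cell = None   # text fragments of the current cell, or None

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self._depth += 1
        elif self._depth and tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag == "table" and self._depth:
            self._depth -= 1
        elif tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


# Hypothetical page fragment, invented for this example.
SAMPLE_HTML = """
<h1>Price list</h1>
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Keyboard</td><td>49.99</td></tr>
  <tr><td>Mouse</td><td>19.50</td></tr>
</table>
"""

extractor = TableExtractor()
extractor.feed(SAMPLE_HTML)
extractor.close()

# The first row is the header; the rest map product names to prices.
header, *records = extractor.rows
prices = {name: float(price) for name, price in records}
print(prices)
```

Because the parser only reacts to `table`, `tr`, `td`, and `th` tags, the heading above the table is ignored without any extra filtering.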

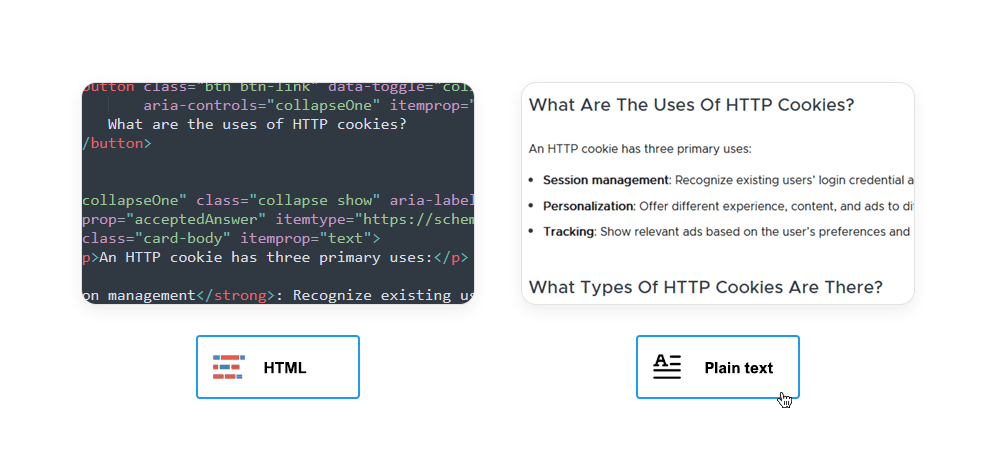

The problem with machine-readable data is the abundance of information that is irrelevant to humans who just want to see the page’s contents at a glance. Here’s what a short paragraph about the <table> tag looks like in raw HTML – although it’s not “unreadable” data per se, it takes more time to scan:

<div class="section-content"><p>The <strong><code>&lt;table&gt;</code></strong> <a href="/en-US/docs/Web/HTML">HTML</a> element represents tabular web data — that is, information presented in a two-dimensional table comprised of rows and columns of cells containing data.</p></div>

Using a data parser, you can strip the HTML tags from the text and get easily readable data. In other scenarios, you may be working with databases, where you can input unstructured data and transform it into structured formats like JSON or CSV.
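A minimal sketch of the tag-stripping step, again using only Python's standard library. The `strip_tags` helper is a name chosen for this example, and the input is a shortened version of the snippet above; a production pipeline would more likely rely on a dedicated HTML parsing library.

```python
import json
from html.parser import HTMLParser


class TextOnly(HTMLParser):
    """Keep the text between tags and drop the tags themselves."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        self.parts.append(data)


def strip_tags(html):
    """Return the readable text of an HTML fragment (hypothetical helper)."""
    parser = TextOnly()
    parser.feed(html)
    parser.close()
    # Collapse runs of whitespace left behind by the removed tags.
    return " ".join("".join(parser.parts).split())


# Shortened version of the raw HTML shown above.
RAW_HTML = (
    '<div class="section-content"><p>The <strong><code>&lt;table&gt;</code>'
    '</strong> <a href="/en-US/docs/Web/HTML">HTML</a> element represents '
    "tabular data.</p></div>"
)

text = strip_tags(RAW_HTML)
print(text)

# The same content stored in a structured format.
as_json = json.dumps({"text": text})
print(as_json)
```

Note that the escaped `&lt;table&gt;` is decoded to `<table>` in the output, because it is text about a tag rather than an actual tag.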

How Does a Data Parser Work?

At its core, every data parser is a collection of technologies, each of which is responsible for a particular task:

- Interpret the device’s commands,

- Segment the source data into separate strings,

- Analyze the strings, and

- Restructure the strings to make the data more organized and readable.

Data parsing allows you to work with a wide variety of data structures, languages, and other technology types: programming languages (e.g. Python, Java), markup languages (e.g. HTML, XML), database languages (e.g. SQL), modeling languages (artificial languages for expressing information), and more.

Types of Data Parsing

Over the years, data parsing has boiled down to two approaches: grammar-driven and data-driven data parsing. They differ in design goals and capabilities, so let’s take a closer look at their strengths and weaknesses:

Grammar-Driven Data Parsing

Grammar is a system of formal rules – and parsing can also use a collection of formal rules similar to natural languages’ grammar. With this approach, the data stream gets divided into segments (sentences) – and the parser analyzes their contents using the predefined rules.

Grammar-driven data parsing, however, may have problems with flexibility: Some data segments may not match the pattern laid out by the model – and the parser would consider them irrelevant. To avoid this, you may need to adjust the grammatical model’s requirements, allowing non-standard data segments to pass the initial filter.
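The trade-off can be sketched with two regular expressions standing in for the grammatical model. The record layout (date, amount, currency), both rules, and the sample segments are assumptions made up for this illustration; real grammar-driven parsers typically use richer formal grammars.

```python
import re

# Strict rule: ISO date, amount with exactly two decimals, uppercase currency code.
STRICT = re.compile(
    r"(?P<date>\d{4}-\d{2}-\d{2}) (?P<amount>\d+\.\d{2}) (?P<currency>[A-Z]{3})"
)

# Relaxed rule: also accepts "/" in dates, whole amounts, and lowercase codes.
RELAXED = re.compile(
    r"(?P<date>\d{4}[-/]\d{2}[-/]\d{2})\s+"
    r"(?P<amount>\d+(?:\.\d{1,2})?)\s+"
    r"(?P<currency>[A-Za-z]{3})"
)


def parse(segments, rule):
    """Split segments into those matching the rule and those rejected by it."""
    parsed, rejected = [], []
    for segment in segments:
        match = rule.fullmatch(segment.strip())
        if match:
            parsed.append(match.groupdict())
        else:
            rejected.append(segment)
    return parsed, rejected


# Hypothetical input segments.
SEGMENTS = [
    "2024-01-15 49.99 USD",
    "2024/01/16 20 eur",
    "refund pending",
]

strict_ok, strict_rejected = parse(SEGMENTS, STRICT)
relaxed_ok, relaxed_rejected = parse(SEGMENTS, RELAXED)

print(len(strict_ok), "parsed by the strict rule")
print(len(relaxed_ok), "parsed by the relaxed rule")
```

The strict rule discards the second segment even though it carries usable data, while the relaxed rule keeps it and still rejects the segment that is not a record at all.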

Data-Driven Data Parsing

Alternatively, information can be parsed via natural language processing models and treebanks, which use semantics to structure data into segments and analyze them. Data-driven data parsing relies more on probabilities and statistics, which allows it to be flexible.
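To give a feel for the statistical idea, here is a deliberately tiny sketch that labels tokens by counting how often each token "shape" appeared with each label in a set of labeled examples. It is a toy frequency model, not an NLP model or a treebank-based parser; the `shape`, `train`, and `predict` functions and the training data are all invented for this example.

```python
from collections import Counter, defaultdict


def shape(token):
    """Reduce a token to a coarse shape, collapsing repeated character kinds."""
    out = []
    for ch in token:
        if ch.isdigit():
            kind = "9"
        elif ch.isupper():
            kind = "A"
        elif ch.islower():
            kind = "a"
        else:
            kind = ch
        if not out or out[-1] != kind:
            out.append(kind)
    return "".join(out)


def train(examples):
    """Count how often each shape was seen with each label."""
    counts = defaultdict(Counter)
    for token, label in examples:
        counts[shape(token)][label] += 1
    return counts


def predict(counts, token):
    """Return the most frequent label for the token's shape and its share."""
    seen = counts.get(shape(token))
    if not seen:
        return ("unknown", 0.0)
    label, hits = seen.most_common(1)[0]
    return (label, hits / sum(seen.values()))


# Hypothetical labeled examples.
TRAINING = [
    ("2024-01-15", "date"), ("2023-11-02", "date"),
    ("49.99", "amount"), ("120.00", "amount"),
    ("USD", "currency"), ("EUR", "currency"), ("NYC", "city"),
]

model = train(TRAINING)
print(predict(model, "2025-06-30"))
print(predict(model, "GBP"))
print(predict(model, "???"))
```

Unlike a fixed rule, the model never rejects an ambiguous token outright: an all-uppercase token is labeled as a currency with a probability below 1, which leaves room for a later step to weigh that guess against other evidence.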

Use Cases of Data Parsers

We’ve established the general use of data parsers – but it may be tricky to see their usefulness in real-world scenarios and business cases. Over the years, different companies and industries have utilized the ability to parse data:

Finance and Accounting

In finance, billions of transactions between different parties contain lots of valuable customer data – and banks and accounting firms use data parsing to perform investment analysis and make better predictions about interest rates, customer behavior, and more. One example is using AI algorithms to make loan decisions and analyze credit reports, which can involve scanning the applicant’s social media profiles and other digital footprints.

Business Workflow Optimization

Structured data can provide a considerable productivity boost, so data analysts utilize parsers to make sense of raw information provided by their data extraction pipeline. This allows companies to acquire actionable data, i.e. data that can help them make informed decisions.

Shipping and Logistics

E-commerce and delivery businesses rely on precise shipping data – they use data parsers to find relevant shipping details and check that their formatting hasn’t been altered.

Real Estate Industry

Real estate agents depend on lead generation from various sources, which can include emails, CRM platforms, market research, and documentation. With the right parser, real estate agents can collect data points like clients’ contact details, property locations, cash flow data, and more – and all of this can help build a more effective sales strategy.

How To Build Your Own Data Parser

A well-functioning data parser will need two components: One enables lexical analysis, while the other makes syntactic analysis possible. Lexical analysis takes the entire dataset and divides it into separate strings (called tokens) based on their lexical qualities (e.g. keywords). Additionally, irrelevant information like whitespace and tags gets removed from the dataset.

During syntactic analysis, the parse tree is created: The tokens are arranged into an interconnected structure that reflects how they relate to each other. Once the tree is built, the parser can save it to a file format of your choice (e.g. JSON), since the irrelevant information has already been separated from the relevant data.
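The two components can be sketched in a few dozen lines. The example below assumes a very small input language (arithmetic expressions with numbers, `+ - * /`, and parentheses) chosen only because it keeps the lexer and parser short; the function and class names are illustrative, not part of any library.

```python
import json
import re

# Lexical rules: a number or a single-character operator, after optional whitespace.
TOKEN_RE = re.compile(r"\s*(?:(?P<NUMBER>\d+(?:\.\d+)?)|(?P<OP>[-+*/()]))")


def tokenize(text):
    """Lexical analysis: split the input into (kind, value) tokens, dropping whitespace."""
    tokens, pos = [], 0
    text = text.rstrip()
    while pos < len(text):
        match = TOKEN_RE.match(text, pos)
        if not match:
            raise SyntaxError(f"unexpected character at position {pos}")
        kind = match.lastgroup
        tokens.append((kind, match.group(kind)))
        pos = match.end()
    return tokens


class Parser:
    """Syntactic analysis: a recursive-descent parser that builds a parse tree."""

    def __init__(self, tokens):
        self.tokens = tokens
        self.pos = 0

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else (None, None)

    def take(self):
        token = self.peek()
        self.pos += 1
        return token

    def expr(self):  # expr := term (("+" | "-") term)*
        node = self.term()
        while self.peek()[1] in ("+", "-"):
            op = self.take()[1]
            node = {"op": op, "left": node, "right": self.term()}
        return node

    def term(self):  # term := factor (("*" | "/") factor)*
        node = self.factor()
        while self.peek()[1] in ("*", "/"):
            op = self.take()[1]
            node = {"op": op, "left": node, "right": self.factor()}
        return node

    def factor(self):  # factor := NUMBER | "(" expr ")"
        kind, value = self.take()
        if kind == "NUMBER":
            return {"number": float(value)}
        if value == "(":
            node = self.expr()
            if self.take()[1] != ")":
                raise SyntaxError("expected ')'")
            return node
        raise SyntaxError(f"unexpected token {value!r}")


def parse(text):
    parser = Parser(tokenize(text))
    tree = parser.expr()
    if parser.pos != len(parser.tokens):
        raise SyntaxError("unexpected trailing input")
    return tree


tree = parse("2 * (3 + 4)")
print(json.dumps(tree, indent=2))  # the parse tree, serialized as JSON
```

The parentheses never appear in the resulting tree: they only guide how the tokens are nested, which is one example of the parser separating structural noise from the data worth keeping.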

To Build Or To Buy?

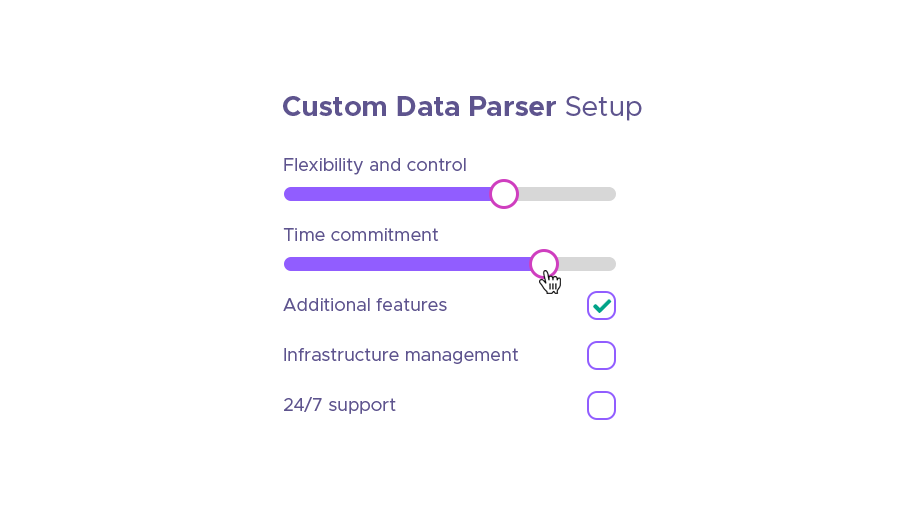

The steps outlined above may seem daunting, which is why some users choose ready-made data parsing tools instead. Like any software-as-a-service product, these tools have their pros and cons, and so does building your own parser. This section compares the two options to help you make an informed decision.

Building a Data Parser

Control is arguably the most important factor: Even though a custom data parser requires time and money, at the end of the day, it’s yours. Many businesses try to limit their dependence on third-party services, wary of sudden changes in pricing or functionality – these risks are much lower with a custom data parsing tool that offers complete control.

Flexibility is another advantage: You’re free to choose your parser’s technology stack, which would include components like programming language and database management system. Oftentimes, the right technology stack can make data parsing much easier – and a custom data parser can provide this level of flexibility.

In some cases, cost can be lower if you have an in-house programming team – and the time and resources to spend. This way, a custom data parser may be cheaper than its SaaS counterparts in the long run.

Buying a Data Parser

On the other hand, time can be an issue if you can’t afford to dedicate resources to building your own parser. Firstly, the time commitment has to do with web pages’ ever-changing HTML structure. In part, platforms like Amazon and Instagram update the HTML structure to prevent unauthorized third parties from accessing this data. Having your own parser entails the responsibility to monitor these changes: Even a slight code difference can put your entire data extraction setup on pause.

Secondly, with a ready-made tool you won’t have to invest time into managing your data parsing backend infrastructure. Servers are an important component: They’ll process and store data, so server performance can be a bottleneck if set up incorrectly. With the rise of cybercrime incidents like DDoS attacks and identity theft, server security is another priority – and setting up adequate protection against cyberattacks takes time and expertise.

Cost of development can be another problem for smaller companies and individuals: Developing a full-blown data parsing solution can keep the whole programming team busy for a few months. In some cases, a monthly SaaS subscription is the more economical choice.

Existing Data Parsing Tools

Data parsers are typically bundled with web scraping suites and libraries. Full-blown web scrapers may be overkill for a beginner project, so you can try using HTML parsing libraries, which are often open-source and free. Many data parsing components are premade, so you won’t have to program a custom data parsing solution.

Scrapy

Scrapy is an open-source Python framework for web crawling and data extraction. In addition to crawling websites and extracting their data, it can also perform data parsing tasks. Scrapy is a good fit when you need a more custom solution – and it’s even more powerful when you have some Python skills under your belt.

Further reading: An Extensive Overview of Python Web Crawlers

Cheerio

Cheerio is a great alternative to parsing tools like Scrapy and BeautifulSoup if you prefer JavaScript: It parses HTML and XML markup and exposes a jQuery-like API for traversing and manipulating the resulting document. Note that Cheerio does not render pages or execute JavaScript, so tasks like PDF or screenshot generation require pairing it with a headless browser tool such as Puppeteer.

Conclusion

Data parsing is an essential component of any web scraping pipeline and has found use in fields like marketing, finance, e-commerce, and more. Thanks to data parsers, data extraction can actually fulfill its goal and help companies build products like price aggregation platforms, marketing services, search engine optimization software, and more.